Skill 2.3: Manage access and monitor storage

We have already learned how Azure Storage allows access through access keys, but what happens if we want to gain access to specific resources without giving keys to the entire storage account? In this topic, we’ll introduce security issues that may arise and how to solve them.

Azure Storage has a built-in analytics feature called Azure Storage Analytics used for collecting metrics and logging storage request activity. You enable Storage Analytics Metrics to collect aggregate transaction and capacity data, and you enable Storage Analytics Logging to capture successful and failed request attempts to your storage account. This section covers how to enable monitoring and logging, control logging levels, set retention policies, and analyze the logs.

This skill covers how to:

Generate shared access signatures, including client renewal and data validation

Create stored access policies

Regenerate storage account keys

Configure and use Cross-Origin Resource Sharing (CORS)

Set retention policies and logging levels

Analyze logs

Generate shared access signatures

By default, storage resources are protected at the service level. Only authenticated callers can access tables and queues. Blob containers and blobs can optionally be exposed for anonymous access, but you would typically allow anonymous access only to individual blobs. To authenticate to any storage service, a primary or secondary key is used, but this grants the caller access to all actions on the storage account.

An SAS is used to delegate access to specific storage account resources without enabling access to the entire account. An SAS token lets you control the lifetime by setting the start and expiration time of the signature, the resources you are granting access to, and the permissions being granted.

The following is a list of operations supported by SAS:

Reading or writing blobs, blob properties, and blob metadata

Leasing or creating a snapshot of a blob

Listing blobs in a container

Deleting a blob

Adding, updating, or deleting table entities

Querying tables

Processing queue messages (read and delete)

Adding and updating queue messages

Retrieving queue metadata

This section covers creating an SAS token to access storage services using the Storage Client Library.

More Info: Controlling Anonymous Access

To control anonymous access to containers and blobs, follow the instructions provided at http://msdn.microsoft.com/en-us/library/azure/dd179354.aspx.

More Info: Constructing an SAS URI

SAS tokens are typically passed to the Storage Client Library to authorize its requests against storage resources, but you can also append a token directly to the storage resource URI and issue HTTP requests yourself. For details regarding the format of an SAS URI, see http://msdn.microsoft.com/en-us/library/azure/dn140255.aspx.

Creating an SAS token (Blobs)

The following code shows how to create an SAS token for a blob container. Note that it is created with a start time and an expiration time. It is then applied to a blob container:

// Define the validity window and permissions for the signature.
SharedAccessBlobPolicy sasPolicy = new SharedAccessBlobPolicy();

sasPolicy.SharedAccessExpiryTime = DateTime.UtcNow.AddHours(1);

// Start five minutes in the past to allow for clock skew.
sasPolicy.SharedAccessStartTime = DateTime.UtcNow.Subtract(new TimeSpan(0, 5, 0));

sasPolicy.Permissions = SharedAccessBlobPermissions.Read | SharedAccessBlobPermissions.Write | SharedAccessBlobPermissions.Delete | SharedAccessBlobPermissions.List;

CloudBlobContainer files = blobClient.GetContainerReference("files");

string sasContainerToken = files.GetSharedAccessSignature(sasPolicy);

The SAS token grants read, write, delete, and list permissions to the container (rwdl). It looks like this:

?sv=2014-02-14&sr=c&sig=B6bi4xKkdgOXhWg3RWIDO5peekq%2FRjvnuo5o41hj1pA%3D&st=2014-12-24T14%3A16%3A07Z&se=2014-12-24T15%3A21%3A07Z&sp=rwdl

You can use this token as follows to gain access to the blob container without a storage account key:

StorageCredentials creds = new StorageCredentials(sasContainerToken);

CloudStorageAccount accountWithSAS = new CloudStorageAccount(creds, "account-name", endpointSuffix: null, useHttps: true);

CloudBlobClient sasClient = accountWithSAS.CreateCloudBlobClient();

CloudBlobContainer sasFiles = sasClient.GetContainerReference("files");

With this container reference, you can interact with the container as you normally would, within the limits of the permissions that the SAS grants.
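To see what the container SAS token shown above encodes, the following Python sketch (standard library only) splits it into its query parameters. The helper name `parse_sas_token` is made up for this illustration and is not part of any Azure library. In the token, `sv` is the storage service version, `sr` the resource type (`c` for a container), `st` and `se` the start and expiry times, `sp` the permissions, and `sig` the signature.

```python
from urllib.parse import parse_qs


def parse_sas_token(token: str) -> dict:
    """Split an SAS token into a dict of URL-decoded query parameters."""
    # parse_qs returns a list per key; an SAS token carries one value per key.
    parsed = parse_qs(token.lstrip("?"))
    return {key: values[0] for key, values in parsed.items()}


# The container token from the text, joined onto one logical string.
token = (
    "?sv=2014-02-14&sr=c&sig=B6bi4xKkdgOXhWg3RWIDO5peekq%2FRjvnuo5o41hj1pA%3D"
    "&st=2014-12-24T14%3A16%3A07Z&se=2014-12-24T15%3A21%3A07Z&sp=rwdl"
)

fields = parse_sas_token(token)
print(fields["sr"], fields["sp"])  # resource type and permissions
print(fields["st"], fields["se"])  # start and expiry times (UTC)
```

Reading a token this way is only useful for inspection. The signature cannot be recomputed without the account key, so changing any field invalidates the token.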

Creating an SAS token (Queues)

Assuming the same account reference as created in the previous section, the following code shows how to create an SAS token for a queue:

CloudQueueClient queueClient = account.CreateCloudQueueClient();

CloudQueue queue = queueClient.GetQueueReference("queue");

SharedAccessQueuePolicy sasPolicy = new SharedAccessQueuePolicy();

sasPolicy.SharedAccessExpiryTime = DateTime.UtcNow.AddHours(1);

sasPolicy.Permissions = SharedAccessQueuePermissions.Read | SharedAccessQueuePermissions.Add | SharedAccessQueuePermissions.Update | SharedAccessQueuePermissions.ProcessMessages;

sasPolicy.SharedAccessStartTime = DateTime.UtcNow.Subtract(new TimeSpan(0, 5, 0));

string sasToken = queue.GetSharedAccessSignature(sasPolicy);

The SAS token grants read, add, update, and process messages permissions to the queue (raup). It looks like this:

?sv=2014-02-14&sig=wE5oAUYHcGJ8chwyZZd3Byp5jK1Po8uKu2t%2FYzQsIhY%3D&st=2014-12-24T14%3A23%3A22Z&se=2014-12-24T15%3A28%3A22Z&sp=raup

You can use this token as follows to gain access to the queue and add messages:

StorageCredentials creds = new StorageCredentials(sasToken);

CloudQueueClient sasClient = new CloudQueueClient(new Uri("https://dataike1.queue.core.windows.net/"), creds);

CloudQueue sasQueue = sasClient.GetQueueReference("queue");

sasQueue.AddMessage(new CloudQueueMessage("new message"));

Console.ReadKey();

Important: Secure Use of SAS

Always use a secure HTTPS connection when you generate or distribute an SAS token. The URI grants access to protected storage resources, so it must not be exposed in transit.

Creating an SAS token (Tables)

The following code shows how to create an SAS token for a table:

SharedAccessTablePolicy sasPolicy = new SharedAccessTablePolicy();

sasPolicy.SharedAccessExpiryTime = DateTime.UtcNow.AddHours(1);

sasPolicy.Permissions = SharedAccessTablePermissions.Query | SharedAccessTablePermissions.Add | SharedAccessTablePermissions.Update | SharedAccessTablePermissions.Delete;

sasPolicy.SharedAccessStartTime = DateTime.UtcNow.Subtract(new TimeSpan(0, 5, 0));

string sasToken = table.GetSharedAccessSignature(sasPolicy);

The SAS token grants query, add, update, and delete permissions to the table (raud). It looks like this:

?sv=2014-02-14&tn=%24logs&sig=dsnI7RBA1xYQVr%2FTlpDEZMO2H8YtSGwtyUUntVmxstA%3D&st=2014-12-24T14%3A48%3A09Z&se=2014-12-24T15%3A53%3A09Z&sp=raud

Renewing an SAS token

SAS tokens have a limited period of validity based on the start and expiration times requested. You should limit the duration of an SAS token to limit access to controlled periods of time. You can extend access to the same application or user by issuing new SAS tokens on request. This should be done with appropriate authentication and authorization in place.
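One way a client might decide when to ask for a fresh token is to read the expiry (`se`) field and compare it with the current time. The Python sketch below assumes the token format shown earlier in this section. The function names and the five-minute safety margin are illustrative choices, not part of any Azure API, and the `sig` value is a placeholder.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import parse_qs


def sas_expiry(token: str) -> datetime:
    """Return the expiry time (the `se` field) as a timezone-aware UTC datetime."""
    se = parse_qs(token.lstrip("?"))["se"][0]
    return datetime.strptime(se, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)


def needs_renewal(token: str, now: datetime,
                  margin: timedelta = timedelta(minutes=5)) -> bool:
    """True when the token expires within `margin` of `now`, or has already expired."""
    return now >= sas_expiry(token) - margin


# Placeholder signature; only the `se` field matters for this check.
token = ("?sv=2014-02-14&sr=c&sig=placeholder"
         "&st=2014-12-24T14%3A16%3A07Z&se=2014-12-24T15%3A21%3A07Z&sp=rwdl")

early = datetime(2014, 12, 24, 14, 30, tzinfo=timezone.utc)
late = datetime(2014, 12, 24, 15, 18, tzinfo=timezone.utc)
print(needs_renewal(token, early))  # False: well before expiry
print(needs_renewal(token, late))   # True: inside the five-minute margin
```

When the check returns true, the client would call back to whatever authenticated service issued the token and request a new one.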

Validating data

When you extend write access to storage resources with SAS, the contents of those resources can potentially be corrupted or tampered with by a malicious party, particularly if the SAS was leaked. Be sure to validate any data written through an SAS before your system uses it.

Create stored access policies

Stored access policies provide greater control over how you grant access to storage resources using SAS tokens. With a stored access policy, you can do the following after releasing an SAS token for resource access:

Change the start and end time for a signature’s validity

Control permissions for the signature

Revoke access

The stored access policy can be used to control all issued SAS tokens that are based on the policy. For a step-by-step tutorial for creating and testing stored access policies for blobs, queues, and tables, see http://azure.microsoft.com/en-us/documentation/articles/storage-dotnet-shared-access-signature-part-2.
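The reason a stored access policy can control tokens that have already been issued is that such a token refers to the policy by its identifier (the `si` field) instead of carrying its own times and permissions. The following Python sketch is a toy in-memory model of that lookup, not the service's implementation. The class names and the policy name `readonly` are invented for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class StoredAccessPolicy:
    start: datetime
    expiry: datetime
    permissions: str  # for example "rl" for read and list


class ToyContainer:
    """In-memory stand-in for a container that holds named policies."""

    def __init__(self):
        self.policies = {}

    def is_allowed(self, policy_id: str, permission: str, now: datetime) -> bool:
        policy = self.policies.get(policy_id)
        if policy is None:
            # Policy deleted: every token that references it stops working.
            return False
        in_window = policy.start <= now <= policy.expiry
        return in_window and permission in policy.permissions


utc = timezone.utc
container = ToyContainer()
container.policies["readonly"] = StoredAccessPolicy(
    start=datetime(2014, 12, 24, 14, 0, tzinfo=utc),
    expiry=datetime(2014, 12, 24, 15, 0, tzinfo=utc),
    permissions="rl",
)

now = datetime(2014, 12, 24, 14, 30, tzinfo=utc)
before = container.is_allowed("readonly", "r", now)         # read while the policy exists
write_attempt = container.is_allowed("readonly", "w", now)  # write was never granted
del container.policies["readonly"]                          # revoke access
after = container.is_allowed("readonly", "r", now)          # same token, now rejected
print(before, write_attempt, after)
```

Editing the policy's expiry or permissions in place would change the outcome for existing tokens in the same way, which is the control that an ad hoc SAS token does not offer.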

Important: Recommendation for SAS Tokens

Use stored access policies wherever possible, or limit the lifetime of SAS tokens to avoid malicious use.

More Info: Stored Access Policy Format

For more information on the HTTP request format for creating stored access policies, see: https://docs.microsoft.com/en-us/rest/api/storageservices/establishing-a-stored-access-policy.

Regenerate storage account keys

When you create a storage account, two 512-bit storage access keys are generated for authentication to the storage account. Having two keys makes it possible to regenerate one while applications continue to authenticate with the other, so key regeneration does not interrupt application access to storage.

The process for managing keys typically follows this pattern:

1. When you create your storage account, the primary and secondary keys are generated for you. You typically use the primary key when you first deploy applications that access the storage account.
2. When it is time to regenerate keys, you first switch all application configurations to use the secondary key.
3. Next, you regenerate the primary key, and switch all application configurations to use this primary key.
4. Next, you regenerate the secondary key.
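The ordering of these steps matters because the key an application holds must remain valid at every point. The Python sketch below simulates the pattern with an in-memory dictionary standing in for the storage account. Nothing here calls Azure, and `generate_key` is a hypothetical stand-in for the portal's regenerate action.

```python
import base64
import secrets


def generate_key() -> str:
    """Make a random 512-bit key, base64-encoded (a stand-in for a real account key)."""
    return base64.b64encode(secrets.token_bytes(64)).decode("ascii")


def is_valid(key: str, account: dict) -> bool:
    """A key authenticates if it matches either current account key."""
    return key in account.values()


account = {"primary": generate_key(), "secondary": generate_key()}
original = dict(account)

app_key = account["primary"]                 # step 1: applications deployed with the primary key
history = [is_valid(app_key, account)]

app_key = account["secondary"]               # step 2: switch applications to the secondary key
history.append(is_valid(app_key, account))

account["primary"] = generate_key()          # step 3: regenerate the primary key...
history.append(is_valid(app_key, account))
app_key = account["primary"]                 # ...and switch applications to the new primary key
history.append(is_valid(app_key, account))

account["secondary"] = generate_key()        # step 4: regenerate the secondary key
history.append(is_valid(app_key, account))

print(history)  # the application's key was valid after every step
```

Regenerating the primary key before switching applications to the secondary key would make the first check after regeneration fail, which is the outage the pattern is designed to avoid.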

Important: Managing Key Regeneration

It is imperative that you have a sound key management strategy. In particular, you must be certain that all applications are using the primary key at a given point in time to facilitate the regeneration process.

Regenerating storage account keys

To regenerate storage account keys using the portal, complete the following steps:

1. Navigate to the management portal accessed via https://portal.azure.com.
2. Select your storage account from your dashboard or your All Resources list.
3. Click the Keys box.
4. On the Manage Keys blade, click Regenerate Primary or Regenerate Secondary on the command bar, depending on which key you want to regenerate.
5. In the confirmation dialog box, click Yes to confirm the key regeneration.

Configure and use Cross-Origin Resource Sharing

Cross-Origin Resource Sharing (CORS) enables web applications running in the browser to call web APIs that are hosted by a different domain. Azure Storage blobs, tables, and queues all support CORS to allow for access to the Storage API from the browser. By default, CORS is disabled, but you can explicitly enable it for a specific storage service within your storage account.

More Info: Enabling CORS

For additional information about enabling CORS for your storage accounts, see: http://msdn.microsoft.com/en-us/library/azure/dn535601.aspx.

Configure storage metrics

Storage Analytics metrics provide insight into transactions and capacity for your storage accounts. You can think of them as the equivalent of Windows Performance Monitor counters. By default, storage metrics are not enabled, but you can enable them through the management portal, using Windows PowerShell, or by calling the management API directly.

When you configure storage metrics for a storage account, tables are generated to store the output of metrics collection. You determine the level of metrics collection for transactions and the retention level for each service: Blob, Table, and Queue.

Transaction metrics record request access to each service for the storage account. You specify the interval for metric collection (hourly or by minute). In addition, there are two levels of metrics collection:

Service level: These metrics include aggregate statistics for all requests, aggregated at the specified interval. Even if no requests are made to the service, an aggregate entry is created for the interval, indicating no requests for that period.

API level: These metrics record every request to each service only if a request is made within the hour interval.

Note: Metrics Collected

All requests are included in the metrics collected, including any requests made by Storage Analytics.

Capacity metrics are only recorded for the Blob service for the account. Metrics include total storage in bytes, the container count, and the object count (committed and uncommitted).

Table 2-1 summarizes the tables automatically created for the storage account when Storage Analytics metrics are enabled.

TABLE 2-1 Storage metrics tables

| METRICS | TABLE NAMES |
|---|---|
| Hourly metrics | $MetricsHourPrimaryTransactionsBlob, $MetricsHourPrimaryTransactionsTable, $MetricsHourPrimaryTransactionsQueue, $MetricsHourPrimaryTransactionsFile |
| Minute metrics (cannot set through the management portal) | $MetricsMinutePrimaryTransactionsBlob, $MetricsMinutePrimaryTransactionsTable, $MetricsMinutePrimaryTransactionsQueue, $MetricsMinutePrimaryTransactionsFile |
| Capacity (only for the Blob service) | $MetricsCapacityBlob |
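The transaction table names in Table 2-1 follow a regular pattern of interval plus service. As a small illustration, this Python sketch rebuilds the names from those two parts. The function name is made up for this example, and the sketch covers only the names listed in the table.

```python
INTERVALS = ("Hour", "Minute")
SERVICES = ("Blob", "Table", "Queue", "File")


def transaction_table(interval: str, service: str) -> str:
    """Build the metrics table name for a collection interval and a service."""
    if interval not in INTERVALS:
        raise ValueError(f"unknown interval: {interval}")
    if service not in SERVICES:
        raise ValueError(f"unknown service: {service}")
    return f"$Metrics{interval}PrimaryTransactions{service}"


print(transaction_table("Hour", "Blob"))
print(transaction_table("Minute", "Queue"))
```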

More Info: Storage Analytics Metrics Table Schema

For additional details on the transaction and capacity metrics collected, see: https://docs.microsoft.com/en-us/rest/api/storageservices/storage-analytics-metrics-table-schema.

Retention can be configured for each service in the storage account. By default, Storage Analytics will not delete any metrics data. When the shared 20-terabyte limit is reached, new data cannot be written until space is freed. This limit is independent of the storage limit of the account. You can specify a retention period from 0 to 365 days. Metrics data is automatically deleted when the retention period is reached for the entry.

When metrics are disabled, existing metrics that have been collected are persisted up to their retention policy.

More Info: Storage Metrics

For more information about enabling and working with storage metrics, see: http://msdn.microsoft.com/en-us/library/azure/dn782843.aspx.

Configuring storage metrics and retention

To enable storage metrics and associated retention levels for Blob, Table, and Queue services in the portal, follow these steps:

1. Navigate to the management portal accessed via https://portal.azure.com.
2. Select your storage account from your dashboard or your All resources list.
3. Scroll down to the Usage section, and click the Capacity graph check box.
4. On the Metric blade, click Diagnostics Settings on the command bar.
5. Click the On button under Status. This shows the options for metrics and logging.
6. Select the metrics to collect for each service in use:
   - If this storage account uses blobs, select Blob Aggregate Metrics to enable service level metrics. Select Blob Per API Metrics for API level metrics.
   - If this storage account uses tables, select Table Aggregate Metrics to enable service level metrics. Select Table Per API Metrics for API level metrics.
   - If this storage account uses queues, select Queue Aggregate Metrics to enable service level metrics. Select Queue Per API Metrics for API level metrics.
7. Provide a value for retention according to your retention policy. Through the portal, this will apply to all services. It will also apply to Storage Analytics Logging if that is enabled. Select one of the available retention settings from the slider-bar, or enter a number from 0 to 365.

Note: Choosing a Metrics Level

Minimal (service level) metrics yield enough information to provide a picture of the overall usage and health of the storage account services. Verbose (API level) metrics provide more insight at the level of individual API operations, allowing for deeper analysis of activities and issues, which is helpful for troubleshooting.

Analyze storage metrics

Storage Analytics metrics are collected in tables as discussed in the previous section. You can access the tables directly to analyze metrics, but you can also review metrics in both Azure management portals. This section discusses various ways to access metrics and review or analyze them.

More Info: Storage Monitoring, Diagnosing, and Troubleshooting

For more details on how to work with storage metrics and logs, see: http://azure.microsoft.com/en-us/documentation/articles/storage-monitoring-diagnosing-troubleshooting.

Monitor metrics

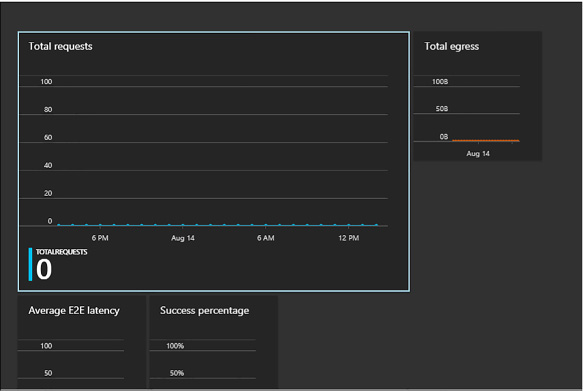

At the time of this writing, the portal features for monitoring metrics are limited to some predefined metrics, including total requests, total egress, average latency, and availability (see Figure 2-4). Click each box to see a Metric blade that provides additional detail.

FIGURE 2-4 Monitoring overview from the portal

To monitor the metrics available in the portal, complete the following steps:

1. Navigate to the management portal accessed via https://portal.azure.com.
2. Select your storage account from your dashboard or your All Resources list.
3. Scroll down to the Monitor section, and view the monitoring boxes summarizing statistics. You’ll see TotalRequests, TotalEgress, AverageE2ELatency, and AvailabilityToday by default.
4. Click each metric box to view additional details for each metric. You’ll see metrics for blobs, tables, and queues if all three metrics are being collected.

Note: Customizing the Monitoring Blade

You can customize which boxes appear in the Monitoring area of the portal, and you can adjust the size of each box to control how much detail is shown at a glance without drilling into the metrics blade.

Configure Storage Analytics Logging

Storage Analytics Logging provides details about successful and failed requests to each storage service that has activity across the account’s blobs, tables, and queues. By default, storage logging is not enabled, but you can enable it through the management portal, by using Windows PowerShell, or by calling the management API directly.

When you configure Storage Analytics Logging for a storage account, a blob container named $logs is automatically created to store the output of the logs. You choose which services you want to log for the storage account. You can log any or all of the Blob, Table, or Queue services. Logs are created only for those services that have activity, so you will not be charged if you enable logging for a service that has no requests. The logs are stored as block blobs as requests are logged and are periodically committed so that they are available as blobs.

Note: Deleting the Log Container

After Storage Analytics has been enabled, the log container cannot be deleted; however, the contents of the log container can be deleted.

Retention can be configured for each service in the storage account. By default, Storage Analytics will not delete any logging data. When the shared 20-terabyte limit is reached, new data cannot be written until space is freed. This limit is independent of the storage limit of the account. You can specify a retention period from 0 to 365 days. Logging data is automatically deleted when the retention period is reached for the entry.

Note: Duplicate Logs

Duplicate log entries may be present within the same hour. You can use the RequestId and operation number to uniquely identify an entry to filter duplicates.
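A minimal Python sketch of that filtering, assuming the log entries have already been parsed into dictionaries. The key names `request_id` and `operation_number` and the sample values are illustrative, not taken from a real log.

```python
def drop_duplicates(entries):
    """Keep the first entry for each (request ID, operation number) pair."""
    seen = set()
    unique = []
    for entry in entries:
        key = (entry["request_id"], entry["operation_number"])
        if key not in seen:
            seen.add(key)
            unique.append(entry)
    return unique


# Made-up entries: the second one repeats the first.
entries = [
    {"request_id": "req-0001", "operation_number": 0, "operation": "GetBlob"},
    {"request_id": "req-0001", "operation_number": 0, "operation": "GetBlob"},
    {"request_id": "req-0001", "operation_number": 1, "operation": "GetBlob"},
    {"request_id": "req-0002", "operation_number": 0, "operation": "PutBlob"},
]

unique = drop_duplicates(entries)
print(len(entries), len(unique))
```

Both fields are needed in the key because a single request ID can cover more than one logged operation.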

More Info: Storage Logging

For more information about enabling and working with Azure storage logging, see: http://msdn.microsoft.com/en-us/library/azure/dn782843.aspx and http://msdn.microsoft.com/en-us/library/azure/hh343262.aspx.

Set retention policies and logging levels

To enable storage logging and associated retention levels for Blob, Table, and Queue services in the portal, follow these steps:

1. Navigate to the management portal accessed via https://portal.azure.com.
2. Select your storage account from your dashboard or your All resources list.
3. Under the Metrics section, click Diagnostics.
4. Click the On button under Status. This shows the options for enabling monitoring features.
5. If this storage account uses blobs, select Blob Logs to log all activity.
6. If this storage account uses tables, select Table Logs to log all activity.
7. If this storage account uses queues, select Queue Logs to log all activity.
8. Provide a value for retention according to your retention policy. Through the portal, this will apply to all services. It will also apply to Storage Analytics Metrics if that is enabled. Select one of the available retention settings from the drop-down list, or enter a number from 0 to 365.

Note: Controlling Logged Activities

From the portal, when you enable or disable logging for each service, you enable read, write, and delete logging. To log only specific activities, use Windows PowerShell cmdlets.

Enable client-side logging

You can enable client-side logging using Microsoft Azure storage libraries to log activity from client applications to your storage accounts. For information on the .NET Storage Client Library, see: http://msdn.microsoft.com/en-us/library/azure/dn782839.aspx. For information on the Storage SDK for Java, see: http://msdn.microsoft.com/en-us/library/azure/dn782844.aspx.

Analyze logs

Logs are stored as block blobs in delimited text format. When you access the container, you can download logs for review and analysis using any tool compatible with that format. Within the logs, you’ll find entries for authenticated and anonymous requests, as listed in Table 2-2.

TABLE 2-2 Authenticated and anonymous logs

| Request type | Logged requests |
|---|---|
| Authenticated requests | |
| Anonymous requests | |

Logs include status messages and operation logs. Status message columns include those shown in Table 2-3. Some status messages are also reported with storage metrics data. There are many operation logs for the Blob, Table, and Queue services.

More Info: Status Messages and Operation Logs

For a detailed list of specific logs and log format specifics, see: http://msdn.microsoft.com/en-us/library/azure/hh343260.aspx and http://msdn.microsoft.com/en-us/library/hh343259.aspx.

TABLE 2-3 Information included in logged status messages

| Column | Description |
|---|---|
| Status Message | Indicates a value for the type of status message, indicating type of success or failure |
| Description | Describes the status, including any HTTP verbs or status codes |
| Billable | Indicates whether the request was billable |
| Availability | Indicates whether the request is included in the availability calculation for storage metrics |

Finding your logs

When storage logging is configured, log data is saved to blobs in the $logs container created for your storage account. You can’t see this container by listing containers, but you can navigate directly to the container to access, view, or download the logs.

To view analytics logs produced for a storage account, do the following:

1. Using a storage browsing tool, navigate to the $logs container of the storage account for which you enabled Storage Analytics Logging. The container address follows this convention: https://<accountname>.blob.core.windows.net/$logs.
2. View the list of log files, which are named with the convention <servicetype>/YYYY/MM/DD/HHMM/<counter>.log.
3. Select the log file you want to review, and download it using the storage browsing tool.
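When looking for the logs of a particular hour, it can help to build the blob name prefix from the naming convention above. This Python sketch does that with the standard library only. The function names are invented for this example, the account name `dataike1` is borrowed from the earlier queue listing, and writing the minutes as `00` is an assumption that logs are grouped by hour.

```python
from datetime import datetime


def logs_container_url(account: str) -> str:
    """Address of the $logs container for a storage account."""
    return f"https://{account}.blob.core.windows.net/$logs"


def log_blob_prefix(service: str, hour: datetime) -> str:
    """Prefix shared by the log blobs of one service for one hour.

    Follows <servicetype>/YYYY/MM/DD/HHMM/ with the minutes fixed at 00
    (assumption: logs are grouped by hour).
    """
    return f"{service}/{hour:%Y/%m/%d/%H}00/"


when = datetime(2014, 12, 24, 14, 23)
print(logs_container_url("dataike1"))
print(log_blob_prefix("blob", when))
```

A storage browsing tool or a blob listing call filtered by this prefix would then return only the log files for that service and hour.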

More Info: Log Metadata

The blob name for each log file does not provide an indication of the time range for the logs. You can find this information in the blob metadata using storage browsing tools or Windows PowerShell.

View logs with Microsoft Excel

Storage logs are recorded in a delimited format so that you can use any compatible tool to view logs. To view logs data in Excel, follow these steps:

1. Open Excel, and on the Data menu, click From Text.
2. Find the log file and click Import.
3. During import, select Delimited format. Select Semicolon as the only delimiter, and Double-Quote (") as the text qualifier.

Analyze logs

After you load your logs into a viewer like Excel, you can analyze and gather information such as the following:

Number of requests from a specific IP range

Which tables or containers are being accessed and the frequency of those requests

Which user issued a request, in particular, any requests of concern

Slow requests

How many times a particular blob is being accessed with an SAS URL

Details to assist in investigating network errors
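The same kind of analysis can be scripted instead of done in a spreadsheet. The Python sketch below reads semicolon-delimited entries with a double-quote text qualifier, then counts requests per operation and picks out slow requests. The two sample entries are made up and truncated after the request URL. The field positions are an assumption based on version 1.0 of the log format, so verify them against the log format reference before relying on them.

```python
import csv
import io
from collections import Counter

# Two made-up entries, truncated after the request URL for brevity.
SAMPLE = (
    '1.0;2014-12-24T14:16:07.0000000Z;GetBlob;SASSuccess;200;120;15;SAS;;dataike1;blob;'
    '"https://dataike1.blob.core.windows.net/files/a.txt?sv=2014-02-14&amp;sp=r"\n'
    '1.0;2014-12-24T14:17:30.0000000Z;PutBlob;Success;201;2300;40;authenticated;dataike1;dataike1;blob;'
    '"https://dataike1.blob.core.windows.net/files/b.txt"\n'
)

# Assumed zero-based field positions in a version 1.0 log entry.
OPERATION, E2E_LATENCY_MS, URL = 2, 5, 11

# The quoted URL contains "&amp;", so the text qualifier is what keeps it in one field.
rows = list(csv.reader(io.StringIO(SAMPLE), delimiter=";", quotechar='"'))

by_operation = Counter(row[OPERATION] for row in rows)
slow = [row[URL] for row in rows if int(row[E2E_LATENCY_MS]) > 1000]

print(by_operation)
print(slow)
```

The one-second threshold for a slow request is arbitrary here. For real logs you would replace `io.StringIO(SAMPLE)` with an open file handle for a downloaded log blob.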

More Info: Log Analysis

You can run the Azure HDInsight Log Analysis Toolkit (LAT) for a deeper analysis of your storage logs. For more information, see: https://hadoopsdk.codeplex.com/releases/view/117906.