Quick access to online references 28

Errata, updates, & book support 28

Chapter 1. Create and manage virtual machines 31

Skill 1.1: Deploy workloads on Azure ARM virtual machines 31

Identify supported workloads 32

Skill 1.2: Perform configuration management 37

Configure VMs with Custom Script Extension 39

Configuring a new VM with Custom Script Extension 39

Local Configuration Manager 46

Creating a configuration script 46

Deploying a DSC configuration package 47

Configuring an existing VM using the Azure Portal 48

Scale up and scale down VM sizes 53

Scaling up and scaling down VM size using the Portal 53

Scaling up and scaling down VM size using Windows PowerShell 53

Deploy ARM VM Scale Sets (VMSS) 54

Deploy ARM VM Scale Sets using the Portal 55

Deploying a Scale Set using a Custom Image 60

Configuring Autoscale when provisioning VM Scale Set using the Portal 64

Configuring Autoscale on an existing VM Scale Set using the Portal 67

Skill 1.4: Design and implement ARM VM storage 69

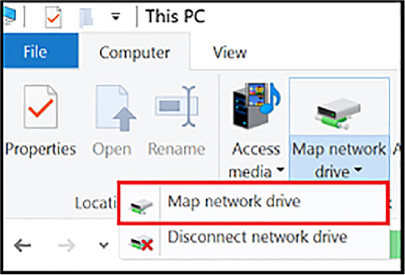

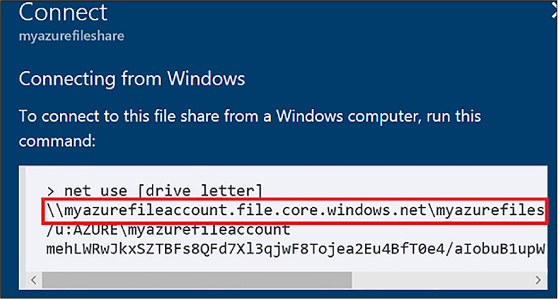

Configure shared storage using Azure File storage 87

Accessing files within the share 90

Implement ARM VMs with Standard and Premium Storage 91

Implement ARM VMs with Standard and Premium Storage using the Portal 91

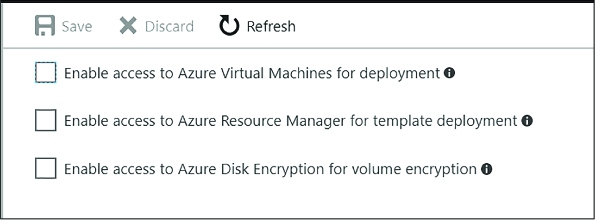

Implement Azure Disk Encryption for Windows and Linux ARM VMs 92

Implement Azure Disk Encryption for Windows and Linux ARM VMs using PowerShell 92

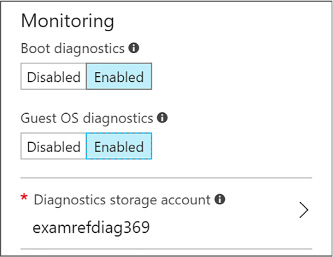

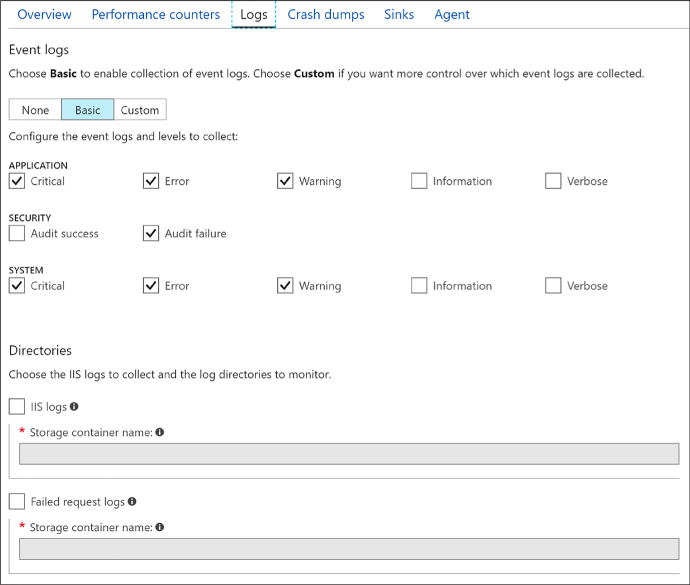

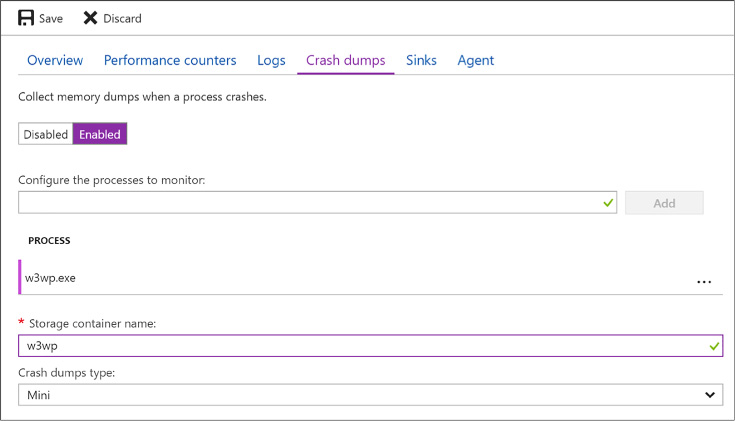

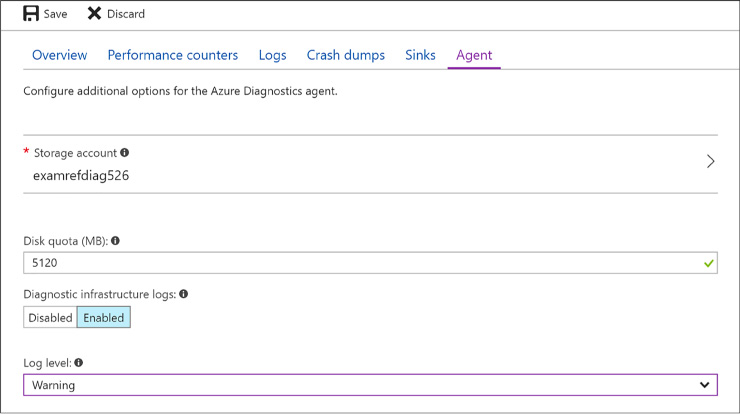

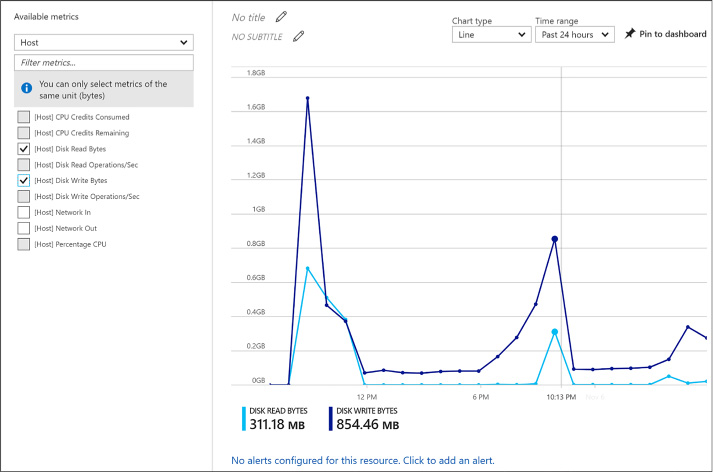

Configure monitoring and diagnostics for a new VM 96

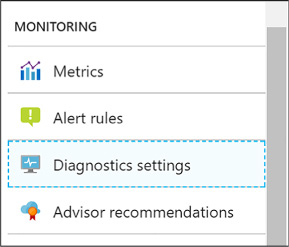

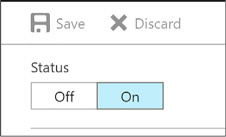

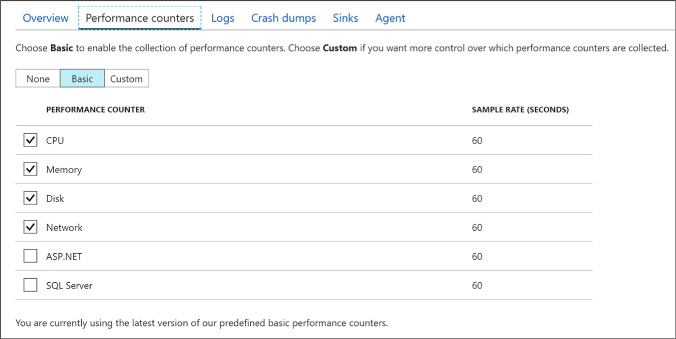

Configure monitoring and diagnostics for an existing VM 98

Viewing event logs, diagnostic infrastructure logs, and application logs 104

Skill 1.6: Manage ARM VM Availability 106

Configure availability sets 106

Availability sets and application tiers 107

Configuring availability sets 107

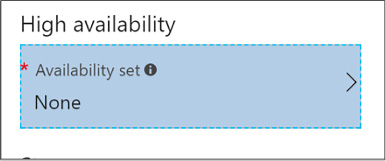

Configuring an availability set for a new ARM VM 107

Configuring an availability set for an existing ARM VM 109

Configuring an availability set using Windows PowerShell 110

Combine the Load Balancer with availability sets 110

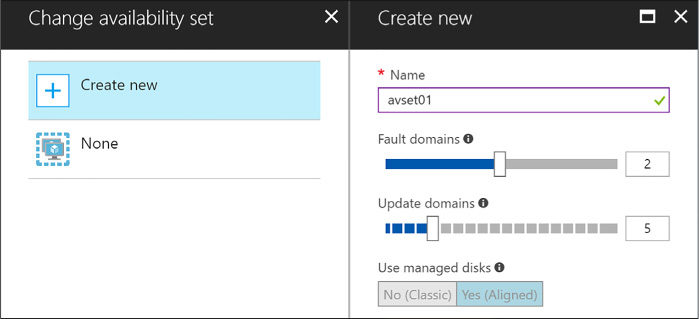

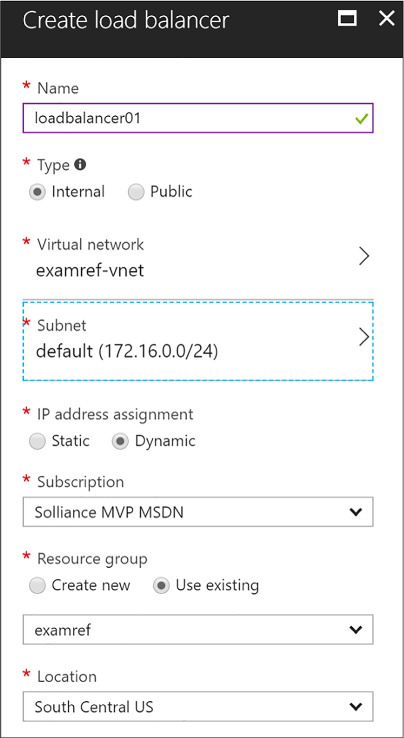

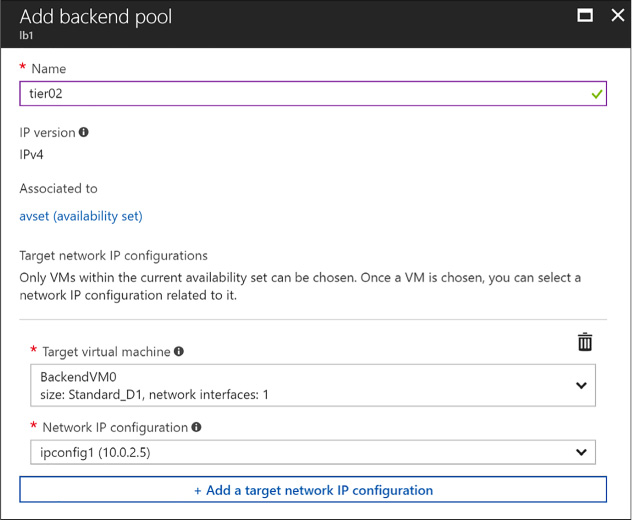

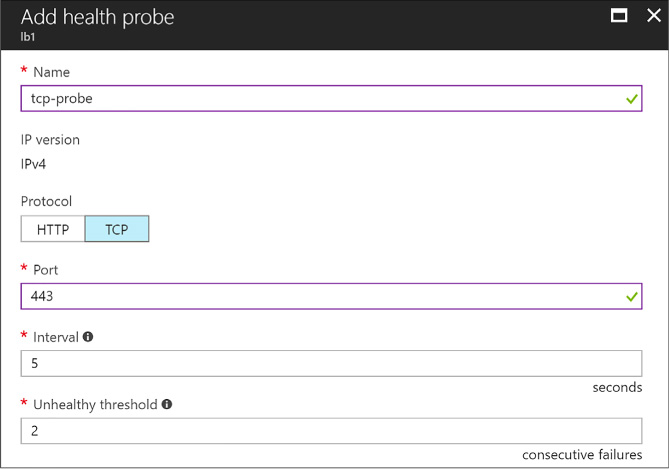

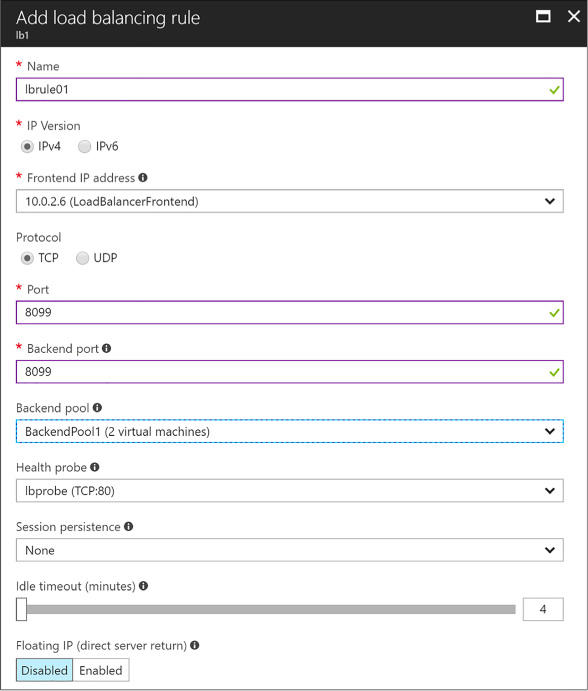

Configuring a Load Balancer for VMs in an Availability Set 110

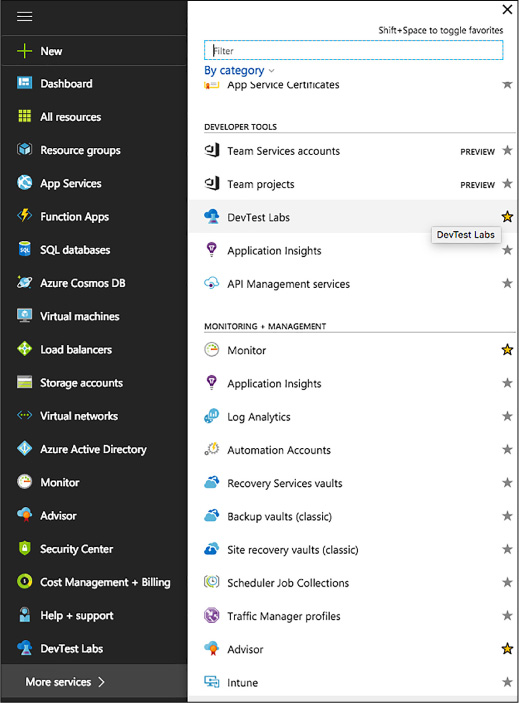

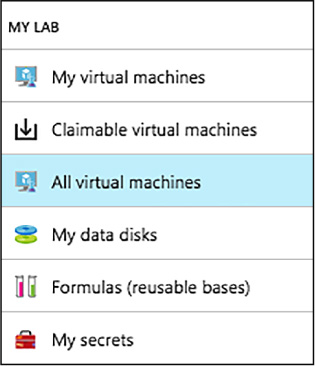

Skill 1.7: Design and implement DevTest Labs 117

Create and manage custom images and formulas 121

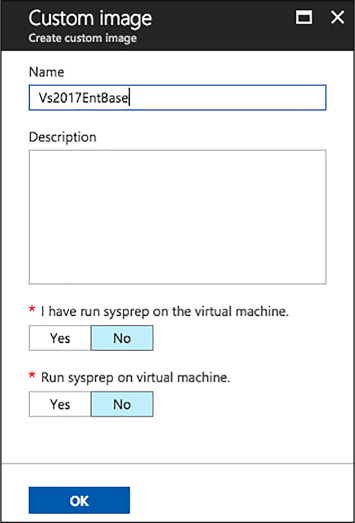

Create a custom image from a provisioned virtual machine 122

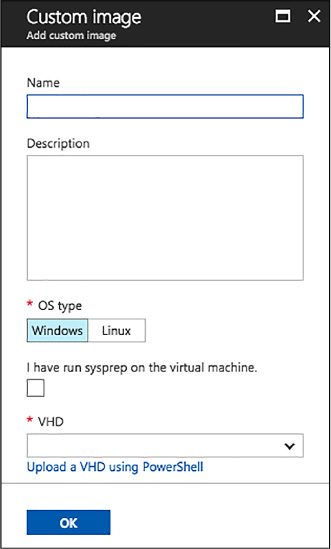

Create a custom image from a VHD using the Azure Portal 124

Create a custom image from a VHD using PowerShell 126

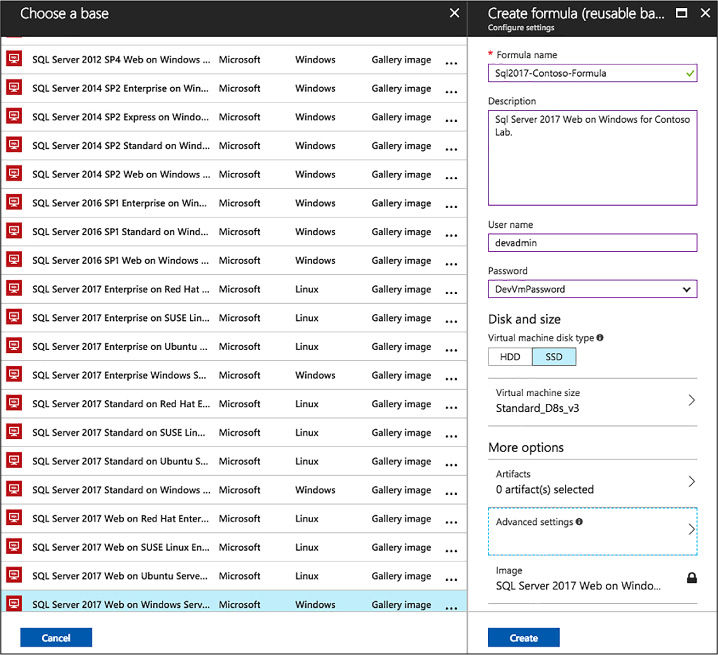

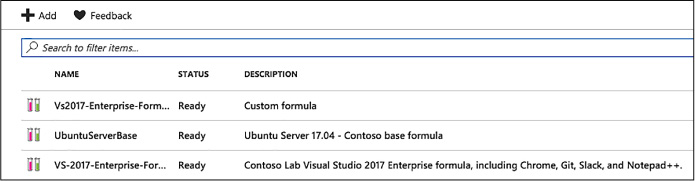

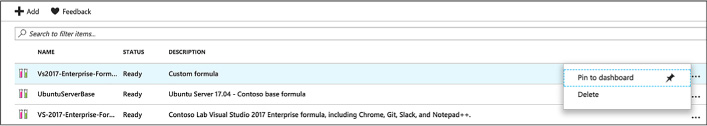

Create a formula from a base 129

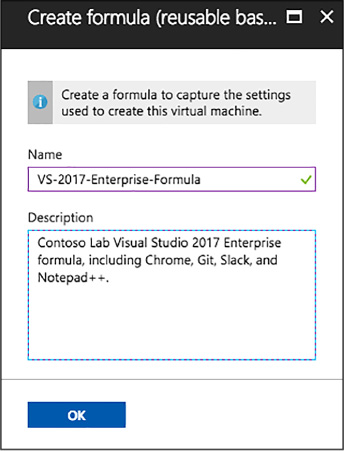

Create a formula from a VM 131

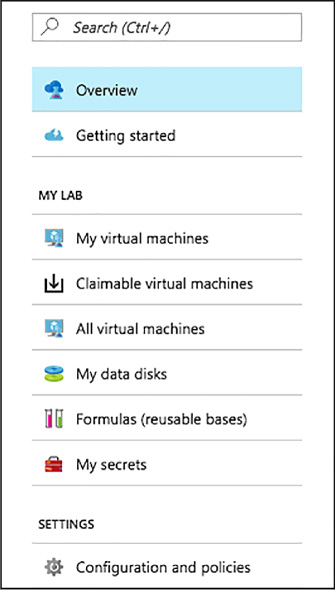

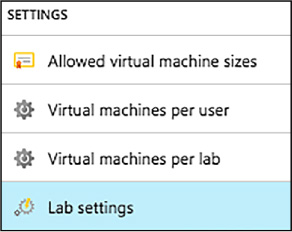

Configure a lab to include policies and procedures 136

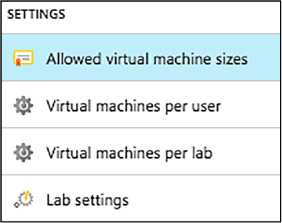

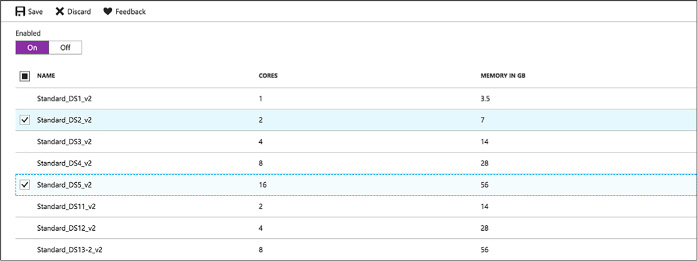

Configure allowed virtual machine sizes policy 136

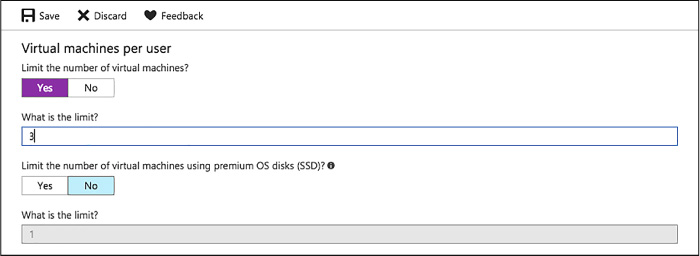

Configure virtual machines per user policy 138

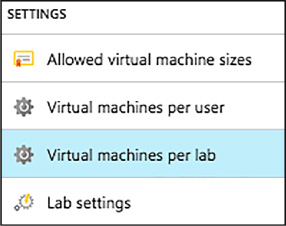

Configure virtual machines per lab policy 139

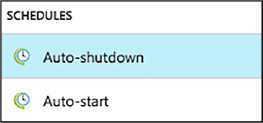

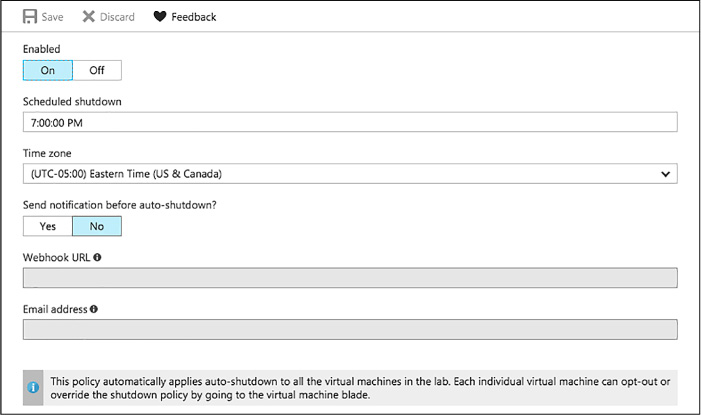

Configure auto-shutdown policy 140

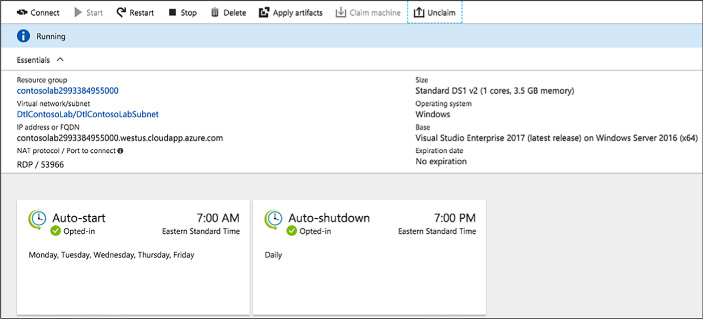

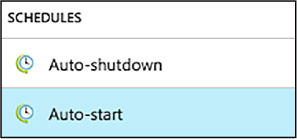

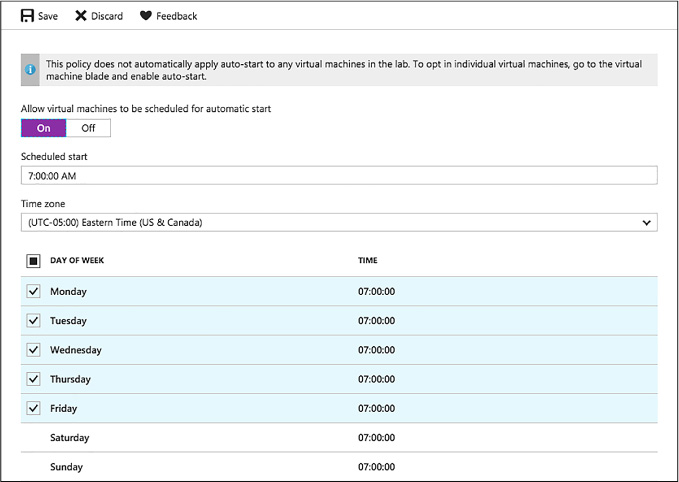

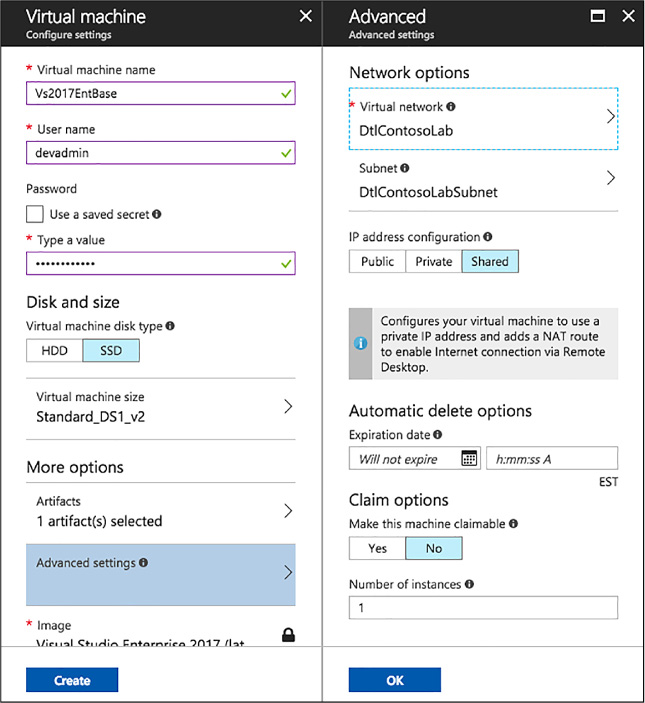

Configure auto-start policy 144

Set expiration date policy 146

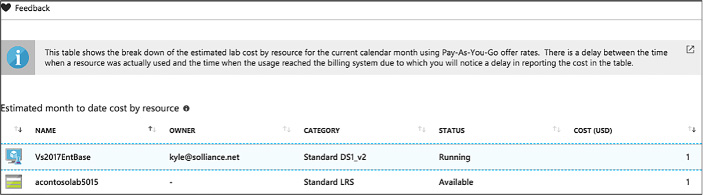

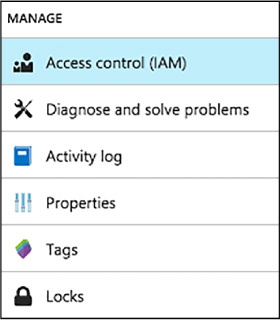

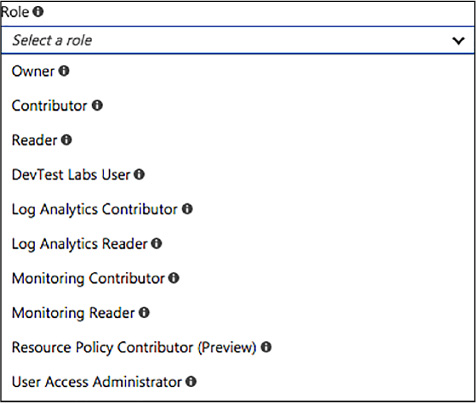

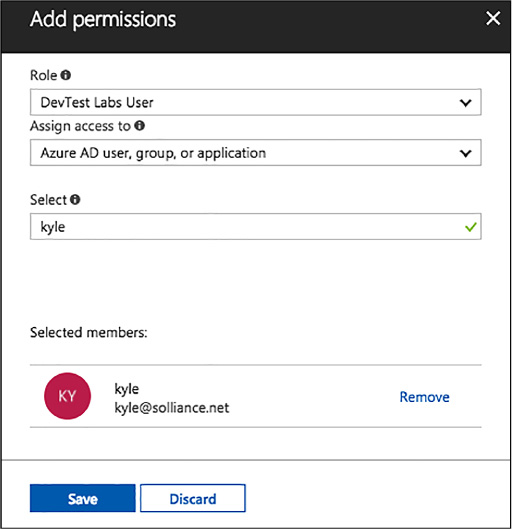

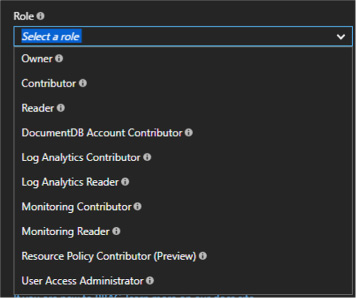

Add an owner or user at the lab level 154

Add an external user to a lab using PowerShell 157

Use lab settings to set access rights to the environment 158

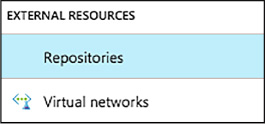

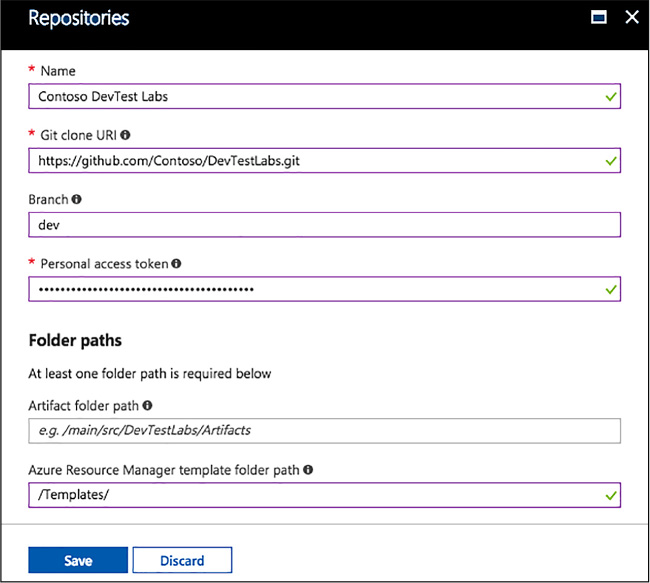

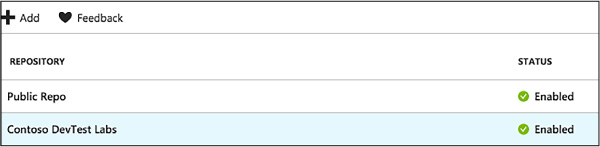

Configure an ARM template repository 160

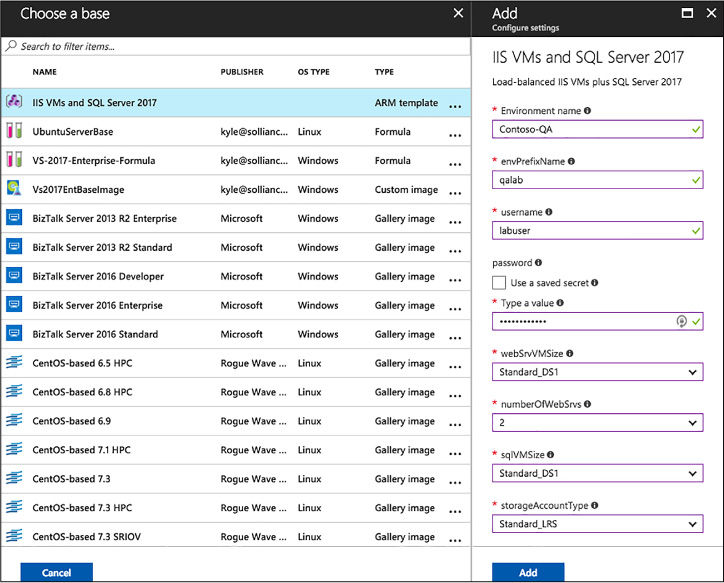

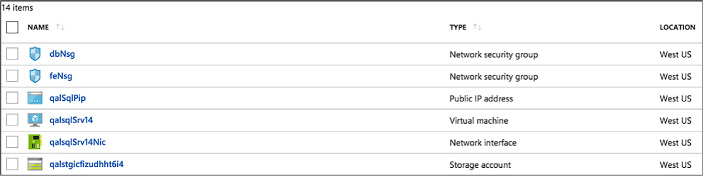

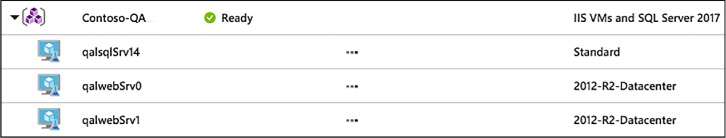

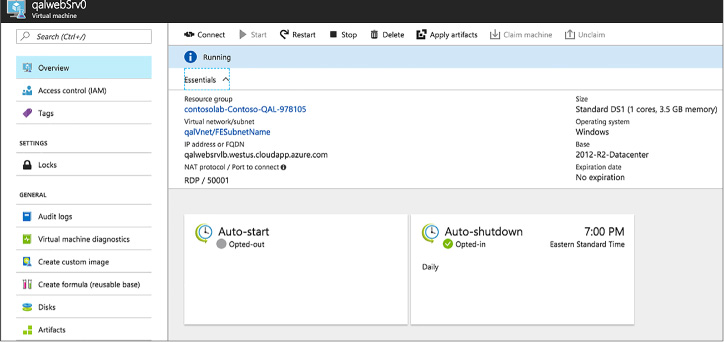

Create an environment from an ARM template 163

Chapter 2. Design and implement a storage and data strategy 169

Skill 2.1: Implement Azure Storage blobs and Azure files 169

Create a blob storage account 170

Set metadata on a container 173

Setting user-defined metadata 174

Reading user-defined metadata 174

Store data using block and page blobs 175

Configure a Content Delivery Network with Azure Blob Storage 177

Create connections to files from on-premises or cloudbased Windows or, Linux machines 181

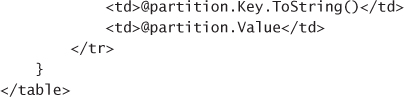

Skill 2.2: Implement Azure Storage tables, queues, and Azure Cosmos DB Table API 185

Using basic CRUD operations 186

Inserting multiple records in a transaction 189

Getting records in a partition 189

Designing, managing, and scaling table partitions 192

Adding messages to a queue 193

Retrieving a batch of messages 195

Choose between Azure Storage Tables and Azure Cosmos DB Table API 196

Skill 2.3: Manage access and monitor storage 197

Generate shared access signatures 197

Creating an SAS token (Blobs) 198

Creating an SAS token (Queues) 199

Creating an SAS token (Tables) 200

Create stored access policies 201

Regenerate storage account keys 201

Regenerating storage account keys 202

Configure and use Cross-Origin Resource Sharing 202

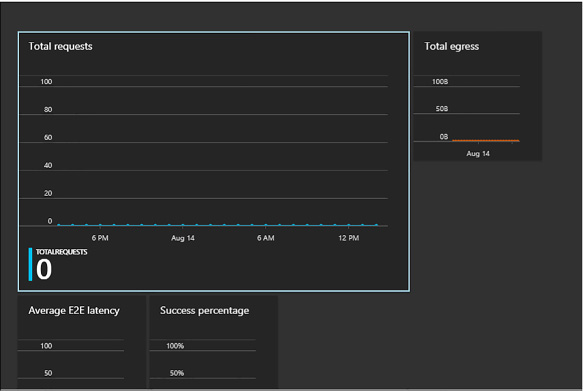

Configuring storage metrics and retention 204

Configure Storage Analytics Logging 209

Enable client-side logging 211

View logs with Microsoft Excel 212

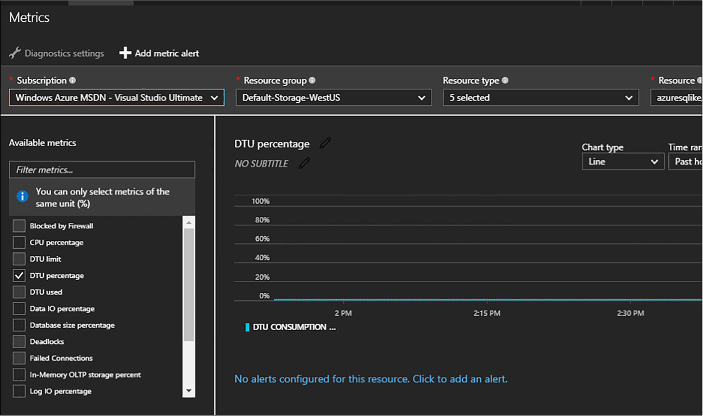

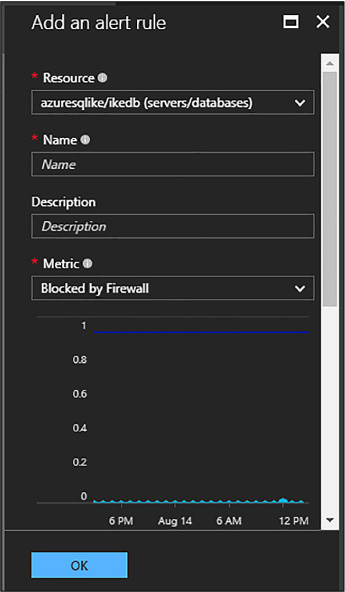

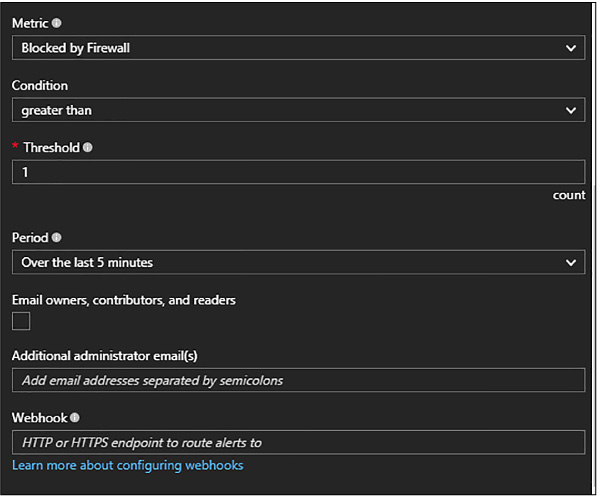

Skill 2.4: Implement Azure SQL databases 213

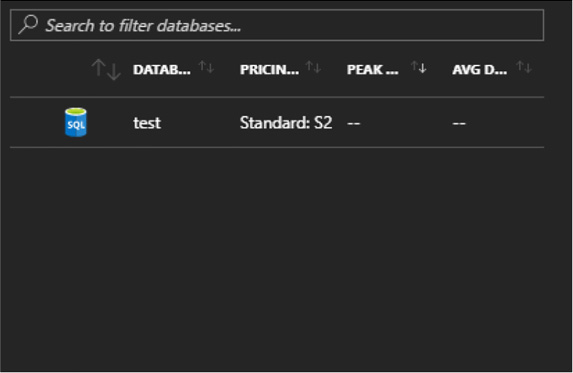

Choosing the appropriate database tier and performance level 213

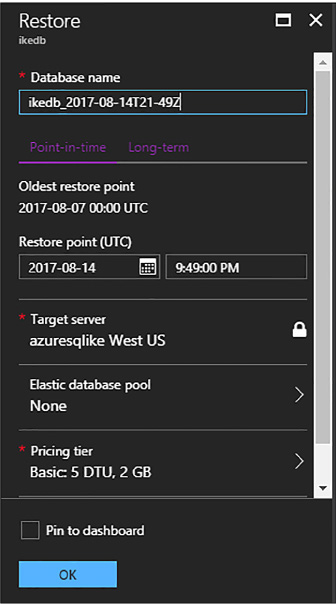

Configuring and performing point in time recovery 217

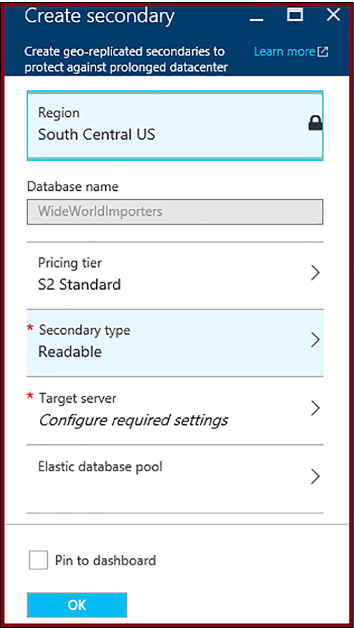

Creating an offline secondary database 220

Creating an online secondary database 220

Creating an online secondary database 221

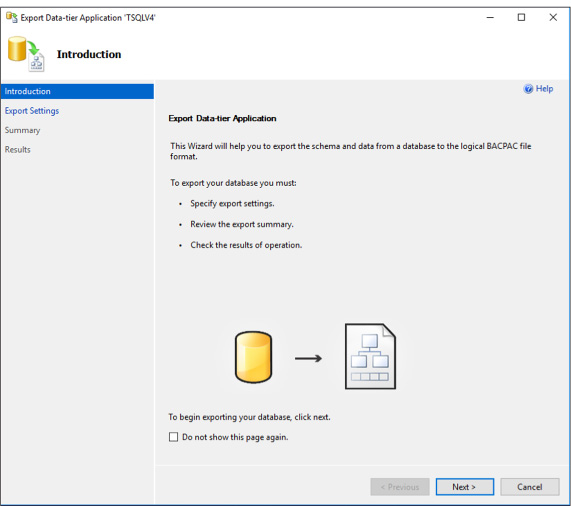

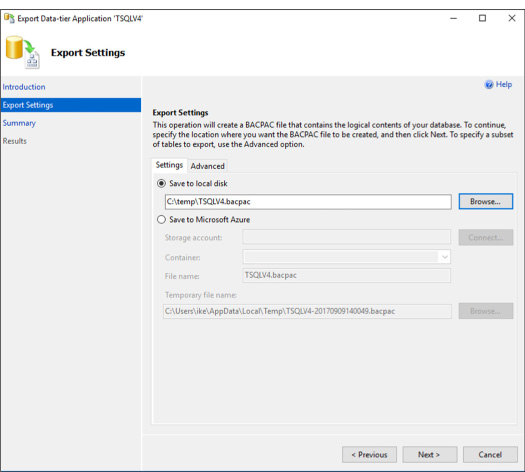

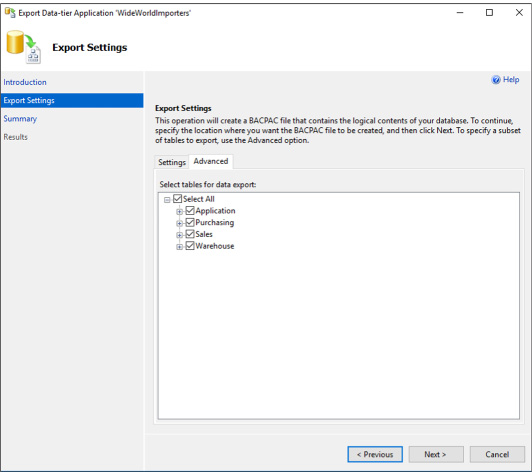

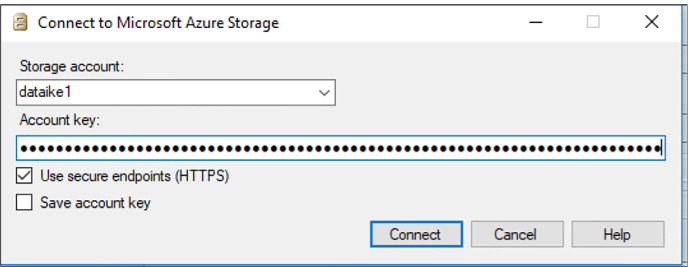

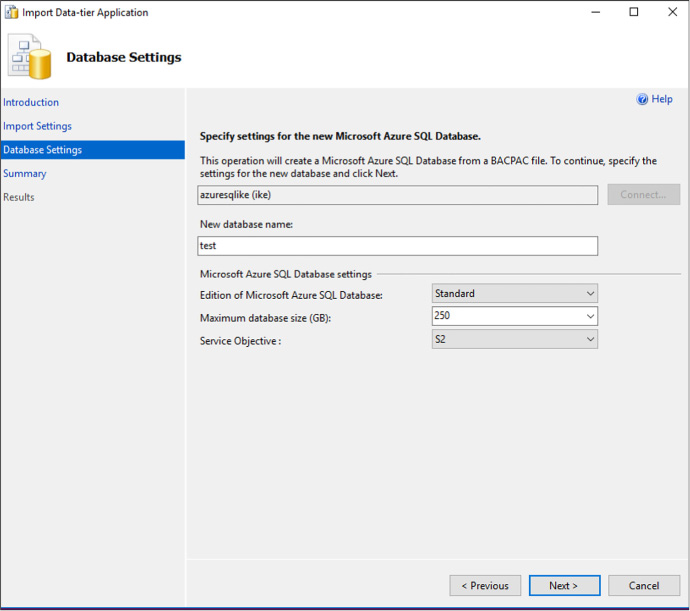

Import and export schema and data 222

Import BACPAC file into Azure SQL Database 225

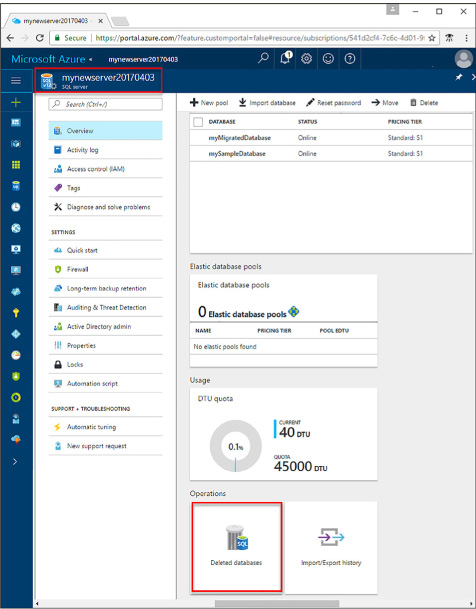

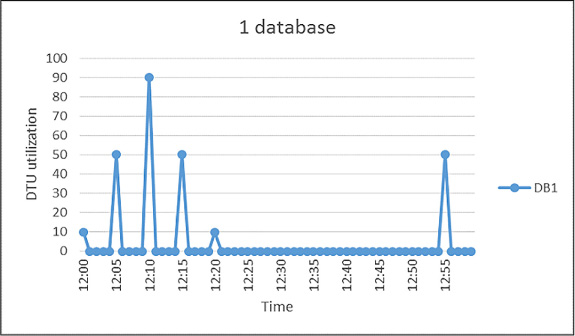

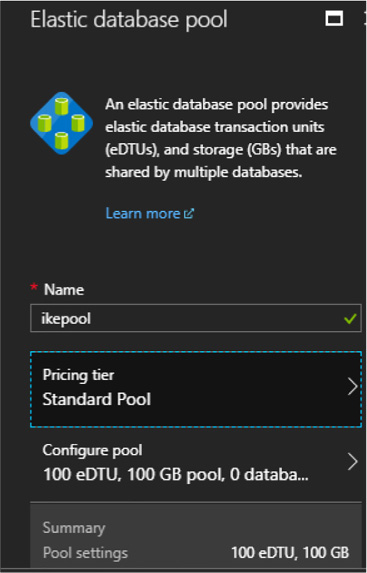

Managed elastic pools, including DTUs and eDTUs 229

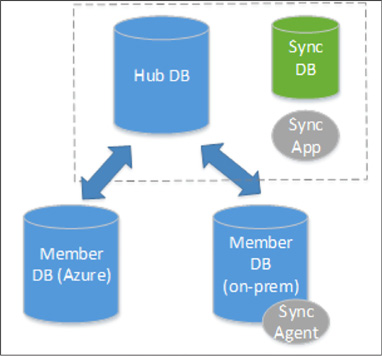

Implement Azure SQL Data Sync 233

Implement graph database functionality in Azure SQL Database 234

Skill 2.5: Implement Azure Cosmos DB DocumentDB 236

Choose the Cosmos DB API surface 237

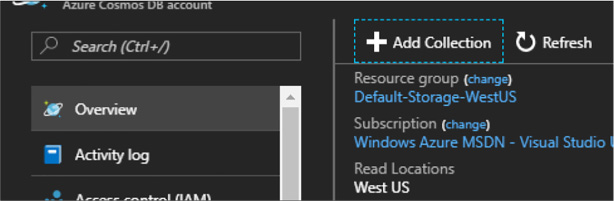

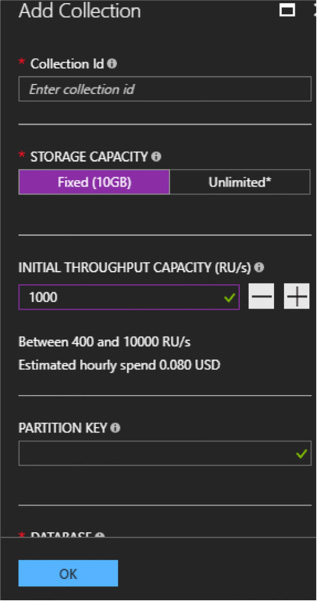

Create Cosmos DB API Database and Collections 238

Create Graph API databases 243

Implement MongoDB database 244

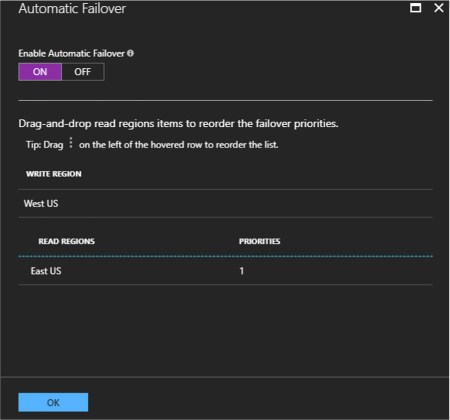

Manage scaling of Cosmos DB, including managing partitioning, consistency, and RUs 244

Implement stored procedures 252

Access Cosmos DB from REST interface 252

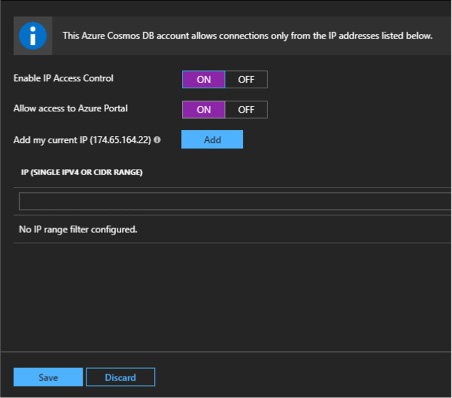

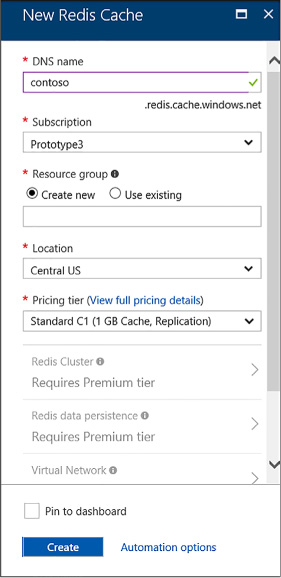

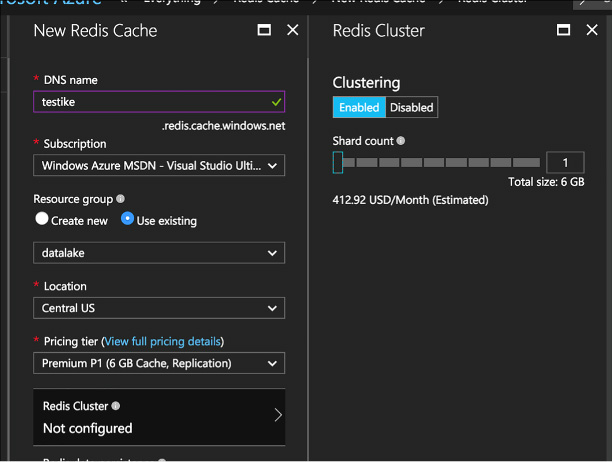

Skill 2.6: Implement Redis caching 256

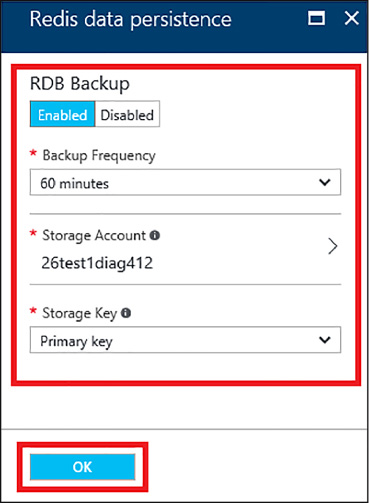

Implement data persistence 259

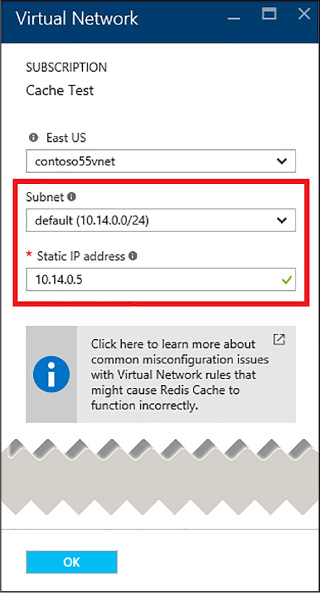

Implement security and network isolation 260

Integrate Redis caching with ASP.NET session and cache providers 262

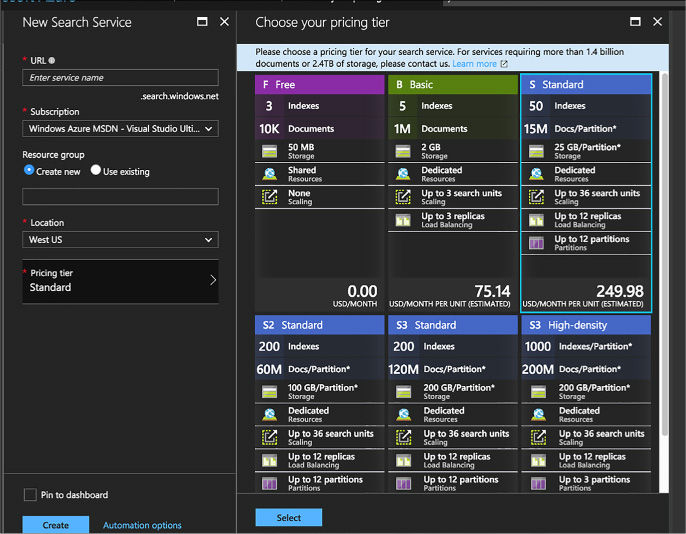

Skill 2.7: Implement Azure Search 263

Thought experiment answers 269

Chapter 3. Manage identity, application and network services 273

Skill 3.1: Integrate an app with Azure AD 273

Preparing to integrate an app with Azure AD 274

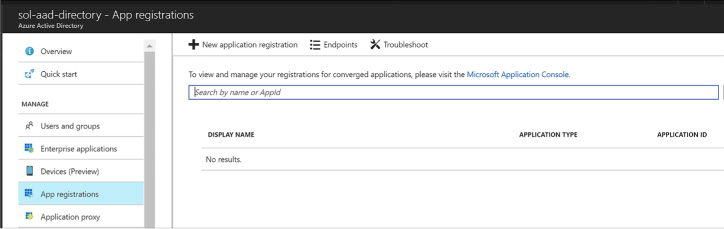

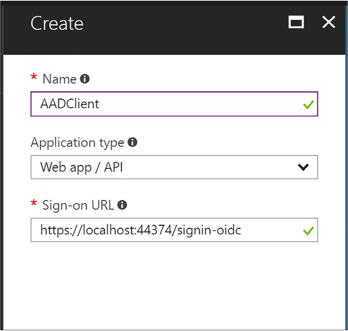

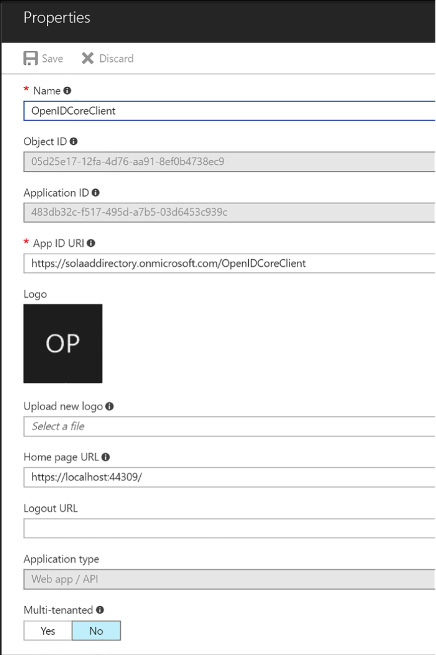

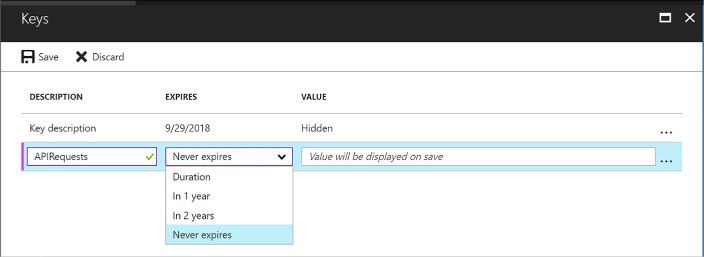

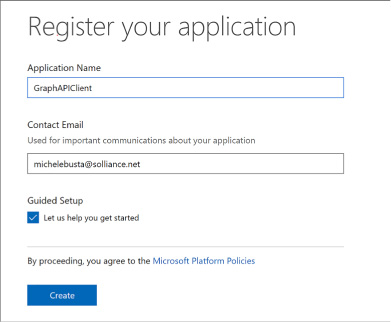

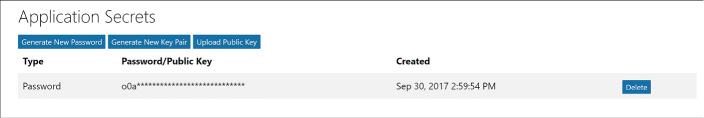

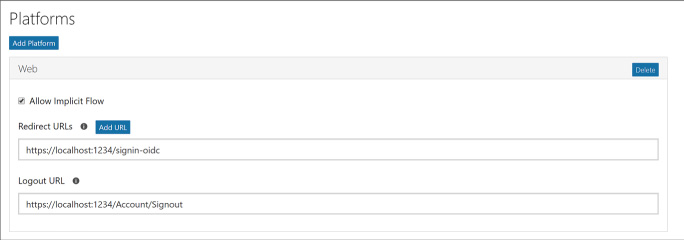

Registering an application 280

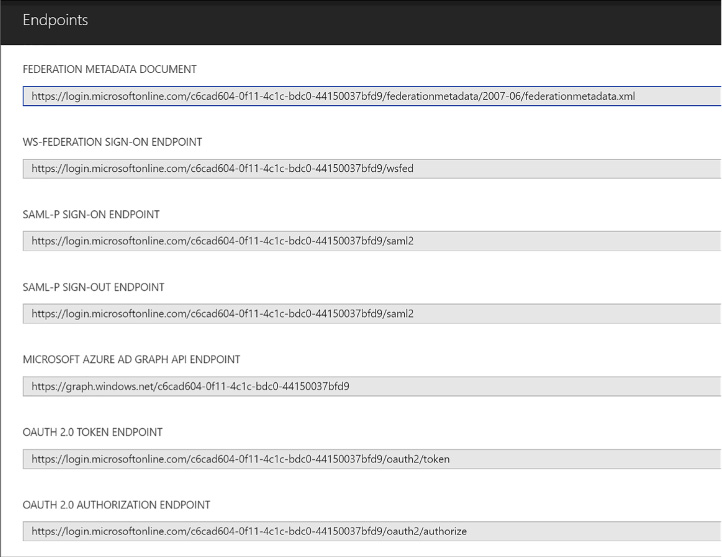

Viewing integration endpoints 282

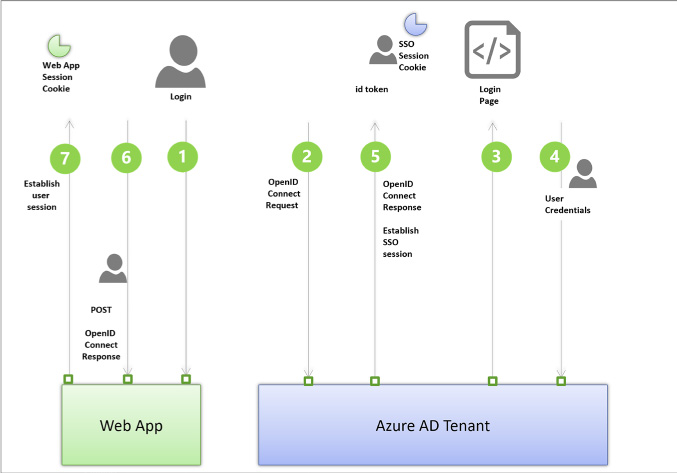

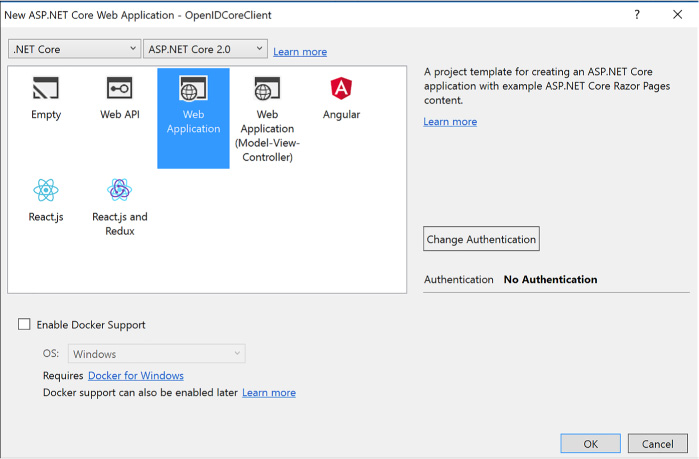

Develop apps that use WS-Federation, SAML-P, OpenID Connect and OAuth endpoints 284

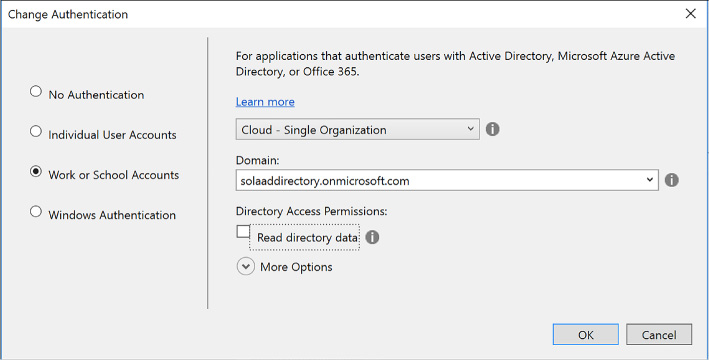

Integrating with OpenID Connect 284

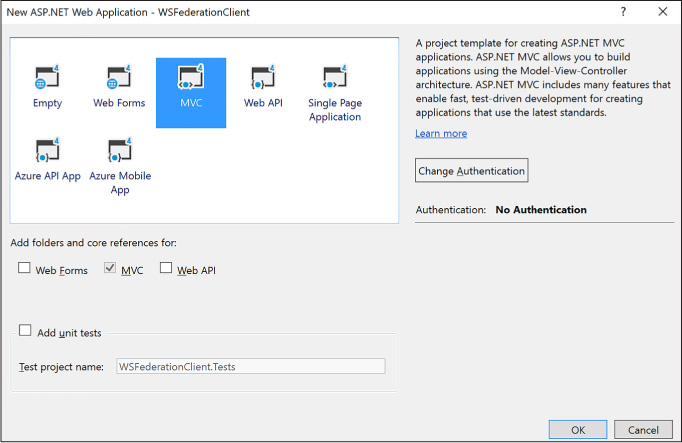

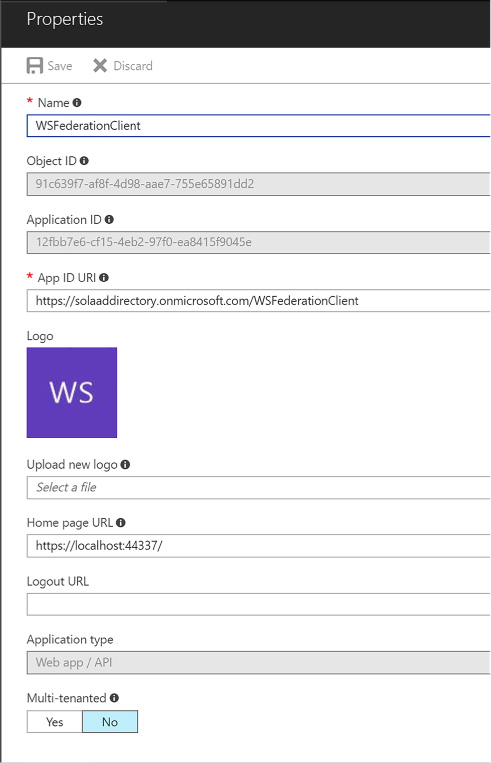

Integrating with WS-Federation 290

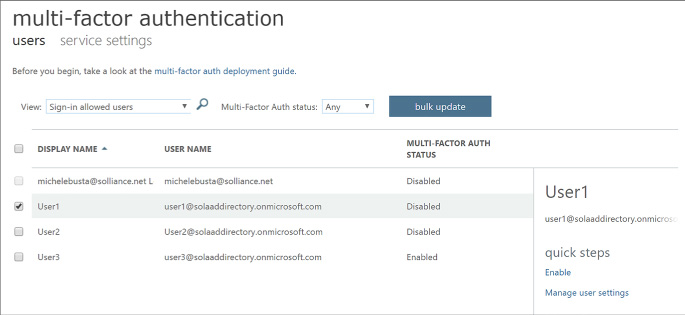

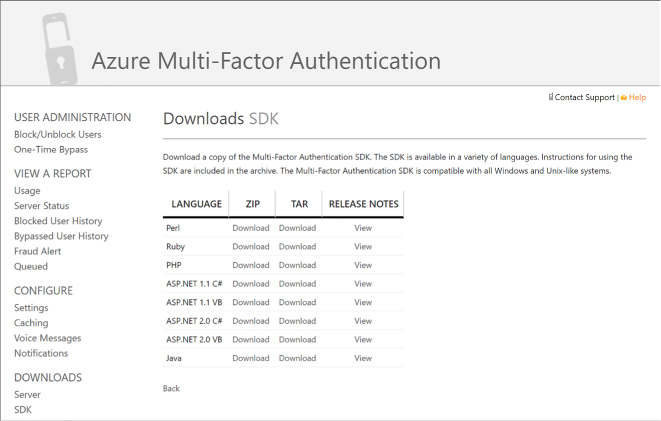

Query the directory using Microsoft Graph API, MFA and MFA API 295

Query the Microsoft Graph API 296

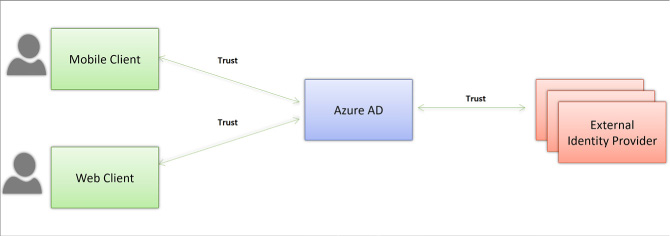

Skill 3.2: Develop apps that use Azure AD B2C and Azure AD B2B 310

Design and implement apps that leverage social identity provider authentication 310

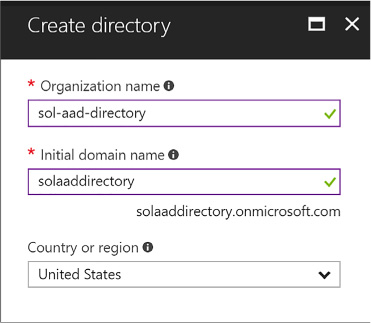

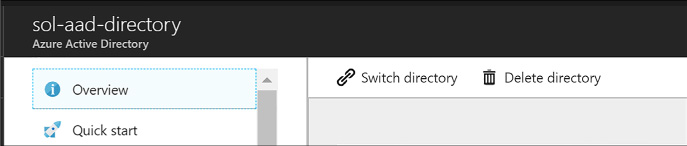

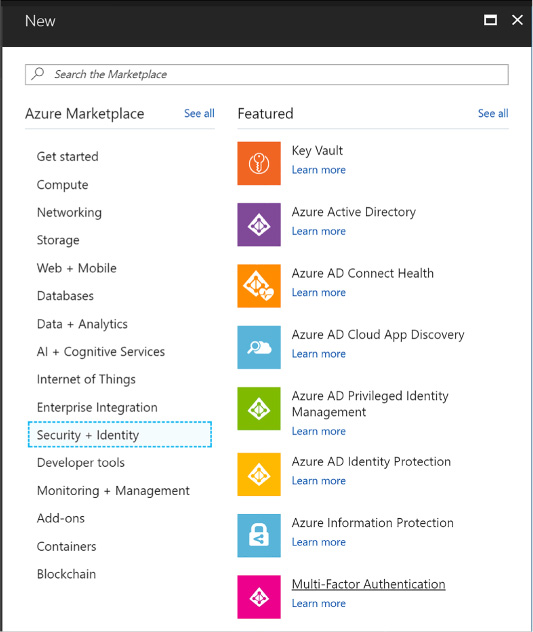

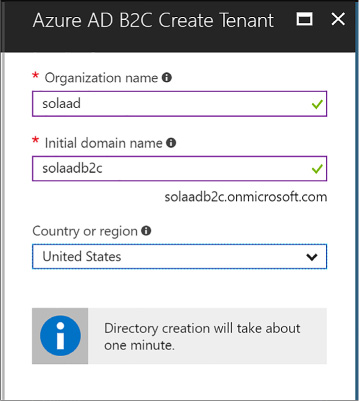

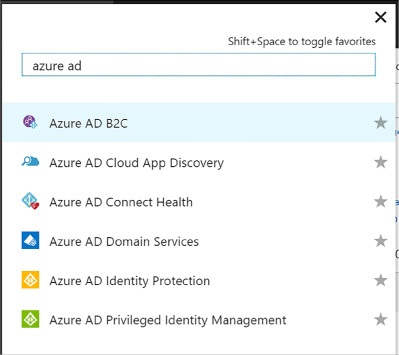

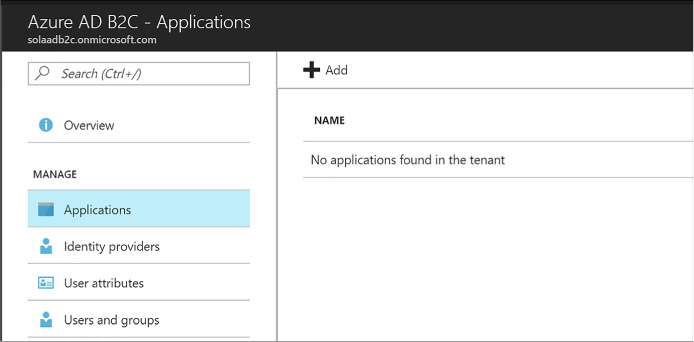

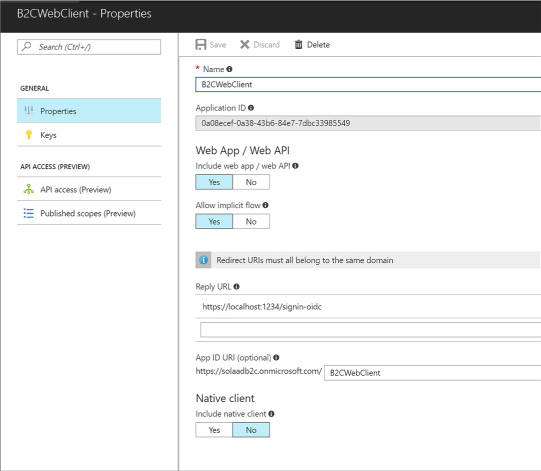

Create an Azure AD B2C tenant 312

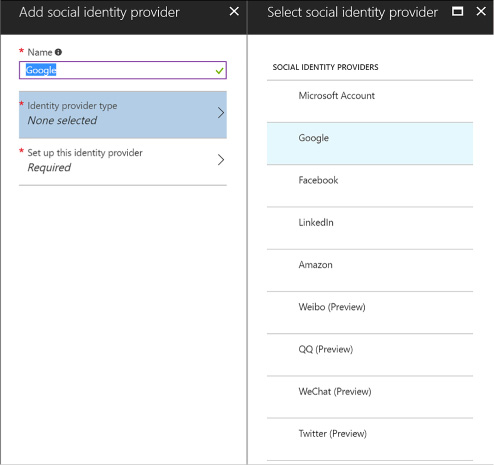

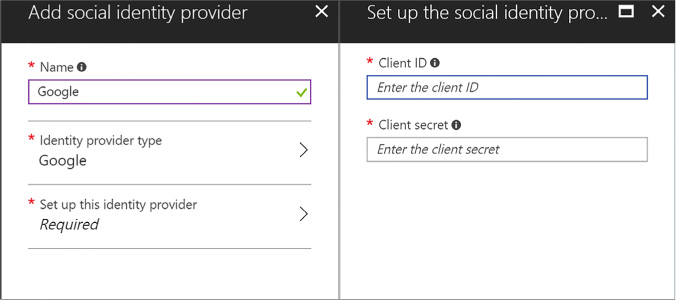

Configure identity providers 317

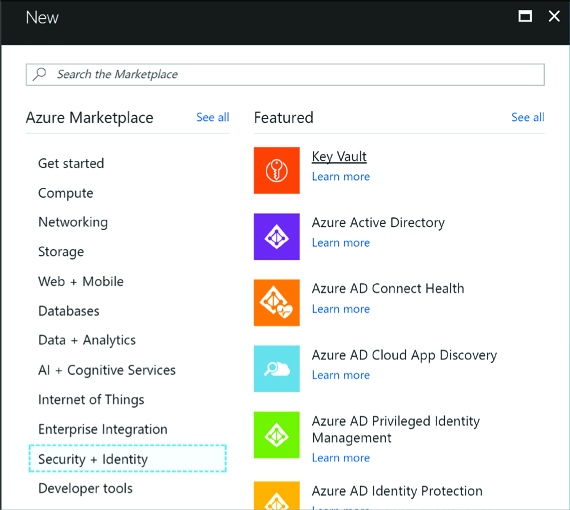

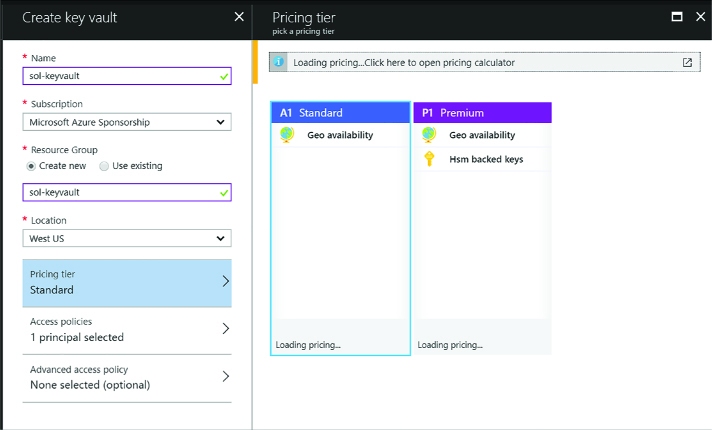

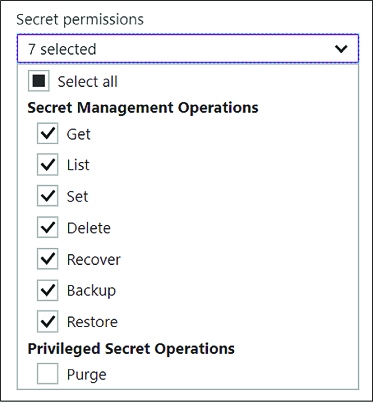

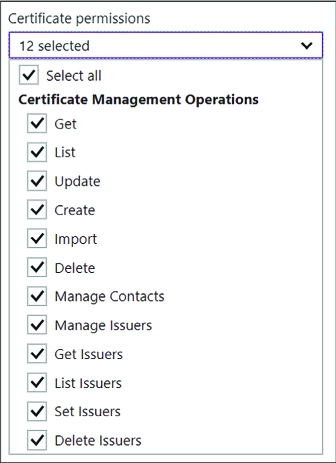

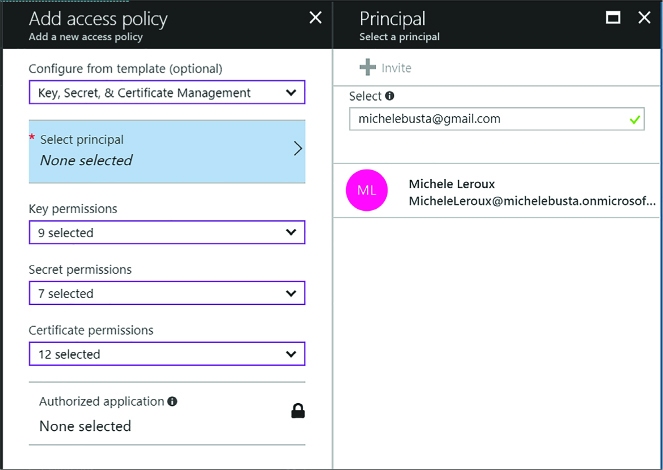

Skill 3.3: Manage Secrets using Azure Key Vault 321

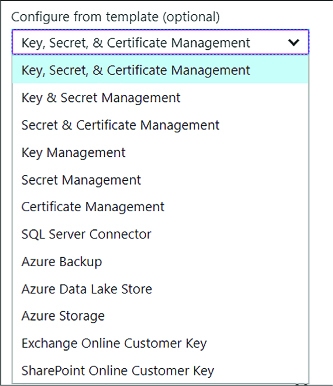

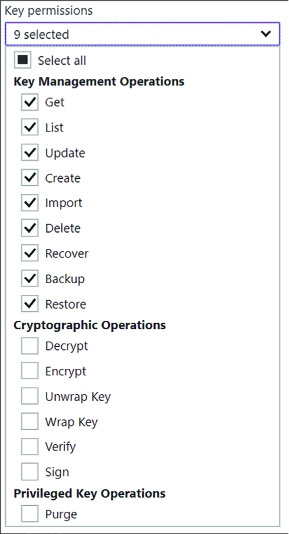

Manage access, including tenants 323

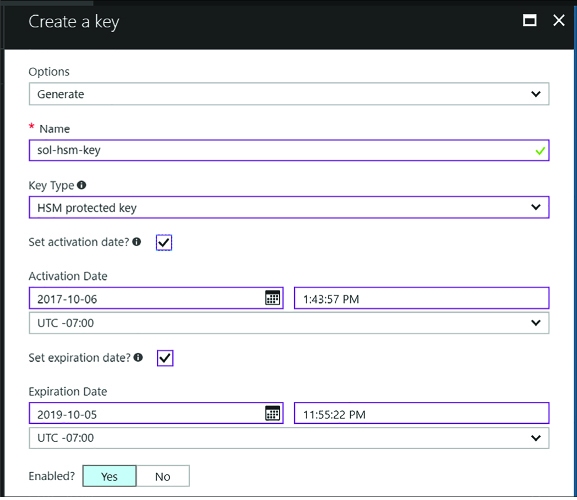

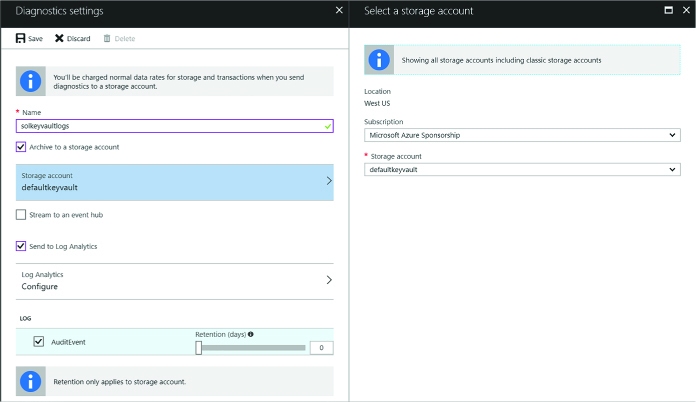

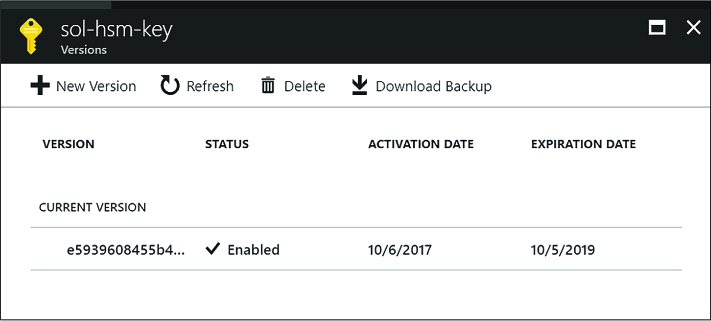

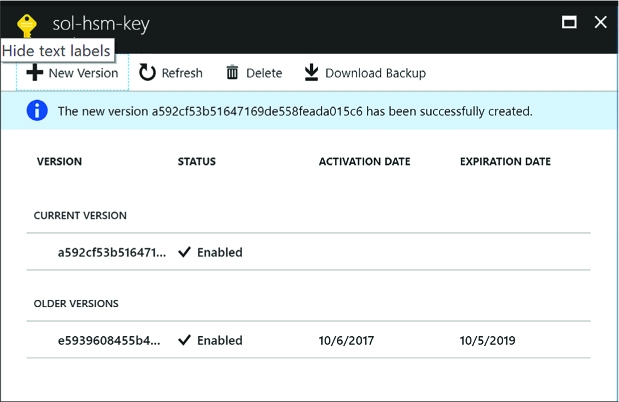

Implement HSM protected keys 329

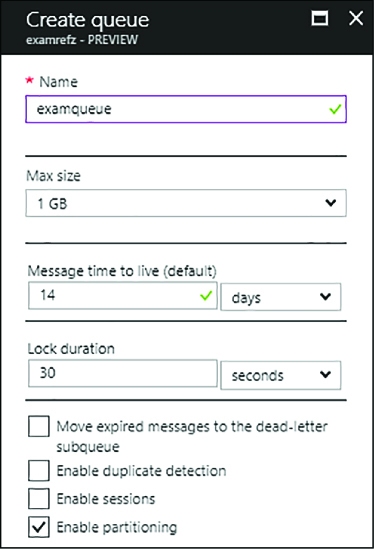

Skill 3.4: Design and implement a messaging strategy 335

Selecting a protocol for messaging 338

Introducing the Azure Relay 339

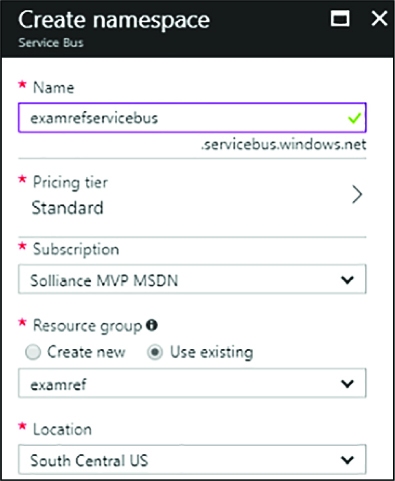

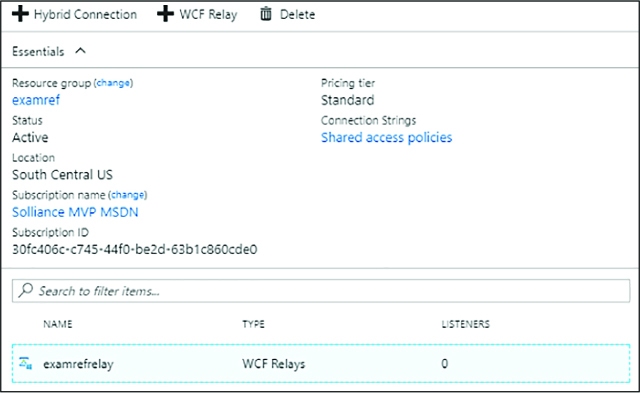

DEPLOY AN AZURE RELAY NAMESPACE 340

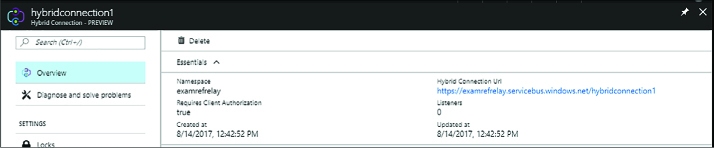

DEPLOY A HYBRID CONNECTION 340

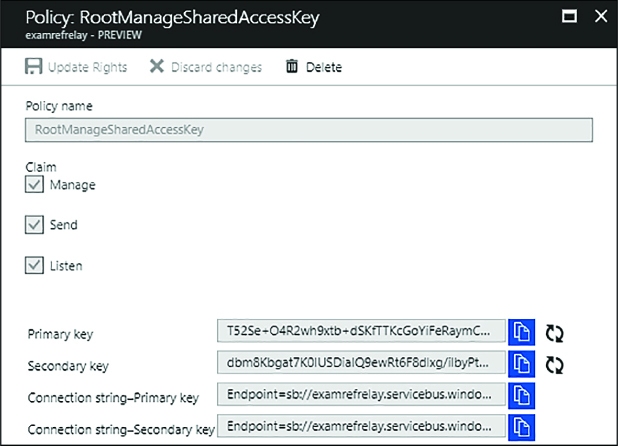

RETRIEVE THE CONNECTION CONFIGURATION 341

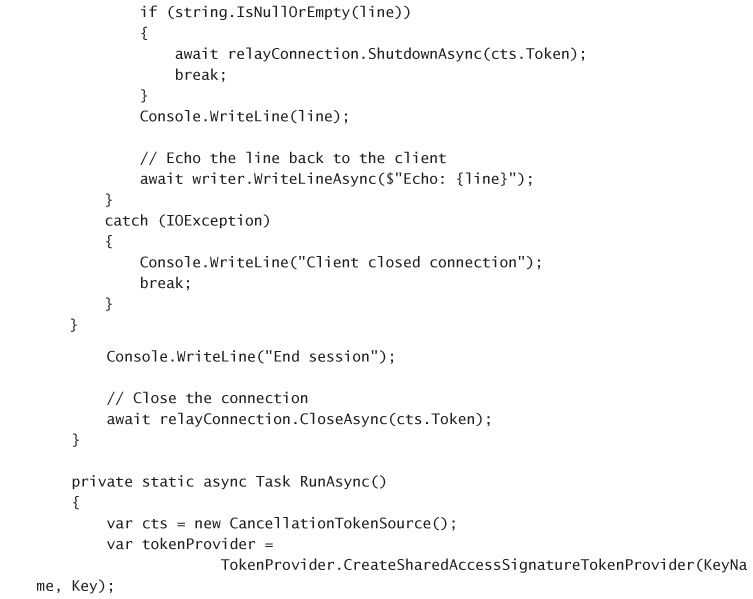

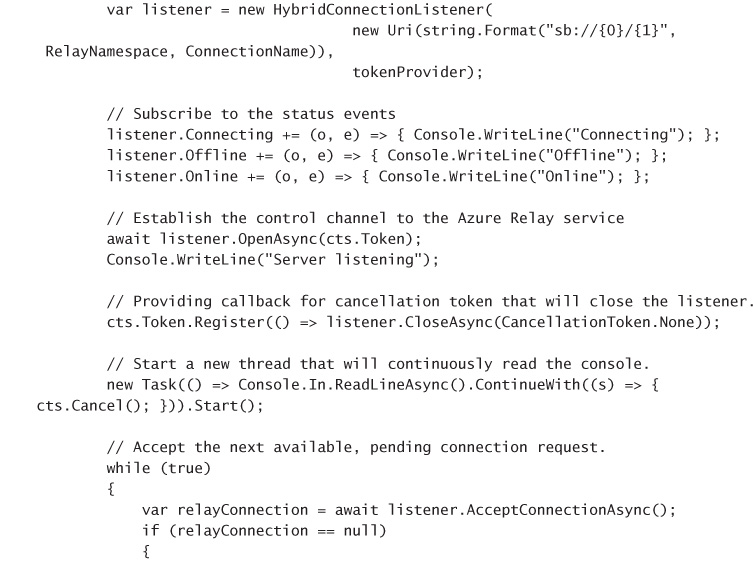

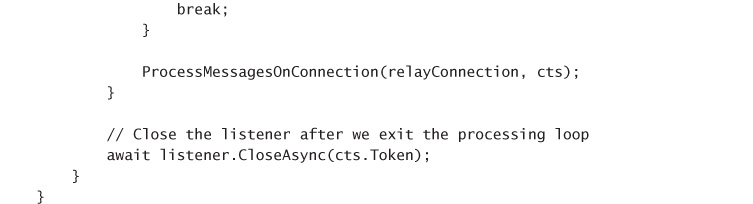

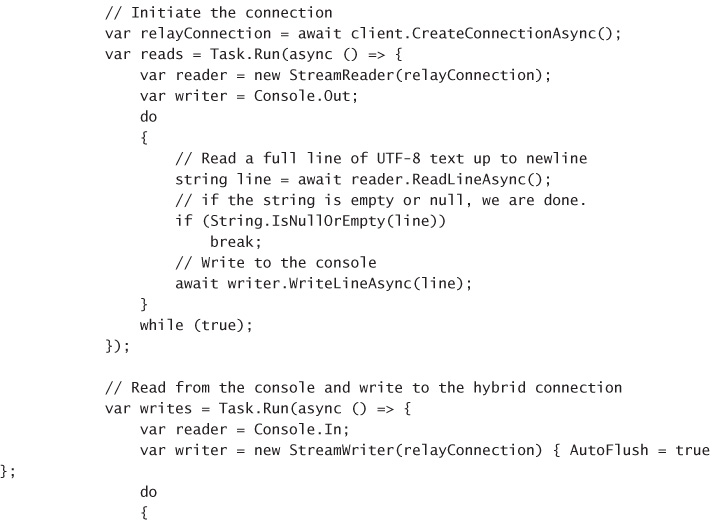

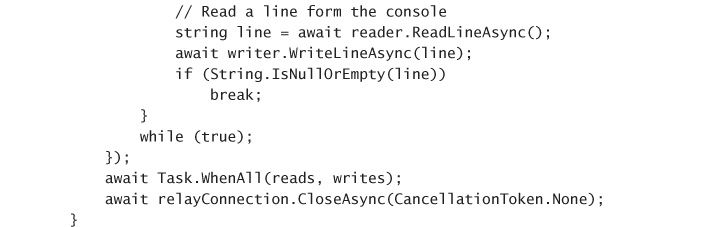

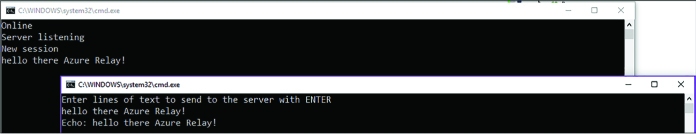

CREATE A LISTENER APPLICATION 342

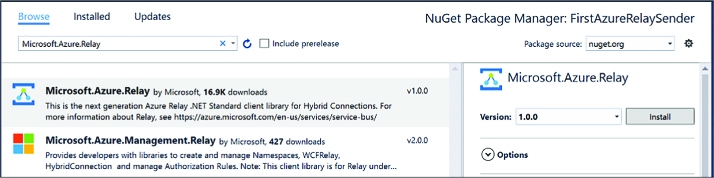

CREATE A SENDER APPLICATION 345

Managing relay credentials 351

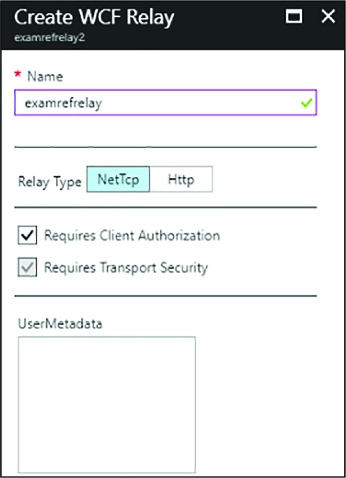

CREATING A RELAY AND LISTENER ENDPOINT 353

SENDING MESSAGES THROUGH RELAY 355

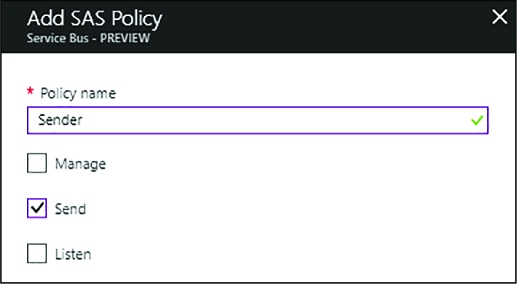

Managing queue credentials 358

FINDING QUEUE CONNECTION STRINGS 359

SENDING MESSAGES TO A QUEUE 360

RECEIVING MESSAGES FROM A QUEUE 361

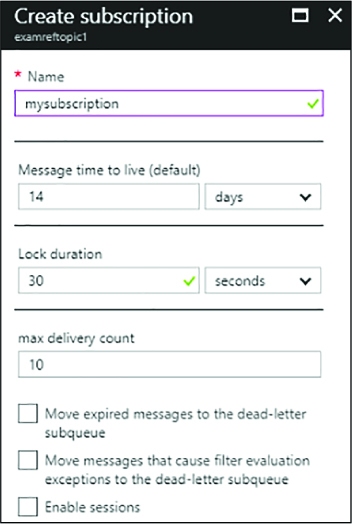

Using Service Bus topics and subscriptions 363

CREATING A TOPIC AND SUBSCRIPTION 364

MANAGING TOPIC CREDENTIALS 366

SENDING MESSAGES TO A TOPIC 367

RECEIVING MESSAGES FROM A SUBSCRIPTION 368

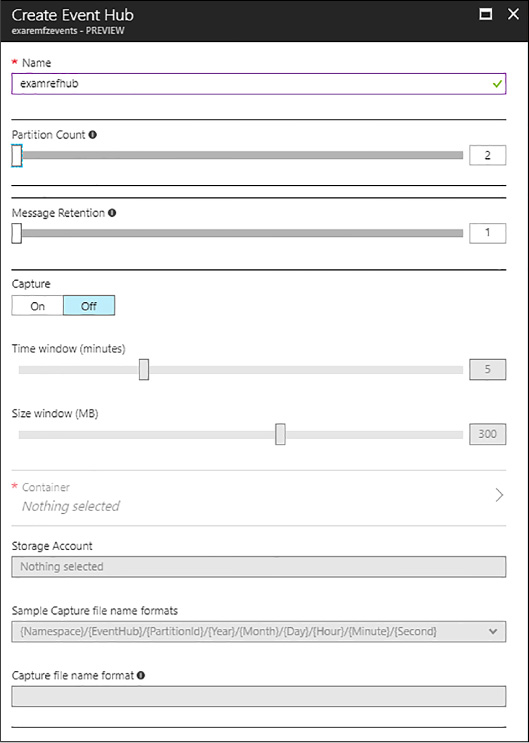

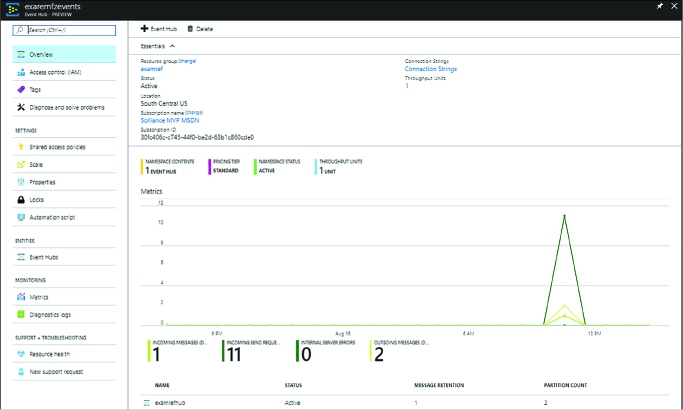

MANAGING EVENT HUB CREDENTIALS 374

FINDING EVENT HUB CONNECTION STRINGS 374

SENDING MESSAGES TO AN EVENT HUB 374

RECEIVING MESSAGES FROM A CONSUMER GROUP 375

CREATING A NOTIFICATION HUB 377

IMPLEMENTING SOLUTIONS WITH NOTIFICATION HUBS 377

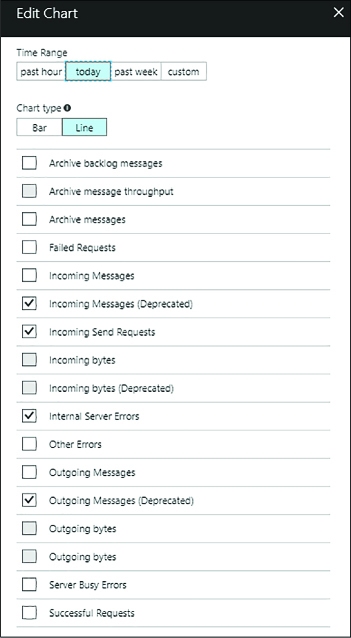

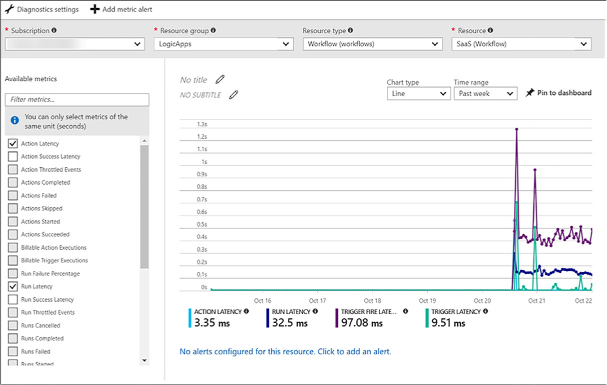

Scale and monitor messaging 378

Scaling Service Bus features 379

Monitoring Service Bus features 383

MONITORING NOTIFICATION HUBS 385

Determine when to use Event Hubs, Service Bus, IoT Hub, Stream Analytics and Notification Hubs 386

Thought experiment answers 387

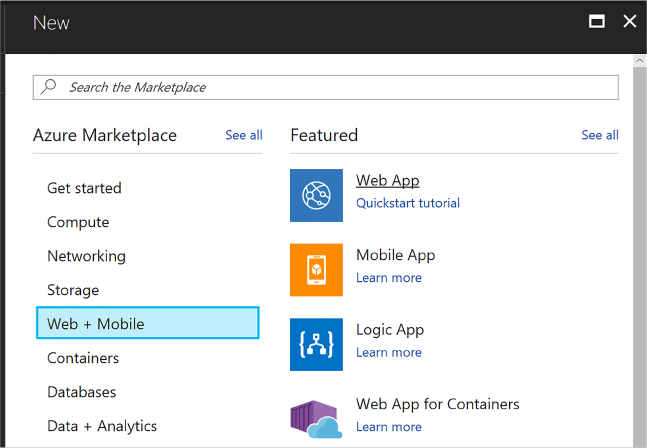

Chapter 4. Design and implement Azure PaaS compute and web and mobile services 390

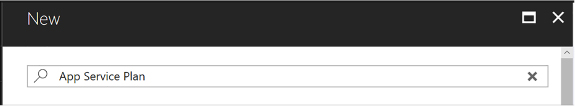

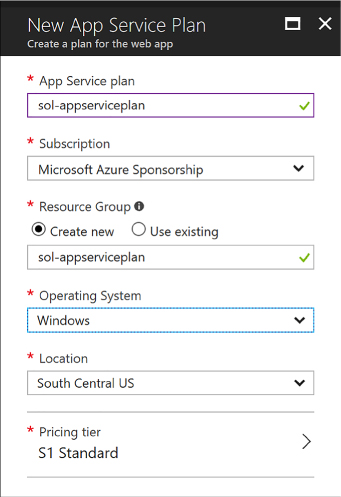

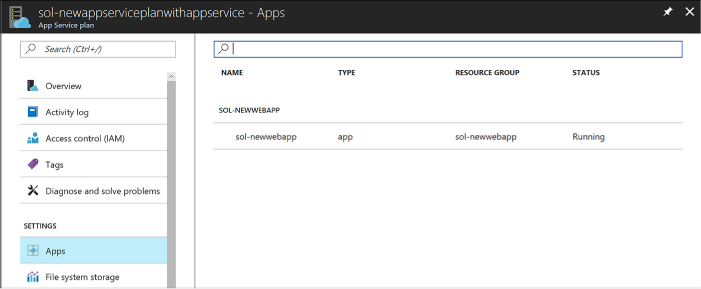

Skill 4.1: Design Azure App Service Web Apps 390

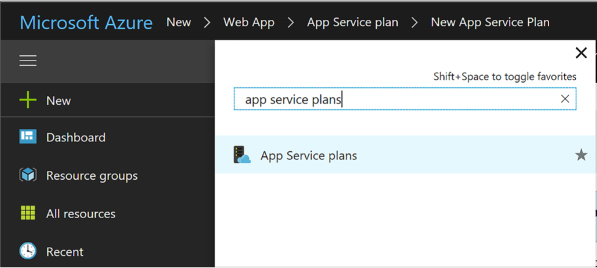

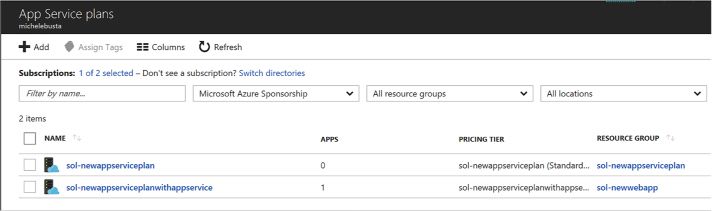

Define and manage App Service plans 391

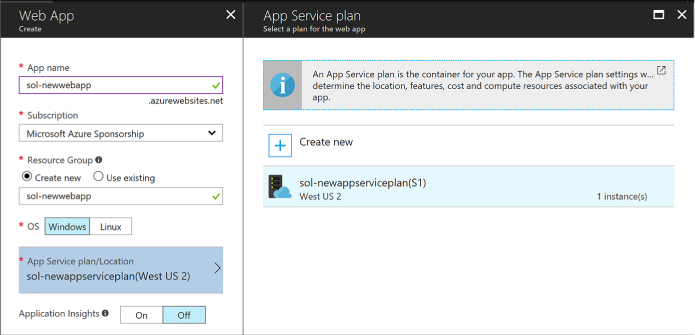

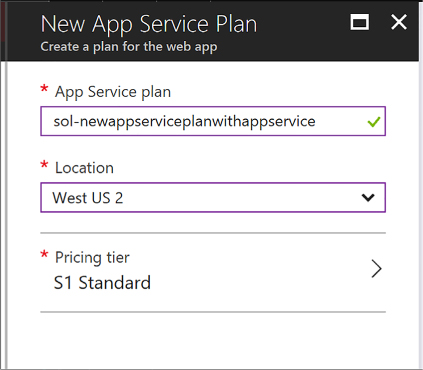

Creating a new App Service plan 391

Creating a new Web App and App Service plan 397

Review App Service plan settings 399

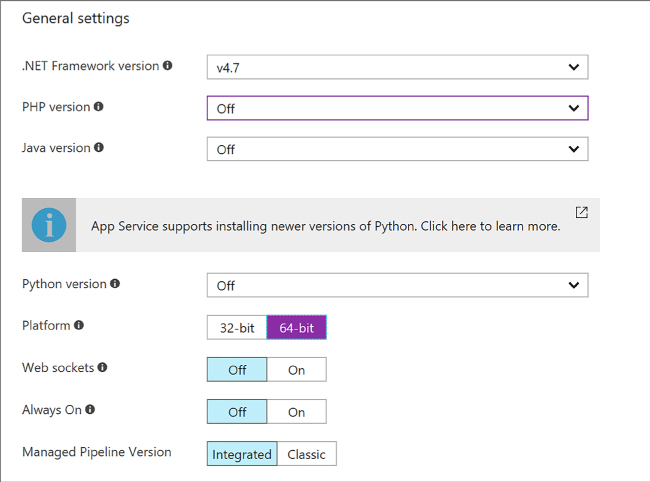

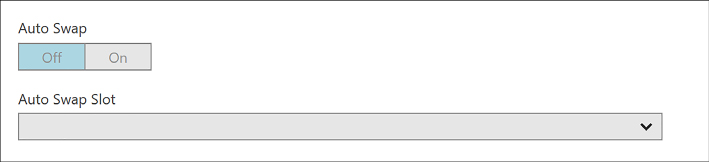

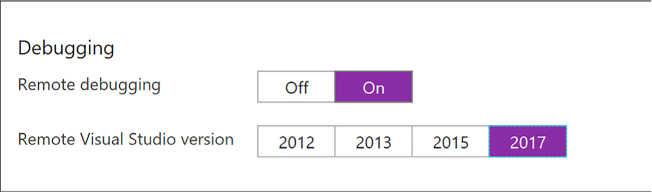

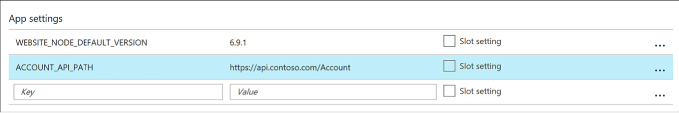

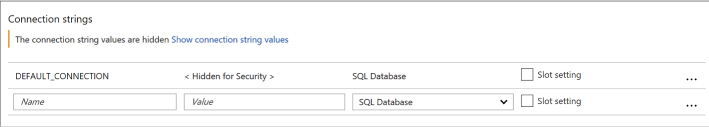

Configure Web App settings 401

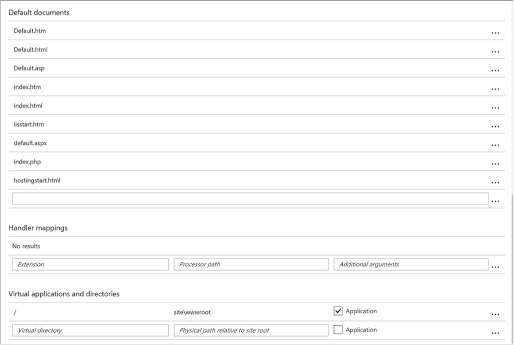

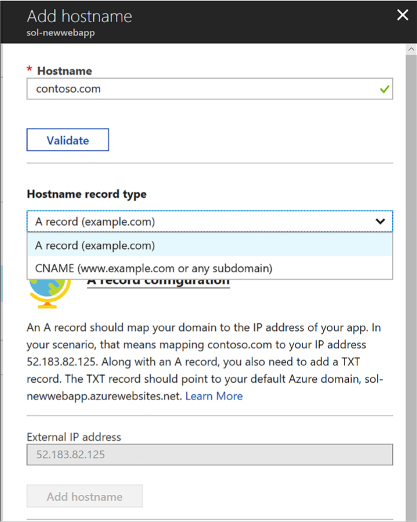

Configure Web App certificates and custom domains 406

Mapping custom domain names 407

Configuring a custom domain 408

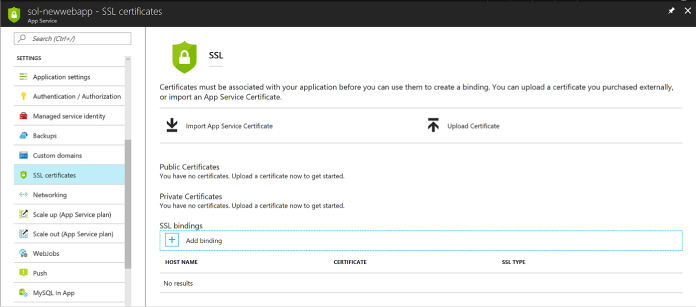

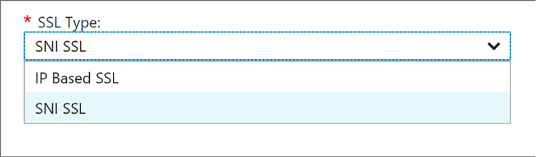

Configuring SSL certificates 412

Manage Web Apps by using the API, Azure PowerShell, and Xplat-CLI 414

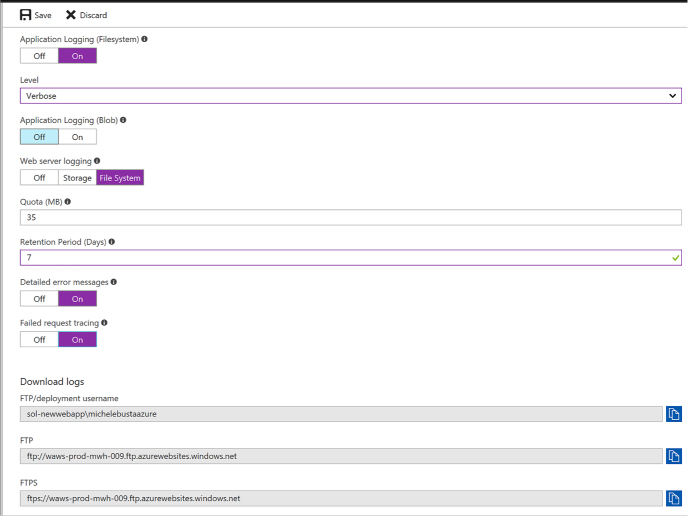

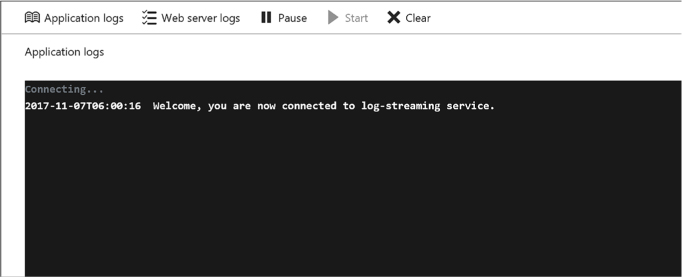

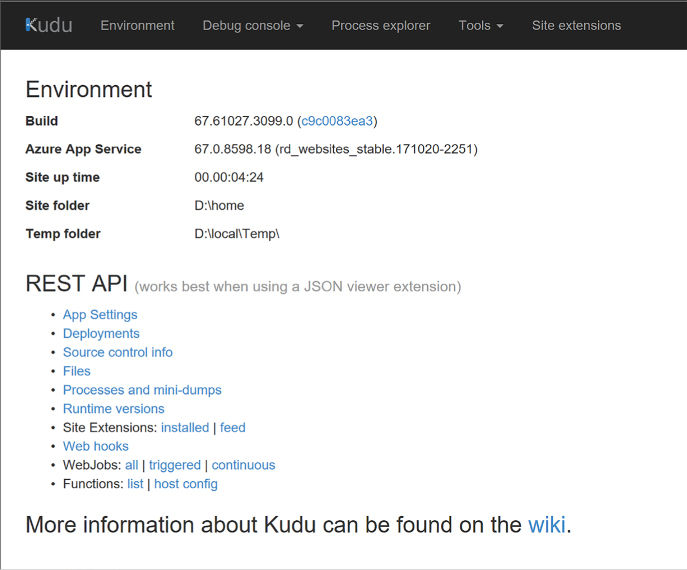

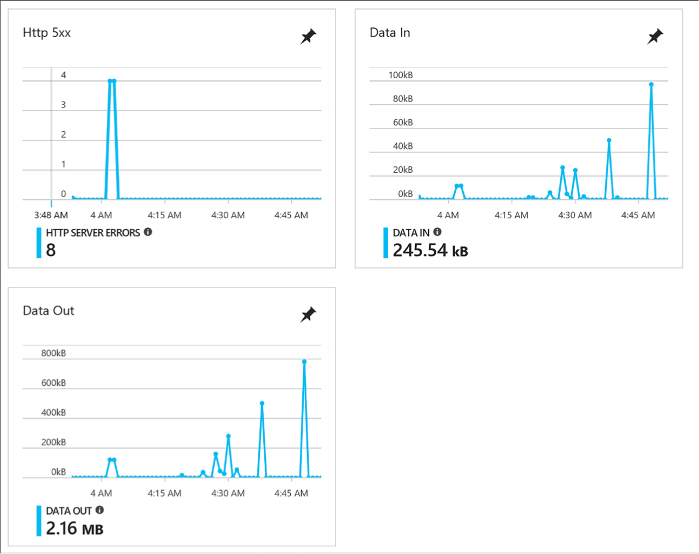

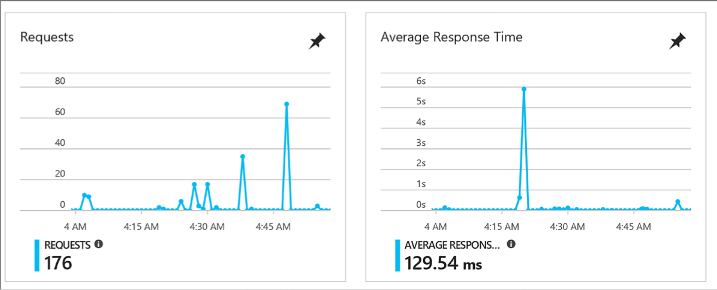

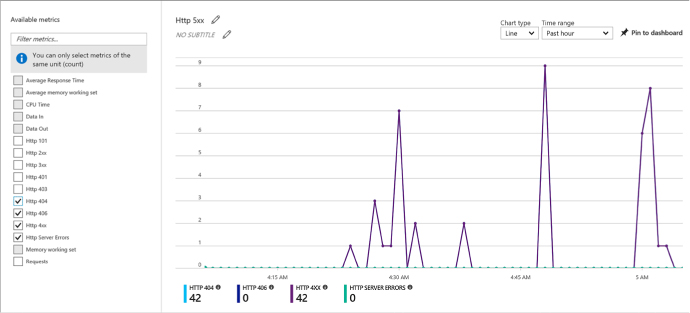

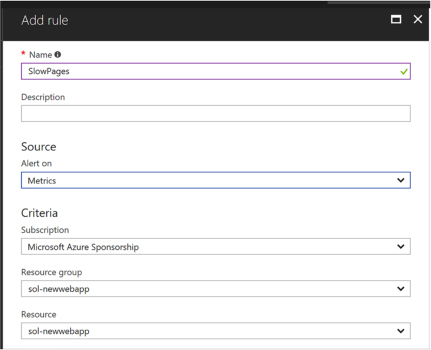

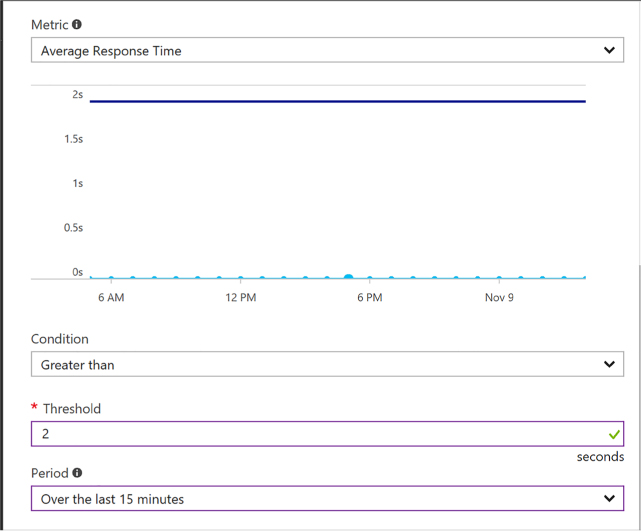

Implement diagnostics, monitoring, and analytics 414

Configure diagnostics logs 415

Configure endpoint monitoring 419

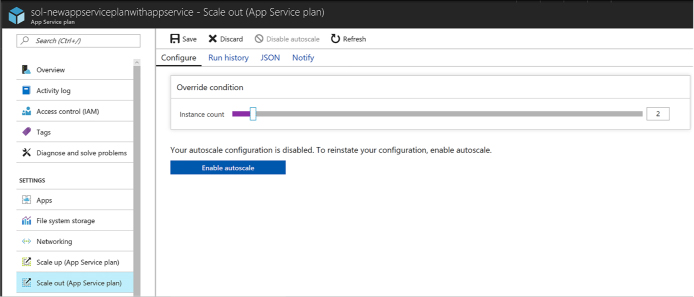

Design and configure Web Apps for scale and resilience 426

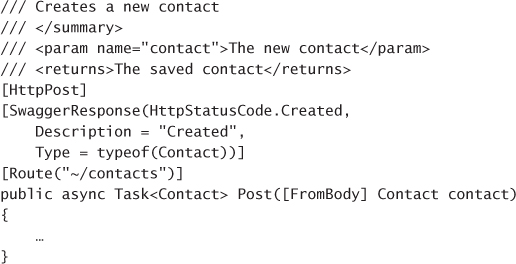

Skill 4.2: Design Azure App Service API Apps 427

Create and deploy API Apps 428

Creating a new API App from the portal 428

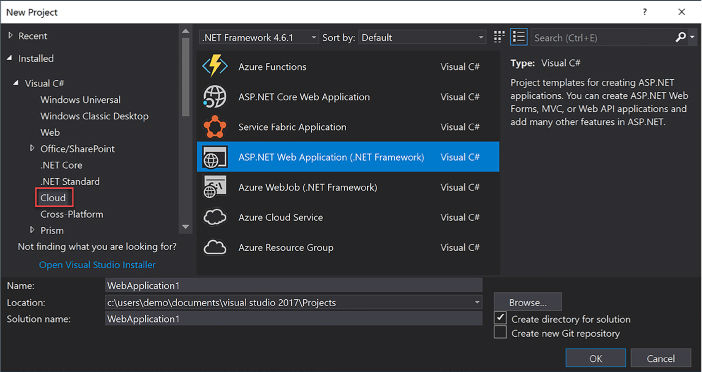

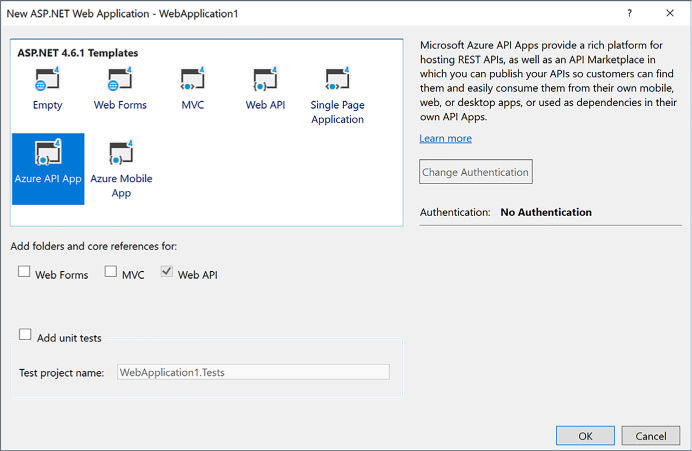

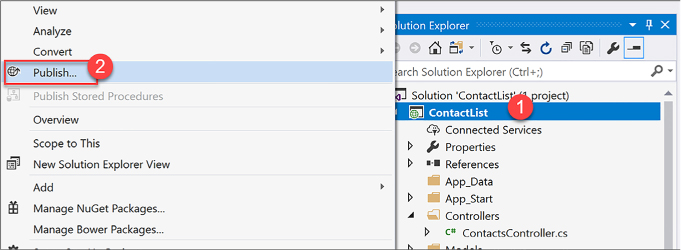

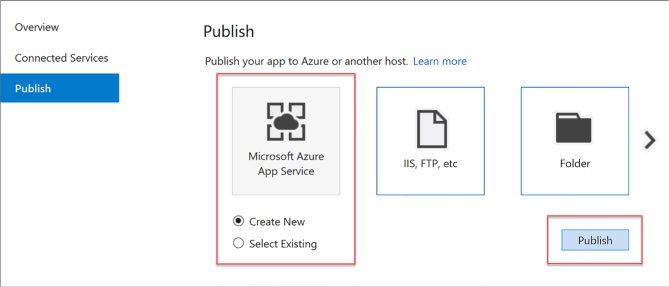

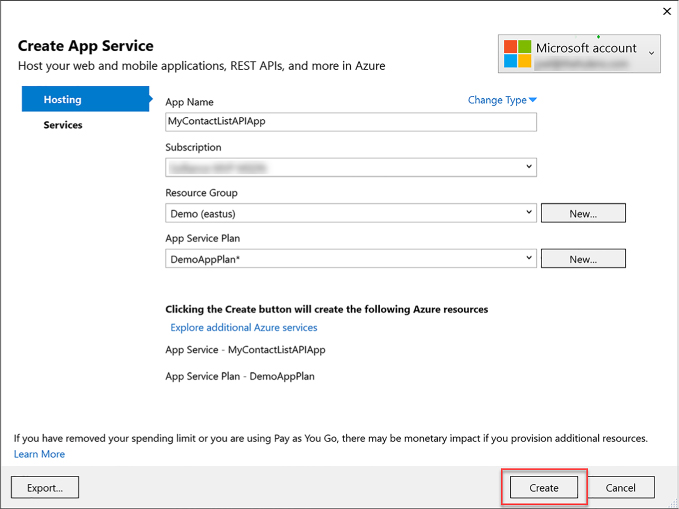

Creating and deploying a new API app with Visual Studio 2017 430

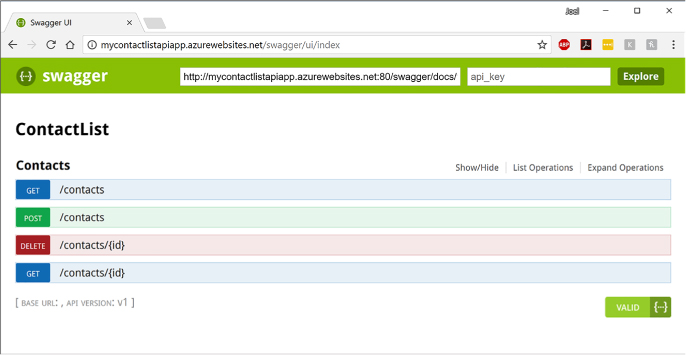

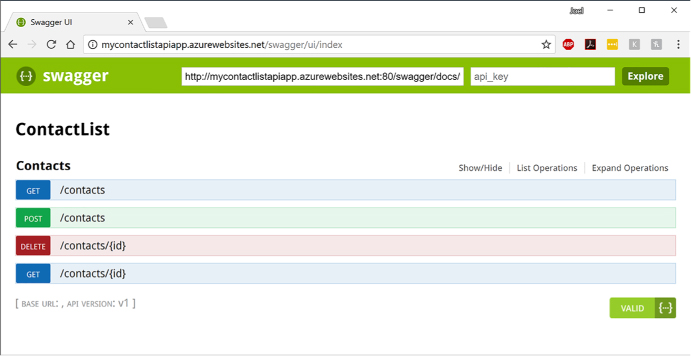

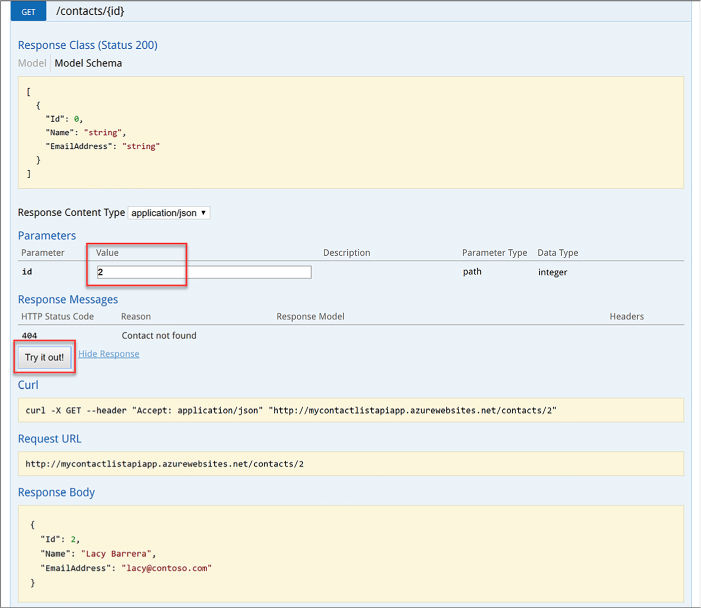

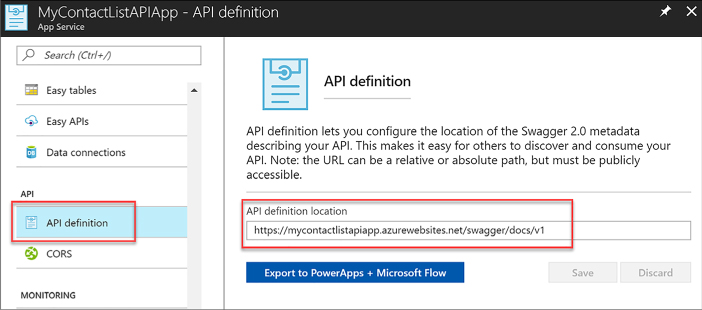

Automate API discovery by using Swashbuckle 435

Use Swashbuckle in your API App project 436

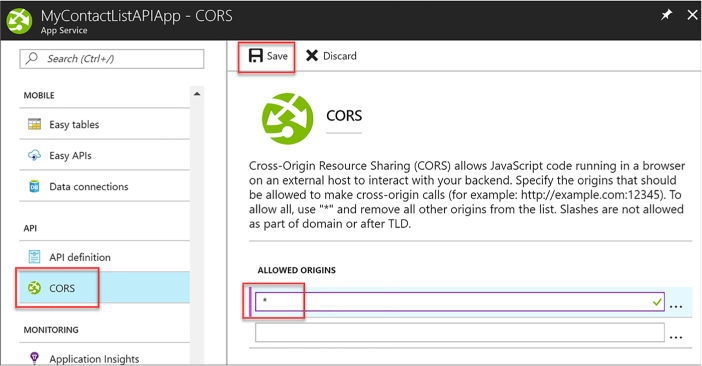

Enable CORS to allow clients to consume API and Swagger interface 439

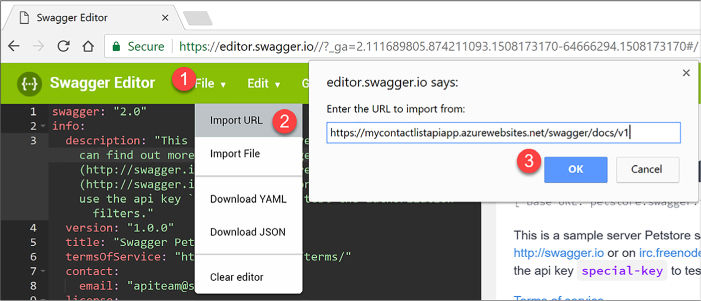

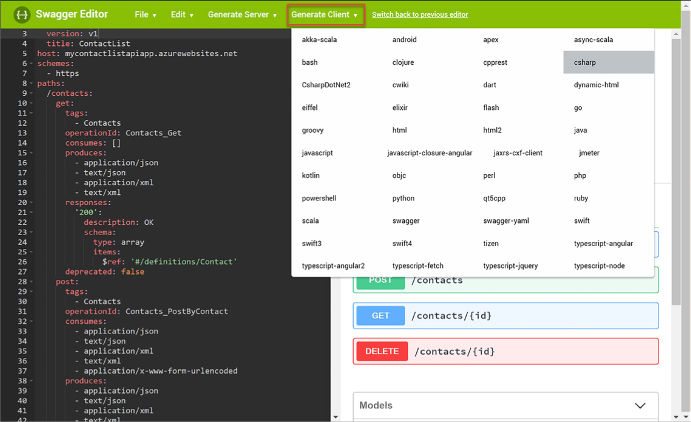

Use Swagger API metadata to generate client code for an API app 440

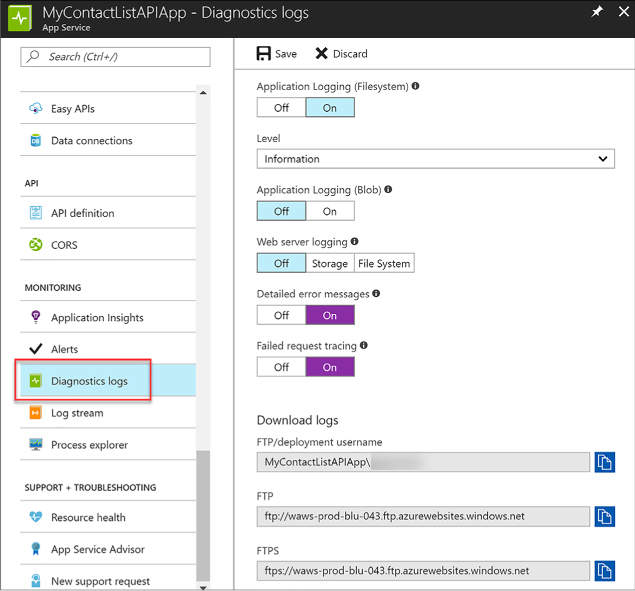

Enable and review diagnostics logs 443

Skill 4.3: Develop Azure App Service Logic Apps 445

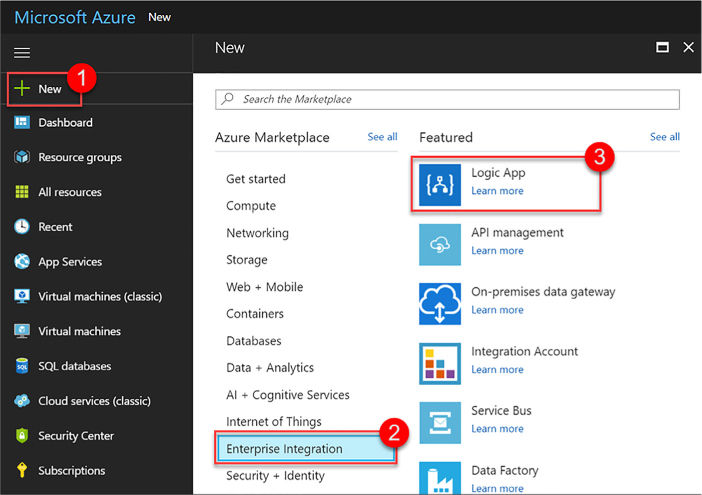

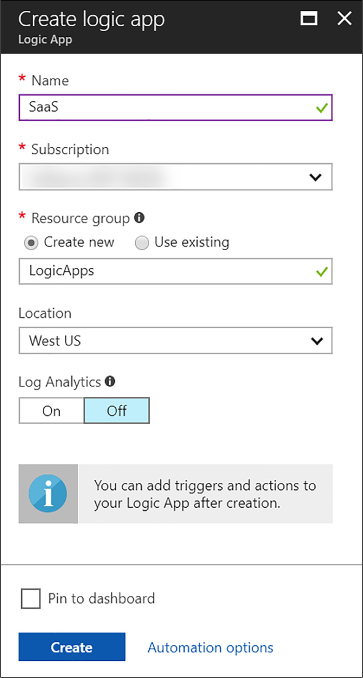

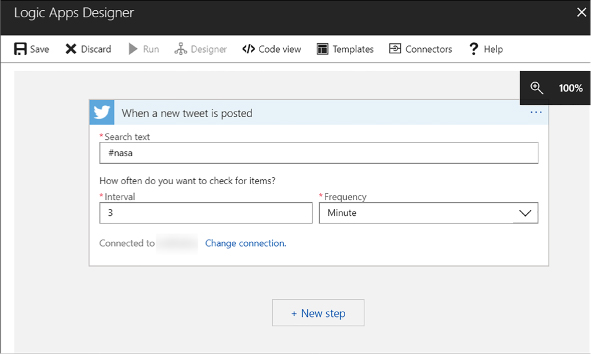

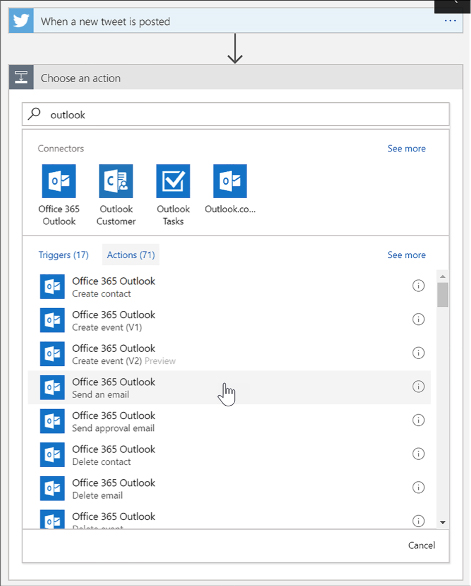

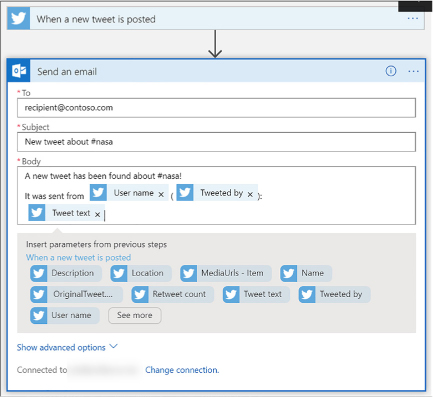

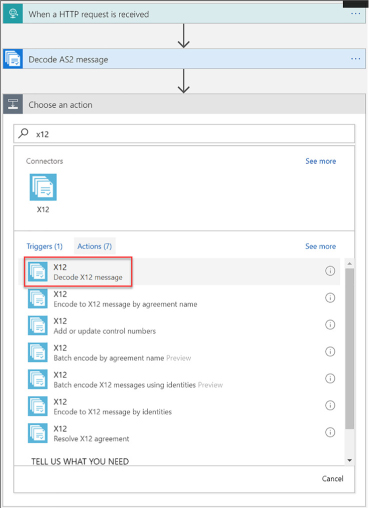

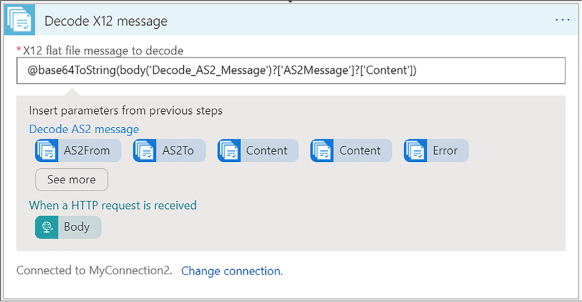

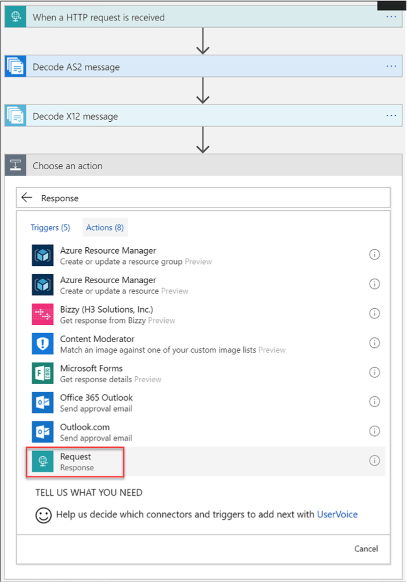

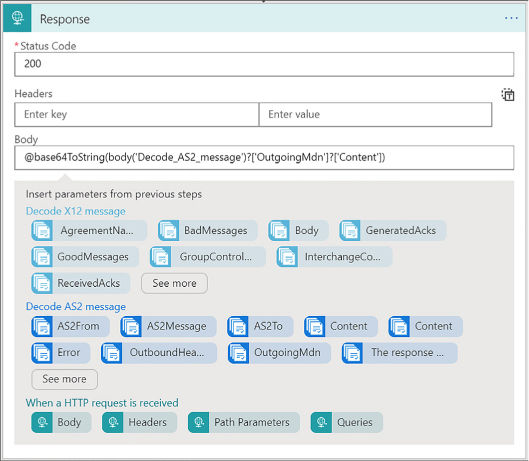

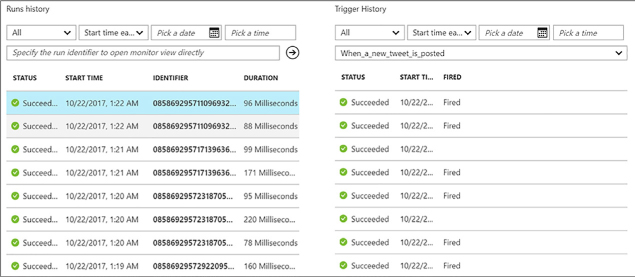

Create a Logic App connecting SaaS services 446

Create a Logic App with B2B capabilities 451

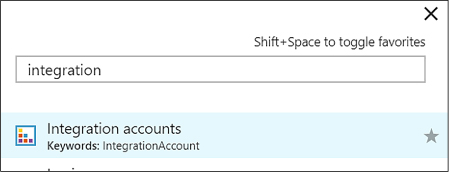

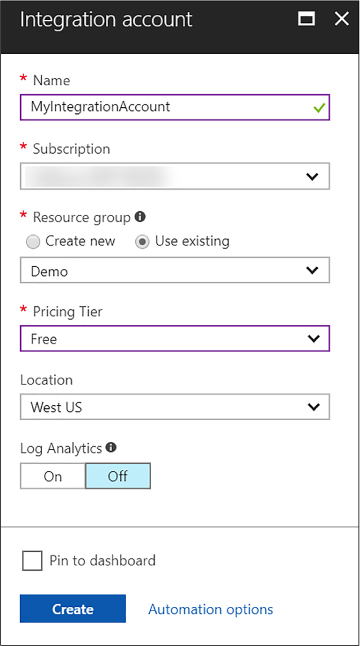

Create an integration account 451

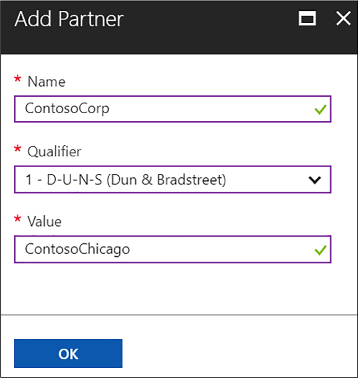

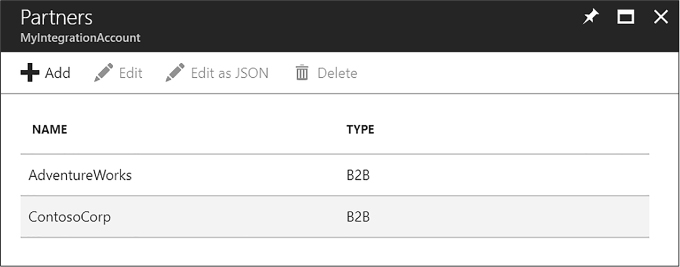

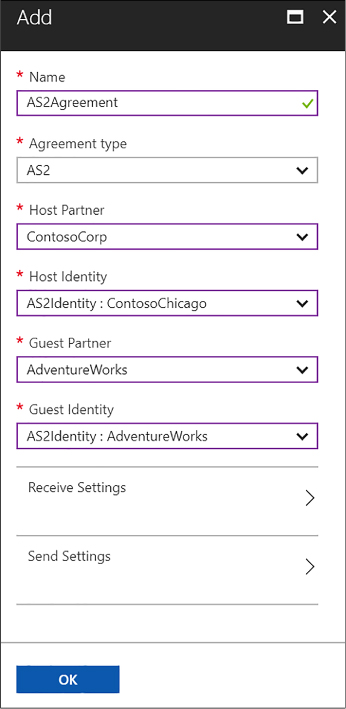

Add partners to your integration account 454

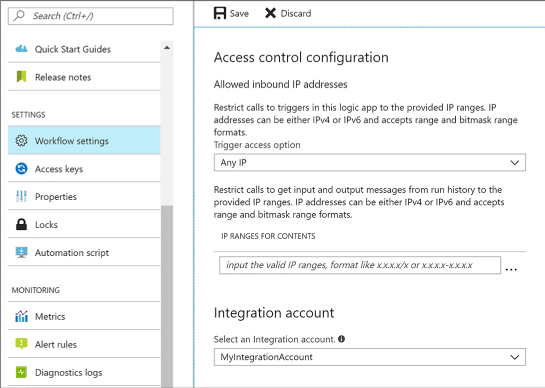

Link your Logic app to your Enterprise Integration account 457

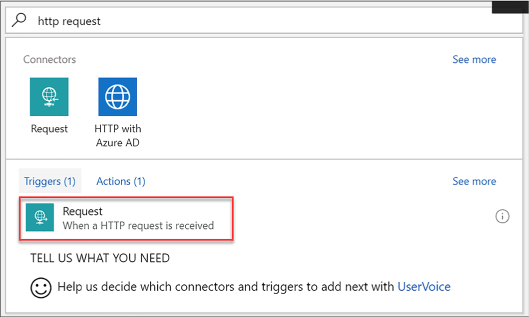

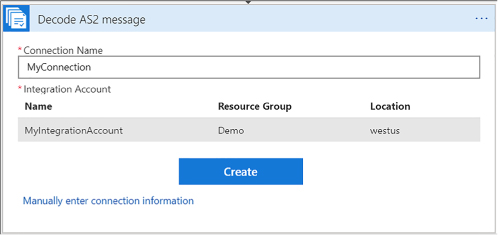

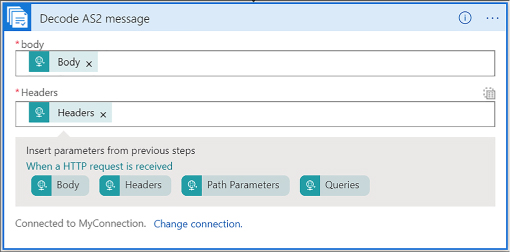

Use B2B features to receive data in a Logic App 458

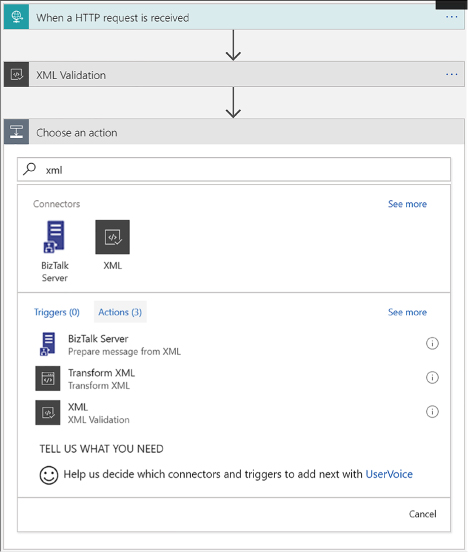

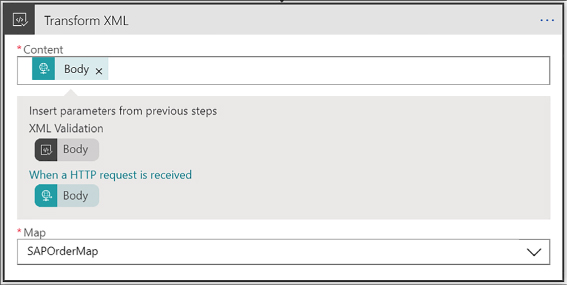

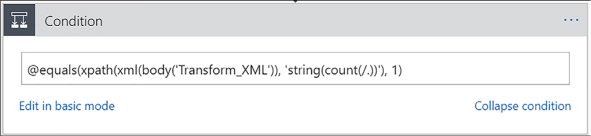

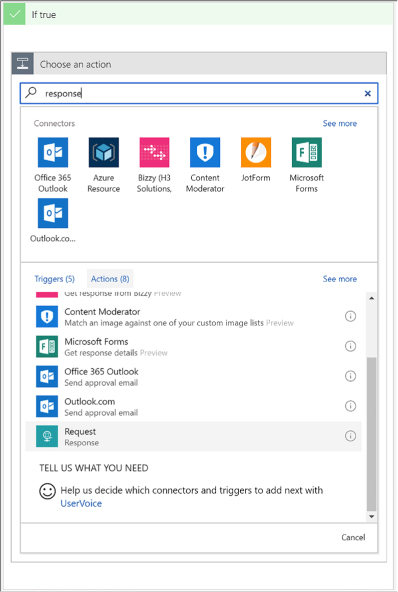

Create a Logic App with XML capabilities 466

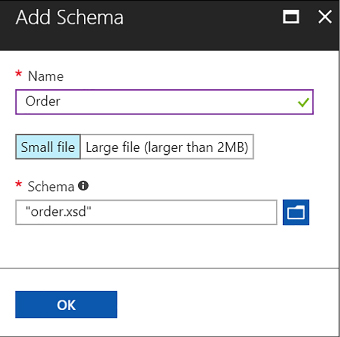

Add schemas to your integration account 467

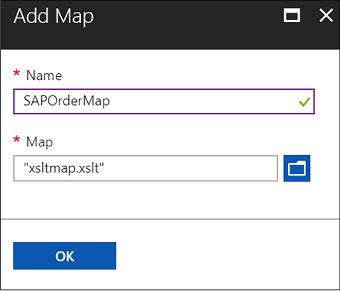

Add maps to your Integration account 467

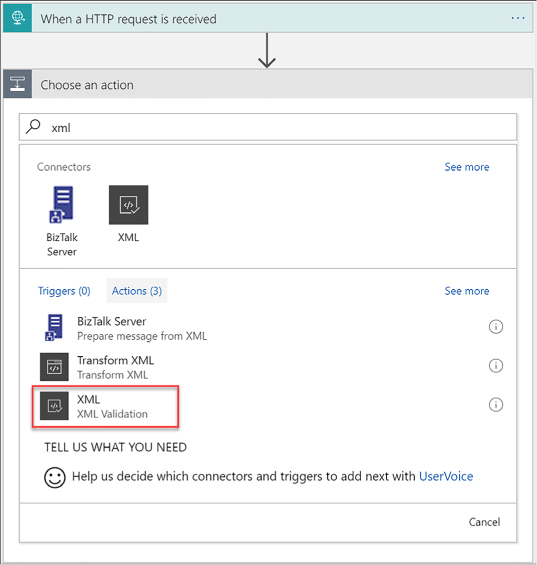

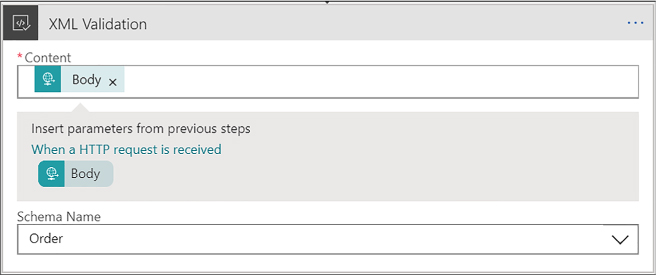

Add XML capabilities to the linked Logic App 468

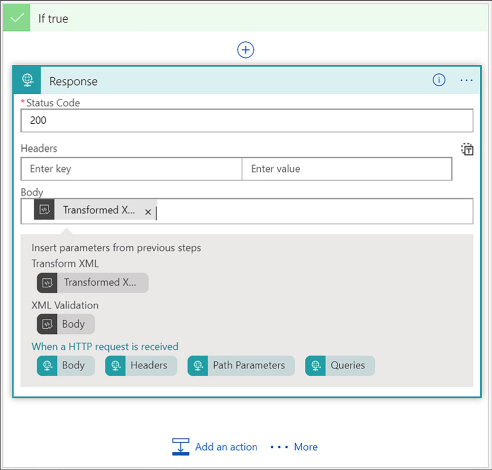

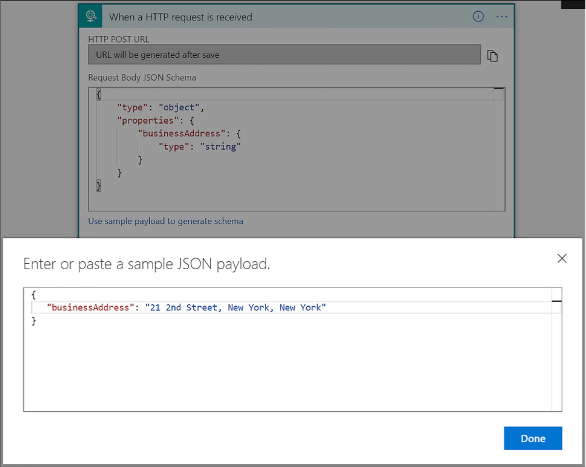

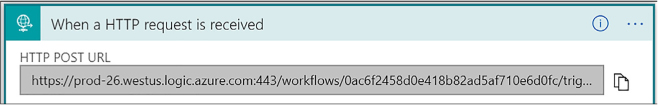

Trigger a Logic App from another app 476

Create an HTTP endpoint for your logic app 476

Create custom and long-running actions 478

Long-running action patterns 479

Skill 4.4: Develop Azure App Service Mobile Apps 482

Identify the target device platforms 483

Prepare your development environment 484

Deploy an Azure Mobile App Service 484

Configure your client application 487

Add authentication to a mobile app 487

Add offline sync to a mobile app 490

Add push notifications to a mobile app 492

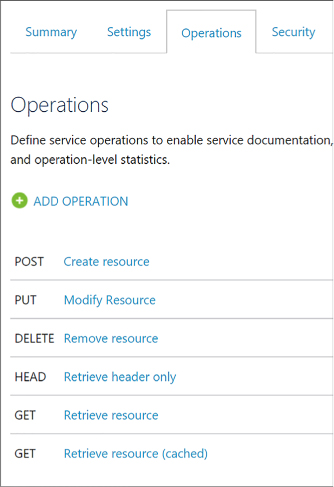

Skill 4.5: Implement API Management 493

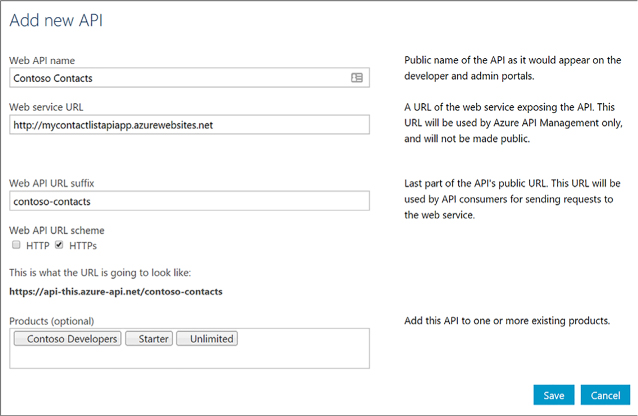

Create an API Management service 495

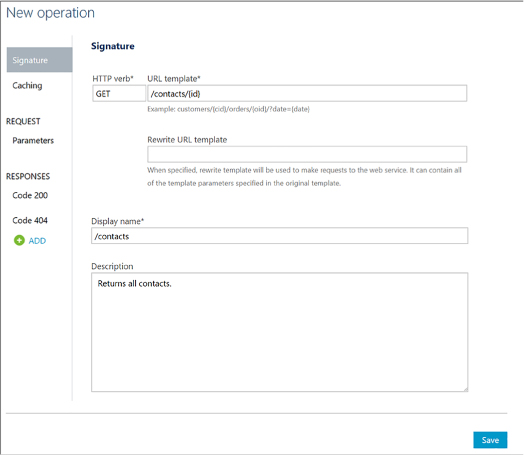

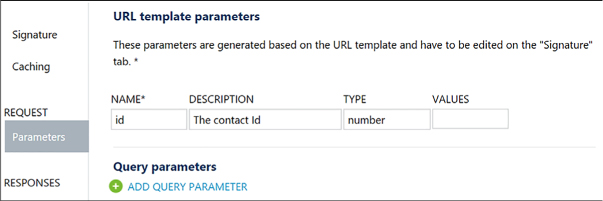

Add an operation to your API 497

Publish your product to make your API available 499

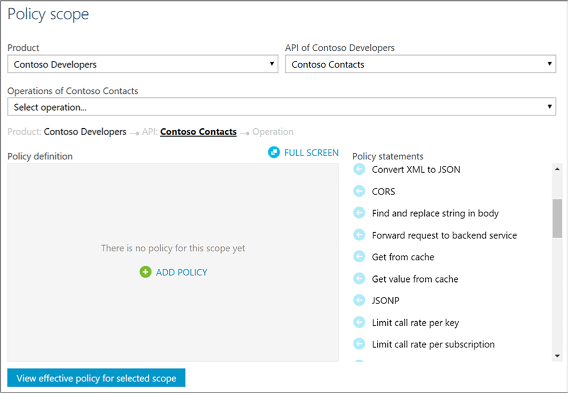

Configure API Management policies 499

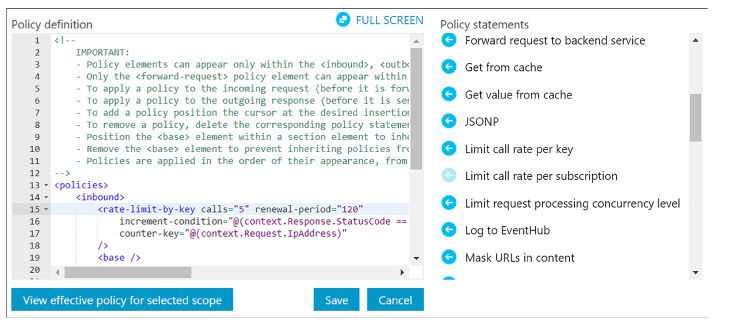

Protect APIs with rate limits 506

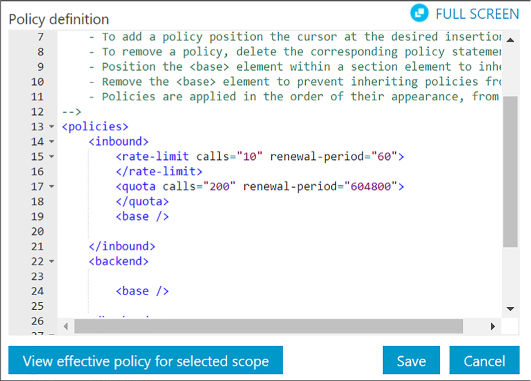

Create a product to scope rate limits to a group of APIs 506

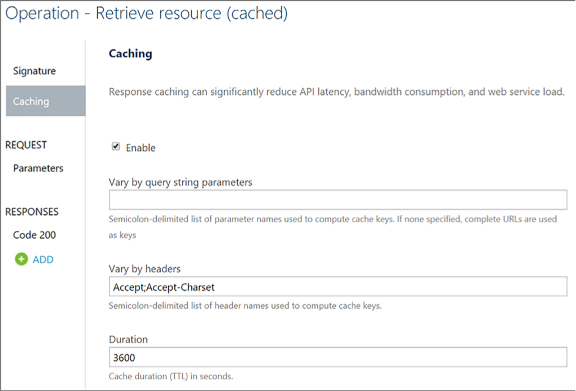

Add caching to improve performance 508

Customize the developer portal 513

Edit static page content and layout elements 513

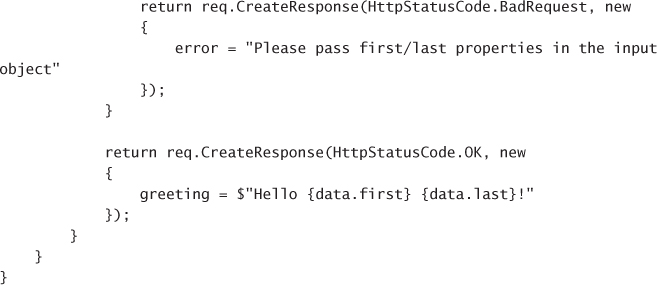

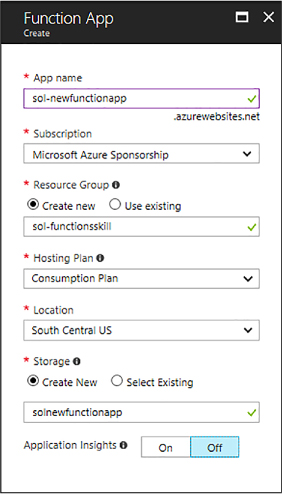

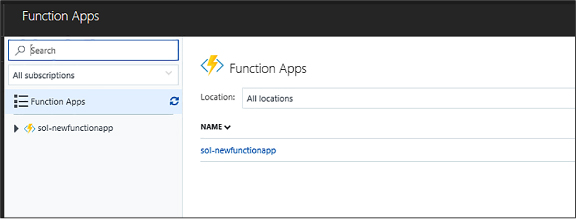

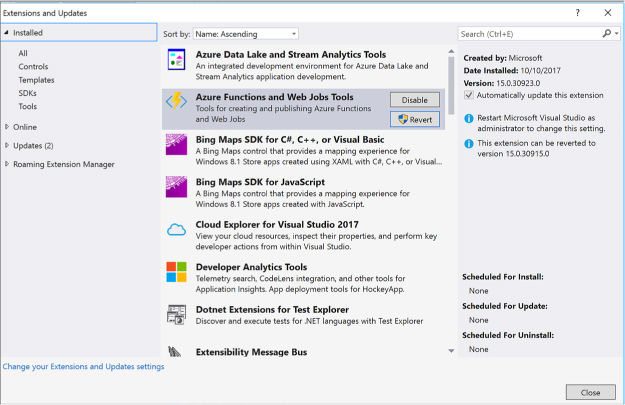

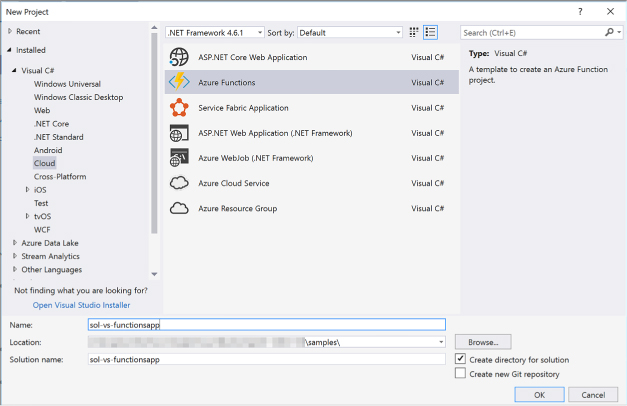

Skill 4.6: Implement Azure Functions and WebJobs 517

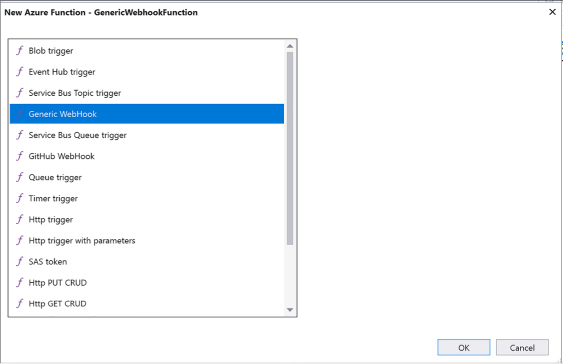

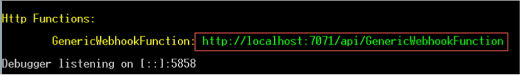

Implement a Webhook function 521

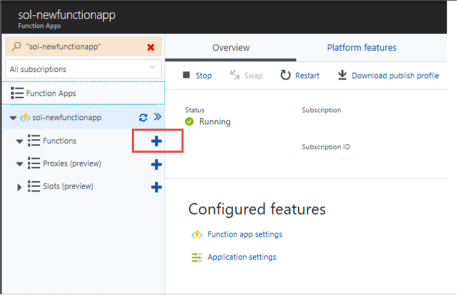

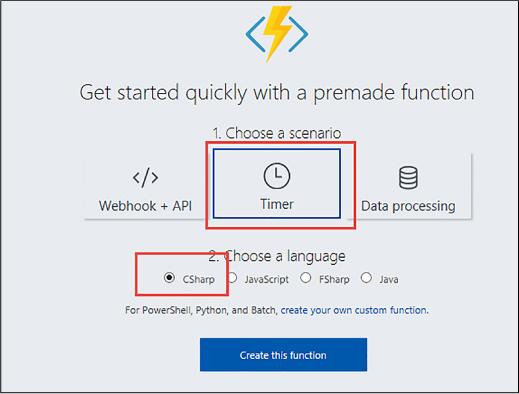

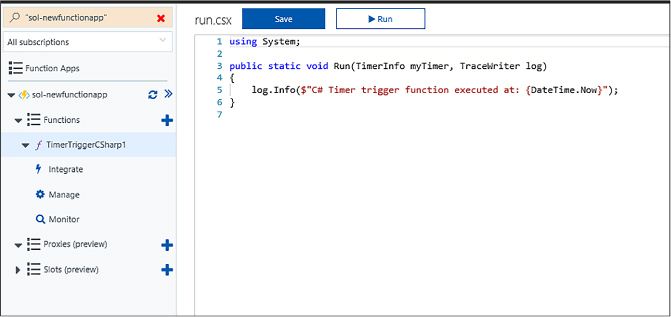

Create an event processing function 524

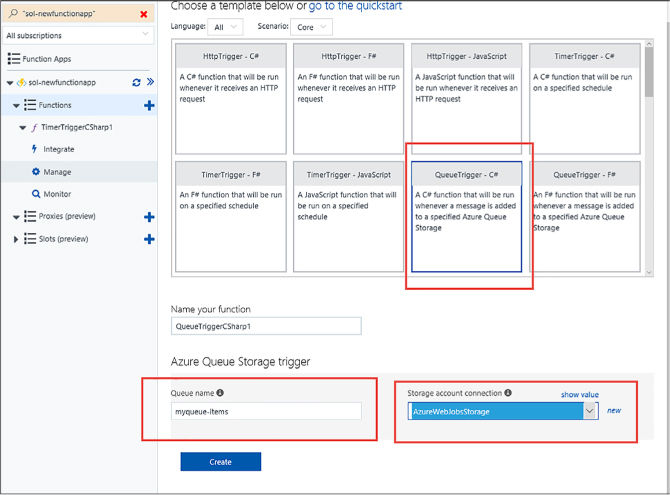

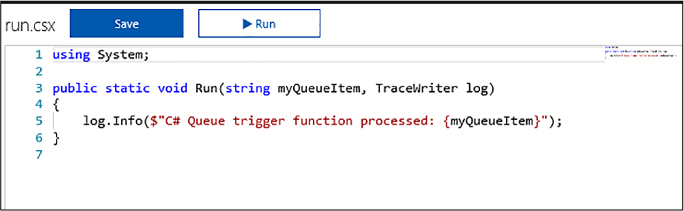

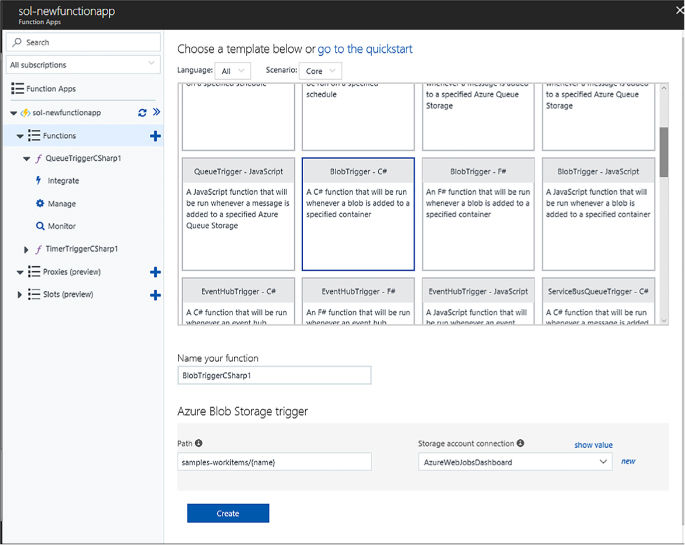

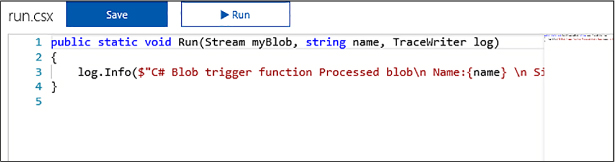

Implement an Azure-connected function 526

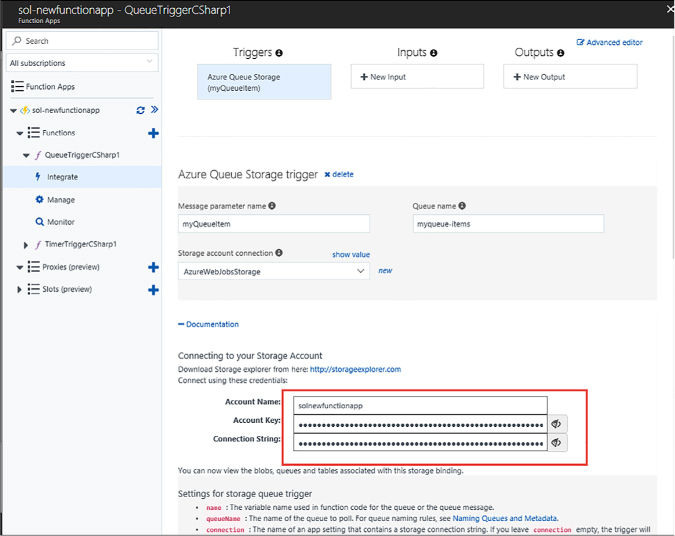

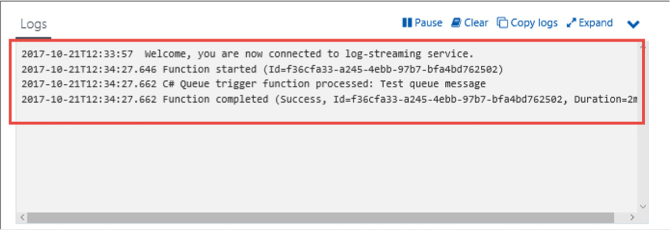

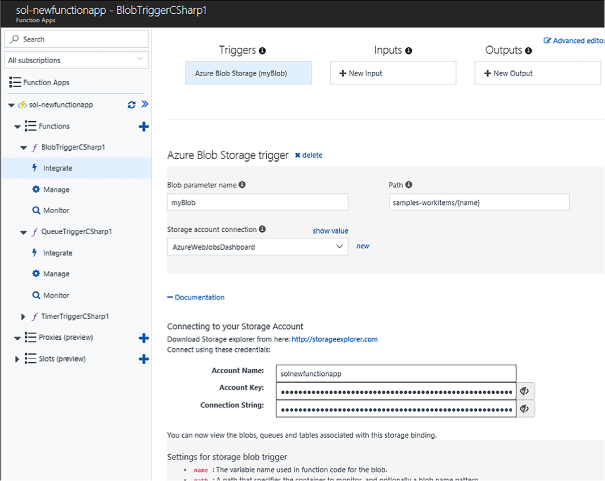

Integrate a function with storage 529

Design and implement a custom binding 532

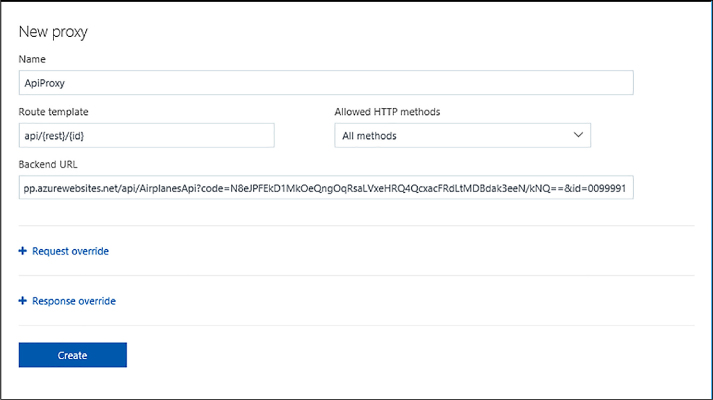

Implement and configure proxies 533

Integrate with App Service Plan 535

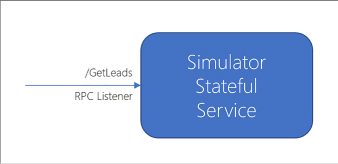

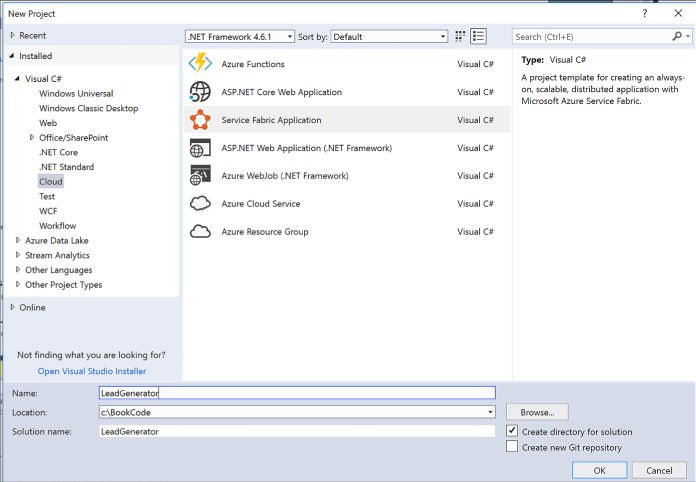

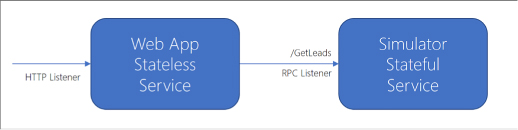

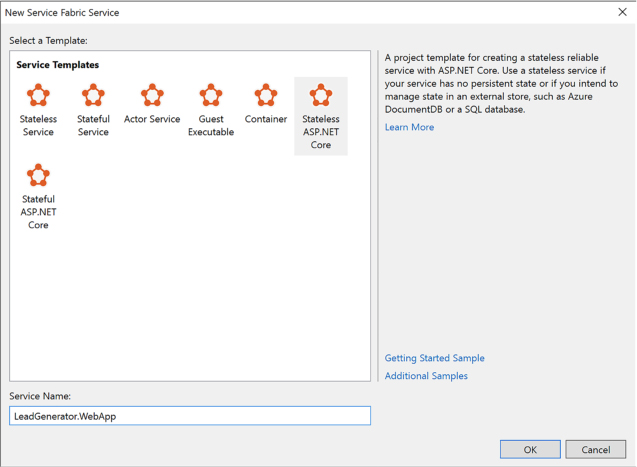

Skill 4.7: Design and Implement Azure Service Fabric apps 535

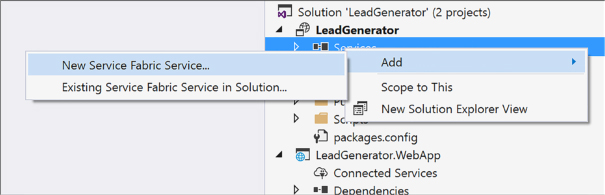

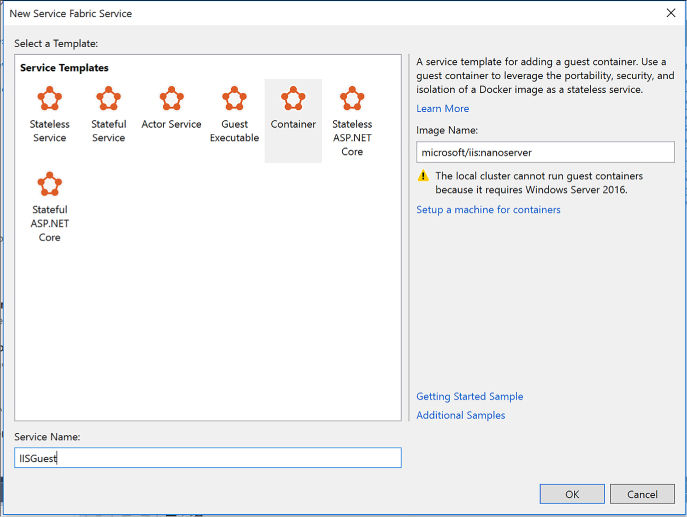

Create a Service Fabric application 536

Add a web front end to a Service Fabric application 541

Build an Actors-based service 546

Monitor and diagnose services 546

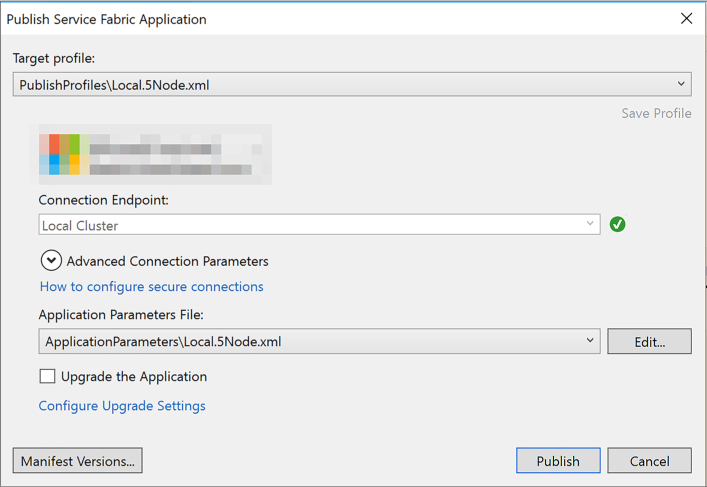

Deploy an application to a container 546

Migrate apps from cloud services 548

Scale a Service Fabric app 548

Create, secure, upgrade, and scale Service Fabric Cluster in Azure 550

Skill 4.8: Design and implement third-party Platform as a Service (PaaS) 550

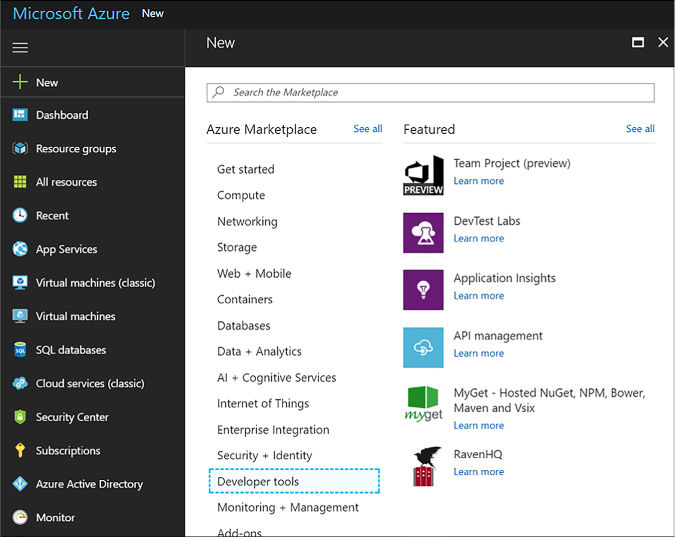

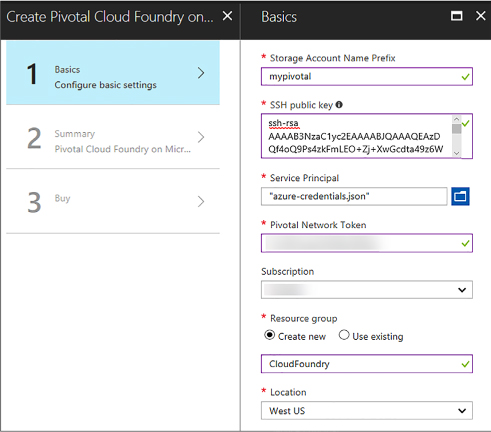

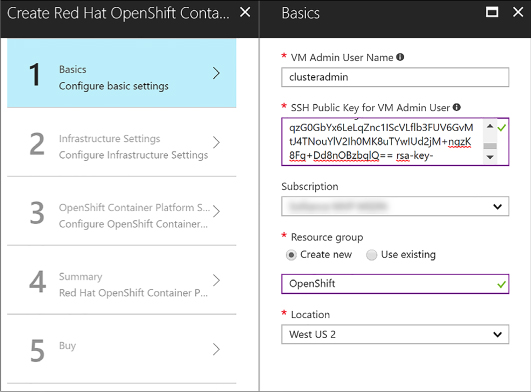

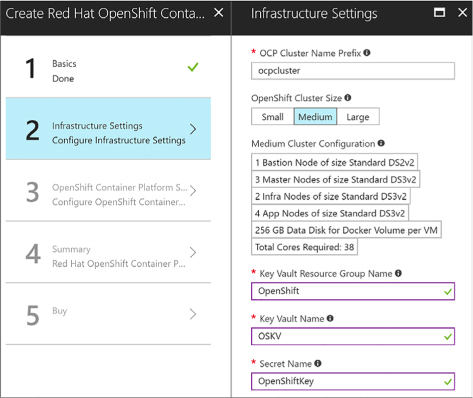

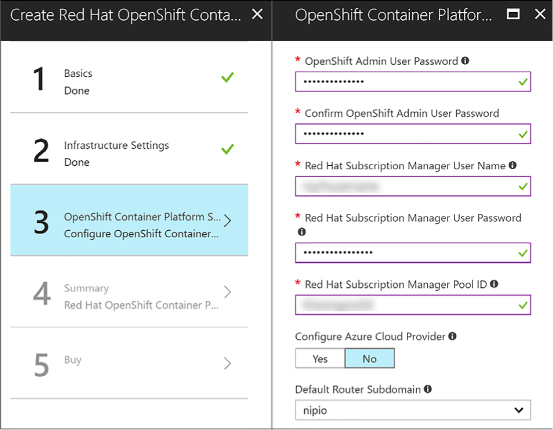

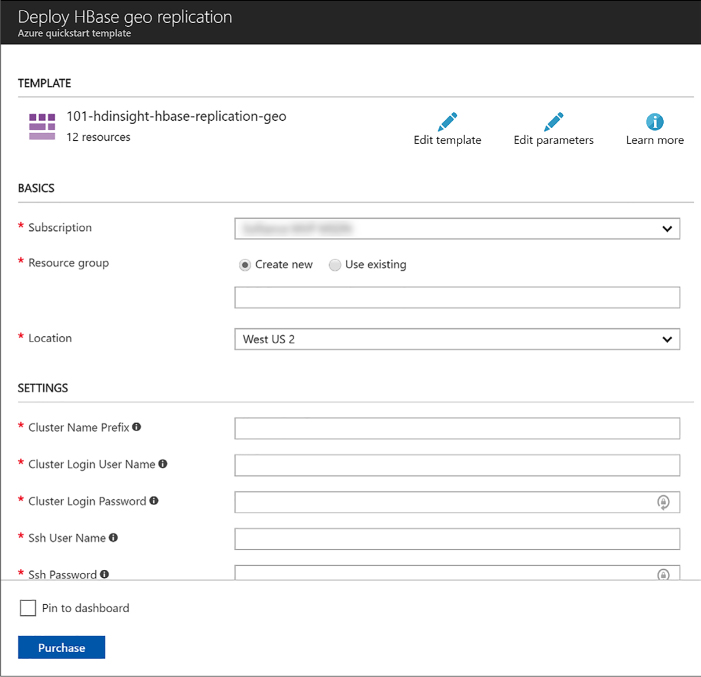

Provision applications by using Azure Quickstart Templates 557

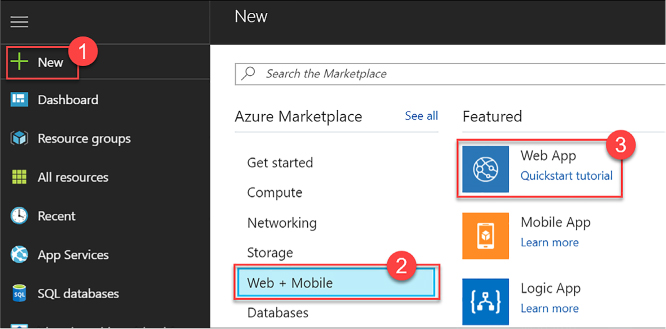

Build applications that leverage Azure Marketplace solutions and services 559

Skill 4.9: Design and implement DevOps 560

Instrument an application with telemetry 560

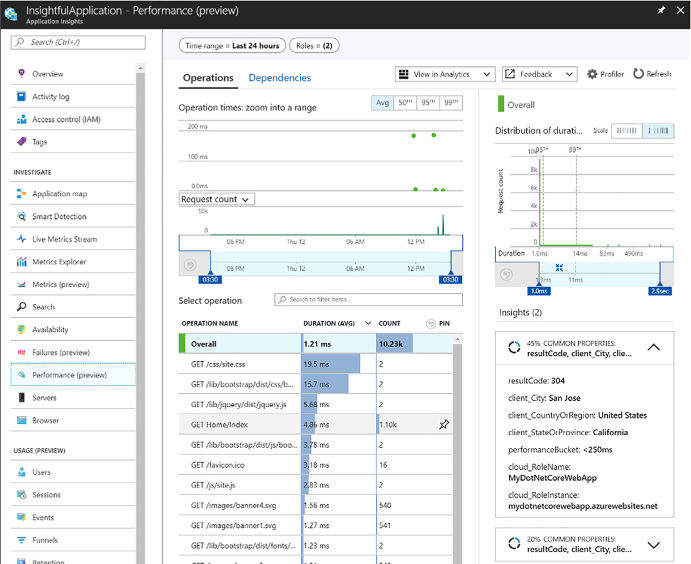

Discover application performance issues by using Application Insights 562

Deploy CI/CD with third-party platform tools (Jenkins, GitHub, Chef, Puppet, TeamCity) 572

Exam Ref 70-532 Developing

Microsoft Azure Solutions

2nd

Edition

Zoiner Tejada

Michele

Leroux Bustamante

Ike Ellis

![]()

Exam Ref 70-532 Developing Microsoft Azure Solutions, 2nd Edition

Published with the authorization of Microsoft Corporation by:

Pearson Education, Inc.

Copyright © 2018 by Pearson Education

All rights reserved. Printed in the United States of America. This publication is protected by copyright, and permission must be obtained from the publisher prior to any prohibited reproduction, storage in a retrieval system, or transmission in any form or by any means, electronic, mechanical, photocopying, recording, or likewise. For information regarding permissions, request forms, and the appropriate contacts within the Pearson Education Global Rights & Permissions Department, please visit www.pearsoned.com/permissions/. No patent liability is assumed with respect to the use of the information contained herein. Although every precaution has been taken in the preparation of this book, the publisher and author assume no responsibility for errors or omissions. Nor is any liability assumed for damages resulting from the use of the information contained herein.

ISBN-13:

978-1-5093-0459-2

ISBN-10: 1-5093-0459-X

Library

of Congress Control Number: 2017953300

1 18

Trademarks

Microsoft and the trademarks listed at https://www.microsoft.com on the “Trademarks” webpage are trademarks of the Microsoft group of companies. All other marks are property of their respective owners.

Warning and Disclaimer

Every effort has been made to make this book as complete and as accurate as possible, but no warranty or fitness is implied. The information provided is on an “as is” basis. The authors, the publisher, and Microsoft Corporation shall have neither liability nor responsibility to any person or entity with respect to any loss or damages arising from the information contained in this book or programs accompanying it.

Special Sales

For information about buying this title in bulk quantities, or for special sales opportunities (which may include electronic versions; custom cover designs; and content particular to your business, training goals, marketing focus, or branding interests), please contact our corporate sales department at corpsales@pearsoned.com or (800) 382-3419.

For government sales inquiries, please contact governmentsales@pearsoned.com.

For questions about sales outside the U.S., please contact intlcs@pearson.com.

Editor-in-Chief

Greg

Wiegand

Acquisitions Editor

Laura Norman

Development Editor

Troy Mott

Managing

Editor

Sandra Schroeder

Senior Project Editor

Tracey Croom

Editorial Production

Backstop Media

Copy

Editor

Liv Bainbridge

Indexer

Julie

Grady

Proofreader

Christina

Rudloff

Technical Editor

Jason Haley

Cover

Designer

Twist Creative, Seattle

CHAPTER 1 Create and manage virtual machines

CHAPTER 2 Design and implement a storage and data strategy

CHAPTER 3 Manage identity, application and network services

CHAPTER 4 Design and implement Azure PaaS compute and web and mobile services

Quick access to online references

Errata, updates, & book support

Chapter 1 Create and manage virtual machines

Skill 1.1: Deploy workloads on Azure ARM virtual machines

Skill 1.2: Perform configuration management

Configure VMs with Custom Script Extension

Scale up and scale down VM sizes

Deploy ARM VM Scale Sets (VMSS)

Skill 1.4: Design and implement ARM VM storage

Configure shared storage using Azure File storage

Implement ARM VMs with Standard and Premium Storage

Implement Azure Disk Encryption for Windows and Linux ARM VMs

Configure monitoring and diagnostics for a new VM

Configure monitoring and diagnostics for an existing VM

Skill 1.6: Manage ARM VM Availability

Combine the Load Balancer with availability sets

Skill 1.7: Design and implement DevTest Labs

Create and manage custom images and formulas

Configure a lab to include policies and procedures

Chapter 2 Design and implement a storage and data strategy

Skill 2.1: Implement Azure Storage blobs and Azure files

Store data using block and page blobs

Configure a Content Delivery Network with Azure Blob Storage

Create connections to files from on-premises or cloudbased Windows or, Linux machines

Skill 2.2: Implement Azure Storage tables, queues, and Azure Cosmos DB Table API

Designing, managing, and scaling table partitions

Retrieving a batch of messages

Choose between Azure Storage Tables and Azure Cosmos DB Table API

Skill 2.3: Manage access and monitor storage

Generate shared access signatures

Regenerate storage account keys

Configure and use Cross-Origin Resource Sharing

Skill 2.4: Implement Azure SQL databases

Choosing the appropriate database tier and performance level

Configuring and performing point in time recovery

Creating an offline secondary database

Creating an online secondary database

Creating an online secondary database

Import and export schema and data

Managed elastic pools, including DTUs and eDTUs

Implement graph database functionality in Azure SQL Database

Skill 2.5: Implement Azure Cosmos DB DocumentDB

Choose the Cosmos DB API surface

Create Cosmos DB API Database and Collections

Manage scaling of Cosmos DB, including managing partitioning, consistency, and RUs

Access Cosmos DB from REST interface

Skill 2.6: Implement Redis caching

Implement security and network isolation

Integrate Redis caching with ASP.NET session and cache providers

Skill 2.7: Implement Azure Search

Chapter 3 Manage identity, application and network services

Skill 3.1: Integrate an app with Azure AD

Preparing to integrate an app with Azure AD

Develop apps that use WS-Federation, SAML-P, OpenID Connect and OAuth endpoints

Query the directory using Microsoft Graph API, MFA and MFA API

Skill 3.2: Develop apps that use Azure AD B2C and Azure AD B2B

Design and implement apps that leverage social identity provider authentication

Skill 3.3: Manage Secrets using Azure Key Vault

Manage access, including tenants

Skill 3.4: Design and implement a messaging strategy

Develop and scale messaging solutions using Service Bus queues, topics, relays and Notification Hubs

Determine when to use Event Hubs, Service Bus, IoT Hub, Stream Analytics and Notification Hubs

Chapter 4 Design and implement Azure PaaS compute and web and mobile services

Skill 4.1: Design Azure App Service Web Apps

Define and manage App Service plans

Configure Web App certificates and custom domains

Manage Web Apps by using the API, Azure PowerShell, and Xplat-CLI

Implement diagnostics, monitoring, and analytics

Design and configure Web Apps for scale and resilience

Skill 4.2: Design Azure App Service API Apps

Automate API discovery by using Swashbuckle

Use Swagger API metadata to generate client code for an API app

Skill 4.3: Develop Azure App Service Logic Apps

Create a Logic App connecting SaaS services

Create a Logic App with B2B capabilities

Create a Logic App with XML capabilities

Trigger a Logic App from another app

Create custom and long-running actions

Skill 4.4: Develop Azure App Service Mobile Apps

Add authentication to a mobile app

Add offline sync to a mobile app

Add push notifications to a mobile app

Skill 4.5: Implement API Management

Configure API Management policies

Add caching to improve performance

Customize the developer portal

Skill 4.6: Implement Azure Functions and WebJobs

Create an event processing function

Implement an Azure-connected function

Integrate a function with storage

Design and implement a custom binding

Implement and configure proxies

Integrate with App Service Plan

Skill 4.7: Design and Implement Azure Service Fabric apps

Create a Service Fabric application

Add a web front end to a Service Fabric application

Deploy an application to a container

Migrate apps from cloud services

Create, secure, upgrade, and scale Service Fabric Cluster in Azure

Skill 4.8: Design and implement third-party Platform as a Service (PaaS)

Provision applications by using Azure Quickstart Templates

Build applications that leverage Azure Marketplace solutions and services

Skill 4.9: Design and implement DevOps

Instrument an application with telemetry

Discover application performance issues by using Application Insights

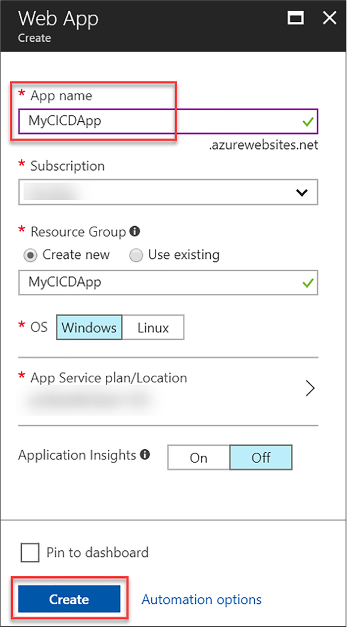

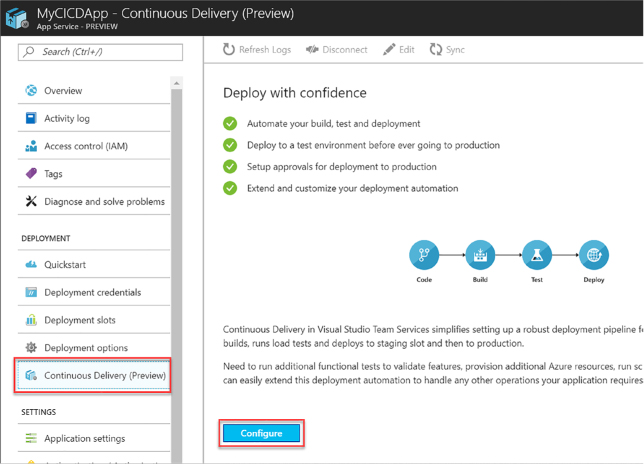

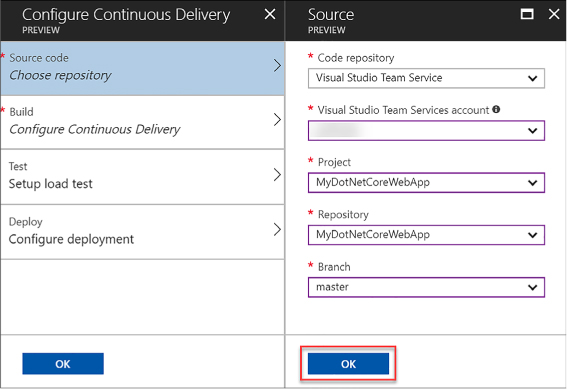

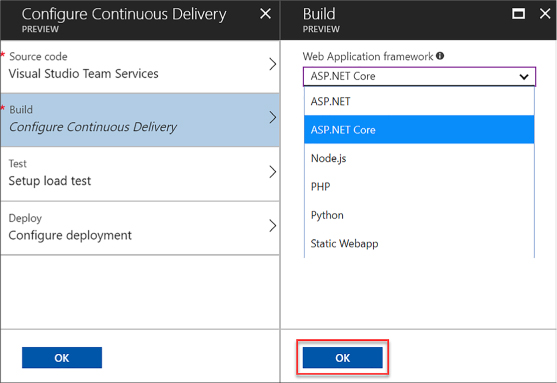

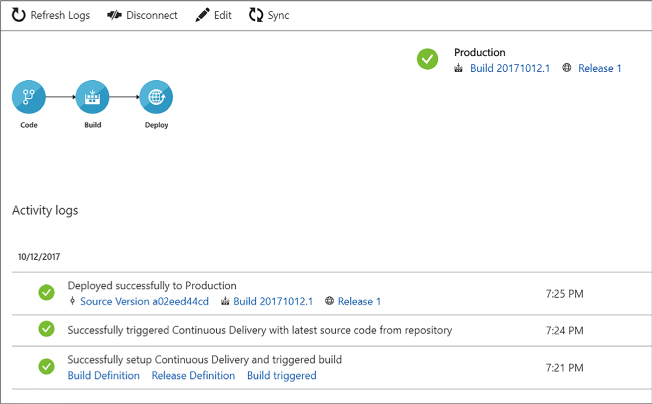

Deploy Visual Studio Team Services with continuous integration (CI) and continuous development (CD)

Deploy CI/CD with third-party platform tools (Jenkins, GitHub, Chef, Puppet, TeamCity)

What do you think of this book? We want to hear from you!

Microsoft is interested in hearing your feedback so we can continually improve our books and learning resources for you. To participate in a brief online survey, please visit:

The 70-532 exam focuses the skills necessary to develop software on the Microsoft Azure Cloud. It covers Infrastructure-as-a-Service (IaaS) offerings like Azure VMs and Platform-as-a-Service (PaaS) offerings like Azure Storage, Azure CosmosDB, Azure Active Directory, Azure Service Bus, Azure Event Hub, Azure App Services, Azure Service Fabric, Azure Functions and other relevant marketplace applications. This book will help get started with these and other features of Azure so that you can begin developing and deploying Azure applications.

This book is geared toward cloud application developers who focus on Azure as the target host environment. It covers choosing from Azure compute options for IaaS and Paas, incorporating storage and data platforms. It will help you choose when to use features such as Web Apps, API Apps, API Management, Logic Apps and Mobile Apps. It will explain your data storage options between Azure CosmosDB, Azure Redis Cache, Azure Search, and Azure SQL Database. It also covers how to secure applications with Azure Active Directory using B2C and B2B features for single sign-on based on OpenID Connect, OAuth2 and SAML-P protocols, and how to use Azure Vault to protect secrets.

This book covers every major topic area found on the exam, but it does not cover every exam question. Only the Microsoft exam team has access to the exam questions, and Microsoft regularly adds new questions to the exam, making it impossible to cover specific questions. You should consider this book a supplement to your relevant real-world experience and other study materials. If you encounter a topic in this book that you do not feel completely comfortable with, use the “Need more review?” links you’ll find in the text to find more information and take the time to research and study the topic. Great information is available on MSDN, TechNet, and in blogs and forums.

This book is organized by the “Skills measured” list published for the exam. The “Skills measured” list is available for each exam on the Microsoft Learning website: https://aka.ms/examlist. Each chapter in this book corresponds to a major topic area in the list, and the technical tasks in each topic area determine a chapter’s organization. If an exam covers six major topic areas, for example, the book will contain six chapters.

Microsoft certifications distinguish you by proving your command of a broad set of skills and experience with current Microsoft products and technologies. The exams and corresponding certifications are developed to validate your mastery of critical competencies as you design and develop, or implement and support, solutions with Microsoft products and technologies both on-premises and in the cloud. Certification brings a variety of benefits to the individual and to employers and organizations.

More Info All Microsoft Certifications

For information about Microsoft certifications, including a full list of available certifications, go to https://www.microsoft.com/learning.

Zoiner Tejada A book of this scope takes a village, and I’m honored to have received the support of one in making this second edition happen. My deepest thanks to the team at Solliance who helped make this possible: my co-authors Michele Leroux Bustamante and Ike Ellis and the hidden heroes, and Joel Hulen and Kyle Bunting helped us with research and coverage on critical topics as the scope of the book grew with the fast pace of Azure. Laura Norman, our editor, thank you for helping us navigate the path to completion with structure and compassion. To my wife Ashley Tejada, my eternal thanks for supporting me in this effort, the little things count and they don’t go unnoticed.

Michele Leroux Bustamante I want to thank Joel Hulen, Virgilio Esteves and Khaled Hikmat – who have been part of key Solliance projects in Azure, including this book – and this work and experience reflects in the guidance shared in the book. Thank you for being part of this journey! Thank you also to, Laura Norman, our editor – who was very supporting during challenging deadlines. A level head keeps us all sane. To my husband and son – thank you for tolerating the writing schedule – again. I owe you - again. Much love.

Ike Ellis First and foremost, I’d like to thank my wife, Margo Sloan, for her support in taking care of all the necessities of life while I wrote. Our editor, Laura Norman, had her hands full in wrangling three busy co-authors, and I’m very grateful for her diligence. I’m very grateful to my co-authors, Zoiner and Michele. It’s a joy to work with them on all of our combined projects.

Build your knowledge of Microsoft technologies with free expert-led online training from Microsoft Virtual Academy (MVA). MVA offers a comprehensive library of videos, live events, and more to help you learn the latest technologies and prepare for certification exams. You’ll find what you need here:

https://www.microsoftvirtualacademy.com

Throughout this book are addresses to webpages that the author has recommended you visit for more information. Some of these addresses (also known as URLs) can be painstaking to type into a web browser, so we’ve compiled all of them into a single list that readers of the print edition can refer to while they read.

Download the list at https://aka.ms/examref5322E/downloads.

The URLs are organized by chapter and heading. Every time you come across a URL in the book, find the hyperlink in the list to go directly to the webpage.

We’ve made every effort to ensure the accuracy of this book and its companion content. You can access updates to this book—in the form of a list of submitted errata and their related corrections—at:

https://aka.ms/examref5322E/errata

If you discover an error that is not already listed, please submit it to us at the same page.

If you need additional support, email Microsoft Press Book Support at mspinput@microsoft.com.

Please note that product support for Microsoft software and hardware is not offered through the previous addresses. For help with Microsoft software or hardware, go to https://support.microsoft.com.

At Microsoft Press, your satisfaction is our top priority, and your feedback our most valuable asset. Please tell us what you think of this book at:

We know you’re busy, so we’ve kept it short with just a few questions. Your answers go directly to the editors at Microsoft Press. (No personal information will be requested.) Thanks in advance for your input!

Let’s keep the conversation going! We’re on Twitter: http://twitter.com/MicrosoftPress.

Microsoft certification exams are a great way to build your resume and let the world know about your level of expertise. Certification exams validate your on-the-job experience and product knowledge. Although there is no substitute for on-the-job experience, preparation through study and hands-on practice can help you prepare for the exam. We recommend that you augment your exam preparation plan by using a combination of available study materials and courses. For example, you might use the Exam ref and another study guide for your “at home” preparation, and take a Microsoft Official Curriculum course for the classroom experience. Choose the combination that you think works best for you.

Note that this Exam Ref is based on publicly available information about the exam and the author’s experience. To safeguard the integrity of the exam, authors do not have access to the live exam.

Virtual machines (VMs) are part of the Microsoft Azure Infrastructure-as-a-Service (IaaS) offering. With VMs, you can deploy Windows Server and Linux-based workloads and have greater control over the infrastructure, your deployment topology, and configuration as compared to Platform-as-a-Service (PaaS) offerings such as Web Apps and API Apps. That means you can more easily migrate existing applications and VMs without modifying code or configuration settings, but still benefit from Azure features such as management through a centralized web-based portal, monitoring, and scaling.

Important: Have you read page xvii

It contains valuable information regarding the skills you need to pass the exam.

![]() Skill 1.1: Deploy workloads on

Azure ARM virtual machines

Skill 1.1: Deploy workloads on

Azure ARM virtual machines

![]() Skill 1.2: Perform configuration

management

Skill 1.2: Perform configuration

management

![]() Skill 1.4: Design and implement ARM

VM storage

Skill 1.4: Design and implement ARM

VM storage

![]() Skill 1.6: Manage ARM VM

availability

Skill 1.6: Manage ARM VM

availability

![]() Skill 1.7: Design and implement

DevTest Labs

Skill 1.7: Design and implement

DevTest Labs

Microsoft Azure ARM VMs can run more than just Windows and .NET applications. They provide support for running many forms of applications using various operating systems. This section describes where and how to analyze what is supported and how to deploy three different forms of VMs.

This skill covers how to:

![]() Identify

supported workloads

Identify

supported workloads

![]() Create

a Windows Server VM

Create

a Windows Server VM

![]() Create

a Linux VM

Create

a Linux VM

![]() Create

a SQL Server VM

Create

a SQL Server VM

A workload describes the nature of a solution, including consideration such as: whether it is an application that runs on a single machine or it requires a complex topology that prescribes the operating system used, the additional software installed, the performance requirements, and the networking environment. Azure enables you to deploy a wide variety of VM workloads, including:

![]() “Bare

bones” VM workloads that run various versions of Windows Client,

Windows Server and Linux (such as Debian, Red Hat, SUSE and Ubuntu)

“Bare

bones” VM workloads that run various versions of Windows Client,

Windows Server and Linux (such as Debian, Red Hat, SUSE and Ubuntu)

![]() Web

servers (such as Apache Tomcat and Jetty)

Web

servers (such as Apache Tomcat and Jetty)

![]() Data

science, database and big-data workloads (such as Microsoft SQL

Server, Data Science Virtual Machine, IBM DB2, Teradata, Couchbase,

Cloudera, and Hortonworks Data Platform)

Data

science, database and big-data workloads (such as Microsoft SQL

Server, Data Science Virtual Machine, IBM DB2, Teradata, Couchbase,

Cloudera, and Hortonworks Data Platform)

![]() Complete

application infrastructures (for example, those requiring server

farms or clusters like DC/OS, SharePoint, SQL Server AlwaysOn, and

SAP)

Complete

application infrastructures (for example, those requiring server

farms or clusters like DC/OS, SharePoint, SQL Server AlwaysOn, and

SAP)

![]() Workloads

that provide security and protection (such as antivirus, intrusion

detection systems, firewalls, data encryption, and key management)

Workloads

that provide security and protection (such as antivirus, intrusion

detection systems, firewalls, data encryption, and key management)

![]() Workloads

that support developer productivity (such as the Windows 10 client

operating system, Visual Studio, or the Java Development Kit)

Workloads

that support developer productivity (such as the Windows 10 client

operating system, Visual Studio, or the Java Development Kit)

There are two approaches to identifying supported Azure workloads. The first is to determine whether the workload is already explicitly supported and offered through the Azure Marketplace, which provides a large collection of free and for-pay solutions from Microsoft and third parties that deploy to VMs. The Marketplace also offers access to the VM Depot, which provides a large collection of community provided and maintained VMs. The VM configuration and all of the required software it contains on the disk (or disks) is called a VM image. The topology that deploys the VM and any supporting infrastructure is described in an Azure Resource Manager (ARM) template that is used by the Marketplace to provision and configure the required resources.

The second approach is to compare the requirements of the workload you want to deploy directly to the published capabilities of Azure VMs or, in some cases, to perform proof of concept deployments to measure whether the requirements can be met. The following is a representative, though not exhaustive, list of the requirements you typically need to take into consideration:

![]() CPU

and RAM memory requirements

CPU

and RAM memory requirements

![]() Disk

storage capacity requirements, in gigabytes (GBs)

Disk

storage capacity requirements, in gigabytes (GBs)

![]() Disk

performance requirements, usually in terms of input/output operations

per second (IOPS) and data throughput (typically in megabytes per

second)

Disk

performance requirements, usually in terms of input/output operations

per second (IOPS) and data throughput (typically in megabytes per

second)

![]() Operating

system compatibility

Operating

system compatibility

![]() Networking

requirements

Networking

requirements

![]() Availability

requirements

Availability

requirements

![]() Security

and compliance requirements

Security

and compliance requirements

This section covers what is required to deploy the “bare bones” VM (that is, one that has the operating system and minimal features installed) that can serve as the basis for your more complex workloads, and describes the options for deploying a pre-built workload from the Marketplace.

Fundamentally, there are two approaches to creating a new VM. You can upload a VM that you have built on-premises, or you can instantiate one from the pre-built images available in the Marketplace. This section focuses on the latter and defers coverage of the upload scenario until the next section.

To create a bare bones Windows Server VM in the portal, complete the following steps:

Navigate to the portal accessed via https://manage.windowsazure.com.

Select New on the command bar.

Within the Marketplace list, select the Compute option.

On the Compute blade, select the image for the version of Windows Server you want for your VM (such as Windows Server 2016 VM).

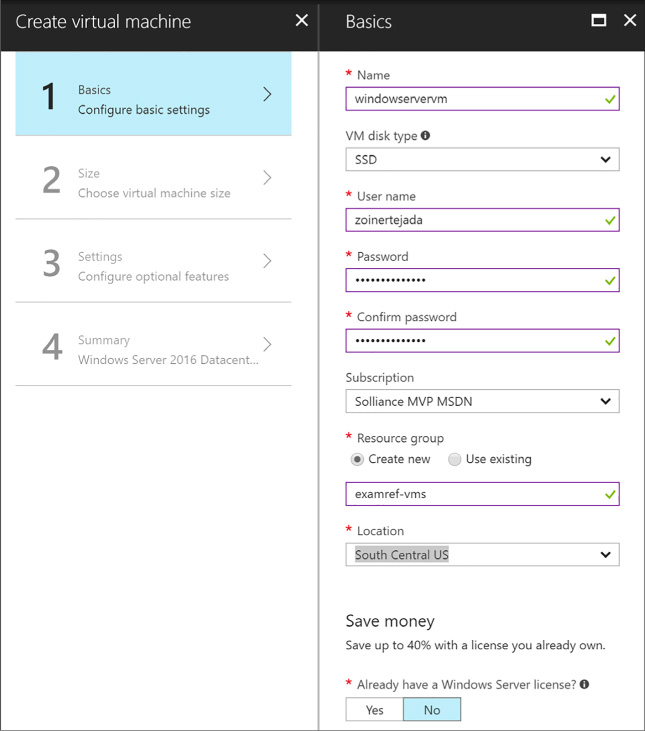

On the Basics blade, provide a name for your VM, the Disk Type, a User Name and Password, and choose the Subscription, Resource Group and Location into which you want to deploy (Figure 1-1).

FIGURE 1-1 The Basics blade

Select OK.

On the Choose A Size Blade, select the desired tier and size for your VM (Figure 1-2).

FIGURE 1-2 The Choose A Size blade

Choose Select.

On the Settings blade, leave the settings at their defaults and select OK.

On the Purchase blade, review the summary and select Purchase to deploy the VM.

To create a bare bones Linux VM in the portal, complete the following steps:

Navigate to the portal accessed via https://portal.azure.com.

Select New on the command bar.

Within the Marketplace list, select the Compute option.

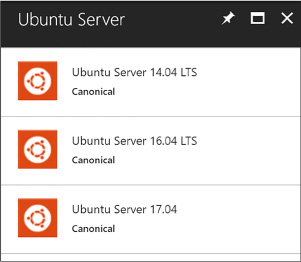

On the Compute blade, select the image for the version of Ubuntu Server (Figure 1-3) you want for your VM (such as Ubuntu Server 16.04 LTS).

FIGURE 1-3 The Ubuntu Server option

Select Create.

On the Basics blade, provide a name for your VM, the Disk Type, a User Name and Password (or SSH public key if preferred), and choose the Subscription, Resource Group and Location into which you want to deploy.

Select OK.

On the Choose a size blade, select the desired tier and size for your VM.

Choose select.

On the Settings blade, leave the settings at their defaults and select OK.

On the Purchase blade, review the summary and select Purchase to deploy the VM.

More Info: SSH Key Generation

To create the SSH public key that you need to provision your Linux VM, run ssh-keygen on a Mac OSX or Linux terminal, or, if you are running Windows, use PuTTYgen. A good reference, if you are not familiar with using SSH from Windows, is available at: https://docs.microsoft.com/azure/virtual-machines/linux/ssh-from-windows.

The steps for creating a VM that has SQL Server installed on top of Windows Server are identical to those described earlier for provisioning a Windows Server VM using the portal. The primary differences surface in the fourth step: instead of selecting a Windows Server from the Marketplace list, select a SQL Server option (such as SQL Server 2016 SP1 Enterprise) and follow the prompts to complete the configuration (such as the storage configuration, patching schedule and enablement of features like SQL Authentication and R Services) of the VM with SQL Server and to deploy the VM.

A number of configuration management tools are available for provisioning, configuring, and managing your VMs. In this section, you learn how to use Windows PowerShell Desired State Configuration (DSC) and the VM Agent (via custom script extensions) to perform configuration management tasks, including automating the process of provisioning VMs, deploying applications to those VMs, and automating configuration of those applications based on the environment, such as development, test, or production.

This skill covers how to:

![]() Automate

configuration management by using PowerShell Desired State

Configuration (DSC) and the VM Agent (using custom script extensions)

Automate

configuration management by using PowerShell Desired State

Configuration (DSC) and the VM Agent (using custom script extensions)

![]() Configure

VMs with Custom Script Extension

Configure

VMs with Custom Script Extension

![]() Use

PowerShell DSC

Use

PowerShell DSC

![]() Configure

VMs with DSC

Configure

VMs with DSC

![]() Enable

remote debugging

Enable

remote debugging

Before describing the details of using PowerShell DSC and the Custom Script Extension, this section provides some background on the relationship between these tools and the relevance of the Azure Virtual Machine Agent (VM Agent) and Azure virtual machine extensions (VM extensions).

When you create a new VM in the portal, the VM Agent is installed by default. The VM Agent is a lightweight process used for bootstrapping additional tools on the VM by way of installing, configuring, and managing VM extensions. VM extensions can be added through the portal, but they are also commonly installed with Windows PowerShell cmdlets or through the Azure Cross Platform Command Line Interface (Azure CLI).

More Info: Azure CLI

Azure CLI is an open source project providing the same functionality as the portal via the command line. It is written in JavaScript and requires Node.js and enables management of Azure resources in a cross-platform fashion (from macOS, Windows and Linux). For more details, see https://docs.microsoft.com/cli/azure/overview.

With the VM Agent installed, you can add VM extensions. Popular VM extensions include the following:

![]() PowerShell

Desired State Configuration (for Windows VMs)

PowerShell

Desired State Configuration (for Windows VMs)

![]() Custom

Script Extension (for Windows or Linux)

Custom

Script Extension (for Windows or Linux)

![]() Team

Services Agent (for Windows or Linux VMs)

Team

Services Agent (for Windows or Linux VMs)

![]() Microsoft

Antimalware Agent (for Windows VMs)

Microsoft

Antimalware Agent (for Windows VMs)

![]() Network

Watcher Agent (for Windows or Linux VMs)

Network

Watcher Agent (for Windows or Linux VMs)

![]() Octopus

Deploy Tentacle Agent (for Windows VMs)

Octopus

Deploy Tentacle Agent (for Windows VMs)

![]() Docker

extension (for Linux VMs)

Docker

extension (for Linux VMs)

![]() Puppet

Agent (for Windows VMs)

Puppet

Agent (for Windows VMs)

![]() Chef

extension (for Windows or Linux)

Chef

extension (for Windows or Linux)

You can add VM extensions as you create the VM through the portal, as well as run them using the Azure CLI, PowerShell and Azure Resource Manager templates.

More Info: Additional Extensions

There are additional extensions for deployment, debugging, security, and more. For more details, see https://docs.microsoft.com/en-us/azure/virtual-machines/windows/extensions-features#common-vm-extensions-reference.

Custom Script Extension makes it possible to automatically download files from Azure Storage and run Windows PowerShell (on Windows VMs) or Shell scripts (on Linux VMs) to copy files and otherwise configure the VM. This can be done when the VM is being created or when it is already running. You can do this from the portal or from a Windows PowerShell command line interface, the Azure CLI, or by using ARM templates.

Create a Windows Server VM following the steps presented in the earlier section, “Creating a Windows Server VM.” After creating the VM, complete the following steps to set up the Custom Script Extension:

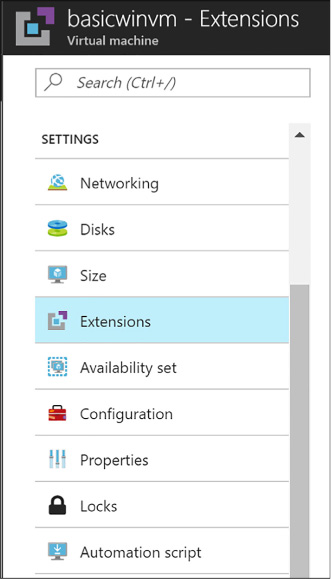

Navigate to the blade for your VM in the portal accessed via https://portal.azure.com.

From the menu, scroll down to the Settings section, and select Extensions (Figure 1-4).

FIGURE 1-4 The Extensions option

On the Extensions blade, select Add on the command bar.

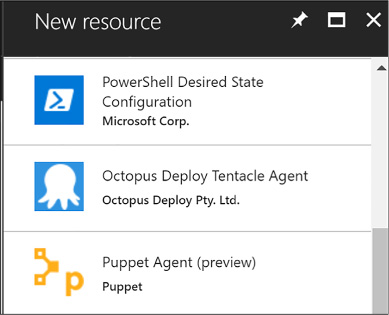

From the New Resource blade, select Custom Script Extension (Figure 1-5).

FIGURE 1-5 The New Resource blade

On the Custom Script blade, select Create.

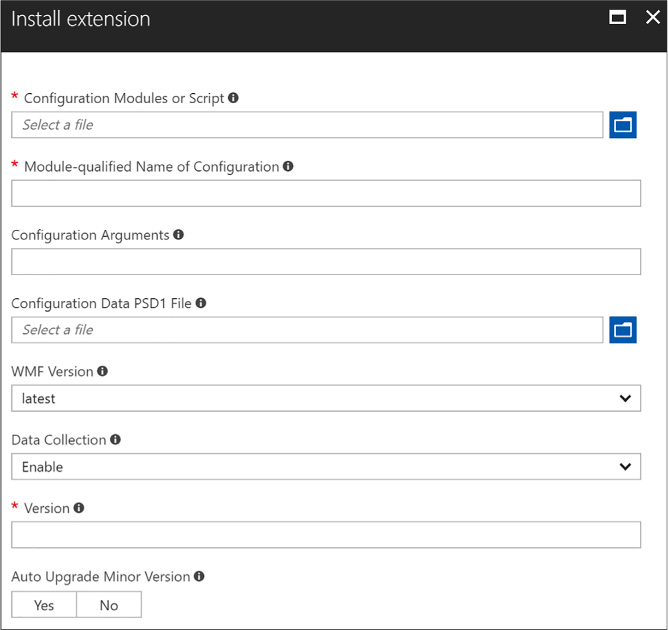

On the Install Extension blade (Figure 1-6), select the Folder button and choose the .ps1 file containing the script you want to run when the VM starts. Optionally, provide arguments. The Version of DSC is required, for example 2.21.

FIGURE 1-6 The Install Extenson blade

Select OK.

More Info: Configuring the Custom Script Extension

You can also configure the Custom Script Extension using the Set-AzureRmVMCustomScriptExtension Windows PowerShell cmdlet (see https://docs.microsoft.com/en-us/azure/virtual-machines/windows/extensions-customscript#powershell-deployment) or via the “az vm extension set” Azure CLI command (see https://docs.microsoft.com/en-us/azure/virtual-machines/linux/extensions-customscript#azure-cli).

PowerShell Desired State Configuration (DSC) is a management platform introduced with Windows PowerShell 4.0, available as a Windows feature on Windows Server 2012 R2. PowerShell DSC is implemented using Windows PowerShell. You can use it to configure a set of servers (or nodes) declaratively, providing a description of the desired state for each node in the system topology. You can describe which application resources to add, remove, or update based on the current state of a server node. The easy, declarative syntax simplifies configuration management tasks.

With PowerShell DSC, you can instruct a VM to self-provision to a desired state on first deployment and then have it automatically update if there is “configuration drift.” Configuration drift happens when the desired state of the node no longer matches what is described by DSC.

Resources are core building blocks for DSC. A script can describe the target state of one or more resources, such as a Windows feature, the Registry, the file system, and other services. For example, a DSC script can describe the following intentions:

![]() Manage

server roles and Windows features

Manage

server roles and Windows features

![]() Manage

registry keys

Manage

registry keys

![]() Copy

files and folders

Copy

files and folders

![]() Deploy

software

Deploy

software

![]() Run

Windows PowerShell scripts

Run

Windows PowerShell scripts

More Info: DSC Built-in Resources

For a more extensive list of DSC resources for both Windows and Linux, see: https://msdn.microsoft.com/en-us/powershell/dsc/resources.

DSC extends Windows PowerShell 4.0 with a Configuration keyword used to express the desired state of one or more target nodes. For example, the following configuration indicates that a server should have IIS enabled during provisioning:

Configuration

EnableIIS

{

Node WebServer

{

WindowsFeature

IIS {

Ensure

= "Present",

Name

= "Web-Server"

}

}

}

The Configuration keyword can wrap one or more Node elements, each describing the desired configuration state of one or more resources on the node. In the preceding example, the server node is named WebServer, the contents of which indicate that the Windows Feature “IIS” should be configured, and that the Web-Server component of IIS should be confirmed present or installed if absent.

Exam Tip

After the DSC runs, a Managed Object Format (MOF) file is created, which is a standard endorsed by the Distributed Management Task Force (DTMF). See: http://www.dmtf.org/education/mof.

Many resources are predefined and exposed to DSC; however, you may also require extended capabilities that warrant creating a custom resource for DSC configuration. You can implement custom resources by creating a Windows PowerShell module. The module includes a MOF schema, a script module, and a module manifest.

More Info: Custom DSC Resources

For more information on building custom DSC resources, see https://msdn.microsoft.com/en-us/powershell/dsc/authoringResource.

More Info: DSC Resources in the Powershell Gallery

The Windows PowerShell team released a number of DSC resources to simplify working with Active Directory, SQL Server, and IIS. See the PowerShell Gallery at http://www.powershellgallery.com/items and search for items in the DSC Resource category.

Local Configuration Manager is the engine of DSC, which runs on all target nodes and enables the following scenarios for DSC:

![]() Pushing

configurations to bootstrap a target node

Pushing

configurations to bootstrap a target node

![]() Pulling

configuration from a specified location to bootstrap or update a

target node

Pulling

configuration from a specified location to bootstrap or update a

target node

![]() Applying

the configuration defined in the MOF file to the target node, either

during the bootstrapping stage or to repair configuration drift

Applying

the configuration defined in the MOF file to the target node, either

during the bootstrapping stage or to repair configuration drift

Local Configuration Manager runs invoke the configuration specified by your DSC configuration file. You can optionally configure Local Configuration Manager to apply new configurations only, to report differences resulting from configuration drift, or to automatically correct configuration drift.

More Info: Local Configuration Manager

For additional details on the configuration settings available for Local Configuration Manager, see https://msdn.microsoft.com/en-us/powershell/dsc/metaConfig.

To configure a VM using DSC, first create a Windows PowerShell script that describes the desired configuration state. As discussed earlier, this involves selecting resources to configure and providing the appropriate settings. When you have a configuration script, you can use one of a number of methods to initialize a VM to run the script on startup.

Use any text editor to create a Windows PowerShell file. Include a collection of resources to configure, for one or more nodes, in the file. If you are copying files as part of the node configuration, they should be available in the specified source path, and a target path should also be specified. For example, the following script ensures IIS is enabled and copies a single file to the default website:

configuration

DeployWebPage

{

node

("localhost")

{

WindowsFeature IIS

{

Ensure

= "Present"

Name

= "Web-Server"

}

File

WebPage

{

Ensure =

"Present"

DestinationPath

= "C:\inetpub\wwwroot\index.html"

Force =

$true

Type =

"File"

Contents =

'<html><body><h1>Hello Web

Page!</h1></body></html>'

}

}

}

After creating your configuration script and allocating any resources it requires, you need to produce a compressed zip file containing the configuration script in the root, along with any resources needed by the script. You create the zip and copy it up to Azure Storage in one command using Publish-AzureRMVmDscConfiguration using Windows PowerShell and then apply the configuration with SetAzureRmVmDscExtension.

Assume you have the following configuration script in the file iisInstall.ps1 on your local machine:

configuration

IISInstall

{

node "localhost"

{

WindowsFeature

IIS

{

Ensure

= "Present"

Name

= "Web-Server"

}

}

}

You would then run the following PowerShell cmdlets to upload and apply the configuration:

#Load

the Azure PowerShell cmdlets

Import-Module Azure

#Login

to your Azure Account and select your subscription (if your account

has multiple

subscriptions)

Login-AzureRmAccount

Set-AzureRmContext -SubscriptionId <YourSubscriptionId>

$resourceGroup = "dscdemogroup"

$vmName = "myVM"

$storageName = "demostorage"

#Publish the

configuration script into Azure storage

Publish-AzureRmVMDscConfiguration -ConfigurationPath .\iisInstall.ps1

-ResourceGroupName $resourceGroup

-StorageAccountName $storageName -force

#Configure the VM to

run the DSC configuration

Set-AzureRmVmDscExtension -Version

2.21

-ResourceGroupName $resourceGroup

-VMName $vmName

-ArchiveStorageAccountName

$storageName

-ArchiveBlobName

iisInstall.ps1.zip -AutoUpdate:$true -ConfigurationName

"IISInstall"

Before configuring an existing VM using the Azure Portal, you will need to create a ZIP package around your PowerShell script. To do so, run the Publish-AzureVMDscConfiguration cmdlet providing the path to your PowerShell script and the name of that destination zip file to create, for example:

Publish-AzureVMDscConfiguration

.\iisInstall.ps1 -ConfigurationArchivePath .\iisInstall.

ps1.zip

Then you can proceed in the Azure Portal. To configure an existing VM in the portal, complete the following steps:

Navigate to the blade for your VM in the portal accessed via https://portal.azure.com.

From the menu, scroll down to the Settings section, and select Extensions.

On the Extensions blade, select Add on the command bar.

From the New Resource blade, select PowerShell Desired State Configuration.

On the PowerShell Desired State Configuration blade, select Create.

On the Install Extension blade, select the folder button and choose the zip file containing the DSC configuration.

Provide the module-qualified name of the configuration in your .ps1 that you want to apply. This value is constructed from the name of your .ps1 file including the extension, a slash (\) and the name of the configuration as it appears within the .ps1 file. For example, if your file is iisInstall.ps1 and you have a configuration named IISInstall, you would set this to “iisInstall.ps1\IISInstall”.

Optionally provide any Data PSD1 file and configuration arguments required by your script.

Specify the version of the DSC extension (Figure 1-7) you want to install (e.g., 2.21).

FIGURE 1-7 Using the Install Extension

Select OK.

You can use remote debugging to debug applications running on your Windows VMs. Server Explorer in Visual Studio shows your VMs in a list, and from there you can enable remote debugging and attach to a process following these steps:

In Visual Studio, open Cloud Explorer.

Expand the node of the subscription containing your VM, and then expand the Virtual Machines node.

Right-click the VM you want to debug and select Enable Debugging. Click Yes in the dialog box to confirm.

This installs a remote debugging extension to the VM so that you can debug remotely. The progress will be shown in the Microsoft Azure Activity Log. After the debugging extension is installed, you can continue.

Right-click the virtual machine again and select Attach Debugger. This presents a list of processes in the Attach To Process dialog box.

Select the processes you want to debug on the VM and click Attach. To debug a web application, select w3wp.exe, for example.

More Info: Debugging Processes in Visual Studio

For additional information about debugging processes in Visual Studio, see this reference: https://docs.microsoft.com/en-us/visualstudio/debugger/debug-multiple-processes.

Similar to Azure Web Apps, Azure Virtual Machines provides the capability to scale in terms of both instance size and instance count and supports auto-scale on the instance count. However, unlike Websites that can automatically provision new instances as a part of scale out, Virtual Machines on their own must be pre-provisioned in order for auto-scale to turn instances on or off during a scaling operation. To achieve scale-out without having to perform any pre-provisioning of VM resources, Virtual Machine Scale Sets should be deployed.

This skill covers how to:

![]() Scale

up and scale down VM sizes

Scale

up and scale down VM sizes

![]() Deploy

ARM VM Scale Sets (VMSS)

Deploy

ARM VM Scale Sets (VMSS)

![]() Configure

auto-scale on ARM VM Scale Sets

Configure

auto-scale on ARM VM Scale Sets

Using the portal or Windows PowerShell, you can scale VM sizes up or down to alter the capacity of the VM, which collectively adjusts:

![]() The

number of data disks that can be attached and the total IOPS capacity

The

number of data disks that can be attached and the total IOPS capacity

![]() The

size of the local temp disk

The

size of the local temp disk

![]() The

number of CPU cores

The

number of CPU cores

![]() The

amount of RAM memory available

The

amount of RAM memory available

![]() The

network performance

The

network performance

![]() The

quantity of network interface cards (NICs) supported

The

quantity of network interface cards (NICs) supported

More Info: Limits by VM Size

To view the detailed listing of limits by VM size, see https://docs.microsoft.com/azure/virtual-machines/windows/sizes.

To scale a VM up or down in the portal, complete these steps:

Navigate to the blade of your VM in the portal accessed via https://portal.azure.com.

From the menu, select Size.

On the Choose a size blade, select the new size you would like for the VM.

Choose Select to apply the new size.

The instance size can also be adjusted using the following Windows PowerShell script:

$ResourceGroupName

= "examref"

$VMName = "vmname"

$NewVMSize = "Standard_A5"

$vm = Get-AzureRmVM

-ResourceGroupName $ResourceGroupName -Name $VMName

$vm.HardwareProfile.vmSize = $NewVMSize

Update-AzureRmVM

-ResourceGroupName $ResourceGroupName -VM $vm

In the previous script, you specify the name of the Resource Group containing your VM, the name of the VM you want to scale, and the label of the size (for example, “Standard_A5”) to which you want to scale it.

You can get the list of VM sizes available in each Azure region by running the following PowerShell (supplying the Location value desired):

Get-AzureRmVmSize

-Location "East US" | Sort-Object Name |

ft Name,

NumberOfCores, MemoryInMB, MaxDataDiskCount -AutoSize

Virtual Machine Scale Sets enable you to automate the scaling process. During a scale-out event, a VM Scale Set deploys additional, identical copies of ARM VMs. During a scale-in it simply removes deployed instances. No VM in the Scale Set is allowed to have any unique configuration, and can contain only one size and tier of VM, in other words each VM in the Scale Set will also have the same size and tier as all the others in the Scale Set.

VM Scale Sets support VMs running either Windows or Linux. A great way to understand Scale Sets is to compare them to the features of standalone Virtual Machines:

![]() In

a Scale Set, each Virtual Machine must be identical to the other, as

opposed to stand alone Virtual Machines where you can customize each

VM individually.

In

a Scale Set, each Virtual Machine must be identical to the other, as

opposed to stand alone Virtual Machines where you can customize each

VM individually.

![]() You

adjust the capacity of Scale Set simply by adjusting the capacity

property, and this in turn deploys more VMs in parallel. In contrast,

scaling out stand alone VMs would mean writing a script to

orchestrate the deployment of many individual VMs.

You

adjust the capacity of Scale Set simply by adjusting the capacity

property, and this in turn deploys more VMs in parallel. In contrast,

scaling out stand alone VMs would mean writing a script to

orchestrate the deployment of many individual VMs.

![]() Scale

Sets support overprovisioning during a scale out event, meaning that

the Scale Set will actually deploy more VMs than you asked for, and

then when the requested number of VMs are successfully provisioned

the extra VMs are deleted (you are not charged for the extra VMs and

they do not count against your quota limits). This approach improves

the provisioning success rate and reduces deployment time. For

standalone VMs, this adds extra requirements and complexity to any

script orchestrating the deployment. Moreover, you would be charged

for the extra standalone VM’s and they would count against your

quota limits.

Scale

Sets support overprovisioning during a scale out event, meaning that

the Scale Set will actually deploy more VMs than you asked for, and

then when the requested number of VMs are successfully provisioned

the extra VMs are deleted (you are not charged for the extra VMs and

they do not count against your quota limits). This approach improves

the provisioning success rate and reduces deployment time. For

standalone VMs, this adds extra requirements and complexity to any

script orchestrating the deployment. Moreover, you would be charged

for the extra standalone VM’s and they would count against your

quota limits.

![]() Scale

Set can roll out upgrades using an upgrade policy across the VMs in

your Scale Set. With standalone VMs you would have to orchestrate

this update process yourself.

Scale

Set can roll out upgrades using an upgrade policy across the VMs in

your Scale Set. With standalone VMs you would have to orchestrate

this update process yourself.

![]() Azure

Autoscale can be used to automatically scale a Scale Set, but cannot

be used against standalone VMs.

Azure

Autoscale can be used to automatically scale a Scale Set, but cannot

be used against standalone VMs.

![]() The

Networking of Scale Sets is similar to standalone VMs deployed in a

Virtual Network. Scale Sets deploy the VMs they manage into a single

subnet of a Virtual Network. To access any particular Scale Set VM

you either use an Azure Load Balancer with NAT rules (e.g. where each

external port can map to a Scale Set instance VM) or you deploy a

publicly accessible “jumpbox” VM in the same Virtual Network

subnet as the Scale Set VMs, and access the Scale Set VMs via the

jumpbox (to which you are either RDP or SSH connected).

The

Networking of Scale Sets is similar to standalone VMs deployed in a

Virtual Network. Scale Sets deploy the VMs they manage into a single

subnet of a Virtual Network. To access any particular Scale Set VM

you either use an Azure Load Balancer with NAT rules (e.g. where each

external port can map to a Scale Set instance VM) or you deploy a

publicly accessible “jumpbox” VM in the same Virtual Network

subnet as the Scale Set VMs, and access the Scale Set VMs via the

jumpbox (to which you are either RDP or SSH connected).

The maximum number of VMs to which a VM Scale Set can scale, referred to as the capacity, depends on three factors:

![]() Support

for multiple placement groups

Support

for multiple placement groups

![]() The

use of managed disks

The

use of managed disks

![]() If

the VM’s use an image from the Marketplace or are created from a

user supplied image

If

the VM’s use an image from the Marketplace or are created from a

user supplied image

Placement groups are a Scale Set specific concept that is similar to Availability Sets, where a Placement group is implicitly an Availability Set with five fault domains and five update domains, and supports up to 100 VM’s. When you deploy a Scale Set you can restrict to only allow a single placement group, which will effectively limit your Scale Set capacity to 100 VM’s. However, if you allow multiple placement groups during deployment, then your Scale Set may support up to 1,000 VM’s, depending on the other two factors (managed disks and image source).

During Scale Set deployment, you can also choose whether to use unmanaged (for example, the traditional disks in an Azure Storage Account you control) or managed disks (where the disk itself is the resource you manage, and the Storage Account is no longer a concern of yours). If you choose unmanaged storage, you will also need to be limited to using a single placement group, and therefore the capacity of your Scale Set limited to 100 VMs. However, if you opt to use managed disks then your Scale Set may support up to 1,000 VMs subject only to our last factor (the image source).

The final factor affecting your Scale Set’s maximum capacity is the source of the image used when the Scale Set provisions the VMs it manages. If the image source is a Marketplace image (like any of the baseline images for Windows Server or Linux) then your Scale Set supports up to 1,000 VMs. However, if your VMs will be based off of a custom image you supply then your Scale Set will have a capacity of 100 VMs.

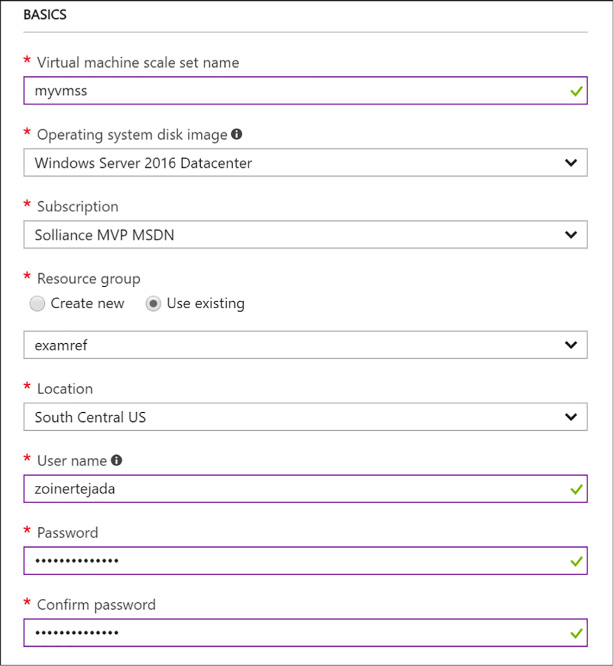

To deploy a Scale Set using the Azure Portal, you deploy a Scale Set and as a part of that process select the Marketplace image to use for the VMs it will manage. You cannot select a VM Marketplace image and then choose to include it in a Scale Set (as you might when selecting a Resource Group). To deploy a Scale Set in the portal, complete these steps (Figure 1-8):

Navigate to the portal accessed via https://portal.azure.com.

Select + New and in the Search the Marketplace box, enter “scale sets” and select the “Virtual machine scale set” item that appears.

On the Virtual machine scale set blade, select Create.

In the Basics property group, provide a name for the scale set.

Select the OS type (Window or Linux).

Choose your Subscription, Resource group and Location. Note that the Resource group you select for the Scale Set must either be empty or be created new with Scale Set.

Enter a user name and password (for Windows), an SSH user name and password (for Linux) or an SSH public key (for Linux).

FIGURE 1-8 The Basics properties for the VM Scale Set

In the Instances and Load Balancer property group, set the instance count to the desired number of instances to deploy initially.

Select the virtual machine instance size for all machines in the Scale Set.

Choose whether to limit to a single placement group or not by selecting the option to Enable scaling beyond 100 instances. A selection of “No” will limit your deployment to a single placement group.

Select to use managed or unmanaged disks. If you chose to sue multiple placement groups, then managed disks are the only option and will be automatically selected for you.

If you chose to use a single placement group, configure the public IP address name you can use to access VMs via a Load Balancer. If you allowed multiple placement groups, then this option is unavailable.

Similarly, if you chose to use a single placement group, configure the public IP allocation mode (which can be Dynamic or Static) and provide a label for your domain name. If you allowed multiple placement groups, then this option is unavailable (Figure 1-9).

FIGURE 1-9 The Instances And Load Balancer properties for a VM Scale Set

In the Autoscale property group, leave Autoscale set to Disabled.

Select Create.

More Info: Deploying a Scale set Using Powershell or Azure CLI

You can also deploy a Scale Set using PowerShell or the Azure CLI. For the detailed step by step instructions, see https://docs.microsoft.com/azure/virtual-machine-scale-sets/virtual-machine-scale-sets-create.

To deploy a Scale Set where the VMs are created from custom or user-supplied image you must perform the following:

Generalize and capture an unmanaged VM disk from a standalone VM. The disk is saved in an Azure Storage Account you provide.

More Info: Creating a Generalized VM Disk

To generalize and capture a VM from a VM you have already deployed in Azure, see https://docs.microsoft.com/azure/virtual-machines/windows/sa-copy-generalized.

Create an ARM Template that at minimum:

Creates a managed image based on the generalized unmanaged disk available in Azure Storage. Your template needs to define a resource of type “Microsoft.Compute/images” that references the VHD image by its URI. Alternately, you can pre-create the managed image (which allows you to specify the VHDs for the OS Disk and any Data Disks), for example by creating an image using the Portal, and omit this section in your template.

Configures the Scale Set to use the managed image. Your template needs to defines a resource of type “Microsoft.Compute/virtualMachineScaleSets” that, in its “storageProfile” contains a reference to the image you defined previously.

Deploy the ARM template. Deploy the ARM template using the approach of your choice (for example, Portal, PowerShell or by using the Azure CLI).

More Info: Deploying ARM Templates

For instructions on deploying an ARM template, see https://docs.microsoft.com/azure/azure-resource-manager/resource-group-template-deploy-portal#deploy-resources-from-custom-template.

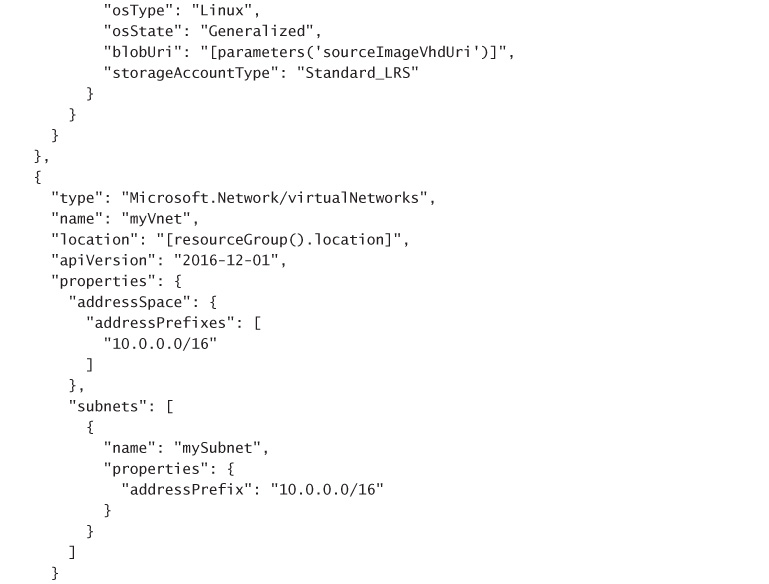

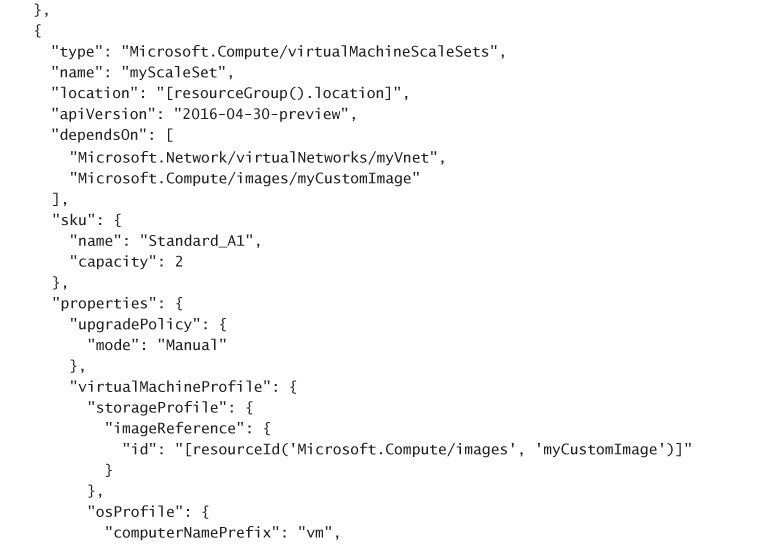

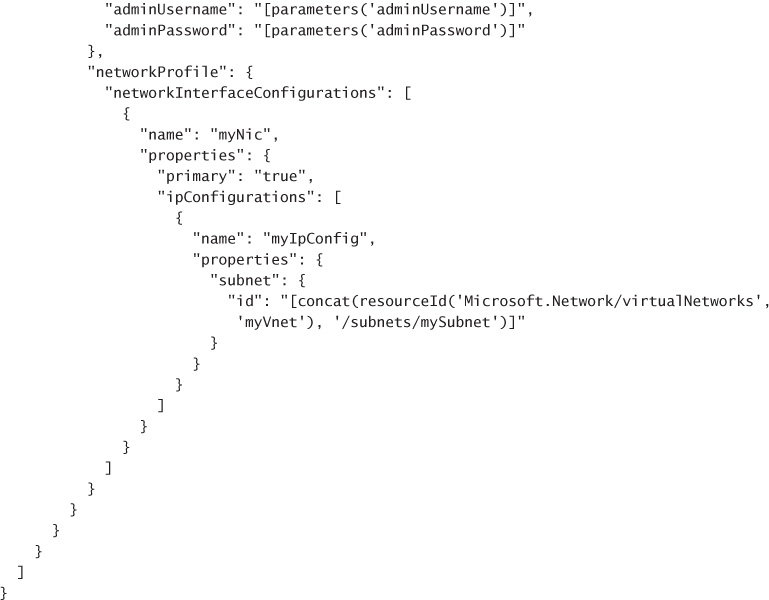

The following code snippet shows an example of a complete ARM template for deploying a VM Scale Set that uses Linux VMs, where the authentication for the VM’s is username and password based, and the VHD source is a generalized, unmanaged VHD disk stored in an Azure Storage Account. When the template is deployed, the user needs to specify the admin username and password to establish on all VMs in the Scale Set, as well as the URI to the source VHD in Azure Storage blobs.

{

"$schema":

"http://schema.management.azure.com/schemas/

2015-01-01/deploymentTemplate.json",

"contentVersion":

"1.0.0.0",

"parameters": {

"adminUsername": {

"type": "string"

},

"adminPassword":

{

"type":

"securestring"

},

"sourceImageVhdUri": {

"type": "string",

"metadata": {

"description":

"The source of the generalized blob containing the custom

image"

}

}

},

"variables": {},

"resources": [

{

"type":

"Microsoft.Compute/images",

"apiVersion":

"2016-04-30-preview",

"name":

"myCustomImage",

"location":

"[resourceGroup().location]",

"properties":

{

"storageProfile":

{

"osDisk":

{

"osType":

"Linux",

"osState":

"Generalized",

"blobUri":

"[parameters('sourceImageVhdUri')]",

"storageAccountType":

"Standard_LRS"

}

}

}

},

{

"type":

"Microsoft.Network/virtualNetworks",

"name":

"myVnet",

"location":

"[resourceGroup().location]",

"apiVersion":

"2016-12-01",

"properties":

{

"addressSpace":

{

"addressPrefixes":

[

"10.0.0.0/16"

]

},

"subnets":

[

{

"name":

"mySubnet",

"properties":

{

"addressPrefix":

"10.0.0.0/16"

}

}

]

}

},

{

"type":

"Microsoft.Compute/virtualMachineScaleSets",

"name": "myScaleSet",

"location":

"[resourceGroup().location]",

"apiVersion":

"2016-04-30-preview",

"dependsOn":

[

"Microsoft.Network/virtualNetworks/myVnet",

"Microsoft.Compute/images/myCustomImage"

],

"sku":

{

"name":

"Standard_A1",

"capacity":

2

},

"properties": {

"upgradePolicy":

{

"mode":

"Manual"

},

"virtualMachineProfile":

{

"storageProfile":

{

"imageReference":

{

"id":

"[resourceId('Microsoft.Compute/images', 'myCustomImage')]"

}

},

"osProfile":

{

"computerNamePrefix":

"vm",

"adminUsername":

"[parameters('adminUsername')]",

"adminPassword":

"[parameters('adminPassword')]"

},

"networkProfile":

{

"networkInterfaceConfigurations":

[

{

"name":

"myNic",

"properties":

{

"primary":

"true",

"ipConfigurations":

[

{

"name":

"myIpConfig",

"properties":

{

"subnet":

{

"id":

"[concat(resourceId('Microsoft.Network/virtualNetworks',

'myVnet'), '/subnets/mySubnet')]"

}

}

}

]

}

}

]

}

}

}

}

]

}

More Info: Template Source

You can download the ARM template shown from: https://github.com/gatneil/mvss/blob/custom-image/azuredeploy.json.

Autoscale is a feature of the Azure Monitor service in Microsoft Azure that enables you to automatically scale resources based on rules evaluated against metrics provided by those resources. Autoscale can be used with Virtual Machine Scale Sets to adjust the capacity according to metrics like CPU utilization, network utilization and memory utilization across the VMs in the Scale Set. Additionally, Autoscale can be configured to adjust the capacity of the Scale Set according to metrics from other services, such as the number of messages in an Azure Queue or Service Bus queue.

You can configure a Scale Set to autoscale when provisioning a new Scale Set in the Azure Portal. When configuring it during provisioning, the only metric you can scale against is CPU utilization. To provision a Scale Set with CPU based autoscale, complete the following steps:

Navigate to the portal accessed via https://portal.azure.com.

Select + New and in the Search the Marketplace box, enter “scale sets” and select the “Virtual machine scale set” item that appears.

On the Virtual machine scale set blade, select Create.

In the Basics property group, provide a name for the scale set.

Select the OS type (Window or Linux).

Choose your Subscription, Resource group and Location. Note that the Resource group you select for the Scale Set must either be empty or be created new with Scale Set.

Enter a user name and password (for Windows), an SSH user name and password (for Linux) or an SSH public key (for Linux).

In the Instances and Load Balancer property group, set the instance count to the desired number of instances to deploy initially.

Select the virtual machine instance size for all machines in the Scale Set.

Choose whether to limit to a single placement group or not by selecting the option to Enable scaling beyond 100 instances. A selection of “No” will limit your deployment to a single placement group.

Select to use managed or unmanaged disks. If you chose to sue multiple placement groups, then managed disks are the only option and will be automatically selected for you.

If you chose to use a single placement group, configure the public IP address name you can use to access VMs via a Load Balancer. If you allowed multiple placement groups, then this option is unavailable.

Similarly, if you chose to use a single placement group, configure the public IP allocation mode (which can be Dynamic or Static) and provide a label for your domain name. If you allowed multiple placement groups, then this option is unavailable.

In the Autoscale property group, chose to enable autoscale. If you enable autoscale, provide the desired VM instance count ranges, the scale out or scale in CPU thresholds and instance counts to scale out or scale in by (Figure 1-10).

FIGURE 1-10 The Autoscale settings for a VM Scale Set

Select Create.

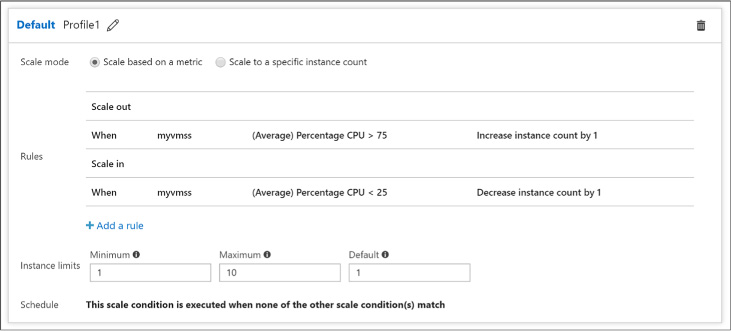

You can configure a Scale Set to Autoscale after it is deployed using the Portal. When configuring this way, you can scale according to any of the available metrics. To further configure Autoscale on an existing Scale Set with Autoscale already enabled, complete the following steps:

Navigate to the portal accessed via https://portal.azure.com.

Navigate to your Virtual machine scale set in the Portal.

From the menu, under Settings, select Scaling.

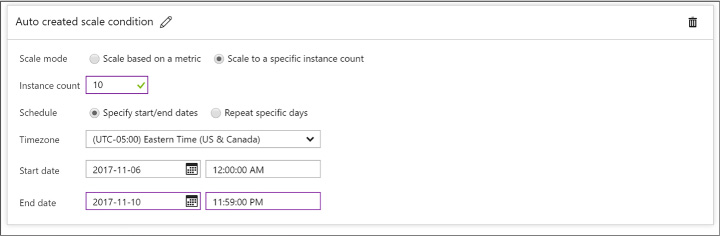

Select Add Default Scale Condition or Add A Scale Condition. The default scale condition (Figure 1-11) will run when none of the other scale conditions match.

For the scale condition, choose the scale mode. You can scale based on a metric or scale to a specific instance count.

FIGURE 1-11 The Default scale condition

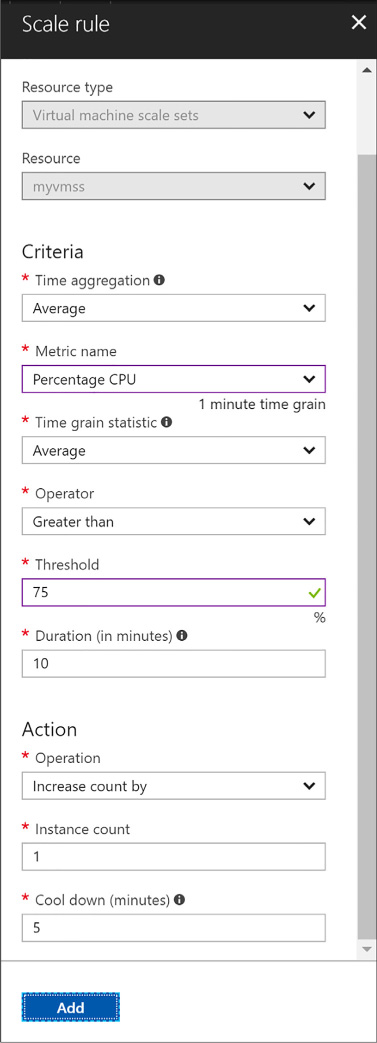

When choosing to scale based on a metric (Figure 1-12):

![]() Select

Add rule to define the metric source (e.g., the Scale Set itself or

another Azure resource), the Criteria (e.g., the metric name, time

grain and value range), and the Action (e.g., to scale out or scale

in).

Select

Add rule to define the metric source (e.g., the Scale Set itself or

another Azure resource), the Criteria (e.g., the metric name, time

grain and value range), and the Action (e.g., to scale out or scale

in).

FIGURE 1-12 Adding a Scale Rule

When choosing to scale to a specific instance count:

![]() For

the default scale condition, you can only specify the target instance

count to which the Scale Set capacity will reset.

For

the default scale condition, you can only specify the target instance

count to which the Scale Set capacity will reset.

![]() For

non-default scale conditions, you specify the desired instance count

and a time based schedule during which that instance count will

apply. Specify the time by using a start and end dates or according

to a recurring schedule that repeats during a time range on selected

days of the week (Figure

1-13).

For

non-default scale conditions, you specify the desired instance count

and a time based schedule during which that instance count will

apply. Specify the time by using a start and end dates or according

to a recurring schedule that repeats during a time range on selected

days of the week (Figure

1-13).

FIGURE 1-13 Adding a Scale Condition

Select Save in the command bar to apply your Autoscale settings.

More Info: VM Scale set and Autoscale Deployment with Powershell

For an end-to-end example walking thru of the steps to create a VM Scale Set configured with Autoscale showing how to deploy with PowerShell, see: https://docs.microsoft.com/azure/virtual-machine-scale-sets/virtual-machine-scale-sets-windows-autoscale.