Skill 2.1: Implement Azure Storage blobs and Azure files

File storage is useful in a wide variety of solutions for your organization, whether you are storing sensor data from refrigeration trucks that check in every few minutes, storing resumes as PDFs for your company website, or storing SQL Server backup files to comply with a retention policy. Microsoft Azure provides several methods of storing files, including Azure Storage blobs and Azure Files. We will look at the differences between these products and show you how to begin using each one.

This skill covers how to:

- Create a blob storage account
- Read data and change data
- Set metadata on a container
- Store data using block and page blobs
- Stream data using blobs
- Access blobs securely
- Implement async blob copy
- Configure Content Delivery Network (CDN)
- Design blob hierarchies
- Configure custom domains
- Scale blob storage
- Implement blob leasing
- Create connections to files from on-premises or cloud-based Windows or Linux machines
- Shard large datasets

Azure Storage blobs

Azure Storage blobs are the perfect product to use when you have files that you’re storing using a custom application. Other developers might also write applications that store files in Azure Storage blobs, which is the storage location for many Microsoft Azure products, like Azure HDInsight, Azure VMs, and Azure Data Lake Analytics. Azure Storage blobs should not be used as a file location for users directly, like a corporate shared drive. Azure Storage blobs provide client libraries and a REST interface that allows unstructured data to be stored and accessed at a massive scale in block blobs.

Create a blob storage account

- Sign in to the Azure portal.
- Click the green plus symbol on the left side.
- On the Hub menu, select New > Storage > Storage account–blob, file, table, queue.
- Click Create.
- Enter a name for your storage account.
- For most of the options, you can choose the defaults.
- Specify the Resource Manager deployment model. You should choose an Azure Resource Manager deployment. This is the newest deployment API. Classic deployment will eventually be retired.
- Your application is typically made up of many components, for instance a website and a database. These components are not separate entities, but one application. You want to deploy and monitor them as a group, called a resource group. Azure Resource Manager enables you to work with the resources in your solution as a group.
- Select the General Purpose type of storage account.

  There are two types of storage accounts: General purpose or Blob storage. The General purpose type allows you to store tables, queues, and blobs in one storage account. Blob storage is just for blobs. The difference is that Blob storage has hot and cold tiers for performance and pricing and a few other features just for Blob storage. We’ll choose General Purpose so we can use table storage later.
- Under performance, specify the standard storage method. Standard storage uses magnetic disks that are lower performing than Premium storage. Premium storage uses solid-state drives.
- Storage service encryption will encrypt your data at rest. This might slow data access, but will satisfy security audit requirements.
- Secure transfer required will force the client application to use SSL in its data transfers.
- You can choose several types of replication options. Select the replication option for the storage account.
- The data in your Microsoft Azure storage account is always replicated to ensure durability and high availability. Replication copies your data, either within the same data center, or to a second data center, depending on which replication option you choose. For replication, choose carefully, as this will affect pricing. The most affordable option is Locally Redundant Storage (LRS).
- Select the subscription in which you want to create the new storage account.
- Specify a new resource group or select an existing resource group. Resource groups allow you to keep components of an application in the same area for performance and management. It is highly recommended that you use a resource group. All services placed in a resource group will be logically organized together in the portal. In addition, all of the services in that resource group can be deleted as a unit.
- Select the geographic location for your storage account. Try to choose one that is geographically close to you to reduce latency and improve performance.
- Click Create to create the storage account.

Once created, you will have two components that allow you to interact with your Azure Storage account via an SDK: the URI and the access key. SDKs exist for several languages, including C#, JavaScript, and Python. In this module, we’ll focus on using the SDK in C#. The URI will look like this: http://{the storage account name you entered earlier}.blob.core.windows.net.

Your access key will look like this: KEsm421/uwSiel3dipSGGL124K0124SxoHAXq3jk124vuCjw35124fHRIk142WIbxbTmQrzIQdM4K5Zyf9ZvUg==

Read and change data

First, let’s use the Azure SDK for .NET to load data into your storage account.

- Create a console application.
- Use NuGet Package Manager to install WindowsAzure.Storage.
- In the Using section, add a using to Microsoft.WindowsAzure.Storage and Microsoft.WindowsAzure.Storage.Blob.
- Create a storage account in your application like this:

CloudStorageAccount storageAccount = CloudStorageAccount.Parse("DefaultEndpointsProtocol=https;AccountName={your storage account name};AccountKey={your storage key}");

Azure Storage blobs are organized into containers. Each storage account can have an unlimited number of containers. Think of containers like folders, but they are very flat, with no sub-containers. In order to load blobs into an Azure Storage account, you must first choose the container.

- Create a container using the following code:

CloudBlobClient blobClient = storageAccount.CreateCloudBlobClient();

CloudBlobContainer container = blobClient.GetContainerReference("democontainerblockblob");

try

{

await container.CreateIfNotExistsAsync();

}

catch (StorageException ex)

{

Console.WriteLine(ex.Message);

Console.ReadLine();

throw;

}

- In the following code, you need to set the path of the file you want to upload using the ImageToUpload variable.

const string ImageToUpload = @"C:\temp\HelloWorld.png";

CloudBlockBlob blockBlob = container.GetBlockBlobReference("HelloWorld.png");

// Create or overwrite the "HelloWorld.png" blob with contents from a local file.

using (var fileStream = System.IO.File.OpenRead(ImageToUpload))

{

blockBlob.UploadFromStream(fileStream);

}

- Every blob has an individual URI. By default, you can gain access to that blob as long as you have the storage account name and the access key. We can change the default by changing the Access Policy of the Azure Storage blob container. By default, containers are set to private. They can be changed to either blob or container. When set to Public Container, no credentials are required to access the container and its blobs. When set to Public Blob, only blobs can be accessed without credentials if the full URL is known. We can list the blobs in the container, along with their URIs, using the following code:

foreach (IListBlobItem blob in container.ListBlobs())

{

Console.WriteLine("- {0} (type: {1})", blob.Uri, blob.GetType());

}

Note how we use the container to list the blobs to get the URI. We also have all of the information necessary to download the blob in the future.

Set metadata on a container

Metadata is useful in Azure Storage blobs. It can be used to set content types for web artifacts or it can be used to determine when files have been updated. There are two different types of metadata in Azure Storage Blobs: System Properties and User-defined Metadata. System properties give you information about access, file types, and more. Some of them are read-only. User-defined metadata is a key-value pair that you specify for your application. Maybe you need to make a note of the source, or the time the file was processed. Data like that is perfect for user-defined metadata.

Blobs and containers have metadata attached to them. There are two forms of metadata:

- System properties metadata
- User-defined metadata

System properties can influence how the blob behaves, while user-defined metadata is your own set of name/value pairs that your applications can use. A container has only read-only system properties, while blobs have both read-only and read-write properties.

Setting user-defined metadata

To set user-defined metadata for a container, get the container reference using GetContainerReference(), and then use the Metadata member to set values. After setting all the desired values, call SetMetadata() to persist the values, as in the following example:

CloudBlobClient blobClient = storageAccount.CreateCloudBlobClient();

CloudBlobContainer container =

blobClient.GetContainerReference("democontainerblockblob");

container.Metadata.Add("counter", "100");

container.SetMetadata();

More Info: Blob Metadata

Blob metadata includes both read-only and read-write properties that are valid HTTP headers and follow restrictions governing HTTP headers. The total size of the metadata is limited to 8 KB for the combination of name and value pairs. For more information on interacting with individual blob metadata, see https://docs.microsoft.com/en-us/azure/storage/blobs/storage-properties-metadata.

Reading user-defined metadata

To read user-defined metadata for a container, get the container reference using GetContainerReference(), and then use the Metadata member to retrieve a dictionary of values and access them by key, as in the following example:

container.FetchAttributes();

foreach (var metadataItem in container.Metadata)

{

Console.WriteLine("\tKey: {0}", metadataItem.Key);

Console.WriteLine("\tValue: {0}", metadataItem.Value);

}

Exam Tip

If the metadata key doesn’t exist, an exception is thrown.
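One way to avoid that exception is to probe for the key before reading it. The following is a minimal sketch, not part of the SDK: the helper name `GetOrDefault` is hypothetical, and it relies only on the container's `Metadata` member being a string-to-string dictionary.

```csharp
using System;
using System.Collections.Generic;

public static class MetadataReader
{
    // Hypothetical helper: returns the value stored under key,
    // or the supplied fallback when the key is absent.
    public static string GetOrDefault(
        IDictionary<string, string> metadata, string key, string fallback)
    {
        return metadata.TryGetValue(key, out string value) ? value : fallback;
    }
}
```

After calling `container.FetchAttributes()`, a call such as `MetadataReader.GetOrDefault(container.Metadata, "counter", "0")` would return the stored value, or "0" if no such key was ever set.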

Reading system properties

To read a container’s system properties, first get a reference to the container using GetContainerReference(), and then use the Properties member to retrieve values. The following code illustrates accessing container system properties:

container = blobClient.GetContainerReference("democontainerblockblob");

container.FetchAttributes();

Console.WriteLine("LastModifiedUTC: " + container.Properties.LastModified);

Console.WriteLine("ETag: " + container.Properties.ETag);

More Info: Container Metadata and the Storage API

You can request container metadata using the Storage API. For more information on this and the list of system properties returned, see http://msdn.microsoft.com/en-us/library/azure/dd179370.aspx.

Store data using block and page blobs

There are three types of blobs used in Azure Storage Blobs: Block, Append, and Page. Block blobs are used to upload large files. They are comprised of blocks, each with its own block ID. Because the blob is divided up in blocks, it allows for easy updating or resending when transferring large files. You can insert, replace, or delete an existing block in any order. Once a block is updated, added, or removed, the list of blocks needs to be committed for the file to actually record the update.

Page blobs are comprised of 512-byte pages that are optimized for random read and write operations. Writes happen in place and are immediately committed. Page blobs are good for VHDs in Azure VMs and other files that have frequent, random access.

Append blobs are optimized for append operations. Append blobs are good for logging and streaming data. When you modify an append blob, blocks are added to the end of the blob.

In most cases, block blobs will be the type you will use. Block blobs are perfect for text files, images, and videos.

A previous section demonstrated how to interact with a block blob. Here’s how to write a page blob:

string pageBlobName = "random";

CloudPageBlob pageBlob = container.GetPageBlobReference(pageBlobName);

await pageBlob.CreateAsync(512 * 2 /*size*/); // size needs to be multiple of 512 bytes

byte[] samplePagedata = new byte[512];

Random random = new Random();

random.NextBytes(samplePagedata);

await pageBlob.UploadFromByteArrayAsync(samplePagedata, 0, samplePagedata.Length);

To read a page blob, use the following code:

int bytesRead = await pageBlob.DownloadRangeToByteArrayAsync(samplePagedata, 0, 0, samplePagedata.Length);
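Because page blob sizes and writes work in 512-byte pages, application data usually has to be padded before it is written. The sketch below shows one way to do that rounding. The class and method names are hypothetical, and zero-padding is an assumption that suits data whose real length is tracked elsewhere.

```csharp
using System;

public static class PageBlobSizing
{
    public const long PageSize = 512;

    // Rounds a byte count up to the next multiple of 512.
    public static long RoundUpToPage(long byteCount)
    {
        if (byteCount < 0) throw new ArgumentOutOfRangeException(nameof(byteCount));
        return (byteCount + PageSize - 1) / PageSize * PageSize;
    }

    // Copies data into a zero-padded buffer whose length is page-aligned.
    public static byte[] PadToPage(byte[] data)
    {
        byte[] padded = new byte[RoundUpToPage(data.Length)];
        Buffer.BlockCopy(data, 0, padded, 0, data.Length);
        return padded;
    }
}
```

For example, a 700-byte payload would be padded to 1,024 bytes, which could then be passed to `CreateAsync` as the blob size and to `UploadFromByteArrayAsync` as the content.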

Stream data using blobs

You can stream blobs by downloading to a stream using the DownloadToStream() API method. The advantage of this is that it avoids loading the entire blob into memory, for example before saving it to a file or returning it to a web request.
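As an illustration, the sketch below streams a block blob straight into a local file using the asynchronous counterpart, `DownloadToStreamAsync()`. The class name, method name, and parameters are hypothetical, and the container is assumed to have been obtained as in the earlier sections.

```csharp
using System.IO;
using System.Threading.Tasks;
using Microsoft.WindowsAzure.Storage.Blob;

public static class BlobStreaming
{
    // Streams a block blob to a local file without holding
    // the whole blob in memory at once.
    public static async Task DownloadToFileStreamAsync(
        CloudBlobContainer container, string blobName, string localPath)
    {
        CloudBlockBlob blob = container.GetBlockBlobReference(blobName);
        using (FileStream target = File.Create(localPath))
        {
            await blob.DownloadToStreamAsync(target);
        }
    }
}
```

The same pattern applies to any writable stream, for instance the response stream of a web request.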

Access blobs securely

Secure access to blob storage implies a secure connection for data transfer and controlled access through authentication and authorization.

Azure Storage supports both HTTP and secure HTTPS requests. For data transfer security, you should always use HTTPS connections. To authorize access to content, you can authenticate in three different ways to your storage account and content:

- Shared Key: Constructed from a set of fields related to the request. Computed with the HMAC-SHA256 algorithm and encoded in Base64.
- Shared Key Lite: Similar to Shared Key, but compatible with previous versions of Azure Storage. This provides backwards compatibility with code that was written against versions prior to 19 September 2009. This allows for migration to newer versions with minimal changes.
- Shared Access Signature: Grants restricted access rights to containers and blobs. You can provide a shared access signature to users you don’t trust with your storage account key. You can give them a shared access signature that will grant them specific permissions to the resource for a specified amount of time. This is discussed in a later section.
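To make the Shared Key description more concrete, the sketch below shows only the final signing step: an HMAC-SHA256 over a string-to-sign, keyed with the Base64-decoded account key, with the result encoded in Base64. The exact layout of the string-to-sign is defined by the Storage REST API and is not reproduced here. The class name is hypothetical, and in practice the Storage Client Library performs this step for you.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

public static class SharedKeySketch
{
    // Signs an already-built string-to-sign and returns the Base64 signature.
    // Building the string-to-sign itself is out of scope for this sketch.
    public static string Sign(string stringToSign, string base64AccountKey)
    {
        byte[] key = Convert.FromBase64String(base64AccountKey);
        using (var hmac = new HMACSHA256(key))
        {
            byte[] hash = hmac.ComputeHash(Encoding.UTF8.GetBytes(stringToSign));
            return Convert.ToBase64String(hash);
        }
    }
}
```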

To interact with blob storage content authenticated with the account key, you can use the Storage Client Library as illustrated in earlier sections. When you create an instance of the CloudStorageAccount using the account name and key, each call to interact with blob storage will be secured, as shown in the following code:

string accountName = "ACCOUNTNAME";

string accountKey = "ACCOUNTKEY";

CloudStorageAccount storageAccount = new CloudStorageAccount(new StorageCredentials(accountName, accountKey), true);

Implement async blob copy

It is possible to copy blobs between storage accounts. You may want to do this to create a point-in-time backup of your blobs before a dangerous update or operation. You may also want to do this if you’re migrating files from one account to another one. You cannot change blob types during an async copy operation. Block blobs will stay block blobs. Any files with the same name on the destination account will be overwritten.

Blob copy operations are truly asynchronous. When you call the API and get a success message, this means the copy operation has been successfully scheduled. The success message will be returned after checking the permissions on the source and destination accounts.

You can perform a copy in conjunction with the Shared Access Signature method of gaining permissions to the account. We’ll cover that security method in a later topic.
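A minimal sketch of scheduling a copy and then waiting for it to finish might look like the following. The class and method names are hypothetical, the one-second polling interval is an arbitrary choice, and the source blob is assumed to be readable by the destination service (for example, because both blobs live in the same account or the source is reached through a shared access signature).

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.WindowsAzure.Storage.Blob;

public static class BlobCopySketch
{
    // Schedules a server-side copy, then polls the destination blob
    // until the copy leaves the Pending state.
    public static async Task<CopyStatus> CopyAndWaitAsync(
        CloudBlockBlob source, CloudBlockBlob destination)
    {
        // Completes once the copy is scheduled, not when it is finished.
        await destination.StartCopyAsync(source);

        await destination.FetchAttributesAsync();
        while (destination.CopyState.Status == CopyStatus.Pending)
        {
            await Task.Delay(TimeSpan.FromSeconds(1));
            await destination.FetchAttributesAsync();
        }
        return destination.CopyState.Status;
    }
}
```

The caller should check the returned status, since a copy can also end as aborted or failed rather than successful.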

Configure a Content Delivery Network with Azure Blob Storage

A Content Delivery Network (CDN) is used to cache static files to different parts of the world. For instance, let’s say you were developing an online catalog for a retail organization with a global audience. Your main website was hosted in western United States. Users of the application in Florida complain of slowness while users in Washington state compliment you for how fast it is. A CDN would be a perfect solution for serving files close to the users, without the added latency of going across country. Once files are hosted in an Azure Storage Account, a configured CDN will store and replicate those files for you without any added management. The CDN cache is perfect for style sheets, documents, images, JavaScript files, packages, and HTML pages.

After creating an Azure Storage Account like you did earlier, you must configure it for use with the Azure CDN service. Once that is done, you can call the files from the CDN inside the application.

To enable the CDN for the storage account, follow these steps:

- In the Storage Account navigation pane, find Azure CDN towards the bottom. Click on it.
- Create a new CDN endpoint by filling out the form that pops up.
- Azure CDN is hosted by two different CDN networks. These are partner companies that actually host and replicate the data. Choosing a network will affect the features available to you and the price you pay. No matter which tier you use, you will only be billed through the Microsoft Azure Portal, not through the third party. There are three pricing tiers:
  - Premium Verizon: The most expensive tier. This tier offers advanced real-time analytics so you can know which users are hitting what content and when.
  - Standard Verizon: The standard CDN offering on Verizon’s network.
  - Standard Akamai: The standard CDN offering on Akamai’s network.
- Specify a Profile and an endpoint name. After the CDN endpoint is created, it will appear on the list above.
- Once this is done, you can configure the CDN if needed. For instance, you can use a custom domain name so it looks like your content is coming from your website.

Once the CDN endpoint is created, you can reference your files through the CDN endpoint's host name instead of the storage account's blob endpoint, followed by the same container and blob path.

If a file needs to be replaced or removed, you can delete it from the Azure Storage blob container. Remember that the file is being cached in the CDN. It will be removed or updated when the Time-to-Live (TTL) expires. If no cache expiry period is specified, it will be cached in the CDN for seven days. You set the TTL in the web application by using the clientCache element in the web.config file. Remember that when you place that in the web.config file, it affects all folders and subfolders for that application.

Design blob hierarchies

Azure Storage blobs are stored in containers, which are very flat. This means that you cannot have child containers contained inside a parent container. This can lead to organizational confusion for users who rely on folders and subfolders to organize files.

A hierarchy can be simulated by giving the files names that resemble a folder structure. For instance, you can have a storage account named “sally.” Your container could be named “pictures.” Your file could be named “product1/mainFrontPanel.jpg.” The URI to your file would look like this: http://sally.blob.core.windows.net/pictures/product1/mainFrontPanel.jpg

In this manner, a folder/subfolder relationship can be maintained. This might prove useful in migrating legacy applications over to Azure.
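To illustrate how "folders" fall out of a flat list of names, the sketch below groups blob names by the text before the first slash. It works on plain strings rather than on the storage service, and the class and method names are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class VirtualFolders
{
    // Returns the distinct first-level "folder" names found under a prefix
    // in a flat list of blob names that use '/' as a separator.
    public static List<string> ListFolders(IEnumerable<string> blobNames, string prefix = "")
    {
        return blobNames
            .Where(name => name.StartsWith(prefix, StringComparison.Ordinal))
            .Select(name => name.Substring(prefix.Length))
            .Where(rest => rest.Contains("/"))
            .Select(rest => rest.Substring(0, rest.IndexOf('/')))
            .Distinct()
            .OrderBy(folder => folder, StringComparer.Ordinal)
            .ToList();
    }
}
```

Given the names product1/mainFrontPanel.jpg, product1/back.jpg, product2/side.jpg, and readme.txt, the method would report product1 and product2 as folders, while readme.txt sits at the container root.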

Configure custom domains

The default endpoint for Azure Storage blobs is: (Storage Account Name).blob.core.windows.net. Using the default can negatively affect SEO. You might also not want to make it obvious that you are hosting your files in Azure. To obfuscate this, you can configure Azure Storage to respond to a custom domain. To do this, follow these steps:

- Navigate to your storage account in the Azure portal.
- On the navigation pane, find BLOB SERVICE. Click Custom Domain.
- Check the Use Indirect CNAME Validation check box. We use this method because it does not incur any downtime for your application or website.
- Log on to your DNS provider. Add a CNAME record with the subdomain alias that includes the asverify subdomain. For example, if you are holding pictures in your blob storage account and you want to note that in the URL, then the CNAME would be asverify.pictures.(your custom domain including the .com or .edu, etc.). Then provide the hostname for the CNAME alias, which would also include asverify. If we follow the earlier example of pictures, the hostname would be asverify.pictures.blob.core.windows.net. The hostname to use appears in #2 of the Custom domain blade in the Azure portal from the previous step.
- In the text box on the Custom domain blade, enter the name of your custom domain, but without the asverify prefix. In our example, it would be pictures.(your custom domain including the .com or .edu, etc.).
- Select Save.
- Now return to your DNS provider’s website and create another CNAME record that maps your subdomain to your blob service endpoint. In our example, we can make pictures.(your custom domain) point to pictures.blob.core.windows.net.
- You can now delete the asverify CNAME record, because Azure has verified it.

Why did we go through the asverify steps? We were allowing Azure to recognize that you own that custom domain before doing the redirection. This allows the CNAME to work with no downtime.

In the previous example, we referenced a file like this: http://sally.blob.core.windows.net/pictures/product1/mainFrontPanel.jpg.

With the custom domain, it would now look like this: http://pictures.(your custom domain)/pictures/product1/mainFrontPanel.jpg.

Scale blob storage

We can scale blob storage both in terms of storage capacity and performance. Each Azure subscription can have 200 storage accounts, with 500TB of capacity each. That means that each Azure subscription can have 100 petabytes of data in it without creating another subscription.

An individual block blob can have 50,000 100MB blocks with a total size of 4.75TB. An append blob has a max size of 195GB. A page blob has a max size of 8TB.
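The block blob figure can be checked with a little arithmetic. The sketch below assumes that "MB" in the text means binary mebibytes (1,048,576 bytes); under that assumption, 50,000 blocks of 100 MB come to 5,242,880,000,000 bytes, or roughly 4.77 binary terabytes, which the text rounds to 4.75TB. The class and method names are hypothetical.

```csharp
public static class BlobLimits
{
    public const long MiB = 1024L * 1024L;

    // Maximum block blob size in bytes for a block count and block size.
    public static long MaxBlockBlobBytes(long maxBlocks, long blockSizeBytes)
        => maxBlocks * blockSizeBytes;

    // Number of blocks needed to hold a file of the given size, rounded up.
    public static long BlocksNeeded(long fileBytes, long blockSizeBytes)
        => (fileBytes + blockSizeBytes - 1) / blockSizeBytes;
}
```

For instance, `BlobLimits.BlocksNeeded(1024 * BlobLimits.MiB, 100 * BlobLimits.MiB)` shows that a 1 GB file uploaded in 100 MB blocks needs 11 blocks, far below the 50,000-block limit.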

In order to scale performance, we have several features available to us. We can implement an Azure CDN to enable geo-caching to keep blobs close to the users. We can implement read access geo-redundant storage and offload some of the reads to another geographic location (thus creating a mini-CDN that will be slower, but cheaper).

Azure Storage blobs (and tables, queues, and files, too) have an amazing feature. By far, the most expensive service for most cloud vendors is compute time. You pay for how many processors the service you are using has and how fast they are. Azure Storage doesn’t charge for compute. It only charges for disk space used and network bandwidth (which is a fairly nominal charge). Azure Storage blobs are partitioned by storage account name + container name + blob name. This means that each blob is retrieved by one and only one server. Many small files will perform better in Azure Storage than one large file. Blobs use containers for logical grouping, but each blob can be retrieved by different compute resources, even if they are in the same container.

Azure files

Azure file storage provides a way for applications to share storage accessible via SMB 2.1 protocol. It is particularly useful for VMs and cloud services as a mounted share, and applications can use the File Storage API to access file storage.

More Info: File Storage Documentation

For additional information on file storage, see the guide at: http://azure.microsoft.com/en-us/documentation/articles/storage-dotnet-how-to-use-files/.

Implement blob leasing

You can create a lock on a blob for write and delete operations. The lock can be between 15 and 60 seconds or it can be infinite. To write to a blob with an active lease, the client must include the active lease ID with the request.

When a client requests a lease, a lease ID is returned. The client may then use this lease ID to renew, change, or release the lease. When the lease is active, the lease ID must be included to write to the blob, set any metadata, add to the blob (through append), copy the blob, or delete the blob. You may still read a blob on which another client holds an active lease, without supplying the lease ID.

The code to acquire a lease looks like the following example (assuming the blockBlob variable was instantiated earlier):

TimeSpan? leaseTime = TimeSpan.FromSeconds(60);

string leaseID = blockBlob.AcquireLease(leaseTime, null);
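Once a lease is held, the lease ID has to accompany each write. A minimal sketch of acquiring a lease, writing under it, and releasing it is shown below, using the asynchronous variants of the client library calls. The class and method names are hypothetical, and the blob is assumed to exist already, since a lease is taken on an existing blob.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.WindowsAzure.Storage;
using Microsoft.WindowsAzure.Storage.Blob;

public static class BlobLeaseSketch
{
    // Acquires a 60-second lease, writes text under it, then releases it.
    public static async Task WriteUnderLeaseAsync(CloudBlockBlob blockBlob, string content)
    {
        string leaseId = await blockBlob.AcquireLeaseAsync(TimeSpan.FromSeconds(60), null);
        AccessCondition leaseCondition = AccessCondition.GenerateLeaseCondition(leaseId);
        try
        {
            // The lease ID travels with the request through the access condition.
            await blockBlob.UploadTextAsync(content, null, leaseCondition, null, null);
        }
        finally
        {
            // Release early so other writers do not wait for the lease to expire.
            await blockBlob.ReleaseLeaseAsync(leaseCondition);
        }
    }
}
```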

Create connections to files from on-premises or cloud-based Windows or Linux machines

Azure Files can be used to replace on-premises file servers or NAS devices. You can connect to Azure Files using Windows, Linux, or macOS.

You can mount an Azure File share using Windows File Explorer, PowerShell, or the Command Prompt. To use File Explorer, follow these steps:

-

Open File Explorer

-

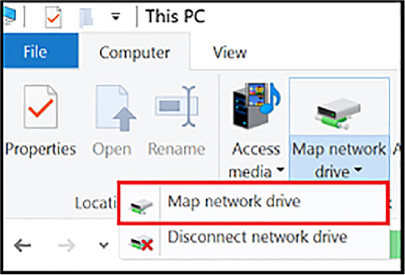

Under the computer menu, click Map Network Drive (see Figure 2-1).

FIGURE 2-1 Map network Drive

-

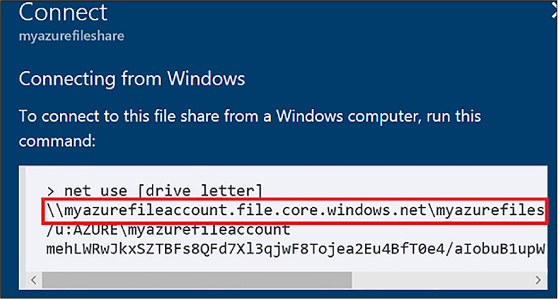

Copy the UNC path from the Connect pane in the Azure portal, as shown in Figure 2-2.

FIGURE 2-2 Azure portal UNC path

-

Select the drive letter and enter the UNC path.

-

Use the storage account name prepended with Azure\ as the username and the Storage Account Key as the password (see Figure 2-3).

FIGURE 2-3 Login credentials for Azure Files

The PowerShell code to map a drive to Azure Files looks like this:

$acctKey = ConvertTo-SecureString -String "<storage-account-key>" -AsPlainText -Force

$credential = New-Object System.Management.Automation.PSCredential -ArgumentList "Azure\<storage-account-name>", $acctKey

New-PSDrive -Name <desired-drive-letter> -PSProvider FileSystem -Root "\\<storage-account-name>.file.core.windows.net\<share-name>" -Credential $credential

To map a drive using a command prompt, use a command that looks like this:

net use <desired-drive-letter>: \\<storage-account-name>.file.core.windows.net\<share-name> <storage-account-key> /user:Azure\<storage-account-name>

To use Azure Files on a Linux machine, first install the cifs-utils package. Then create a folder for a mount point using mkdir. Afterwards, use the mount command with code similar to the following:

sudo mount -t cifs //<storage-account-name>.file.core.windows.net/<share-name> ./mymountpoint -o vers=2.1,username=<storage-account-name>,password=<storage-account-key>,dir_mode=0777,file_mode=0777,serverino

Shard large datasets

Each blob is held in a container in Azure Storage. You can use containers to group related blobs that have the same security requirements. The partition key of a blob is account name + container name + blob name. Each blob can have its own partition if load on the blob demands it. A single blob can only be served by a single server. If sharding is needed, you need to create multiple blobs.
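One possible way to spread a large dataset over multiple blobs is to derive the blob name from a stable hash of each record's key. The sketch below is only an illustration of that idea: the class name, the naming scheme, and the use of SHA-256 as the hash are all assumptions, not something the storage service prescribes.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

public static class BlobSharding
{
    // Maps a record key to one of shardCount blob names using a hash
    // that is stable across processes and machines.
    public static string ShardBlobName(string recordKey, int shardCount, string baseName)
    {
        if (shardCount <= 0) throw new ArgumentOutOfRangeException(nameof(shardCount));
        using (var sha = SHA256.Create())
        {
            byte[] hash = sha.ComputeHash(Encoding.UTF8.GetBytes(recordKey));
            // Treat the first four bytes as an unsigned value so the bucket is never negative.
            uint bucket = BitConverter.ToUInt32(hash, 0) % (uint)shardCount;
            return $"{baseName}-{bucket:D4}";
        }
    }
}
```

A cryptographic hash is used here instead of `string.GetHashCode()` because the latter is not guaranteed to return the same value in different processes, which would send the same key to different shards over time.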