In this section, you learn about Microsoft Azure SQL Database, a PaaS offering for relational data.

This skill covers how to:

![]() Choose the appropriate database tier and performance level

Choose the appropriate database tier and performance level

![]() Configure and perform point in time recovery

Configure and perform point in time recovery

![]() Enable geo-replication

Enable geo-replication

![]() Import and export data and schema

Import and export data and schema

![]() Scale Azure SQL databases

Scale Azure SQL databases

![]() Manage elastic pools, including DTUs and eDTUs

Manage elastic pools, including DTUs and eDTUs

![]() Manage limits and resource governor

Manage limits and resource governor

![]() Implement Azure SQL Data Sync

Implement Azure SQL Data Sync

![]() Implement graph database functionality in Azure SQL

Implement graph database functionality in Azure SQL

Choosing a SQL Database tier used to be simply a matter of storage space. Recently, Microsoft added new tiers that also affect the performance of SQL Database. This tiered pricing is called Service Tiers. There are three service tiers to choose from, and while they still each have restrictions on storage space, they also have some differences that might affect your choice. The major difference is in a measurement called database throughput units (DTUs). A DTU is a blended measure of CPU, memory, disk reads, and disk writes. Because SQL Database is a shared resource with other Azure customers, sometimes performance is not stable or predictable. As you go up in performance tiers, you also get better predictability in performance.

![]() Basic Basic tier is meant for light workloads. There is only one performance level of the basic service tier. This level is good for small use, new projects, testing, development, or learning.

Basic Basic tier is meant for light workloads. There is only one performance level of the basic service tier. This level is good for small use, new projects, testing, development, or learning.

![]() Standard Standard tier is used for most production online transaction processing (OLTP) databases. The performance is more predictable than the basic tier. In addition, there are four performance levels under this tier, levels S0 to S3 (S4 – S12 are currently in preview).

Standard Standard tier is used for most production online transaction processing (OLTP) databases. The performance is more predictable than the basic tier. In addition, there are four performance levels under this tier, levels S0 to S3 (S4 – S12 are currently in preview).

![]() Premium Premium tier continues to scale at the same level as the standard tier. In addition, performance is typically measured in seconds. For instance, the basic tier can handle 16,600 transactions per hour. The standard/S2 level can handle 2,570 transactions per minute. The top tier of premium can handle 735 transactions per second. That translates to 2,645,000 per hour in basic tier terminology.

Premium Premium tier continues to scale at the same level as the standard tier. In addition, performance is typically measured in seconds. For instance, the basic tier can handle 16,600 transactions per hour. The standard/S2 level can handle 2,570 transactions per minute. The top tier of premium can handle 735 transactions per second. That translates to 2,645,000 per hour in basic tier terminology.

More Info: Sql Database Tiers and Throughput

For more information on SQL Database tiers, see: http://msdn.microsoft.com/en-us/library/azure/dn741336.aspx.

There are many similarities between the various tiers. Each tier has a 99.99 percent uptime SLA, backup and restore capabilities, access to the same tooling, and the same database engine features. Fortunately, the levels are adjustable, and you can change your tier as your scaling requirements change.

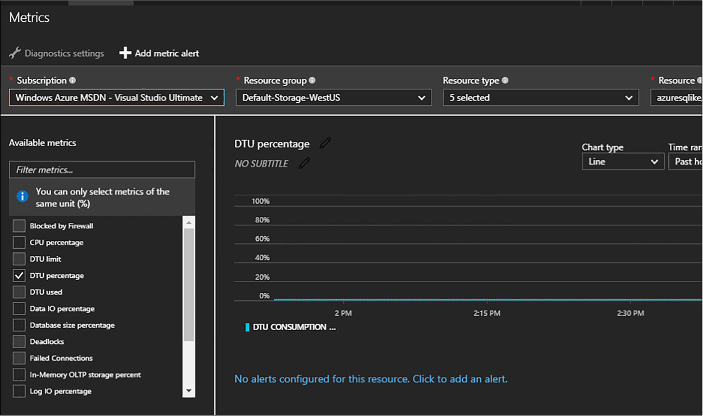

The management portal can help you select the appropriate level. You can review the metrics on the Metrics tab to see the current load of your database and decide whether to scale up or down.

Click the SQL database you want to monitor.

Click the DTU tab, as shown in Figure 2-5.

Add the following metrics:

![]() CPU Percentage

CPU Percentage

![]() Physical Data Reads Percentage

Physical Data Reads Percentage

![]() Log Writes Percentage

Log Writes Percentage

FIGURE 2-5 The Metrics tab

All three of these metrics are shown relative to the DTU of your database. If you reach 80 percent of your performance metrics, it’s time to consider increasing your service tier or performance level. If you’re consistently below 10 percent of the DTU, you might consider decreasing your service tier or performance level. Be aware of momentary spikes in usage when making your choice.

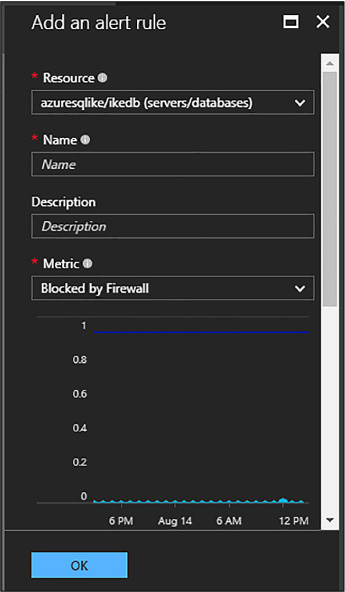

In addition, you can configure an email alert for when your metrics are 80 percent of your selected DTU by completing the following steps:

Click the metric.

Click Add Rule.

The first page of the Create Alert Rule dialog box is shown in Figure 2-6. Add a name and description, and then click the right arrow.

FIGURE 2-6 The first page of the Add An Alert Rule dialog box

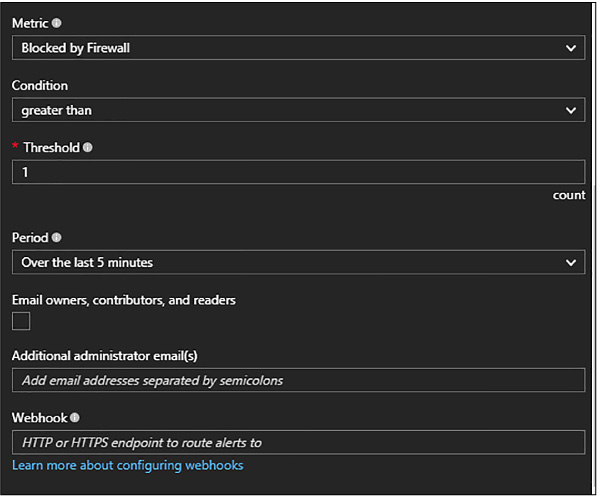

Scroll down for the rest of the page of the Create Alert Rule dialog box, shown in Figure 2-7, select the condition and the threshold value.

FIGURE 2-7 The second page of the Create Alert Rule dialog box

Select your alert evaluation window. An email will be generated if the event happens over a specific duration. You should indicate at least 10 minutes.

Select the action. You can choose to send an email either to the service administrator(s) or to a specific email address.

Azure SQL Database does a full backup every week, a differential backup each day, and an incremental log backup every five minutes. The incremental log backup allows for a point in time restore, which means the database can be restored to any specific time of day. This means that if you accidentally delete a customer’s table from your database, you will be able to recover it with minimal data loss if you know the timeframe to restore from that has the most recent copy.

The length of time it takes to do a restore varies. The further away you get from the last differential backup determines the longer the restore operation takes because there are more log backups to restore. When you restore a new database, the service tier stays the same, but the performance level changes to the minimum level of that tier.

Depending on your service tier, you will have different backup retention periods. Basic retains backups for 7 days. Standard and premium retains for 35 days.

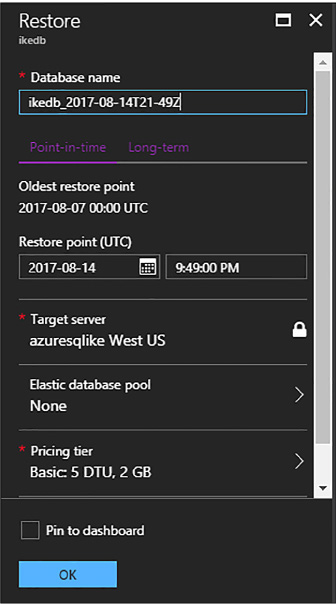

You can restore a database that was deleted as long as you are within the retention period. Follow these steps to restore a database:

Select the database you want to restore, and then click Restore.

The Restore dialog box opens, as shown in Figure 2-8.

FIGURE 2-8 The Restore dialog box

Select a database name.

Select a restore point. You can use the slider bar or manually enter a date and time.

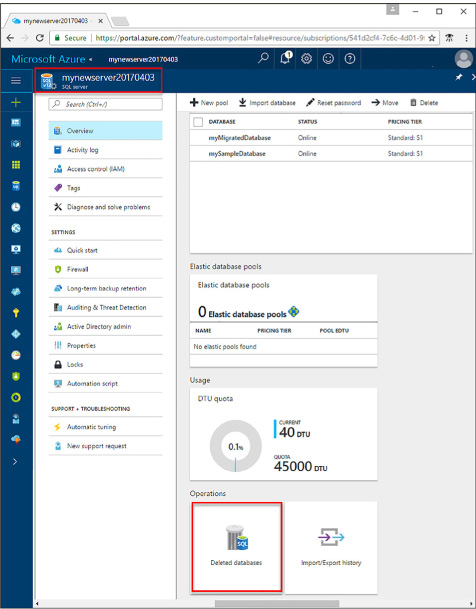

You can also restore a deleted database. Click on the SQL Server (not the database) that once held the database you wish to restore. Select the Deleted Databases tab, as shown in Figure 2-9.

FIGURE 2-9 The Deleted Databases tab for SQL databases in the management portal

Select the database you want to restore.

Click Restore as you did in Step 1.

Specify a database name for the new database.

Click Submit.

Every Azure SQL Database subscription has built-in redundancy. Three copies of your data are stored across fault domains in the datacenter to protect against server and hardware failure. This is built in to the subscription price and is not configurable.

In addition, you can configure active geo-replication. This allows your data to be replicated between Azure data centers. Active geo-replication has the following benefits:

![]() Database-level disaster recovery goes quickly when you’ve replicated transactions to databases on different SQL Database servers in the same or different regions.

Database-level disaster recovery goes quickly when you’ve replicated transactions to databases on different SQL Database servers in the same or different regions.

![]() You can fail over to a different data center in the event of a natural disaster or other intentionally malicious act.

You can fail over to a different data center in the event of a natural disaster or other intentionally malicious act.

![]() Online secondary databases are readable, and they can be used as load balancers for read-only workloads such as reporting.

Online secondary databases are readable, and they can be used as load balancers for read-only workloads such as reporting.

![]() With automatic asynchronous replication, after an online secondary database has been seeded, updates to the primary database are automatically copied to the secondary database.

With automatic asynchronous replication, after an online secondary database has been seeded, updates to the primary database are automatically copied to the secondary database.

To create an offline secondary database in the portal, follow these steps:

Navigate to your SQL database in the management portal accessed via https://portal.azure.com.

Scroll to the Geo Replication section, and click the Configure Geo Replication box.

On the Geo Replication blade, select your target region.

On the Create Secondary blade, click Create.

Note: Uses for Creating an Offline Secondary

Another use for this feature has to do with the ability to terminate the continuous copy relationship between a primary and secondary database. You can terminate the relationship and then upgrade the primary database to a different schema to support a software upgrade. The secondary database gives you a rollback option.

Before you create an online secondary, the following requirements must be met:

![]() The secondary database must have the same name as the primary.

The secondary database must have the same name as the primary.

![]() They must be on separate servers.

They must be on separate servers.

![]() They both must be on the same subscription.

They both must be on the same subscription.

![]() The secondary server cannot be a lower performance tier than the primary.

The secondary server cannot be a lower performance tier than the primary.

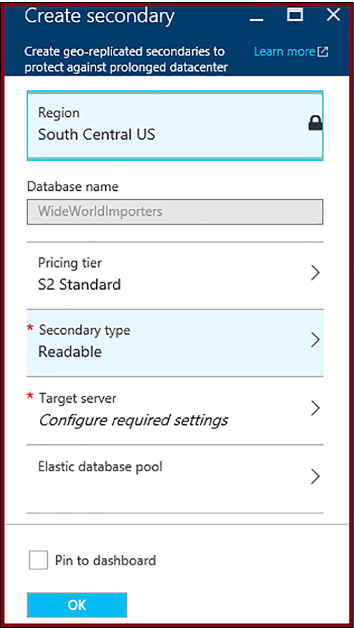

The steps for configuring an active secondary is the same as creating an offline secondary, except you can select the target region, as shown in Figure 2-10.

FIGURE 2-10 The New Secondary For Geo Replication dialog box for creating an active secondary

To create an online secondary in the portal, follow these steps:Navigate to your SQL database in the management portal accessed via https://portal.azure.com.

On the Create Secondary blade, change the Secondary Type to Readable.

Click Create to create the secondary.

The on-premise version of Microsoft SQL Server has long had the ability to export and import data using a BACPAC file. This file will also work with Azure SQL Database. A BACPAC file is just a ZIP file that contains all of the metadata and state data of a SQL Server database.

The easiest way to import schema and data from an on-premise SQL Server into an Azure SQL Database is to use SQL Server Management Studio (SSMS). The general steps are:

Export source database using SSMS

Import database to a new destination using SSMS.

Open SQL Server Management Studio

Right-click on the source database, click Tasks, and click Export Data-tier Application (see Figure 2-11).

![]()

FIGURE 2-11 SSMS Export Data-tier right-click menu

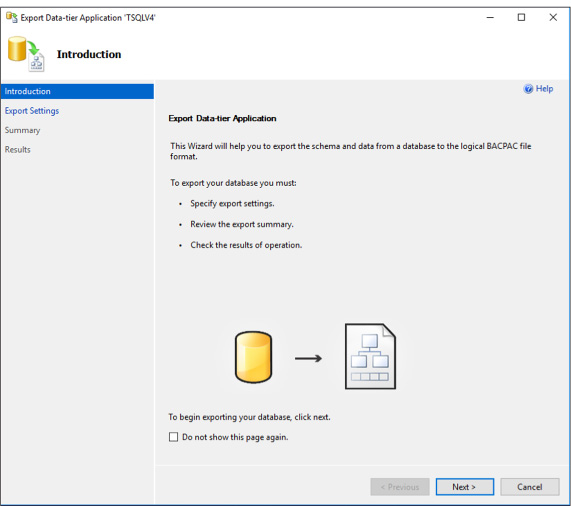

Click Next on the Welcome screen (Figure 2-12).

FIGURE 2-12 Welcome screen for BACPAC process

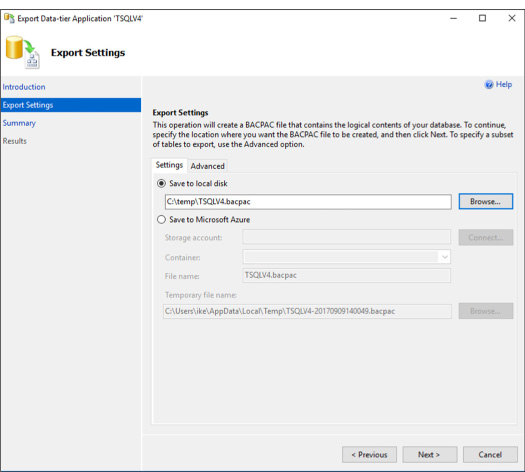

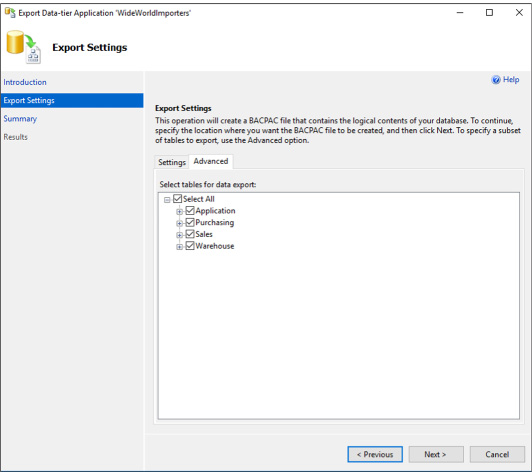

In the Export Settings screen, you can choose where the BACPAC file should be stored. You can either save it to a local disk or save it in an Azure Storage blob container. Either method is easy to use when you import the BACPAC file (Figure 2-13).

FIGURE 2-13 Location for BACPAC file

On the Advanced tab (Figure 2-14), you can selective choose specific tables or schemas or the entire database.

FIGURE 2-14 The advanced tab for selecting the correct tables and schema

Then click Finish and we’re all done.

Connect to your Azure SQL Database using SSMS.

You may need to log into the portal and allow your IP address in to the built-in firewall used by Azure SQL Database. More information can be found here: https://docs.microsoft.com/en-us/azure/sql-database/sql-database-firewall-configure.

Right-click on the database folder and click Import Data-tier Application.

Click Next.

Choose the correct BACPAC file and click Next.

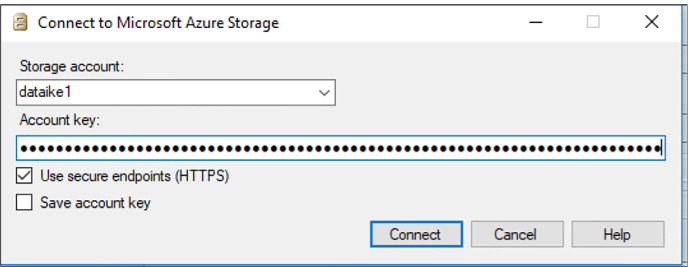

In the next screen (Figure 2-15), click Connect and enter your storage account name and account key.

FIGURE 2-15 The Connect To Microsoft Azure Storage screen

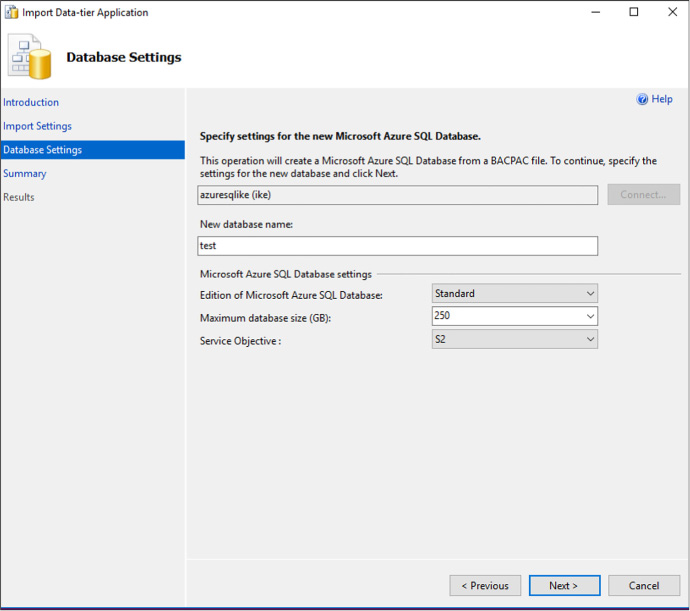

Name the new database and select the pricing tier (see Figure 2-16). Warning: this option determines pricing. If you are just experimenting, choose Basic under the Edition of Microsoft Azure SQL Database.

FIGURE 2-16 Choosing the database name and pricing tier

Click Next and Finish.

The schema and data will import into the new database that you’ve named.

There are two methods for preparing a relational database for a high transaction load. First, we can scale-up. This means that we will add CPU, memory, and better disk i/o to handle the load. In Azure SQL Database, scaling up is very simple: we just move the slider bar over to the right or choose a new pricing tier. This will give us the ability to handle more DTUs. Under a very high load, we might not be able to scale-up much futher. That would mean we’d have to use our second method, scale-out.

Scaling out a database means that we would break apart a large database into small portions. This is called sharding. We would put one portion of our data in one database and another portion of our data in a different database. We can do this by function, by date, by geo-location of our brand offices, by business unit, or some other method.

We may also shard a database simply because it is too large to be stored in a single Azure SQL Database. Or it is too much data to backup and restore in a reasonable amount of time. We may also shard data because we are a software company and our customers require that their data is stored away from our other customers, effectively giving us one database per customer.

Sharding is burdensome in a transactional system because it usually involves rewriting a significant portion of our applications to handle multiple databases. Also, if we get the sharding boundaries wrong, we might not actually improve performance. For instance, what if we often join data from one database with data from a different database? Now we’re locking resources while we wait for the slower database to respond. This can compound our concurrency, blocking, and deadlocking issues that we might have led us towards scaling-out in the first place.

Some of these issues are solved with a shard map. This is usually a table or database that tells the application where data actually is and where to go looking for it. This allows us to move data around and update the shard map, thus avoiding significant rewriting of our application. If implemented correctly, shard maps can allow us to add more databases or delete database as necessary. This may give us the elasticity that may have eluded us on the database thus far.

You’ll note that sharding is easily implemented in Azure Table Storage and Azure Cosmos DB, but is significantly more difficult in a relational database like Azure SQL Database. The complexity comes from being transactionally consistent while having data available and spread throughout several databases.

Microsoft has released a set of tools called Elastic Database Tools that are compatible with Azure SQL Database. This client library can be used in your application to create sharded databases. It has a split-merge tool that will allow you to create new nodes or drop nodes without data loss. It also includes a tool that will keep schema consistent across all the nodes by running scripts on each node individually.

The main power of the Elastic Database Tools is the ability to fan-out queries across multiple shards without a lot of code changes. Follow these general steps to use a sharded database:

Get a Shard Map.

![]() There are several different types of shard maps, for instance range shard map will tell you what range of values exist in which databases. If we were to divide our data by customer ID, then we would make sure all tables in our database included a customer ID. We could grab anything about that customer, including their contacts, orders, invoices, payments, customer service disputes, and employees as long as we have the correct customer ID. A shard map might look like this:

There are several different types of shard maps, for instance range shard map will tell you what range of values exist in which databases. If we were to divide our data by customer ID, then we would make sure all tables in our database included a customer ID. We could grab anything about that customer, including their contacts, orders, invoices, payments, customer service disputes, and employees as long as we have the correct customer ID. A shard map might look like this:

![]() 1 – 100 = Database1

1 – 100 = Database1

![]() 101 – 200 = Database2

101 – 200 = Database2

![]() 202 – 300 = Database 3

202 – 300 = Database 3

Create a MultiShareConnection Object

![]() This is similar to a regular SqlConnection object, except in represents a connection to a set of shards.

This is similar to a regular SqlConnection object, except in represents a connection to a set of shards.

Create a multi-shard command.

Set the CommandText property

ExecuteReader

View the results using the MultiShardDataReader class.

Assuming you had a ShardMap object, the query would look like this:

using (MultiShardConnection conn = new MultiShardConnection(

myShardMap.GetShards(),

myShardConnectionString)

)

{

using (MultiShardCommand cmd = conn.CreateCommand())

{

cmd.CommandText = "SELECT c1, c2, c3 FROM ShardedTable";

cmd.CommandType = CommandType.Text;

cmd.ExecutionOptions = MultiShardExecutionOptions.IncludeShardNameColumn;

cmd.ExecutionPolicy = MultiShardExecutionPolicy.PartialResults;

using (MultiShardDataReader sdr = cmd.ExecuteReader())

{

while (sdr.Read())

{

var c1Field = sdr.GetString(0);

var c2Field = sdr.GetFieldValue<int>(1);

var c3Field = sdr.GetFieldValue<Int64>(2);

}

}

}

}

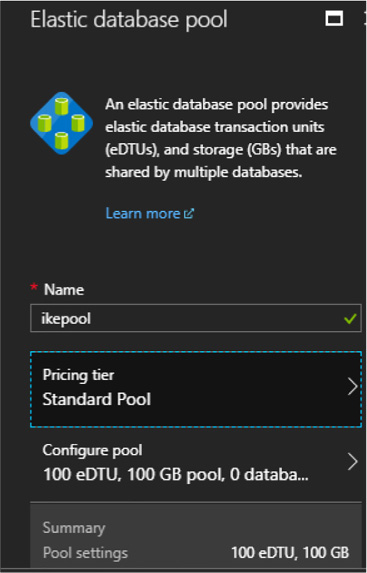

A single SQL Database server can have several databases on it. Those databases can each have their own size and pricing tier. This might work out well if we always know exactly how large each database will be and how many DTUs are needed for them individually. What happens if we don’t really know that? Or we’d like the databases on a single server to share a DTU pool? Elastic pools (not to be confused with the last topic, Elastic Tools) are used to do exactly this: share DTUs across databases on a single server.

Elastic pools enable the user to purchase elastic Database Transaction Units (eDTUs) for a pool of multiple databases. The user adds databases to the pool, sets the minimum and maximum eDTUS for each database, and sets the eDTU limit of the pool based on their budget. This means that within the pool, each database is given the ability to auto-scale in a set range.

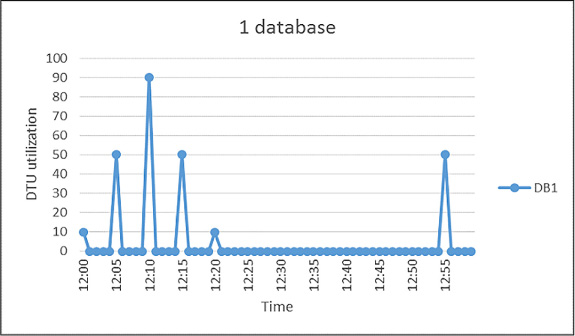

In Figure 2-17, you will see a database that spends most of its time idle, but occasionally spikes in activity. This database is a good candidate for an Elastic pool.

FIGURE 2-17 Choosing the right database to participate in the pool

To create an Elastic pool, follow these steps:

Click on your database server and click New Pool.

![]() The new pool pane appears (Figure 2-18).

The new pool pane appears (Figure 2-18).

FIGURE 2-18 Creating an Elastic pool

Name the pool a unique name.

Choose a pricing tier for the pool.

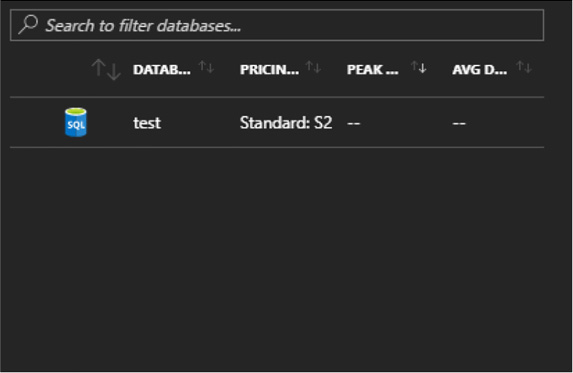

To choose the databases you want to participate in the pool, click Configure Pool. This pane appears in Figure 2-19.

FIGURE 2-19 Choosing the databases that participate in the Elastic pool

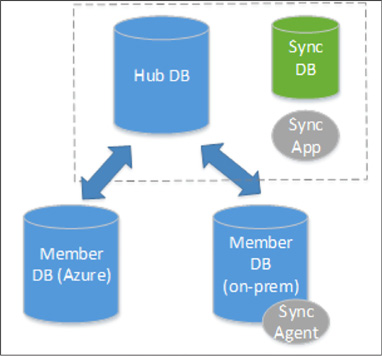

SQL Data Sync is a new service for Azure SQL Database. It allows you to bi-directionally replicate data between two Azure SQL Databases or between an Azure SQL Database and an on-premise SQL Server.

A Sync Group is a group of databases that you want to synchronize using Azure SQL Data Sync. A Sync Schema is the data you want to synchronize. Sync Direction allows you to synchronize data in either one direction or bi-directionally. Sync Interval controls how often synchronization occurs. Finally, a Conflict Resolution Policy determines who wins if data conflicts with one another.

The following diagram (Figure 2-20) shows how Azure Data Sync keeps multiple databases consistent with each other.

FIGURE 2-20 Azure Data Sync diagram

The hub database must always be an Azure SQL Database. A member database can either be Azure SQL Database or an on-premise SQL Server.

It is important to note that this is a method to of keeping data consistent across multiple databases, it is not an ETL tool. This should not be used to populate a data warehouse or to migrate an on-premise SQL Server to the cloud. This can be used to populate a read-only version of the database for reporting, but only if the schema will be 100% consistent.

More Info: Azure SQL Data Sync

Here’s a tutorial for creating a Data Sync Group: https://docs.microsoft.com/en-us/azure/sql-database/sql-database-get-started-sql-data-sync.

SQL Server 2017 introduces a new graph database feature. This feature hasn’t been released in the on-premise edition as of this writing, but should be available in Azure SQL Database by the time this book is released. We discuss graph databases in the next section on Azure Cosmos DB as well.

So far, we’ve discussed a NoSQL solution when we covered Azure Storage Tables. That was a key-value store. We will cover a different type of NoSQL solution, JSON document storage, when we examine Azure Cosmos DB DocumentDB. Graph databases are yet another NoSQL solution. Graph database introduce two new vocabulary words: nodes and relationships.

Nodes are entities in relational database terms. Each node is popularly a noun, like a person, an event, an employee, a product, or a car. A relationship is similar to a relationship in SQL Server in that it defines that a connection exists between nouns. Where the relationship in graph databases differ is that it is hierarchal in nature, where it tends to be flat in SQL Server, PostgresSQL, and other relational storage engines.

A graph is an abstract representation of a set of objects where nodes are linked with relationships in a hierarchy. A graph database is a database with an explicit and enforceable graph structure. Another key difference between a relational storage engine and a graph database storage engine is that as the number of nodes increase, the performance cost stays the same. Any relational database professional will tell you that joining tables will burden the engine and be a common source of performance issues when scaling. Graph databases don’t suffer from that issue. Also, entities can be connected with each other through several different paths.

So where relational databases are optimized for aggregation, graph databases are optimized for having plenty of connections between nodes. Graph databases are popularly traversed through a domain specific language (DSL) called Gremlin.

In Azure SQL Database, graph-like capabilities are implemented through T-SQL. Graph databases popularly have several different relationship types that are possible between nodes. Azure SQL Database only has many-to-many relationships.

You can create graph objects in T-SQL with the following syntax:

CREATE TABLE Person (ID INTEGER PRIMARY KEY, Name VARCHAR(100), Age INT) AS NODE;

CREATE TABLE friends (StartDate date) AS EDGE;

This is very similar to the standard CREATE TABLE syntax, with the added “AS NODE” or “AS EDGE” at the end.

Azure SQL Database supports new query syntax for traversing the graph hierarchy. This query looks something like this:

SELECT Restaurant.name

FROM Person, likes, Restaurant

WHERE MATCH (Person-(likes)->Restaurant)

AND Person.name = 'John';

Notice the MATCH keyword in the T-SQL WHERE clause. This will show us every person that likes a restaurant named John.

More Info: Azure SQL Graph

Here's a tutorial for creating a graph relationships that currently works with Azure SQL Database: https://docs.microsoft.com/en-us/sql/relational-databases/graphs/sql-graph-sample.