Chapter 1. Preparing our IoT Projects

This book presents a series of projects for Raspberry Pi that cover common and useful use cases within the Internet of Things (IoT). These projects include the following:

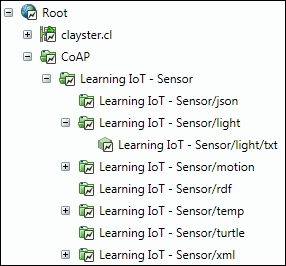

Sensor: This project is used to sense physical values and publish them together with metadata on the Internet in various ways.

Actuator: This performs actions in the physical world based on commands it receives from the Internet.

Controller: This is a device that provides application intelligence to the Internet.

Camera: This is a device that publishes a camera through which you will take pictures.

Bridge: This is the fifth and final project, which is a device that acts as a bridge between different protocols. We will cover this at an introductory level later in the book (Chapter 8, Creating Protocol Gateways, if you would like to take a look at it now), as it relies on the IoT service platform.

Before delving into the different protocols used in Internet of Things, we will dedicate some time in this chapter to set up some of these projects, present circuit diagrams, and perform basic measurement and control operations, which are not specific to any communication protocol. The following chapters will then use this code as the basis for the new code presented in each chapter.

Along with the project preparation phase, you will also learn about some of the following concepts in this chapter:

Development using C# for Raspberry Pi

The basic project structure

Introduction to Clayster libraries

The sensor, actuator, controller, and camera projects

Interfacing the General Purpose Input/Output pins

Circuit diagrams

Hardware interfaces

Introduction to interoperability in IoT

Data persistence using an object database

Our first project will be the sensor project. Since it is the first one, we will cover it in more detail than the following projects in this book. Most of what we explore here will be reused in the other projects as far as possible. The development of the sensor is broken down into six steps, and the source code for each step can be downloaded separately. Here is a brief overview:

Firstly, you will set up the basic structure of a console application.

Then, you will configure the hardware and learn to sample sensor values and maintain a useful historical record.

After adding HTTP server capabilities as well as useful web resources to the project, you will publish the sensor values collected on the Internet.

You will then handle persistence of sampled data in the sensor so it can resume after outages or software updates.

The next step will teach you how to add a security layer, requiring user authentication to access sensitive information, on top of the application.

In the last step, you will learn how to overcome one of the major obstacles in the request/response pattern used by HTTP, that is, how to send events from the server to the client.

Tip

Only the first two steps are presented here, and the rest in the following chapter, since they introduce HTTP. The fourth step will be introduced in this chapter but will be discussed in more detail in Appendix C, Object Database.

I assume that you are familiar with Raspberry Pi and have it configured. If not, refer to http://www.raspberrypi.org/help/faqs/#buyingWhere.

In our examples, we will use Model B with the following:

An SD card with the Raspbian operating system installed

A configured network access, including Wi-Fi, if used

User accounts, passwords, access rights, time zones, and so on, all configured correctly

Note

I also assume that you know how to create and maintain terminal connections with the device and transfer files to and from the device.

All our examples will be developed on a remote PC (for instance, a normal working laptop) using C# (C++++ if you like to think of it this way), as this is a modern programming language that allows us to do what we want to do with IoT. It also allows us to interchange code between Windows, Linux, Macintosh, Android, and iOS platforms.

Tip

Don't worry about using C#. Developers with knowledge in C, C++, or Java should have no problems understanding it.

Once a project is compiled, executable files are deployed to the corresponding Raspberry Pi (or Raspberry Pi boards) and then executed. Since the code runs on .NET, any language out of the large number of CLI-compatible languages can be used.

Tip

Development tools for C# can be downloaded for free from http://xamarin.com/.

To prepare Raspberry Pi for the execution of the .NET code, we need to install Mono, which contains the Common Language Runtime for .NET that will help us run the .NET code on Raspberry Pi. This is done by executing the following commands in a terminal window on Raspberry Pi:

$ sudo apt-get update

$ sudo apt-get upgrade

$ sudo apt-get install mono-complete

Your device is now ready to run the .NET code.

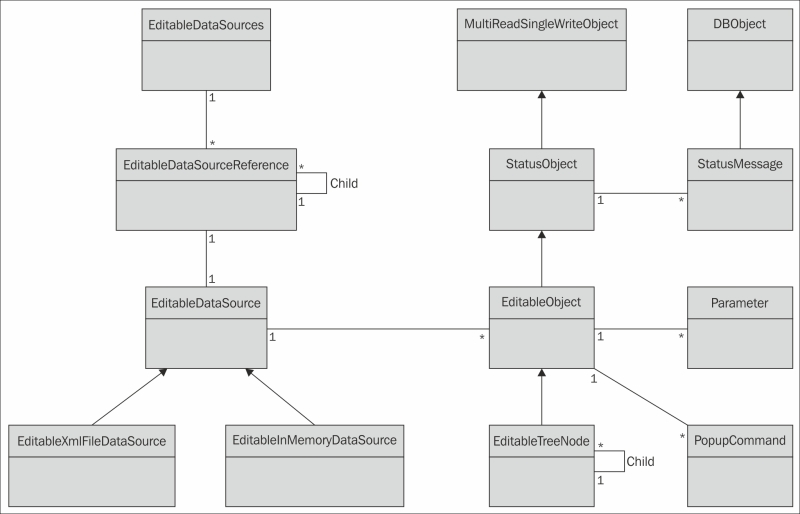

To facilitate the development of IoT applications, this book provides you with the right to use seven Clayster libraries for private and commercial applications. These are available on GitHub with the downloadable source code for each chapter. Of these seven libraries, two are provided with the source code so that the community can extend them as they desire. Furthermore, the source code of all the examples shown in this book is also available for download.

The following Clayster libraries are included:

| Library | Description |
|---|---|
| Clayster.Library.Data | This provides the application with a powerful object database. Objects are persisted and can be searched directly in the code using the object's class definition. No database coding is necessary. Data can be stored in the SQLite database provided in Raspberry Pi. |
| Clayster.Library.EventLog | This provides the application with an extensible event logging architecture that can be used to get an overview of what happens in a network of things. |
| Clayster.Library.Internet | This contains classes that implement common Internet protocols. Applications can use these to communicate over the Internet in a dynamic manner. |
| Clayster.Library.Language | This provides mechanisms to create localizable applications that are simple to translate and that can work in an international setting. |
| Clayster.Library.Math | This provides a powerful, extensible mathematical scripting language that can help with automation, scripting, graph plotting, and more. |
| Clayster.Library.IoT | This provides classes that help applications become interoperable by providing data representation and parsing capabilities of data in IoT. The source code is also included here. |
| Clayster.Library.RaspberryPi | This contains a Hardware Abstraction Layer (HAL) for Raspberry Pi. It provides object-oriented interfaces to interact with devices connected to the General Purpose Input/Output (GPIO) pins available. The source code is also included here. |

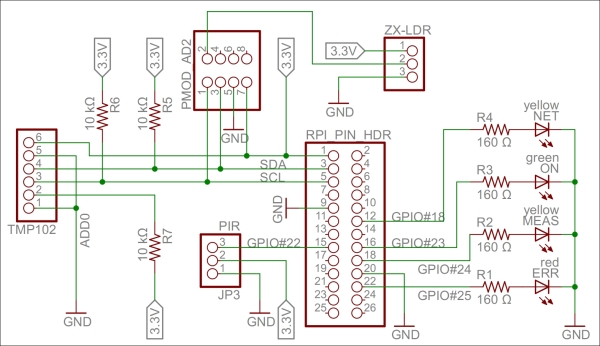

Our sensor prototype will measure three things: light, temperature, and motion. To summarize, here is a brief description of the components:

The light sensor is a simple ZX-LDR analog sensor that we will connect to a four-channel (of which we use only one) analog-to-digital converter (Digilent Pmod AD2), which is connected to an I2C bus that we will connect to the standard GPIO pins for I2C.

Note

The I2C bus permits communication with multiple circuits using synchronous communication that employs a Serial Clock Line (SCL) and Serial Data Line (SDA) pin. This is a common way to communicate with integrated circuits.

The temperature sensor (Texas Instruments TMP102) connects directly to the same I2C bus.

The SCL and SDA pins on the I2C bus use recommended pull-up resistors to make sure they are in a high state when nobody actively pulls them down.

The infrared motion detector (Parallax PIR sensor) is a digital input that we connect to GPIO 22.

We also add four LEDs to the board. One of these is green and is connected to GPIO 23. This will show when the application is running. The second one is yellow and is connected to GPIO 24. This will show when measurements are done. The third one is yellow and is connected to GPIO 18. This will show when an HTTP activity is performed. The last one is red and is connected to GPIO 25. This will show when a communication error occurs.

Each pin that controls an LED is connected through a 160 Ω resistor to the LED, and then to ground. All the hardware of the prototype board is powered by the 3.3 V source provided by Raspberry Pi. A 160 Ω resistor connected in series with the LED limits the current to at most about 20 mA (3.3 V / 160 Ω), which is enough for the LED to emit a bright light.
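The 20 mA figure can be checked with Ohm's law. The sketch below first neglects the forward voltage of the LED, and then repeats the calculation with an assumed forward voltage of V_f = 2.0 V. That value is a typical figure for a red LED and is an assumption made for illustration, not a number taken from the bill of materials:

```latex
I_{\max} = \frac{V}{R} = \frac{3.3\,\mathrm{V}}{160\,\Omega} \approx 20.6\,\mathrm{mA}
\qquad
I = \frac{V - V_f}{R} = \frac{3.3\,\mathrm{V} - 2.0\,\mathrm{V}}{160\,\Omega} \approx 8.1\,\mathrm{mA}
```

In practice, the current through each LED therefore stays below the upper bound of roughly 20 mA.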

Tip

For an introduction to GPIO on Raspberry Pi, please refer to http://www.raspberrypi.org/documentation/usage/gpio/.

Two guides on GPIO pins can be found at http://elinux.org/RPi_Low-level_peripherals.

For more information, refer to http://pi.gadgetoid.com/pinout.

The following figure shows a circuit diagram of our prototype board:

A circuit diagram for the Sensor project

Tip

For a bill of materials containing the components used, see Appendix R, Bill of Materials.

We also need to create a console application project in Xamarin. Appendix A, Console Applications, details how to set up a console application in Xamarin and how to enable event logging and then compile, deploy, and execute the code on Raspberry Pi.

Interaction with our hardware is done using corresponding classes defined in the Clayster.Library.RaspberryPi library, for which the source code is provided. For instance, digital output is handled using the DigitalOutput class and digital input with the DigitalInput class. Devices connected to an I2C bus are handled using the I2C class. There are also other generic classes, such as ParallelDigitalInput and ParallelDigitalOutput, that handle a series of digital input and output at once. The SoftwarePwm class handles a software-controlled pulse-width modulation output. The Uart class handles communication using the UART port available on Raspberry Pi. There's also a subnamespace called Devices where device-specific classes are available.

In the end, all classes communicate with the static GPIO class, which is used to interact with the GPIO layer in Raspberry Pi.

Each class has a constructor that initializes the corresponding hardware resource, methods and properties to interact with the resource, and finally a Dispose method that releases the resource.

Tip

It is very important that you release the hardware resources allocated before you terminate the application. Since hardware resources are not controlled by the operating system, the fact that the application is terminated is not sufficient to release the resources. For this reason, make sure you call the Dispose methods of all the allocated hardware resources before you leave the application. Preferably, this should be done in the finally statement of a try-finally block.
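The try-finally pattern recommended in the tip can be sketched as follows. This is a minimal, self-contained illustration: FakeOutput is a hypothetical stand-in for a hardware class such as DigitalOutput, introduced only so that the example runs without the Clayster libraries or a Raspberry Pi.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a hardware resource such as DigitalOutput.
// It only records whether Dispose has been called.
public sealed class FakeOutput : IDisposable
{
    public int Pin { get; private set; }
    public bool Disposed { get; private set; }

    public FakeOutput(int pin)
    {
        Pin = pin;
    }

    public void Dispose()
    {
        Disposed = true;
    }
}

public static class DisposeSketch
{
    // Runs the given work and releases every resource afterwards,
    // even if the work throws an exception.
    public static void Run(IEnumerable<FakeOutput> resources, Action work)
    {
        try
        {
            work();
        }
        finally
        {
            foreach (FakeOutput resource in resources)
                resource.Dispose();
        }
    }
}
```

Because the release happens in the finally block, the resources are disposed whether the application logic returns normally or fails with an exception.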

The hardware interfaces used for our LEDs are as follows:

private static DigitalOutput executionLed = new DigitalOutput (23, true);

private static DigitalOutput measurementLed = new DigitalOutput (24, false);

private static DigitalOutput errorLed = new DigitalOutput (25, false);

private static DigitalOutput networkLed = new DigitalOutput (18, false);

We use a DigitalInput class for our motion detector:

private static DigitalInput motion = new DigitalInput (22);

With our temperature sensor on the I2C bus, which limits the serial clock frequency to a maximum of 400 kHz, we interface as follows:

private static I2C i2cBus = new I2C (3, 2, 400000);

private static TexasInstrumentsTMP102 tmp102 = new TexasInstrumentsTMP102 (0, i2cBus);

We interact with the light sensor using an analog-to-digital converter as follows:

private static AD799x adc = new AD799x (0, true, false, false, false, i2cBus);

The sensor data values will be represented by the following set of variables:

private static bool motionDetected = false;

private static double temperatureC;

private static double lightPercent;

private static object synchObject = new object ();

Historical values will also be kept so that trends can be analyzed:

private static List<Record> perSecond = new List<Record> ();

private static List<Record> perMinute = new List<Record> ();

private static List<Record> perHour = new List<Record> ();

private static List<Record> perDay = new List<Record> ();

private static List<Record> perMonth = new List<Record> ();

Note

Appendix B, Sampling and History, describes how to perform basic sensor value sampling and historical record keeping in more detail using the hardware interfaces defined earlier. It also describes the Record class.

Persisting data is simple. This is done using an object database. This object database analyzes the class definition of the objects to persist and dynamically creates the database schema to accommodate the objects you want to store. The object database is defined in the Clayster.Library.Data library. You first need a reference to the object database, declared as follows:

internal static ObjectDatabase db;

Then, you need to provide information on how to connect to the underlying database. This can be done in the .config file of the application or the code itself. In our case, we will specify a SQLite database and provide the necessary parameters in the code during the startup:

DB.BackupConnectionString = "Data Source=sensor.db;Version=3;";

DB.BackupProviderName = "Clayster.Library.Data.Providers." + "SQLiteServer.SQLiteServerProvider";

Finally, you will get a proxy object for the object database as follows. This object can be used to store, update, delete, and search for objects in your database:

db = DB.GetDatabaseProxy ("TheSensor");

Tip

Appendix C, Object Database, shows how the data collected in this application is persisted using only the available class definitions through the use of this object database.

By doing this, the sensor does not lose data if Raspberry Pi is restarted.

To facilitate the interchange of sensor data between devices, an interoperable sensor data format based on XML is provided in the Clayster.Library.IoT library. There, sensor data consists of a collection of nodes that report data ordered by timestamp. For each timestamp, a collection of fields is reported. Several field types are available: numerical, string, date and time, timespan, Boolean, and enumeration-valued fields. Each field has a field name, a field value of the corresponding type, an optional readout type (indicating whether the value is a momentary value, peak value, status value, and so on), a field status or Quality of Service value, and localization information.

The Clayster.Library.IoT.SensorData namespace helps us export sensor data information by providing an abstract interface called ISensorDataExport. The same logic can later be used to export to different sensor data formats. The library also provides a class named ReadoutRequest that provides information about what type of data is desired. We can use this to tailor the data export to the desires of the requestor.

The export starts by calling the Start() method on the sensor data export module and ends with a call to the End() method. Between these two, a sequence of StartNode() and EndNode() method calls are made, one for each node to export. To simplify our export, we then call another function to output data from an array of Record objects that contain our data. We use the same method to export our momentary values by creating a temporary Record object that would contain them:

private static void ExportSensorData (ISensorDataExport Output, ReadoutRequest Request)

{

Output.Start ();

lock (synchObject)

{

Output.StartNode ("Sensor");

Export (Output, new Record[]

{

new Record (DateTime.Now, temperatureC, lightPercent, motionDetected)

}, ReadoutType.MomentaryValues, Request);

Export (Output, perSecond, ReadoutType.HistoricalValuesSecond, Request);

Export (Output, perMinute, ReadoutType.HistoricalValuesMinute, Request);

Export (Output, perHour, ReadoutType.HistoricalValuesHour, Request);

Export (Output, perDay, ReadoutType.HistoricalValuesDay, Request);

Export (Output, perMonth, ReadoutType.HistoricalValuesMonth, Request);

Output.EndNode ();

}

Output.End ();

}

For each array of Record objects, we then export them as follows:

Note

It is important to note here that we need to check whether the corresponding readout type is desired by the client before exporting data of this type.

The Export method takes an enumeration of Record objects. It first checks whether the corresponding readout type is desired by the client. For each record, it then checks whether the timestamp falls within any time interval requested and whether each field is of interest to the client. If a data field passes all these tests, it is exported by calling one of the overloads of the ExportField() method, available on the sensor data export object. Fields are exported between the StartTimestamp() and EndTimestamp() method calls, which define the timestamp that corresponds to the fields being exported:

private static void Export(ISensorDataExport Output, IEnumerable<Record> History, ReadoutType Type, ReadoutRequest Request)

{

if((Request.Types & Type) != 0)

{

foreach(Record Rec in History)

{

if(!Request.ReportTimestamp (Rec.Timestamp))

continue;

Output.StartTimestamp(Rec.Timestamp);

if (Request.ReportField("Temperature"))

Output.ExportField("Temperature",Rec.TemperatureC, 1,"C", Type);

if(Request.ReportField("Light"))

Output.ExportField("Light",Rec.LightPercent, 1, "%", Type);

if(Request.ReportField ("Motion"))

Output.ExportField("Motion",Rec.Motion, Type);

Output.EndTimestamp();

}

}

}
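The first test in Export, (Request.Types & Type) != 0, treats the readout type as a set of bit flags. The following is a minimal sketch of that idea. SketchReadoutType is a hypothetical enumeration whose member names and bit values are illustrative assumptions; they are not the definitions found in Clayster.Library.IoT.

```csharp
using System;

// Hypothetical flag enumeration; each member occupies its own bit.
// The values are assumptions made for this sketch only.
[Flags]
public enum SketchReadoutType
{
    None = 0,
    MomentaryValues = 1,
    HistoricalValuesDay = 2,
    HistoricalValuesMonth = 4
}

public static class ReadoutFilterSketch
{
    // Returns true if the requested set of types includes the given type.
    public static bool IsRequested(SketchReadoutType requested, SketchReadoutType type)
    {
        return (requested & type) != 0;
    }
}
```

A client that asks for momentary and historical day values would combine the two flags with the | operator. A bitwise AND with any other single flag then yields zero, so records of that type are skipped.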

We can test the method by exporting some sensor data to XML using the SensorDataXmlExport class. It implements the ISensorDataExport interface. The result would look something like this if you export only momentary and historic day values.

Note

The ellipsis (…) represents a sequence of historical day records, similar to the one that precedes it. Newlines and indentation have been inserted for readability.

<?xml version="1.0"?>

<fields xmlns="urn:xmpp:iot:sensordata">

<node nodeId="Sensor">

<timestamp value="2014-07-25T12:29:32Z">

<numeric value="19.2" unit="C" automaticReadout="true" momentary="true" name="Temperature"/>

<numeric value="48.5" unit="%" automaticReadout="true" momentary="true" name="Light"/>

<boolean value="true" automaticReadout="true" momentary="true" name="Motion"/>

</timestamp>

<timestamp value="2014-07-25T04:00:00Z">

<numeric value="20.6" unit="C" automaticReadout="true" name="Temperature" historicalDay="true"/>

<numeric value="13.0" unit="%" automaticReadout="true" name="Light" historicalDay="true"/>

<boolean value="true" automaticReadout="true" name="Motion" historicalDay="true"/>

</timestamp>

...

</node>

</fields>
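To get a feel for the structure of this XML, the following sketch reads the momentary numeric fields from such a document using only the standard System.Xml classes. It is an illustration under stated assumptions, not the parser provided by Clayster.Library.IoT: the class and method names (SensorXmlSketch, MomentaryNumerics) are hypothetical, and only the namespace and attribute names are taken from the output shown above.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Xml;

public static class SensorXmlSketch
{
    // Namespace taken from the example output above.
    private const string Ns = "urn:xmpp:iot:sensordata";

    // Returns the momentary numeric fields (name -> value) found in the XML.
    public static Dictionary<string, double> MomentaryNumerics(string xml)
    {
        XmlDocument doc = new XmlDocument();
        doc.LoadXml(xml);

        // The elements live in a default namespace, so XPath needs a prefix.
        XmlNamespaceManager mgr = new XmlNamespaceManager(doc.NameTable);
        mgr.AddNamespace("sd", Ns);

        Dictionary<string, double> result = new Dictionary<string, double>();

        foreach (XmlElement e in doc.SelectNodes("//sd:numeric[@momentary='true']", mgr))
        {
            // Use the invariant culture so "19.2" parses the same everywhere.
            double value = double.Parse(e.GetAttribute("value"), CultureInfo.InvariantCulture);
            result[e.GetAttribute("name")] = value;
        }

        return result;
    }
}
```

Applied to the example above, the method would return the Temperature and Light fields of the first timestamp, since only those numeric elements carry momentary="true".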

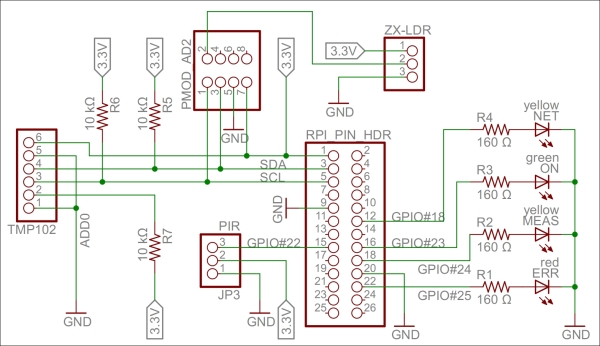

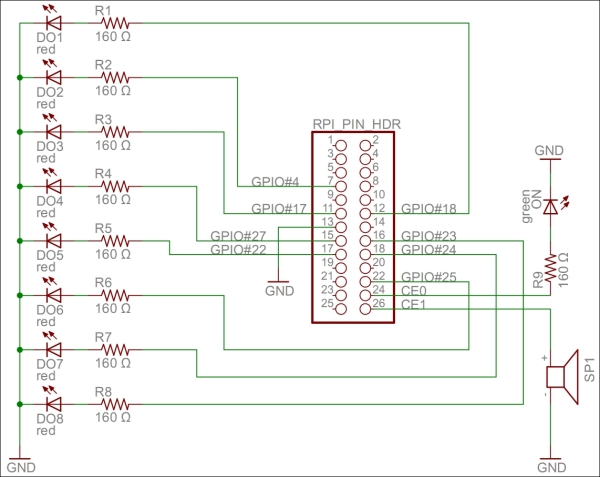

Another very common type of object used in automation and IoT is the actuator. While the sensor is used to sense physical magnitudes or events, an actuator is used to control events or act on the physical world. We will create a simple actuator that can be run on a standalone Raspberry Pi. This actuator will have eight digital outputs and one alarm output. The actuator will not contain any control logic of its own. Instead, interfaces will be published, thereby making it possible for controllers to use the actuator for their own purposes.

Note

In the sensor project, we went through the details on how to create an IoT application based on HTTP. In this project, we will reuse much of what has already been done and not explicitly go through these steps again. We will only list what is different.

Our actuator prototype will control eight digital outputs and one alarm output:

Each of the digital outputs is connected through a 160 Ω resistor and a red LED to ground. If the output is high, the LED is turned on. We have connected the LEDs to the GPIO pins in this order: 18, 4, 17, 27, 22, 25, 24, and 23. If Raspberry Pi R1 is used, GPIO pin 27 should be renumbered to 21.

For the alarm output, we connect a speaker to GPIO pin 7 (CE1) and then to ground. We also add a connection from GPIO 8 (CE0) through a 160 Ω resistor to a green LED, and then to ground. The green LED will show when the application is being executed.

Tip

For a bill of materials containing components used, refer to Appendix R, Bill of Materials.

The actuator project can be better understood with the following circuit diagram:

A circuit diagram for the actuator project

All the hardware interfaces except the alarm output are simple digital outputs. They can be controlled by the DigitalOutput class. The alarm output will control the speaker through a square wave signal that will be output on GPIO pin 7 using the SoftwarePwm class, which outputs a pulse-width-modulated square signal on one or more digital outputs. The SoftwarePwm class will only be created when the output is active. When not active, the pin will be left as a digital input.

The declarations look as follows:

private static DigitalOutput executionLed =

new DigitalOutput (8, true);

private static SoftwarePwm alarmOutput = null;

private static Thread alarmThread = null;

private static DigitalOutput[] digitalOutputs = new DigitalOutput[]

{

new DigitalOutput (18, false),

new DigitalOutput (4, false),

new DigitalOutput (17, false),

new DigitalOutput (27, false),// pin 21 on RaspberryPi R1

new DigitalOutput (22, false),

new DigitalOutput (25, false),

new DigitalOutput (24, false),

new DigitalOutput (23, false)

};

Digital output is controlled using the objects in the digitalOutputs array directly. The alarm is controlled by calling the AlarmOn() and AlarmOff() methods.

Tip

Appendix D, Control, details how these hardware interfaces are used to perform control operations.

We have a sensor that provides sensing and an actuator that provides actuating, but neither has any intelligence yet. The controller application provides intelligence to the network. It will consume data from the sensor, draw logical conclusions, and use the actuator to inform the world of its conclusions.

The controller we create will read the ambient light and motion detection values provided by the sensor. If it is dark and movement is detected, the controller will sound the alarm. The controller will also use the LEDs of the actuator to display how much light is being reported.

Tip

Of the three applications we have presented thus far, this application is the simplest to implement since it does not publish any information that needs to be protected. Instead, it uses two other applications through the interfaces they have published. The project does not use any particular hardware either.

The first step toward creating a controller is to access sensors from where relevant data can be retrieved. We will duplicate sensor data into these private member variables:

private static bool motion = false;

private static double lightPercent = 0;

private static bool hasValues = false;

In the following chapter, we will show you different methods to populate these variables with values by using different communication protocols. Here, we will simply assume the variables have been populated by the correct sensor values.

We get help from Clayster.Library.IoT.SensorData to parse data in XML format, generated by the sensor data export we discussed earlier. So, all we need to do is loop through the fields that are received and extract the relevant information as follows. We return a Boolean value that would indicate whether the field values read were different from the previous ones:

private static bool UpdateFields(XmlDocument Xml)

{

FieldBoolean Boolean;

FieldNumeric Numeric;

bool Updated = false;

foreach (Field F in Import.Parse(Xml))

{

if(F.FieldName == "Motion" && (Boolean = F as FieldBoolean) != null)

{

if(!hasValues || motion != Boolean.Value)

{

motion = Boolean.Value;

Updated = true;

}

} else if(F.FieldName == "Light" && (Numeric = F as FieldNumeric) != null && Numeric.Unit == "%")

{

if(!hasValues || lightPercent != Numeric.Value)

{

lightPercent = Numeric.Value;

Updated = true;

}

}

}

return Updated;

}

The controller needs to calculate which LEDs to light along with the state of the alarm output based on the values received by the sensor. The controlling of the actuator can be done from a separate thread so that communication with the actuator does not affect the communication with the sensor, and the other way around. Communication between the main thread that is interacting with the sensor and the control thread is done using two AutoResetEvent objects and a couple of control state variables:

private static AutoResetEvent updateLeds = new AutoResetEvent(false);

private static AutoResetEvent updateAlarm = new AutoResetEvent(false);

private static int lastLedMask = -1;

private static bool? lastAlarm = null;

private static object synchObject = new object();
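One possible way for a control thread to wait on two such events is sketched below. The source does not show how the control thread is written, so the use of WaitHandle.WaitAny here is an assumption, and the names ControlSignalSketch, SignalLeds, SignalAlarm, and WaitForCommand are hypothetical. The sketch is self-contained and does not talk to any actuator.

```csharp
using System;
using System.Threading;

public static class ControlSignalSketch
{
    private static readonly AutoResetEvent updateLeds = new AutoResetEvent(false);
    private static readonly AutoResetEvent updateAlarm = new AutoResetEvent(false);

    // Order matters: WaitAny reports the lowest index that is signalled.
    private static readonly WaitHandle[] handles = { updateLeds, updateAlarm };

    // Called by the thread that talks to the sensor.
    public static void SignalLeds()
    {
        updateLeds.Set();
    }

    public static void SignalAlarm()
    {
        updateAlarm.Set();
    }

    // Called by the control thread. Waits until one of the events is set
    // or the timeout expires, and reports which command is pending.
    public static string WaitForCommand(int timeoutMs)
    {
        int index = WaitHandle.WaitAny(handles, timeoutMs);

        if (index == WaitHandle.WaitTimeout)
            return "timeout";

        return index == 0 ? "leds" : "alarm";
    }
}
```

Since an AutoResetEvent resets itself as soon as a waiting thread has been released, each call to Set() wakes the control thread once, and a subsequent wait blocks until the next change is signalled.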

We have eight LEDs to control. We will turn them all off if the sensor reports 0 percent light and light them all if the sensor reports 100 percent light. The control action we will use takes a byte where each LED is represented by a bit. The alarm is to be sounded when less than 20 percent light is reported and motion is detected. The calculations are done as follows:

private static void CheckControlRules()

{

int NrLeds = (int)System.Math.Round((8 * lightPercent) / 100);

int LedMask = 0;

int i = 1;

bool Alarm;

while(NrLeds > 0)

{

NrLeds--;

LedMask |= i;

i <<= 1;

}

Alarm = lightPercent < 20 && motion;

We then compare these results with the previous ones to see whether we need to inform the control thread to send control commands:

lock(synchObject)

{

if(LedMask != lastLedMask)

{

lastLedMask = LedMask;

updateLeds.Set();

}

if (!lastAlarm.HasValue || lastAlarm.Value != Alarm)

{

lastAlarm = Alarm;

updateAlarm.Set();

}

}

}
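The mask and alarm calculations in CheckControlRules() can be tried in isolation. The sketch below repeats the same arithmetic as pure functions, without the shared state and events. The class name ControlRulesSketch and its method names are hypothetical and introduced only for this illustration.

```csharp
using System;

public static class ControlRulesSketch
{
    // Converts a light percentage (0 to 100) into a bit mask in which
    // the lowest bits are set, one bit per LED to be lit.
    public static int LedMask(double lightPercent)
    {
        int nrLeds = (int)Math.Round((8 * lightPercent) / 100);
        int mask = 0;
        int bit = 1;

        while (nrLeds > 0)
        {
            nrLeds--;
            mask |= bit;
            bit <<= 1;
        }

        return mask;
    }

    // The alarm sounds when it is dark (below 20 percent) and motion is detected.
    public static bool Alarm(double lightPercent, bool motion)
    {
        return lightPercent < 20 && motion;
    }
}
```

With these rules, 0 percent light gives a mask of 0, 50 percent gives 15 (the four lowest bits), and 100 percent gives 255 (all eight bits).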

In this book, we will also introduce a camera project. This device will use an infrared camera that will be published in the network, and it will be used by the controller to take pictures when the alarm goes off.

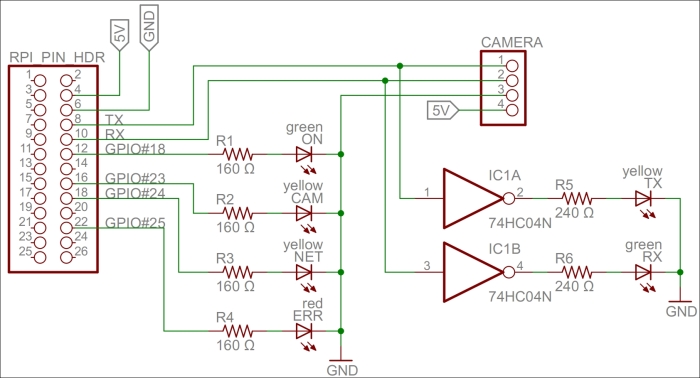

For our camera project, we've chosen to use the LinkSprite JPEG infrared color camera instead of the normal Raspberry Camera module or a normal UVC camera. It allows us to take photos during the night and leaves us with two USB slots free for Wi-Fi and keyboard. You can take a look at the essential information about the camera by visiting http://www.linksprite.com/upload/file/1291522825.pdf. Here is a summary of the circuit connections:

The camera has a serial interface that we can use through the UART available on Raspberry Pi. It has four pins: a reception pin (RX), a transmission pin (TX), and two pins connected to 5 V and ground (GND), respectively.

The RX and TX on the Raspberry Pi pin header are connected to the TX and RX on the camera, respectively. In parallel, we connect the TX and RX lines to a logical inverter. Then, via 240 Ω resistors, we connect them to two LEDs, yellow for TX and green for RX, and then to GND. Since TX and RX are normally high and are drawn low during communication, we need to invert the signals so that the LEDs remain unlit when there is no communication and they blink when communication is happening.

We also connect four GPIO pins (18, 23, 24, and 25) via 160 Ω resistors to four LEDs and ground to signal the different states in our application. GPIO 18 controls a green LED that signals when the camera application is running. GPIO 23 and 24 control yellow LEDs: the first lights when communication with the camera is being performed, and the second lights when a network request is being handled. GPIO 25 controls a red LED, which is used to show that an error has occurred somewhere.

This project can be better understood with the following circuit diagram:

A circuit diagram for the camera project

Tip

For a bill of materials containing components used, see Appendix R, Bill of Materials.

To be able to access the serial port on Raspberry Pi from the code, we must first make sure that the Linux operating system does not use it for other purposes. The serial port is called ttyAMA0 in the operating system, and we need to remove references to it from two operating system files: /boot/cmdline.txt and /etc/inittab. This will disable access to the Raspberry Pi console via the serial port. But we will still be able to access it using SSH or a USB keyboard. From a command prompt, you can edit the first file as follows:

$ sudo nano /boot/cmdline.txt

You need to edit the second file as well, as follows:

$ sudo nano /etc/inittab

Tip

For more detailed information, refer to the http://elinux.org/RPi_Serial_Connection#Preventing_Linux_using_the_serial_port article and read the section on how to prevent Linux from using the serial port.

To interface the hardware laid out on our prototype board, we will use the Clayster.Library.RaspberryPi library. We control the LEDs using DigitalOutput objects:

private static DigitalOutput executionLed = new DigitalOutput (18, true);

private static DigitalOutput cameraLed = new DigitalOutput (23, false);

private static DigitalOutput networkLed = new DigitalOutput (24, false);

private static DigitalOutput errorLed = new DigitalOutput (25, false);

The LinkSprite camera is controlled by the LinkSpriteJpegColorCamera class in the Clayster.Library.RaspberryPi.Devices.Cameras subnamespace. It uses the Uart class to perform serial communication. Both these classes are available in the downloadable source code:

private static LinkSpriteJpegColorCamera camera = new LinkSpriteJpegColorCamera (LinkSpriteJpegColorCamera.BaudRate.Baud__38400);

For our camera to work, we need four persistent and configurable default settings: camera resolution, compression level, image encoding, and an identity for our device. To achieve this, we create a DefaultSettings class that we can persist in the object database:

public class DefaultSettings : DBObject

{

private LinkSpriteJpegColorCamera.ImageSize resolution = LinkSpriteJpegColorCamera.ImageSize._320x240;

private byte compressionLevel = 0x36;

private string imageEncoding = "image/jpeg";

private string udn = Guid.NewGuid().ToString();

public DefaultSettings() : base(MainClass.db)

{

}

We publish the camera resolution property as follows. The three possible enumeration values are ImageSize._160x120, ImageSize._320x240, and ImageSize._640x480. These correspond to the three different resolutions supported by the camera:

[DBDefault (LinkSpriteJpegColorCamera.ImageSize._320x240)]

public LinkSpriteJpegColorCamera.ImageSize Resolution

{

get

{

return this.resolution;

}

set

{

if (this.resolution != value)

{

this.resolution = value;

this.Modified = true;

}

}

}

We publish the compression-level property in a similar manner.

Internally, the camera only supports JPEG encoding of the pictures that are taken. But in our project, we will add software support for PNG and BMP encoding as well. To make things simple and extensible, we choose to store the image-encoding method as a string containing the Internet media type of the encoding scheme implied:

[DBShortStringClipped (false)]

[DBDefault ("image/jpeg")]

public string ImageEncoding

{

get

{

return this.imageEncoding;

}

set

{

if(this.imageEncoding != value)

{

this.imageEncoding = value;

this.Modified = true;

}

}

}

We add a method to load any persisted settings from the object database:

public static DefaultSettings LoadSettings()

{

return MainClass.db.FindObjects <DefaultSettings>().GetEarliestCreatedDeleteOthers();

}

}

In our main application, we create a variable to hold our default settings. We declare it with the internal access specifier so that we can access it from other classes in our project:

internal static DefaultSettings defaultSettings;

During application initialization, we load any default settings available from previous executions of the application. If none are found, the default settings are created and initialized to the default values of the corresponding properties, including a new GUID identifying the device instance in the UDN property:

defaultSettings = DefaultSettings.LoadSettings();

if(defaultSettings == null)

{

defaultSettings = new DefaultSettings();

defaultSettings.SaveNew();

}

To avoid having to reconfigure the camera every time a picture is to be taken, something that is time-consuming, we need to remember what the current settings are and avoid reconfiguring the camera unless new properties are used. These current settings do not need to be persisted since we can reinitialize the camera every time the application is restarted. We declare our current settings parameters as follows:

private static LinkSpriteJpegColorCamera.ImageSize currentResolution;

private static byte currentCompressionRatio;

During application initialization, we need to initialize the camera. First, we get the default settings as follows:

Log.Information("Initializing camera.");

try

{

currentResolution = defaultSettings.Resolution;

currentCompressionRatio = defaultSettings.CompressionLevel;

Here, we need to reset the camera and set the default image resolution. After changing the resolution, a new reset of the camera is required. All of this is done on the camera's default baud rate, which is 38,400 baud:

try

{

camera.Reset();// First try @ 38400 baud

camera.SetImageSize(currentResolution);

camera.Reset();

Since image transfer is slow, we then try to set the highest baud rate supported by the camera:

camera.SetBaudRate (LinkSpriteJpegColorCamera.BaudRate.Baud_115200);

camera.Dispose();

camera = new LinkSpriteJpegColorCamera (LinkSpriteJpegColorCamera.BaudRate.Baud_115200);

If the preceding procedure fails, an exception will be thrown. The most probable cause for this to fail, if the hardware is working correctly, is that the application has been restarted and the camera is already working at 115,200 baud. This will be the case during development, for instance. In this case, we simply set the camera to 115,200 baud and continue. There is room here for improved error handling, such as trying out different options to recover from more complex error conditions and synchronizing the current states with the states of the camera:

}

catch(Exception) // If already at 115200 baud.

{

camera.Dispose ();

camera = new LinkSpriteJpegColorCamera (LinkSpriteJpegColorCamera.BaudRate.Baud_115200);

We then set the camera compression rate as follows:

}finally

{

camera.SetCompressionRatio(currentCompressionRatio);

}

If this fails, we log the error to the event log and light our error LED to inform the end user that there is a failure:

}catch(Exception ex)

{

Log.Exception(ex);

errorLed.High();

camera = null;

}

In this chapter, we presented most of the projects that will be discussed in this book, together with circuit diagrams that show how to connect our hardware components. We also introduced development using C# for Raspberry Pi and presented the basic project structure. Several Clayster libraries were also introduced that help us with common programming tasks such as communication, interoperability, scripting, event logging, interfacing GPIO, and data persistence.

In the next chapter, we will introduce our first communication protocol for the IoT: The Hypertext Transfer Protocol (HTTP).

Chapter 2. The HTTP Protocol

Now that we have a definition for Internet of Things, where do we start? It is safe to assume that most people who use a computer today have had some experience with Hypertext Transfer Protocol (HTTP), perhaps without even knowing it. When they "surf the Web", they navigate between pages using a browser that communicates with the server using HTTP. Some even go so far as identifying the Internet with the Web when they say they "go on the Internet" or "search the Internet".

Yet HTTP has become much more than navigation between pages on the Internet. Today, it is also used in machine to machine (M2M) communication, automation, and Internet of Things, among other things. So much is done on the Internet today, using the HTTP protocol, because it is easily accessible and easy to relate to. For this reason, we are starting our study of Internet of Things by studying HTTP. This will allow you to get a good grasp of its strengths and weaknesses, even though it is perhaps one of the more technically complex protocols. We will present the basic features of HTTP; look at the different available HTTP methods; study the request/response pattern and the ways to handle events, user authentication, and web services.

Before we begin, let's review some of the basic concepts used in HTTP which we will be looking at:

The basics of HTTP

How to add HTTP support to the sensor, actuator, and controller projects

How common communication patterns such as request/response and event subscription can be utilized using HTTP

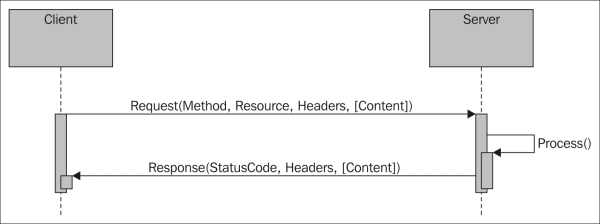

HTTP is a stateless request/response protocol where clients request information from a server and the server responds to these requests accordingly. A request is basically made up of a method, a resource, some headers, and some optional content. A response is made up of a three-digit status code, some headers and some optional content. This can be observed in the following diagram:

HTTP request/response pattern

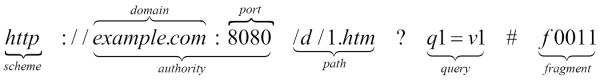

Each resource, originally thought to be a collection of Hypertext documents or HTML documents, is identified by a Uniform Resource Locator (URL). Clients simply use the GET method to request a resource from the corresponding server. In the structure of the URL presented next, the resource is identified by the path and the server by the authority portions of the URL. The PUT and DELETE methods allow clients to upload and remove content from the server, while the POST method allows them to send data to a resource on the server, for instance, in a web form. The structure of a URL is shown in the following diagram:

Structure of a Uniform Resource Locator (URL)
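As a minimal sketch of how these portions of a URL can be told apart in code, the following uses the standard .NET System.Uri class. The helper name UrlParts and the host name sensor.example.org are made up for illustration and do not come from the projects in this book.

```csharp
using System;

// Hypothetical helper for illustration; only System.Uri is a real .NET class here.
public static class UrlParts
{
    // Returns the portions of a URL in the order: scheme, authority, path, query.
    public static string[] Split(string url)
    {
        Uri uri = new Uri(url);

        return new string[]
        {
            uri.Scheme,        // for example "http"
            uri.Authority,     // host name, plus the port when it is not the scheme default
            uri.AbsolutePath,  // identifies the resource on the server
            uri.Query          // includes the leading '?', or is empty if there is no query
        };
    }
}
```

Calling UrlParts.Split with http://sensor.example.org:8080/xml?Momentary=1 separates the authority (sensor.example.org:8080), which identifies the server, from the path (/xml), which identifies the resource.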

HTTP defines a set of headers that can be used to attach metainformation about the requests and responses sent over the network. These headers are human-readable key-value text pairs that contain information about how content is encoded, for how long it is valid, what type of content is desired, and so on. The type of content being transmitted is identified by the Content-Type header. Headers also provide a means to authenticate clients to the server and a mechanism to introduce state in HTTP: using cookies, which are text strings, a server can ask the client to remember a value, which the client then adds to each subsequent request made to that server.
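To make the text format of a response more concrete, here is a minimal sketch that reads the status line and the headers from the head of a raw HTTP response. The class and method names are hypothetical, and the sketch deliberately ignores details such as repeated or folded headers, which a real HTTP library handles for you.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper for illustration; not part of any library used in this book.
public static class HttpHeadSketch
{
    // Parses the status line and headers of a raw HTTP response head.
    // Returns the three-digit status code; headers are stored in the supplied dictionary.
    public static int ParseResponseHead(string head, IDictionary<string, string> headers)
    {
        string[] lines = head.Split(new string[] { "\r\n" }, StringSplitOptions.None);

        // Status line: HTTP-version SP status-code SP reason-phrase
        string[] statusParts = lines[0].Split(new char[] { ' ' }, 3);
        int statusCode = int.Parse(statusParts[1]);

        for (int i = 1; i < lines.Length; i++)
        {
            if (lines[i].Length == 0)
                break; // An empty line ends the header section.

            int colon = lines[i].IndexOf(':');
            if (colon <= 0)
                continue; // Skip malformed lines in this sketch.

            string name = lines[i].Substring(0, colon).Trim();
            string value = lines[i].Substring(colon + 1).Trim();
            headers[name] = value; // A repeated header overwrites the earlier value here.
        }

        return statusCode;
    }
}
```

Since header names are case-insensitive, it makes sense to pass in a dictionary created with StringComparer.OrdinalIgnoreCase.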

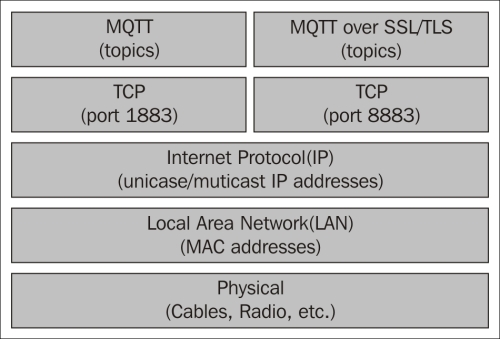

HTTP works on top of the Internet Protocol (IP). In this protocol, machines are addressed using an IP address, which makes it possible to communicate between different local area networks (LANs) that might use different addressing schemes, even though the most common ones are Ethernet-type networks that use media access control (MAC) addresses. Communication in HTTP is then done over a Transmission Control Protocol (TCP) connection between the client and the server. The TCP connection makes sure that the packets are not lost and are received in the same order in which they were sent. The connection endpoints are defined by the corresponding IP addresses and a corresponding port number. The assigned default port number for HTTP is 80, but other port numbers can also be used; the alternative HTTP port 8080 is common.

Tip

To simplify communication, Domain Name System (DNS) servers provide a mechanism of using host names instead of IP addresses when referencing a machine on the IP network.

Encryption can be done through the use of Secure Sockets Layer (SSL) or Transport Layer Security (TLS). When this is done, the protocol is normally named Hypertext Transfer Protocol Secure (HTTPS) and the communication is performed on a separate port, normally 443. In this case, most commonly the server, but also the client, can be authenticated using X.509 certificates that are based on a Public Key Infrastructure (PKI), where anybody with access to the public part of the certificate can encrypt data meant for the holder of the private part of the certificate. The private part is required to decrypt the information. These certificates allow the validation of the domain of the server or the identity of the client. They also provide a means to check who their issuer is and whether the certificates are invalid because they have been revoked. The Internet architecture is shown in the following diagram:

HTTP is a cornerstone of service-oriented architecture (SOA), where methods for publishing services through HTTP are called web services. One important manner of publishing web services is called Simple Object Access Protocol (SOAP), where web methods, their arguments, return values, bindings, and so on, are encoded in a specific XML format. It is then documented using the Web Services Description Language (WSDL). Another popular method of publishing web services is called Representational State Transfer (REST). This provides a simpler, loosely-coupled architecture where methods are implemented based on the normal HTTP methods and URL query parameters, instead of encoding them in XML using SOAP.

Recent developments based on the use of HTTP include Linked Data, a re-abstraction of the Web in which any type of data can be identified using a Uniform Resource Identifier (URI); the semantic representation of this data as Semantic Triples; and semantic data formats such as Resource Description Framework (RDF), readable by machines, or Terse RDF Triple Language (TURTLE), more readily readable by humans. While the collection of HTTP-based Internet resources is called the Web, these later efforts are known as the Semantic Web.

Tip

For a thorough review of HTTP, please see Appendix E, Fundamentals of HTTP.

We are now ready to add web support to our working sensor, which we prepared in the previous chapter, and publish its data using the HTTP protocol. The following are the three basic strategies that one can use when publishing data using HTTP:

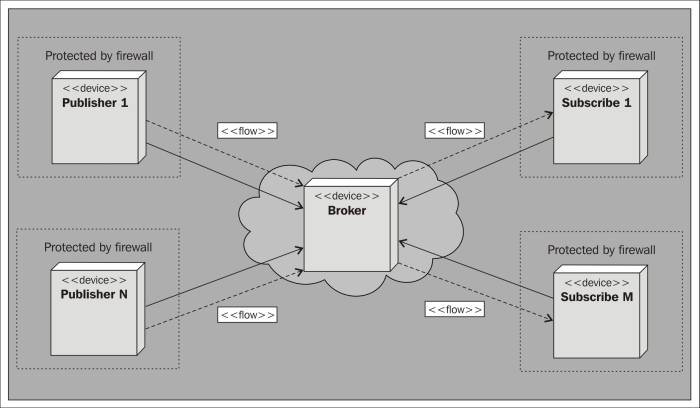

In the first strategy, the sensor is a client that publishes information to a server on the Internet. The server acts as a broker and informs the interested parties about sensor values. This pattern is called publish/subscribe, and it will be discussed later in this book. It has the advantage of simplifying the handling of events, but it makes it more difficult to get momentary values. Sensors can also be placed behind firewalls, as long as the server is publicly available on the Internet.

Another way is to let all entities in the network be both clients and servers, depending on what they need to do. This pattern will be discussed in Chapter 3, The UPnP Protocol. This reduces latency in communication, but requires all participants to be on the same side of any firewalls.

The method we will employ in this chapter is to let the sensor become an HTTP server, and let anybody who is interested in knowing the status of the sensor become a client. The advantage is that getting momentary values is easy; the disadvantage is that sending events is more difficult. It also allows easy access to the sensor from parties behind firewalls if the sensor is publicly available on the Internet.

Setting up an HTTP server on the sensor is simple if you are using the Clayster libraries. In the following sections, we will demonstrate with images how to set up an HTTP server and publish different kinds of data such as XML, JavaScript Object Notation (JSON), Resource Description Framework (RDF), Terse RDF Triple Language (TURTLE), and HTML. However, before we begin, we need to add references to namespaces in our application. Add the following code at the top, since it is needed to be able to work with XML, text, and images:

using System.Xml;

using System.Text;

using System.IO;

using System.Drawing;

Then, add references to the following Clayster namespaces, which will help us to work with HTTP and the different content types mentioned earlier:

using Clayster.Library.Internet;

using Clayster.Library.Internet.HTTP;

using Clayster.Library.Internet.HTML;

using Clayster.Library.Internet.MIME;

using Clayster.Library.Internet.JSON;

using Clayster.Library.Internet.Semantic.Turtle;

using Clayster.Library.Internet.Semantic.Rdf;

using Clayster.Library.IoT;

using Clayster.Library.IoT.SensorData;

using Clayster.Library.Math;

The Internet library helps us with communication and encoding, the IoT library with interoperability, and the Math library with graphs.

During application initialization, we will first tell the libraries that we do not want the system to search for and use proxy servers (first parameter), and that we do not lock these settings (second parameter). Proxy servers force HTTP communication to pass through them. This makes them useful network tools and allows an added layer of security and monitoring. However, unless you have one in your network, it can be annoying during application development if the application always has to look for proxy servers in the network when none exist. This also causes a delay during application initialization, because other HTTP communication is paused until the search times out. The proxy search is disabled using the following code:

HttpSocketClient.RegisterHttpProxyUse (false, false);

To instantiate an HTTP server, we add the following code before application initialization ends and the main loop begins:

HttpServer HttpServer = new HttpServer (80, 10, true, true, 1);

Log.Information ("HTTP Server receiving requests on port " + HttpServer.Port.ToString ());

This opens a small HTTP server on port 80 (which requires the application to be run with superuser privileges), maintains a connection backlog of 10 simultaneous connection attempts, allows both GET and POST methods, and allocates one working thread to handle synchronous web requests. The HTTP server can process both synchronous and asynchronous web resources:

A synchronous web resource responds within the HTTP handler we register for each resource. These are executed within the context of a working thread.

An asynchronous web resource handles processing outside the context of the actual request and is responsible for responding by itself. This is not executed within the context of a working thread.

Note

For now, we will focus on synchronous web resources and leave asynchronous web resources for later.

Now we are ready to register web resources on the server. We will create the following web resources:

HttpServer.Register ("/", HttpGetRoot, false);

HttpServer.Register ("/html", HttpGetHtml, false);

HttpServer.Register ("/historygraph", HttpGetHistoryGraph, false);

HttpServer.Register ("/xml", HttpGetXml, false);

HttpServer.Register ("/json", HttpGetJson, false);

HttpServer.Register ("/turtle", HttpGetTurtle, false);

HttpServer.Register ("/rdf", HttpGetRdf, false);

These are all registered as synchronous web resources that do not require authentication (the third parameter in each call). We will handle authentication later in this chapter. Here, we have registered the path of each resource and connected that path with an HTTP handler method, which will process each corresponding request.

In the previous example, we chose to register web resources using methods that will return the corresponding information dynamically. It is also possible to register web resources based on the HttpServerSynchronousResource and HttpServerAsynchronousResource classes and implement the functionality as an override of the existing methods. In addition, it is also possible to register static content, either in the form of embedded resources using the HttpServerEmbeddedResource class or in the form of files in the filesystem using the HttpServerResourceFolder class. In our examples, however, we've chosen to only register resources that generate dynamic content.

We also need to correctly dispose of our server when the application ends, or the application will not be terminated correctly. This is done by adding the following disposal method call in the termination section of the main application:

HttpServer.Dispose ();

If we want to add HTTPS support to the application, we will need an X.509 certificate with a valid private key. First, we have to load this certificate into the server's memory. For this, we need the password protecting the certificate file, which we pass to the constructor of the X509Certificate2 class (found in the System.Security.Cryptography.X509Certificates namespace):

X509Certificate2 Certificate = new X509Certificate2 ("Certificate.pfx", "PASSWORD");

We then create the HTTPS server in a way similar to the HTTP server that we just created, except that we now also tell the server to use SSL/TLS (sixth parameter), not to use client certificates (seventh parameter), and which server certificate to use for HTTPS (eighth parameter):

HttpServer HttpsServer = new HttpServer (443, 10, true, true, 1, true, false, Certificate);

Log.Information ("HTTPS Server receiving requests on port " + HttpsServer.Port.ToString ());

We will then make sure that the same resources that are registered on the HTTP server are also registered on the HTTPS server:

foreach (IHttpServerResource Resource in HttpServer.GetResources())

HttpsServer.Register (Resource);

We will also need to correctly dispose of the HTTPS server when the application ends, just as we did in the case of the HTTP server. As usual, we will do this in the termination section of the main application, as follows:

HttpsServer.Dispose ();

The first web resource we will add is a root menu, which is accessible through the path /. It will return an HTML page with links to what can be seen on the device. We will add the root menu method as follows:

private static void HttpGetRoot (HttpServerResponse resp, HttpServerRequest req)

{

networkLed.High ();

try

{

resp.ContentType = "text/html";

resp.Encoding = System.Text.Encoding.UTF8;

resp.ReturnCode = HttpStatusCode.Successful_OK;

} finally

{

networkLed.Low ();

}

}

The preceding method does not yet return any page, since nothing has been written to the response. The method header contains the HTTP response object resp, and the response should be written to this parameter. The original request can be found in the req parameter. Notice the use of the networkLed digital output in a try-finally block to signal web access to one of our resources. This pattern will be used throughout this book.

Before responding to the query, the method has to inform the recipient what kind of response it will receive. This is done by setting the ContentType property of the response object. Since we return an HTML page, we use the Internet media type text/html here. Since we send text back, we also have to choose a text encoding. We choose UTF-8, which is common on the Web. We also inform the client that the operation was successful by returning the OK status code (200).

We will now return the actual HTML page, a very crude one, having the following code:

resp.Write ("<html><head><title>Sensor</title></head>");

resp.Write ("<body><h1>Welcome to Sensor</h1>");

resp.Write ("<p>Below, choose what you want to do.</p><ul>");

resp.Write ("<li>View Data<ul>");

resp.Write ("<li><a href='/xml?Momentary=1'>");

resp.Write ("View data as XML using REST</a></li>");

resp.Write ("<li><a href='/json?Momentary=1'>");

resp.Write ("View data as JSON using REST</a></li>");

resp.Write ("<li><a href='/turtle?Momentary=1'>");

resp.Write ("View data as TURTLE using REST</a></li>");

resp.Write ("<li><a href='/rdf?Momentary=1'>");

resp.Write ("View data as RDF using REST</a></li>");

resp.Write ("<li><a href='/html'>");

resp.Write ("Data in an HTML page with graphs</a></li></ul></li></ul>");

resp.Write ("</body></html>");

And then we are done! The previous code will show the following view in a browser when navigating to the root:

We are now ready to display our measured information to anybody through a web page (or HTML page). We've registered the path /html to an HTTP handler method named HttpGetHtml. We will now start implementing it, as follows:

private static void HttpGetHtml (HttpServerResponse resp,

HttpServerRequest req)

{

networkLed.High ();

try

{

resp.ContentType = "text/html";

resp.Encoding = System.Text.Encoding.UTF8;

resp.Expires = DateTime.Now;

resp.ReturnCode = HttpStatusCode.Successful_OK;

lock (synchObject)

{

}

}

finally

{

networkLed.Low ();

}

}

Compared to the previous method, we have added a property to the response: an expiry date and time. Since our values are momentary and are updated every second, we will tell the client that the page expires immediately. This ensures the page is not cached on the client side, and it is reloaded properly when the user wants to see the page again. We also added a lock statement, using our synchronization object, to make sure that the momentary values are accessed by only one thread at a time.

We can now start to return our momentary values, from within the lock statement:

resp.Write ("<html><head>");

resp.Write ("<meta http-equiv='refresh' content='60'/>");

resp.Write ("<title>Sensor Readout</title></head>");

resp.Write ("<body><h1>Readout, ");

resp.Write (DateTime.Now.ToString ());

resp.Write ("</h1><table><tr><td>Temperature:</td>");

resp.Write ("<td style='width:20px'/><td>");

resp.Write (HtmlUtilities.Escape (temperatureC.ToString ("F1")));

resp.Write (" C</td></tr><tr><td>Light:</td><td/><td>");

resp.Write (HtmlUtilities.Escape (lightPercent.ToString ("F1")));

resp.Write (" %</td></tr><tr><td>Motion:</td><td/><td>");

resp.Write (motionDetected.ToString ());

resp.Write ("</td></tr></table>");

We would like to draw your attention to the meta tag at the top of the HTML document. This tag tells the client to refresh the page every 60 seconds, so the page automatically updates itself every minute while it is kept open.

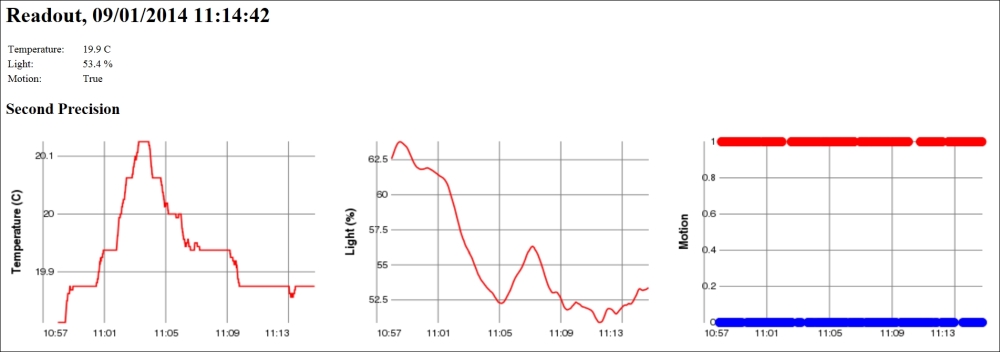

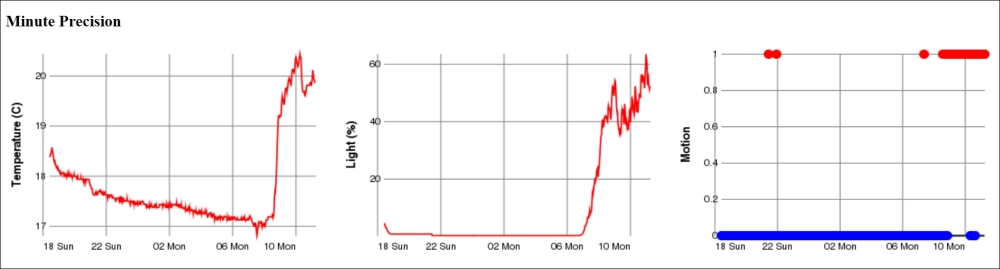

Historical data is best displayed using graphs. To do this, we will output image tags with references to our historygraph web resource, as follows:

if (perSecond.Count > 1)

{

resp.Write ("<h2>Second Precision</h2>");

resp.Write ("<table><tr><td>");

resp.Write ("<img src='historygraph?p=temp&base=sec&");

resp.Write ("w=350&h=250' width='480' height='320'/></td>");

resp.Write ("<td style='width:20px'/><td>");

resp.Write ("<img src='historygraph?p=light&base=sec&");

resp.Write ("w=350&h=250' width='480' height='320'/></td>");

resp.Write ("<td style='width:20px'/><td>");

resp.Write ("<img src='historygraph?p=motion&base=sec&");

resp.Write ("w=350&h=250' width='480' height='320'/></td>");

resp.Write ("</tr></table>");

Here, we have used query parameters to inform the historygraph resource what we want it to draw. The p parameter selects the quantity to plot, the base parameter the time base, and the w and h parameters the width and height, respectively, of the resulting graph. We will now do the same for minutes, hours, days, and months by assigning the base query parameter the values min, h, day, and month, respectively.
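Since the markup for each time base differs only in the value of the base query parameter, the repetition can be factored out. The following is a minimal sketch of that idea; the GraphRowSketch class and its BuildRow method are hypothetical and are not part of the source code for this chapter. The image sizes are the ones used in the markup shown earlier.

```csharp
using System;
using System.Text;

// Hypothetical helper for illustration; not part of the book's source code.
public static class GraphRowSketch
{
    // Builds one table with three graphs (temp, light, motion) for the given time base.
    public static string BuildRow(string timeBase)
    {
        string[] parameters = { "temp", "light", "motion" };
        StringBuilder sb = new StringBuilder("<table><tr>");

        for (int i = 0; i < parameters.Length; i++)
        {
            if (i > 0)
                sb.Append("<td style='width:20px'/>"); // Spacer column between graphs.

            sb.Append("<td><img src='historygraph?p=");
            sb.Append(parameters[i]);
            sb.Append("&base=");
            sb.Append(timeBase);
            sb.Append("&w=350&h=250' width='480' height='320'/></td>");
        }

        sb.Append("</tr></table>");
        return sb.ToString();
    }
}
```

A handler could then write the result of BuildRow("min"), BuildRow("h"), and so on to the response, each guarded by a check that the corresponding list contains more than one record.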

We will then close all if statements and terminate the HTML page before we send it to the client:

}

resp.Write ("</body></html>");

Before we can view the page, we also need to create our historygraph resource that will generate the graph images referenced from the HTML page. We will start by defining the method in our usual way:

private static void HttpGetHistoryGraph (HttpServerResponse resp,

HttpServerRequest req)

{

networkLed.High ();

try

{

}

finally

{

networkLed.Low ();

}

}

Within the try section of our method, we start by parsing the query parameters of the request. If we find any errors in the request that cannot be mended or ignored, we throw an HttpException, passing the HttpStatusCode value that describes the error as a parameter. This causes the correct error response to be returned to the client. We start by parsing the width and height of the image to be generated:

int Width, Height;

if (!req.Query.TryGetValue ("w", out s) || !int.TryParse (s, out Width) || Width <= 0 || Width > 2048)

throw new HttpException (HttpStatusCode.ClientError_BadRequest);

if (!req.Query.TryGetValue ("h", out s) || !int.TryParse (s, out Height) || Height <= 0 || Height > 2048)

throw new HttpException (HttpStatusCode.ClientError_BadRequest);
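The two checks above follow the same pattern: the parameter must exist, must be an integer, and must lie within a permitted range. As a minimal sketch, this pattern can be captured in a small helper. Here a plain dictionary stands in for the query collection of the request, which is an assumption made for illustration; the QuerySketch class is hypothetical.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper for illustration; a dictionary stands in for the request's query collection.
public static class QuerySketch
{
    // Returns true if the named query parameter exists and is an integer in the range 1..max.
    public static bool TryGetDimension(IDictionary<string, string> query, string name, int max, out int value)
    {
        string s;
        value = 0;

        return query.TryGetValue(name, out s)
            && int.TryParse(s, out value)
            && value > 0
            && value <= max;
    }
}
```

A handler would respond with a bad request error whenever the helper returns false, just as the code above does.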

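Then we extract the parameter to plot. It is stored in the p query parameter. From this value, we will derive the property name corresponding to the parameter in our Record class and the title of the value axis in the graph. To do this, we first define some variables. Note that the string s is the same temporary variable that is used when parsing the w and h parameters, so these declarations must be placed before that code in the method: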

string ValueAxis;

string ParameterName;

string s;

We will then extract the value of the p parameter:

if (!req.Query.TryGetValue ("p", out s))

throw new HttpException (HttpStatusCode.ClientError_BadRequest);

We will then look at the value of this parameter to deduce the Record property name and the ValueAxis title:

switch (s)

{

case "temp":

ParameterName = "TemperatureC";

ValueAxis = "Temperature (C)";

break;

case "light":

ParameterName = "LightPercent";

ValueAxis = "Light (%)";

break;

case "motion":

ParameterName = "Motion";

ValueAxis = "Motion";

break;

default:

throw new HttpException (HttpStatusCode.ClientError_BadRequest);

}

We will need to extract the value of the base query parameter to know what time base should be graphed:

if (!req.Query.TryGetValue ("base", out s))

throw new HttpException (HttpStatusCode.ClientError_BadRequest);

In the Clayster.Library.Math library, there are tools to generate graphs. These tools can, of course, be accessed programmatically if desired. However, since we already use the library, we can also use its scripting capabilities, which make it easier to create graphs. Variables accessed by script are defined in a Variables collection. So, we need to create one of these:

Variables v = new Variables();

We also need to tell the client for how long the graph will be valid or when the graph will expire. So, we will need the current date and time:

DateTime Now = DateTime.Now;

Access to any historical information must be done within a thread-safe critical section of the code. To achieve this, we will use our synchronization object again:

lock (synchObject)

{

}

Within this critical section, we can now safely access our historical data. It is only the List<T> objects that we need to protect, not the Record objects they contain. So, as soon as we've populated the Variables collection with the arrays returned using the ToArray() method, we can unlock our synchronization object again. The following switch statement needs to be executed in the critical section:

switch (s)

{

case "sec":

v ["Records"] = perSecond.ToArray ();

resp.Expires = Now;

break;

case "min":

v ["Records"] = perMinute.ToArray ();

resp.Expires = new DateTime (Now.Year, Now.Month, Now.Day, Now.Hour, Now.Minute, 0).AddMinutes (1);

break;

The hour, day, and month cases (h, day, and month respectively) will be handled analogously. We will also make sure to include a default statement that returns an HTTP error, making sure bad requests are handled properly:

default:

throw new HttpException (

HttpStatusCode.ClientError_BadRequest);

}
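One plausible reading of "analogously" is that each graph expires at the start of the next period of its time base, in the same way the per-minute graph expires at the start of the next minute. The following sketch shows that calculation on its own. The ExpirySketch class is hypothetical, and the rounding chosen for hours, days, and months is an assumption rather than something taken from the source code for this chapter.

```csharp
using System;

// Hypothetical helper for illustration; the boundaries for h, day, and month are assumptions.
public static class ExpirySketch
{
    // Returns the point in time at which a graph with the given time base becomes outdated.
    public static DateTime NextBoundary(DateTime now, string timeBase)
    {
        switch (timeBase)
        {
            case "sec":
                return now; // Per-second data expires immediately.

            case "min":
                return new DateTime(now.Year, now.Month, now.Day, now.Hour, now.Minute, 0).AddMinutes(1);

            case "h":
                return new DateTime(now.Year, now.Month, now.Day, now.Hour, 0, 0).AddHours(1);

            case "day":
                return new DateTime(now.Year, now.Month, now.Day, 0, 0, 0).AddDays(1);

            case "month":
                return new DateTime(now.Year, now.Month, 1, 0, 0, 0).AddMonths(1);

            default:
                throw new ArgumentException("Unknown time base: " + timeBase);
        }
    }
}
```

Truncating first and then adding one period lets the DateTime arithmetic take care of rollovers, such as the last hour of a day or the last month of a year.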

Now, the Variables collection contains a variable called Records that contains an array of Record objects to draw. Furthermore, the ParameterName variable contains the name of the value property to draw. The Timestamp property of each Record object contains values for the time axis. Now we have everything we need to plot the graph. We only need to choose the type of graph to plot.

The motion detector reports Boolean values. Plotting the motion values using lines or curves may just cause a mess if it regularly reports motion and non-motion. A better option is perhaps the use of a scatter graph, where each value is displayed using a small colored disc (say, of radius 5 pixels). We interpret false values to be equal to 0 and paint them Blue, and true values to be equal to 1 and paint them Red.

The Clayster script to accomplish this would be as follows:

scatter2d(Records.Timestamp,

if (Values:=Records.Motion) then 1 else 0,5,

if Values then 'Red' else 'Blue','','Motion')

The other two properties are easier to draw since they can be drawn as simple line graphs:

line2d(Records.Timestamp,Records.Property,'Value Axis')

The Expression class handles parsing and evaluation of Clayster script expressions. This class has two static methods for parsing an expression: Parse() and ParseCached(). If the expressions are from a limited set of expressions, ParseCached() can be used. It only parses an expression once and remembers it. If expressions contain a random component, Parse() should be used since caching does not fulfill any purpose except exhausting the server memory.
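To illustrate the trade-off, the following is a minimal sketch of how a parse cache of this kind could work in principle. It is not the Clayster implementation; the ParseCacheSketch class and its members are hypothetical, and the parser is passed in as a delegate so that the sketch stays self-contained.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical cache illustrating the idea; this is not the Clayster implementation.
public class ParseCacheSketch<T>
{
    private readonly Dictionary<string, T> cache = new Dictionary<string, T>();
    private readonly Func<string, T> parse;
    private readonly object sync = new object();

    public ParseCacheSketch(Func<string, T> parse)
    {
        this.parse = parse;
    }

    // Number of distinct expressions remembered so far.
    public int Count
    {
        get { lock (sync) { return cache.Count; } }
    }

    // Parses the expression the first time it is seen and reuses the result afterwards.
    public T ParseCached(string expression)
    {
        lock (sync)
        {
            T result;

            if (!cache.TryGetValue(expression, out result))
            {
                result = parse(expression);
                cache[expression] = result; // Never evicted, so the cache grows with every new string.
            }

            return result;
        }
    }
}
```

Since nothing is ever removed in this sketch, every distinct expression string adds an entry. This is why a cache of this kind only pays off when the set of expressions is limited.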

The parsed expression has an Evaluate() method that can be used to evaluate the expression. The method takes a Variables collection, which represents the variables available to the expression when evaluating it. All graphical functions return an object of the Graph class. This object can be used to generate the image we want to return. First, we need a Graph variable to store our script evaluation result:

Graph Result;

We will then generate, parse, and evaluate our script, as follows:

if (ParameterName == "Motion")

Result = Expression.ParseCached ("scatter2d("+

"Records.Timestamp, "+

"if (Values:=Records.Motion) then 1 else 0,5, "+

"if Values then 'Red' else 'Blue','','Motion')").

Evaluate (v) as Graph;

else

Result = Expression.ParseCached ("line2d("+

"Records.Timestamp,Records." + ParameterName +

",'','" + ValueAxis + "')").Evaluate (v) as Graph;

We now have our graph. All that is left to do is to generate a bitmapped image from it, to return to the client. We will first get the bitmapped image, as follows:

Image Img = Result.GetImage (Width, Height);

Then we need to encode it so that it can be sent to the client. This is done using Multipurpose Internet Mail Extensions (MIME) encoding of the image. We can use MimeUtilities to encode the image, as follows:

byte[] Data = MimeUtilities.Encode (Img, out s);

The Encode() method on MimeUtilities returns a byte array of the encoded object. It also returns the Internet media type or content type that is used to describe how the object was encoded. We tell the client the content type that was used, and that the operation was performed successfully. We then return the binary block of data representing our image, as follows:

resp.ContentType = s;

resp.ReturnCode = HttpStatusCode.Successful_OK;

resp.WriteBinary (Data);

We can now view our /html page and see not only our momentary values at the top but also graphs displaying values per second, per minute, per hour, per day, and per month, depending on how long we let the sensor work and collect data. At this point, data is not persisted, so as soon as the sensor is reset, it will lose all its history.

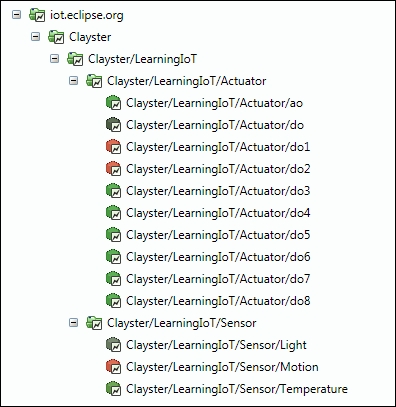

We have now created interfaces to display sensor data to humans, and are now ready to export the same sensor data to machines. We have registered four web resources to export sensor data to four different formats with the same names: /xml, /json, /turtle, and /rdf. Luckily, we don't have to write these export methods explicitly as long as we export the sensor data. Clayster.Library.IoT helps us to export sensor data to these different formats through the use of an interface named ISensorDataExport.

We will create our four web resources, one for each data format. We will begin with the resource that exports XML data:

private static void HttpGetXml (HttpServerResponse resp,

HttpServerRequest req)

{

HttpGetSensorData (resp, req, "text/xml",

new SensorDataXmlExport (resp.TextWriter));

}

We can use the same code to export data to different formats by replacing the key arguments, as shown in the following table:

| Format | Method | Content Type | Export class |
|---|---|---|---|
| XML | HttpGetXml | text/xml | SensorDataXmlExport |
| JSON | HttpGetJson | application/json | SensorDataJsonExport |
| TURTLE | HttpGetTurtle | text/turtle | SensorDataTurtleExport |
| RDF | HttpGetRdf | application/rdf+xml | SensorDataRdfExport |
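The idea behind the table, one shared export routine combined with interchangeable export classes, can be sketched in isolation. The following is a minimal illustration only: IExampleExport, ExampleXmlExport, ExampleJsonExport, and ExampleExporter are hypothetical names invented for this sketch, and they are far simpler than ISensorDataExport and the Clayster export classes (for instance, no escaping of special characters is performed).

```csharp
using System;
using System.Globalization;
using System.IO;

// Hypothetical, simplified stand-in for an export interface.
// This is NOT the Clayster ISensorDataExport interface.
public interface IExampleExport
{
    void WriteField(string name, double value, string unit);
}

// Writes a field as a single XML element (no escaping, for brevity).
public sealed class ExampleXmlExport : IExampleExport
{
    private readonly TextWriter w;
    public ExampleXmlExport(TextWriter w) { this.w = w; }

    public void WriteField(string name, double value, string unit)
    {
        w.Write("<field name=\"{0}\" value=\"{1}\" unit=\"{2}\"/>",
            name, value.ToString(CultureInfo.InvariantCulture), unit);
    }
}

// Writes the same field as a JSON object (no escaping, for brevity).
public sealed class ExampleJsonExport : IExampleExport
{
    private readonly TextWriter w;
    public ExampleJsonExport(TextWriter w) { this.w = w; }

    public void WriteField(string name, double value, string unit)
    {
        w.Write("{{\"name\":\"{0}\",\"value\":{1},\"unit\":\"{2}\"}}",
            name, value.ToString(CultureInfo.InvariantCulture), unit);
    }
}

public static class ExampleExporter
{
    // Shared code: it only knows the interface, never the concrete format.
    public static void ExportMomentary(IExampleExport export, double temperatureC)
    {
        export.WriteField("Temperature", temperatureC, "C");
    }
}
```

Because the shared routine depends only on the interface, supporting another format means adding one more export class and one more thin wrapper method, which is the pattern the table describes.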

Clayster.Library.IoT can also help the application to interpret query parameters for sensor data queries in an interoperable manner. This is done by using objects of the ReadoutRequest class, as follows:

private static void HttpGetSensorData (HttpServerResponse resp, HttpServerRequest req, string ContentType, ISensorDataExport ExportModule)

{

ReadoutRequest Request = new ReadoutRequest (req);

HttpGetSensorData (resp, ContentType, ExportModule, Request);

}

Often, as in our case, a sensor or a meter has a lot of data. It is definitely not desirable to return all the data to everybody who requests information. In our case, the sensor can store up to 5000 records of historical information. So, why should we export all this information to somebody who only wants to see the momentary values? We shouldn't. The ReadoutRequest class in the Clayster.Library.IoT.SensorData namespace helps us to parse a sensor data request query in an interoperable fashion and lets us know the type of data that is requested. It helps us determine which field names to report on which nodes. It also helps us to limit the output to specific readout types or a specific time interval. In addition, it provides all external credentials used in distributed transactions. Appendix F, Sensor Data Query Parameters, provides a detailed explanation of the query parameters that are used by the ReadoutRequest class.
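To make the idea of parsing a readout query more concrete, here is a minimal, self-contained sketch. It is not the ReadoutRequest implementation: the class ExampleReadoutQuery is hypothetical, it recognizes only the two readout-type parameters Momentary and HistoricalDay, and it assumes that any other parameter set to 1 names a field to report. The authoritative list of parameters is the one given in Appendix F.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical illustration of interpreting a sensor data query string.
// NOT the Clayster ReadoutRequest class; names and rules are assumptions.
public sealed class ExampleReadoutQuery
{
    public bool Momentary { get; private set; }
    public bool HistoricalDay { get; private set; }

    // Assumption: parameters that are not readout types select field names.
    public HashSet<string> FieldNames { get; } =
        new HashSet<string>(StringComparer.OrdinalIgnoreCase);

    public static ExampleReadoutQuery Parse(string query)
    {
        var result = new ExampleReadoutQuery();
        string[] parts = query.TrimStart('?')
            .Split(new[] { '&' }, StringSplitOptions.RemoveEmptyEntries);

        foreach (string part in parts)
        {
            // Split into name and value at the first '=' only.
            string[] pair = part.Split(new[] { '=' }, 2);
            string name = Uri.UnescapeDataString(pair[0]);
            string value = pair.Length > 1 ? Uri.UnescapeDataString(pair[1]) : string.Empty;

            bool enabled = value == "1" ||
                value.Equals("true", StringComparison.OrdinalIgnoreCase);
            if (!enabled)
                continue;

            switch (name)
            {
                case "Momentary":
                    result.Momentary = true;
                    break;
                case "HistoricalDay":
                    result.HistoricalDay = true;
                    break;
                default:
                    result.FieldNames.Add(name);
                    break;
            }
        }
        return result;
    }
}
```

With such an object in hand, an export routine can skip every record whose readout type or field name was not requested, instead of returning the full history.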

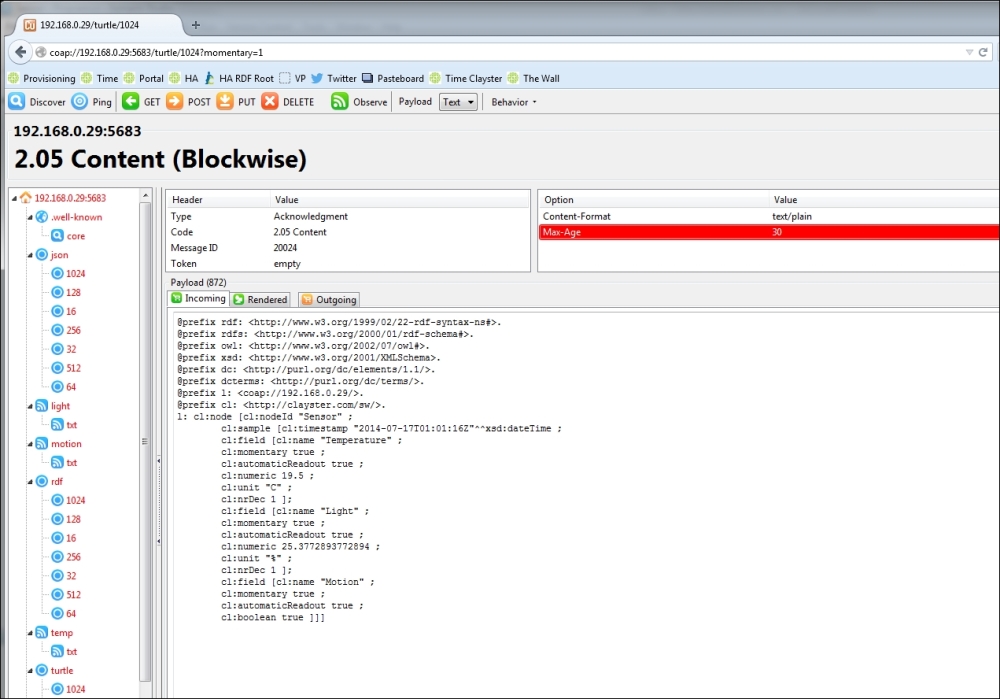

Data export is now complete, and so the next step is to test the different data formats and see what they look like. First, we will test our XML data export using a URL similar to the following:

http://192.168.0.29/xml?Momentary=1&HistoricalDay=1&Temperature=1

This will only read momentary and daily historical-temperature values. Data will come in an unformatted manner, but viewing the data in a browser provides some form of formatting. If we want JSON instead of XML, we must call the /json resource instead:

http://192.168.0.29/json?Momentary=1&HistoricalDay=1&Temperature=1

The data that is returned can be formatted using online JSON formatting tools to get a better overview of its structure. To test the TURTLE and RDF versions of the data export, we just need to use URLs similar to the following:

http://192.168.0.29/turtle?Momentary=1&HistoricalDay=1&Temperature=1

http://192.168.0.29/rdf?Momentary=1&HistoricalDay=1&Temperature=1

Note

If you've set up HTTPS on your device, you can access the resources using the https URI scheme instead of the http URI scheme.

Publishing things on the Internet is risky. Anybody with access to the thing might also try to use it with malicious intent. For this reason, it is important to protect all public interfaces with some form of user authentication mechanism to make sure only approved users with correct privileges are given access to the device.

Tip

As discussed in the introduction to HTTP, there are several types of user authentication mechanisms to choose from. High-value entities are best protected using both server-side and client-side certificates over an encrypted connection (HTTPS). Although this book does not necessarily deal with things of high individual value, some form of protection is still needed.

We have two types of authentication:

The first is the www authentication mechanism provided by the HTTP protocol itself. This mechanism is suitable for automation

The second is a login process embedded into the web application itself, and it uses sessions to maintain user login credentials

Both of these will be explained in Appendix G, Security in HTTP.
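As a rough illustration of what header-based HTTP authentication involves, the following sketch builds and parses an Authorization header for the Basic scheme. This is an assumption made for illustration: the text does not say which scheme the library uses, and it may well be another one, such as Digest. The class BasicAuthExample is hypothetical. Note that Basic authentication only encodes the credentials and does not encrypt them, so it should be combined with HTTPS.

```csharp
using System;
using System.Text;

// Hypothetical helper showing the HTTP Basic scheme.
// Assumption: the scheme actually used by the server may differ.
public static class BasicAuthExample
{
    private const string Prefix = "Basic ";

    // Client side: "Basic " followed by base64("user:password").
    public static string BuildHeaderValue(string user, string password)
    {
        byte[] raw = Encoding.UTF8.GetBytes(user + ":" + password);
        return Prefix + Convert.ToBase64String(raw);
    }

    // Server side: recover the user name and password from the header value.
    public static bool TryParse(string headerValue, out string user, out string password)
    {
        user = null;
        password = null;

        if (headerValue == null ||
            !headerValue.StartsWith(Prefix, StringComparison.OrdinalIgnoreCase))
            return false;

        string decoded;
        try
        {
            byte[] raw = Convert.FromBase64String(headerValue.Substring(Prefix.Length).Trim());
            decoded = Encoding.UTF8.GetString(raw);
        }
        catch (FormatException)
        {
            return false; // Not valid base64.
        }

        // The user name ends at the first colon; the password may contain colons.
        int colon = decoded.IndexOf(':');
        if (colon < 0)
            return false;

        user = decoded.Substring(0, colon);
        password = decoded.Substring(colon + 1);
        return true;
    }
}
```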

Earlier, we discussed the positive and negative aspects of letting the sensor be an HTTP server. One of the positive aspects is that it is very easy for others to get current information when they want it. However, it is difficult for the sensor to inform interested parties when something happens. If we had let the sensor act as an HTTP client instead, the roles would have been reversed. It would have been easy to inform others when something happens, but it would have been difficult for interested parties to get current information when they wanted it.

Since we have chosen to let the sensor be an HTTP server, Appendix H, Delayed Responses in HTTP, is dedicated to showing how we can inform interested parties of events that occur on the device, at the moment they occur, without the need for constant polling of the device. This architecture lends itself naturally to a subscription pattern, where different parties can subscribe to different types of events. These event resources will be used later by the controller to receive information when critical events occur, without the need to constantly poll the sensor.
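The general idea of a delayed response can be sketched without any HTTP library: a request handler parks a pending response until an event is raised or a timeout elapses. The following is a minimal sketch under that assumption. ExampleEventHub is a hypothetical class and does not reflect how the appendix or the Clayster libraries implement event resources.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical event hub: callers wait for the next event instead of polling.
public sealed class ExampleEventHub
{
    private readonly object sync = new object();
    private readonly List<TaskCompletionSource<string>> waiting =
        new List<TaskCompletionSource<string>>();

    // Completes with the event name when an event is raised,
    // or with null if the timeout elapses first.
    public async Task<string> WaitForEventAsync(TimeSpan timeout)
    {
        var tcs = new TaskCompletionSource<string>(
            TaskCreationOptions.RunContinuationsAsynchronously);
        lock (sync) { waiting.Add(tcs); }

        Task finished = await Task.WhenAny(tcs.Task, Task.Delay(timeout));
        if (finished != tcs.Task)
        {
            // Timed out: stop waiting. TrySetResult has no effect
            // if Raise completed the task in the meantime.
            lock (sync) { waiting.Remove(tcs); }
            tcs.TrySetResult(null);
        }
        return await tcs.Task;
    }

    // Called by the device when something happens; releases all waiters.
    public void Raise(string eventName)
    {
        TaskCompletionSource<string>[] toNotify;
        lock (sync)
        {
            toNotify = waiting.ToArray();
            waiting.Clear();
        }
        foreach (TaskCompletionSource<string> tcs in toNotify)
            tcs.TrySetResult(eventName);
    }
}
```

A subscriber would typically issue a new request as soon as the previous one completes, so that it learns of events almost immediately while sending far fewer requests than with constant polling.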

In automation, apart from having a normal web interface, it is important to be able to provide interoperable interfaces for control. One of the simplest methods to achieve this is through web services. This section shows you how to add support to an application for web services that support both SOAP and REST.

A web services resource is a special type of synchronous web resource. Instead of you parsing a query string or decoding data in the request, the web service engine does that for you. All you need to do is create methods as you normally do, perhaps with some added documentation, and the web service engine will publish these methods using both SOAP and REST. Before we define our web service, we need to add references to System.Web and System.Web.Services in our application. We also need to add a namespace reference to our application:

using System.Web.Services;

We will define a web service resource by creating a class that derives from HttpServerWebService. Apart from providing the path of the web service locally on the HTTP server, you also need to define a namespace for the web service. This namespace defines the interface: web services that share the same namespace are supposed to be interoperable, even if they are hosted on different servers. This can be seen in the following code:

private class WebServiceAPI : HttpServerWebService

{

public WebServiceAPI ()

: base ("/ws")

{

}

public override string Namespace

{

get

{

return "http://clayster.com/learniot/actuator/ws/1.0/";

}

}

}

The web service engine can help you test your web services by creating test forms for them. Normally, these test forms are only available if you navigate to the web service from the same machine. Since we also work with Raspberry Pi boards from remote computers, we must explicitly activate test forms even for remote requests. However, as soon as we no longer need these test forms, they should be made inaccessible from remote machines. We can activate test forms by using the following property override:

public override bool CanShowTestFormOnRemoteComputers

{

get

{

return true;

}

}

Since web services are used for control and can cause problems if accessed by malicious users, it is important that we enable authentication when we register the resource. This is especially important once the test forms have been activated. We can register the web service with authentication enabled, as follows:

HttpServer.Register (new WebServiceAPI (), true);

Let's begin to create web service methods. We will start with methods for control of individual outputs. For instance, the following method will allow us to get the output status of one specific digital output:

[WebMethod]

[WebMethodDocumentation ("Returns the current status of the digital output.")]

public bool GetDigitalOutput ([WebMethodParameterDocumentation ("Digital Output Number. Possible values are 1 to 8.")]int Nr)

{

if (Nr >= 1 && Nr <= 8)

return digitalOutputs [Nr - 1].Value;

else

return false;

}

The WebMethod attribute tells the web service engine that this method should be published and can be accessed through the web service. To help the consumer of the web service, we should also provide documentation on how the web service works. This is done by adding the WebMethodDocumentation attribute to each method, and the WebMethodParameterDocumentation attribute to each input parameter. This documentation will be displayed in test forms and in the WSDL documents describing the web service.

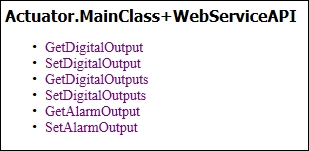

We will also create a method for setting an individual output, as follows:

[WebMethod]

[WebMethodDocumentation ("Sets the value of a specific digital output.")]

public void SetDigitalOutput (

[WebMethodParameterDocumentation (

"Digital Output Number. Possible values are 1 to 8.")]

int Nr,

[WebMethodParameterDocumentation ("Output State to set.")]

bool Value)

{

if (Nr >= 1 && Nr <= 8)

{

digitalOutputs [Nr - 1].Value = Value;

state.SetDO (Nr, Value);

state.UpdateIfModified ();

}

}

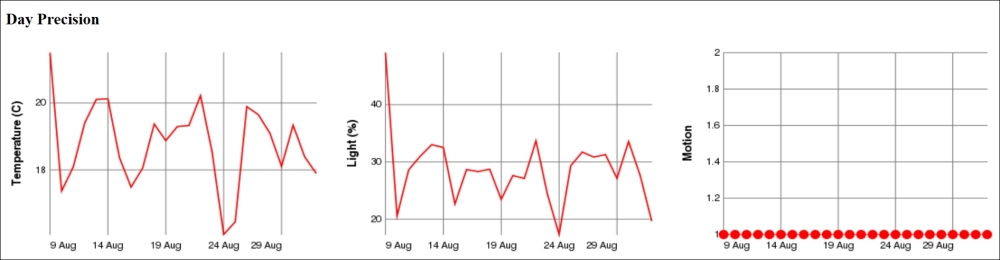

The web services class also provides web methods for setting and getting all digital outputs at once. Here, the digital outputs are encoded into one byte, where each output corresponds to one bit (DO1=bit 0 … DO8=bit 7). The following methods are implemented analogously to the previous methods:

public byte GetDigitalOutputs ();

public void SetDigitalOutputs (byte Values);
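The bit encoding described above (DO1 in bit 0 through DO8 in bit 7) can be sketched as follows. DigitalOutputBits is a hypothetical helper written for this illustration; it is not part of the project's source code, which may perform the conversion differently.

```csharp
using System;

// Hypothetical helper illustrating the one-byte encoding of eight outputs.
public static class DigitalOutputBits
{
    // Packs eight output states into one byte: outputs[0] (DO1) becomes
    // bit 0, and outputs[7] (DO8) becomes bit 7.
    public static byte Pack(bool[] outputs)
    {
        if (outputs == null || outputs.Length != 8)
            throw new ArgumentException("Exactly eight outputs are required.", "outputs");

        byte result = 0;
        for (int i = 0; i < 8; i++)
        {
            if (outputs[i])
                result |= (byte)(1 << i);
        }
        return result;
    }

    // Unpacks a byte into eight output states, using the same bit order.
    public static bool[] Unpack(byte values)
    {
        var outputs = new bool[8];
        for (int i = 0; i < 8; i++)
            outputs[i] = (values & (1 << i)) != 0;
        return outputs;
    }
}
```

For example, switching on DO1, DO3, and DO8 sets bits 0, 2, and 7, which gives 1 + 4 + 128 = 133 (0x85).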

In the same way, the following two web methods are published for getting and setting the state of the alarm output:

public bool GetAlarmOutput ();

public void SetAlarmOutput (bool Value);

To access the test form of a web service, we only need to browse to the resource using a browser. In our case, the path of our web service is /ws; so, if the IP address of our Raspberry Pi is 192.168.0.23, we only need to go to http://192.168.0.23/ws and we will see something similar to the following in the browser:

The main page of the web service will contain links to each method published by the service. Clicking on any of the methods will open the test form for that web method in a separate tab. The following image shows the test form for the SetDigitalOutput web method:

You will notice that the web service documentation that we added to the method and its parameters is displayed in the test form. Since no default value attributes were added to any of the parameters, all parameters are required. This is indicated in the image by a red asterisk next to each parameter. If you want to specify default values for parameters, you can use any of the available WebMethodParameterDefault* attributes.

When you click on the Execute button, the method will be executed and a new tab will open that will contain the response of the call. If the web method has a return type set as void, the new tab will simply be blank. Otherwise, the new tab will contain a SOAP message encoded using XML.

The SOAP web service interface is documented in what is called a Web Services Description Language (WSDL) document. This document is automatically generated by the web services engine. You can access it through the same URL that you use to access the test form, by adding ?wsdl at the end (http://192.168.0.23/ws?wsdl).

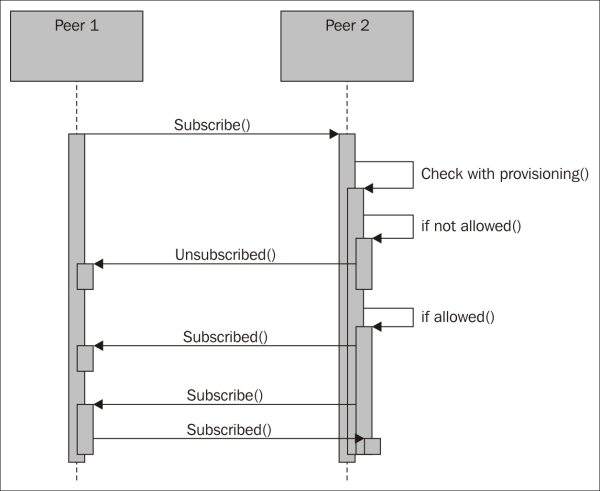

By using this WSDL, you can use development tools to automatically create code that accesses your web service. You can also use web service test tools such as SoapUI to test and automate your web services in a simple manner. You can download and test SoapUI at http://www.soapui.org/.