What this learning path covers 43

What you need for this learning path 44

Who this learning path is for 45

Downloading the example code 47

Creating the sensor project 50

Interacting with our hardware 55

Internal representation of sensor values 57

External representation of sensor values 58

Creating the actuator project 62

Accessing the serial port on Raspberry Pi 70

Creating persistent default settings 71

Adding configurable properties 72

Working with the current settings 74

Adding HTTP support to the sensor 81

Setting up an HTTP server on the sensor 81

Setting up an HTTPS server on the sensor 84

Displaying measured information in an HTML page 86

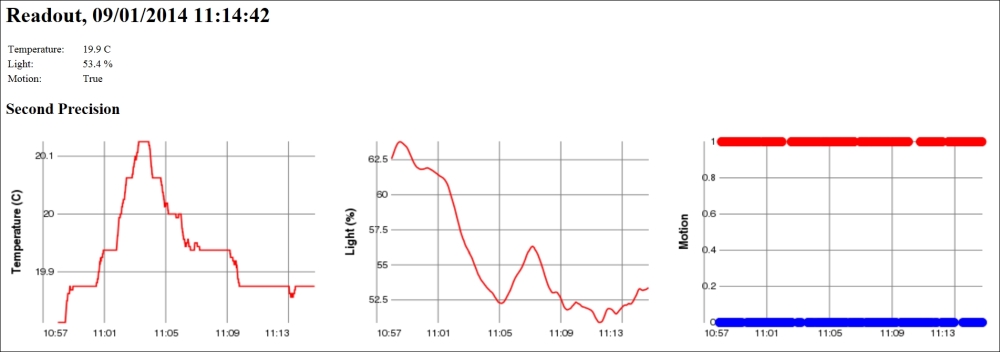

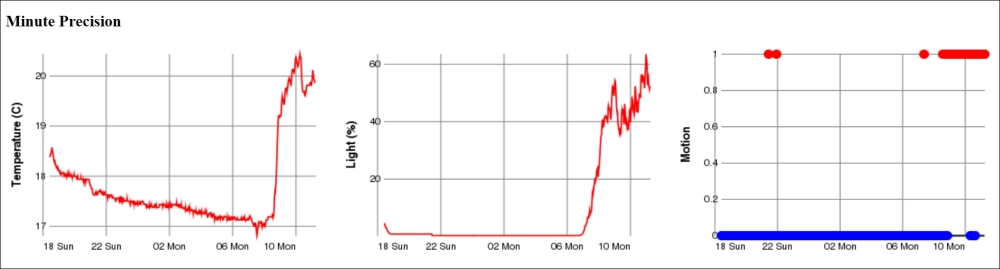

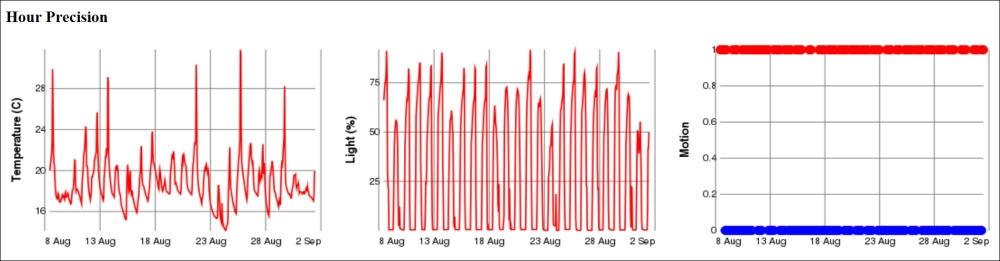

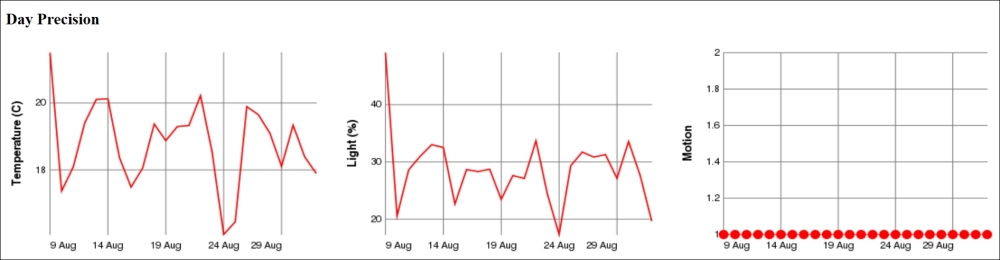

Generating graphics dynamically 88

Creating sensor data resources 95

Interpreting the readout request 95

Adding events for enhanced network performance 97

Adding HTTP support to the actuator 99

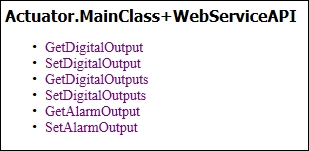

Creating the web services resource 99

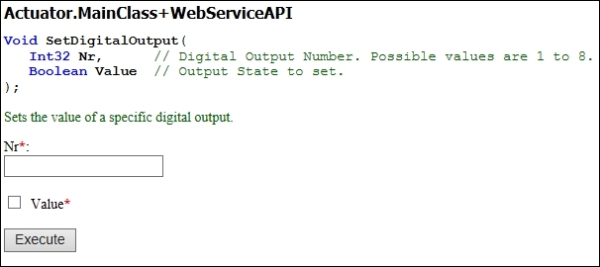

Accessing individual outputs 100

Collective access to outputs 101

Accessing the alarm output 101

Using the REST web service interface 103

Adding HTTP support to the controller 104

Creating the control thread 106

Providing a service architecture 110

Documenting device and service capabilities 111

Creating a device description document 113

Providing the device with an identity 114

Adding references to services 115

Topping off with a URL to a web presentation page 116

Creating the service description document 117

Adding a unique device name 118

Registering UPnP resources 121

Implementing the Still Image service 129

Initializing evented state variables 129

Providing web service properties 129

Discovering devices and services 133

Defining our first CoAP resources 142

Manually triggering an event notification 143

Registering data readout resources 144

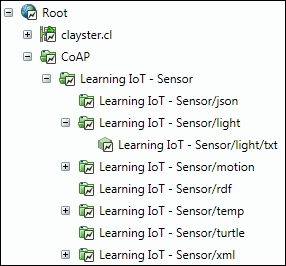

Discovering CoAP resources 147

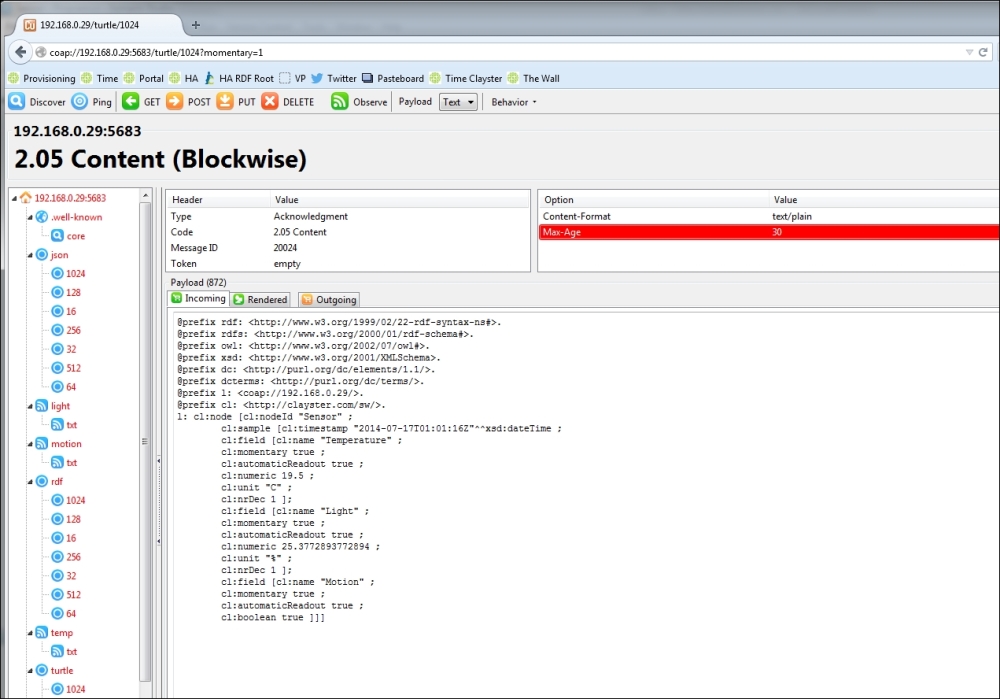

Testing our CoAP resources 148

Adding CoAP to our actuator 150

Defining simple control resources 150

Controlling the output using CoAP 152

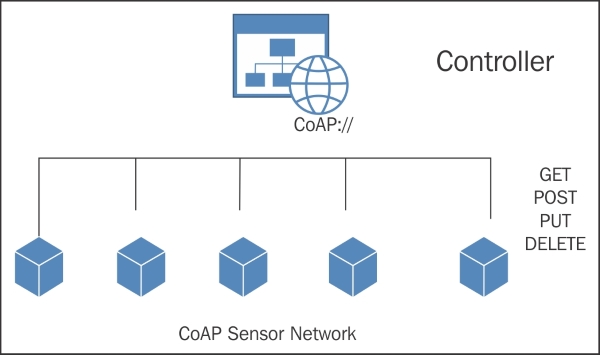

Using CoAP in our controller 154

Monitoring observable resources 154

Performing control actions 156

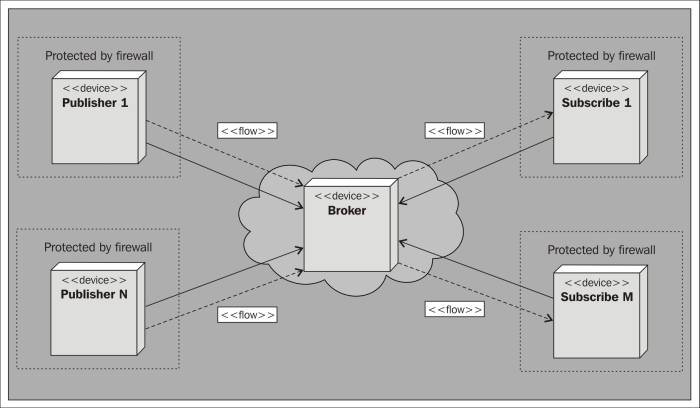

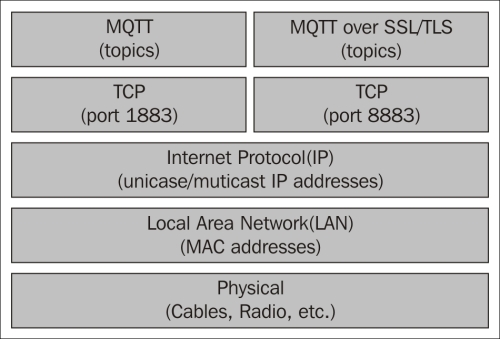

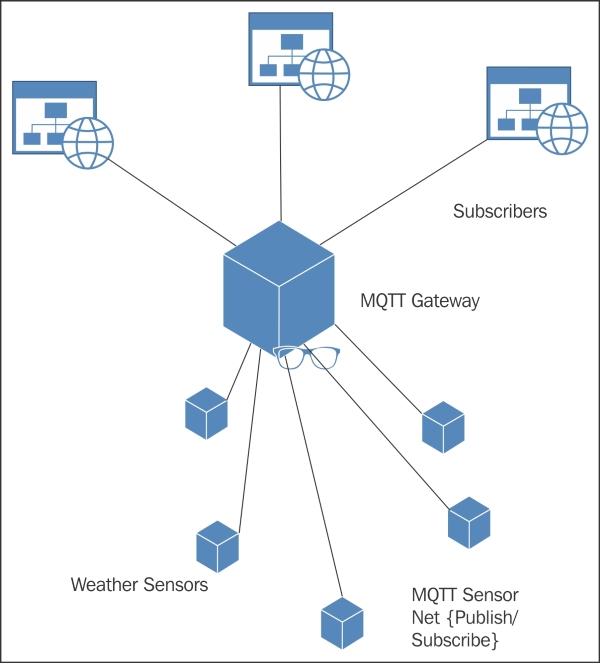

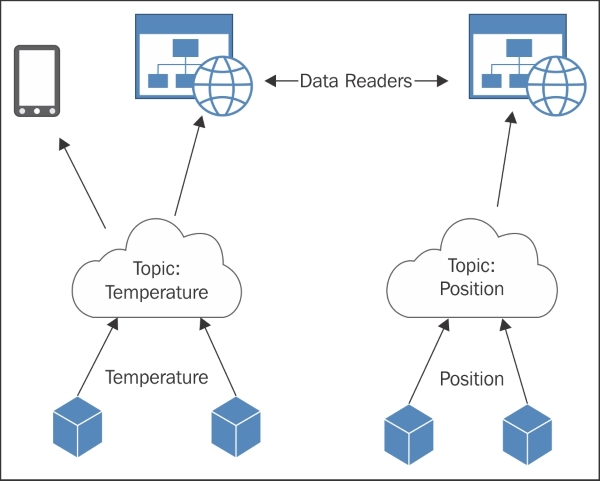

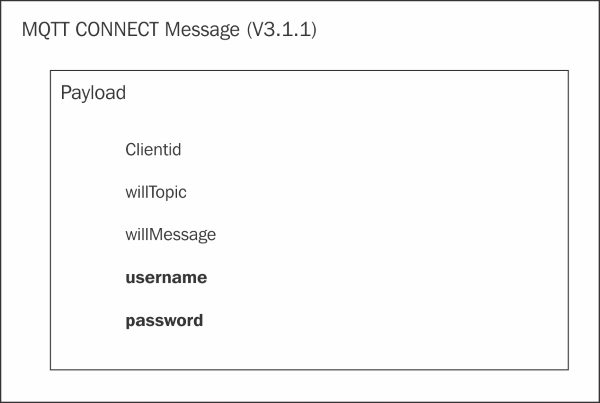

Publishing and subscribing 160

Adding MQTT support to the sensor 163

Controlling the thread life cycle 163

Flagging significant events 164

Connecting to the MQTT server 165

Adding MQTT support to the actuator 169

Initializing the topic content 169

Receiving the published content 170

Decoding and parsing content 171

Adding MQTT support to the controller 173

Handling events from the sensor 173

Decoding and parsing sensor values 173

Subscribing to sensor events 174

Controlling the LED output 175

Controlling the alarm output 176

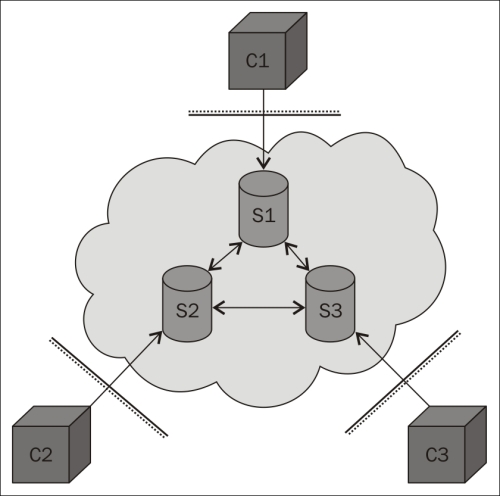

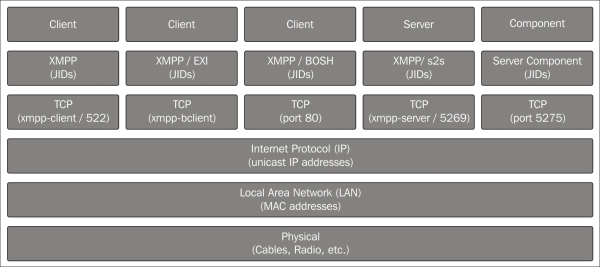

Federating for global scalability 179

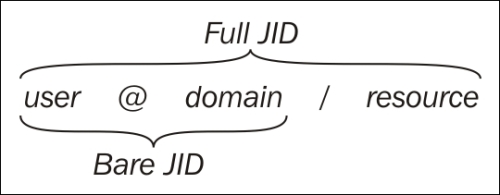

Providing a global identity 181

Provisioning for added security 186

Adding XMPP support to a thing 188

Connecting to the XMPP network 188

Monitoring connection state events 189

Handling HTTP requests over XMPP 190

Providing an additional layer of security 192

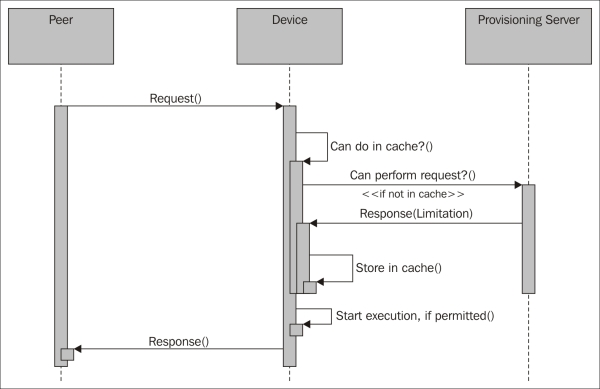

The basics of provisioning 192

Initializing the Thing Registry interface 194

Removing a thing from the registry 196

Initializing the provisioning server interface 198

Handling friendship recommendations 198

Handling requests to unfriend somebody 199

Searching for a provisioning server 199

Providing registry information 201

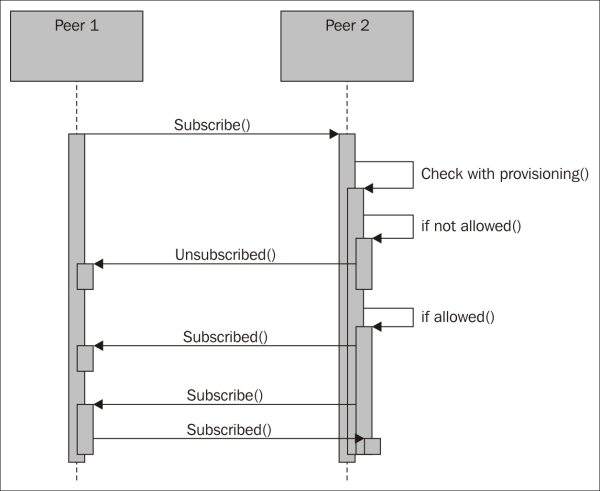

Handling presence subscription requests 203

Continuing interrupted negotiations 205

Adding XMPP support to the sensor 205

Adding a sensor server interface 205

Updating event subscriptions 206

Adding XMPP support to the actuator 208

Adding a controller server interface 208

Adding XMPP support to the camera 210

Adding XMPP support to the controller 211

Setting up a sensor client interface 211

Subscribing to sensor data 211

Handling incoming sensor data 212

Setting up a controller client interface 213

Setting up a camera client interface 214

Fetching the camera image over XMPP 215

Identifying peer capabilities 215

Connecting it all together 219

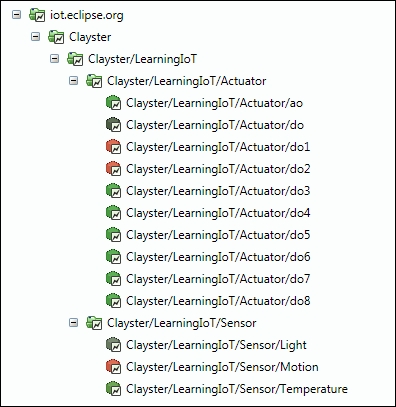

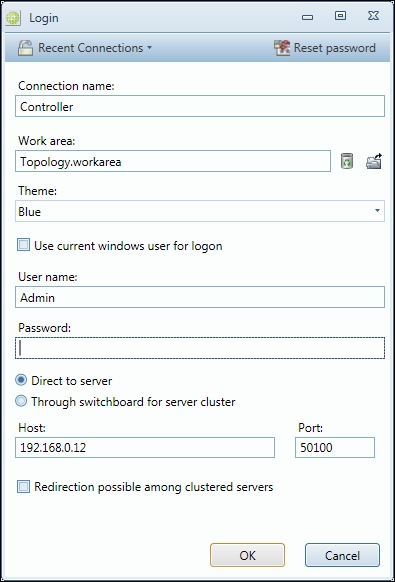

Downloading the Clayster platform 224

Creating a service project 224

Executing from Visual Studio 228

Configuring the Clayster system 228

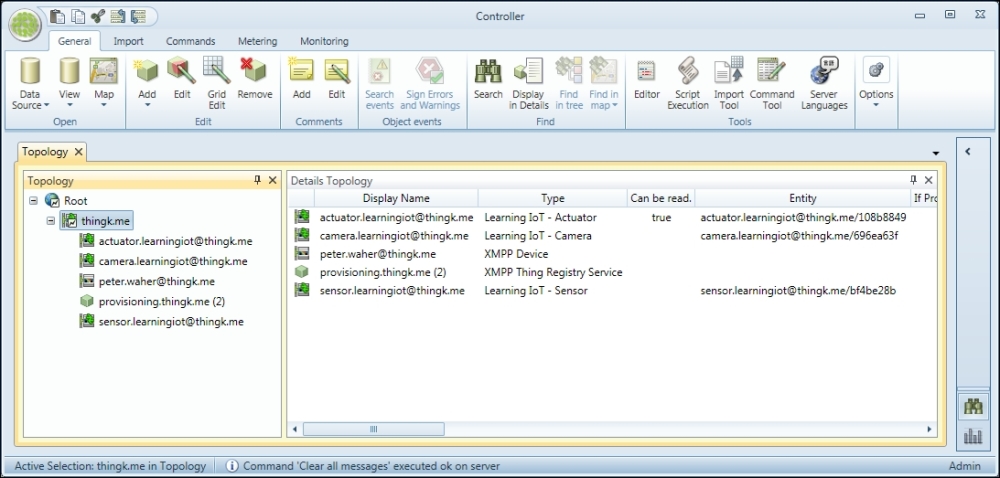

Interfacing our devices using XMPP 232

Creating a class for our sensor 232

Subscribing to sensor data 233

Interpreting incoming sensor data 234

Creating a class for our actuator 235

Customizing control operations 236

Creating a class for our camera 237

Creating our control application 238

Defining the application class 239

Initializing the controller 239

Understanding application references 241

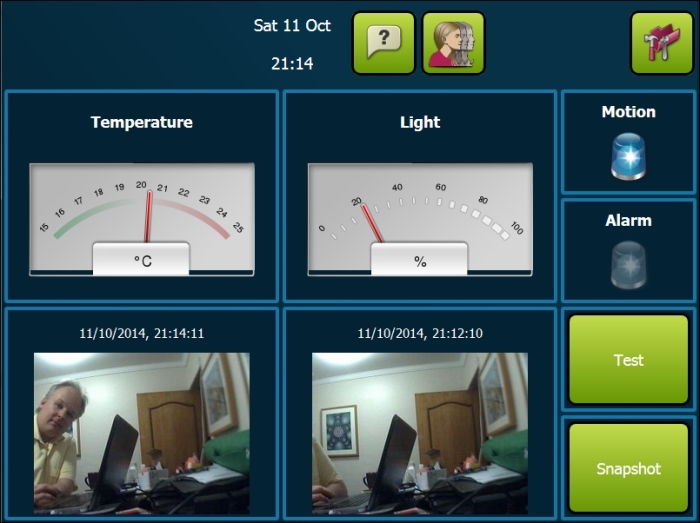

Displaying a binary signal 244

Pushing updates to the client 245

Completing the application 247

Configuring the application 248

Viewing the 10-foot interface application 248

Understanding protocol bridging 252

Using an abstraction model 255

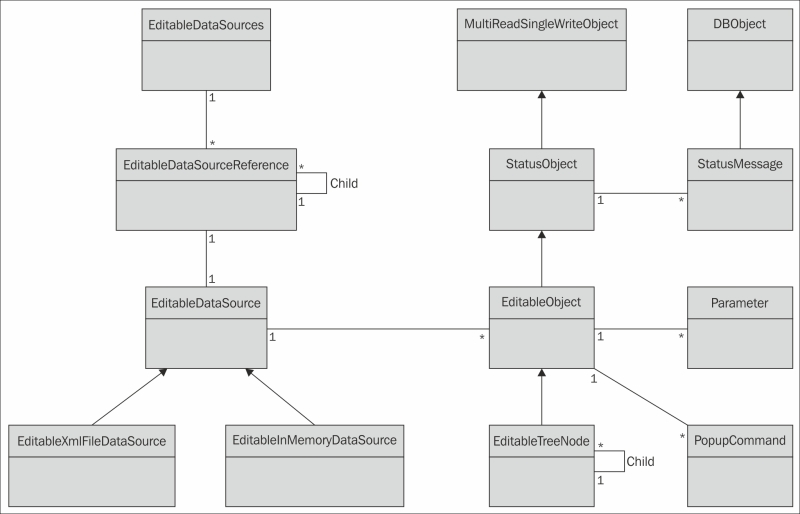

The basics of the Clayster abstraction model 257

Understanding editable data sources 257

Understanding editable objects 258

Overriding key properties and methods 260

Handling communication with devices 261

Understanding the CoAP gateway architecture 263

Reinventing the wheel, but an inverted one 267

Getting access to stored credentials 270

Sniffing network communication 271

Port scanning and web crawling 272

Search features and wildcards 273

Tools for achieving security 275

X.509 certificates and encryption 275

Authentication of identities 276

Using message brokers and provisioning servers 277

Centralization versus decentralization 277

The need for interoperability 279

Allows new kinds of services and reuse of devices 280

Combining security and interoperability 280

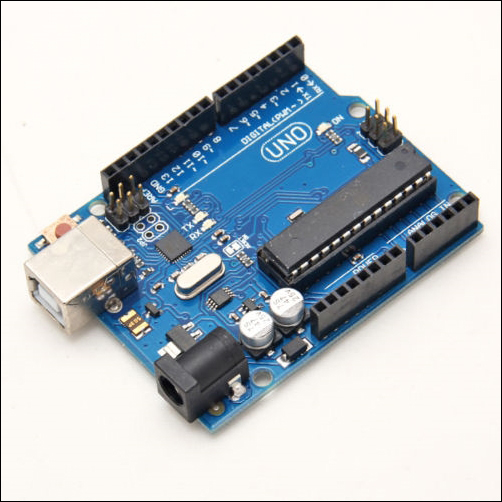

Hardware and software requirements 284

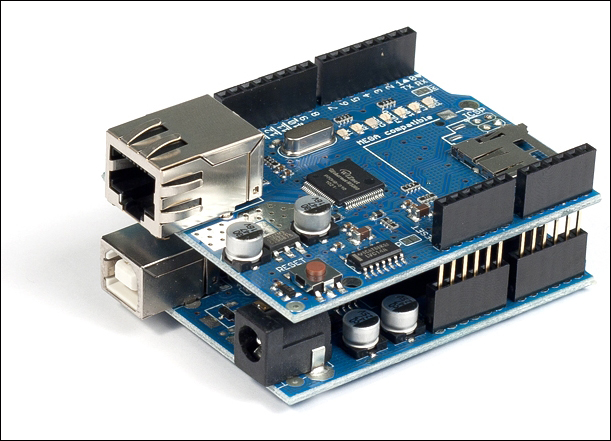

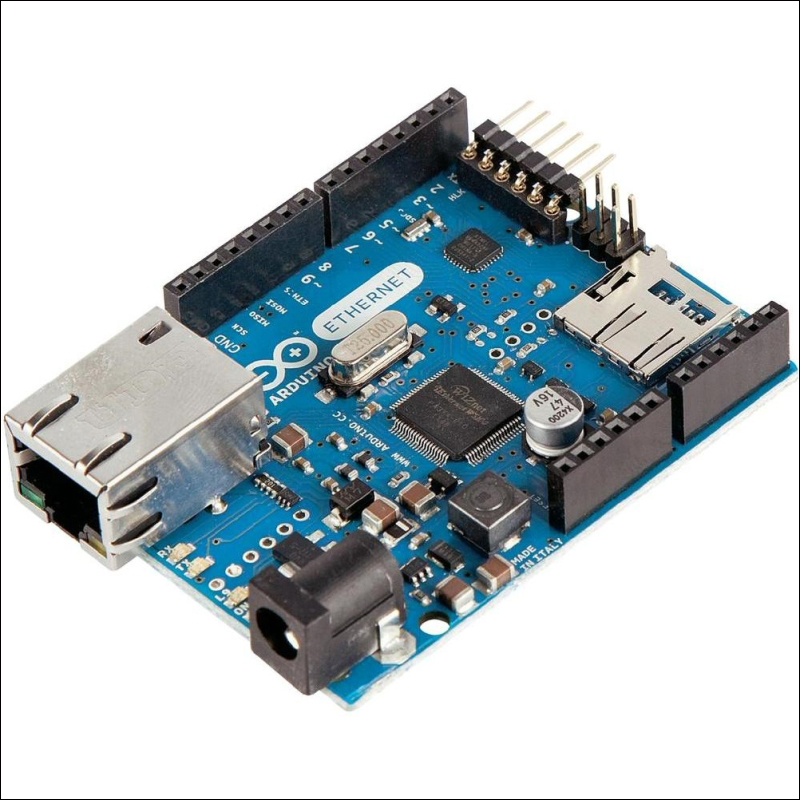

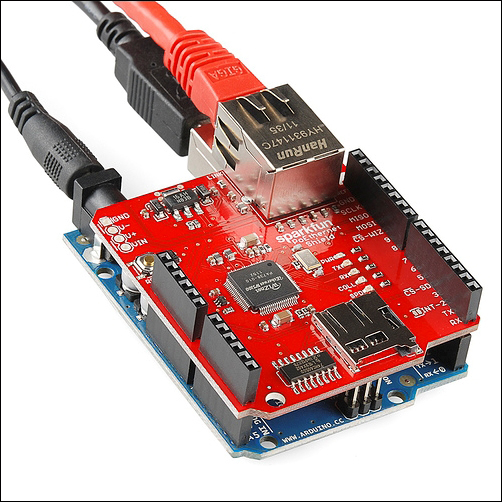

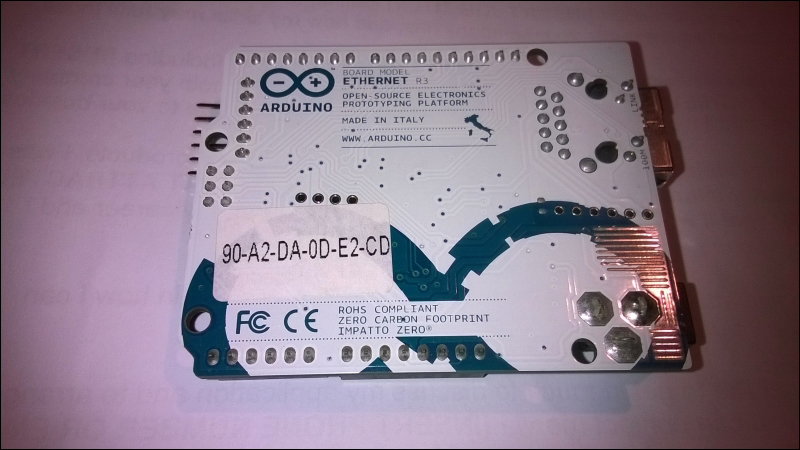

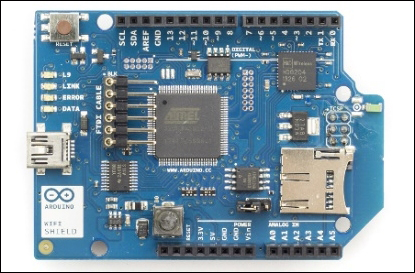

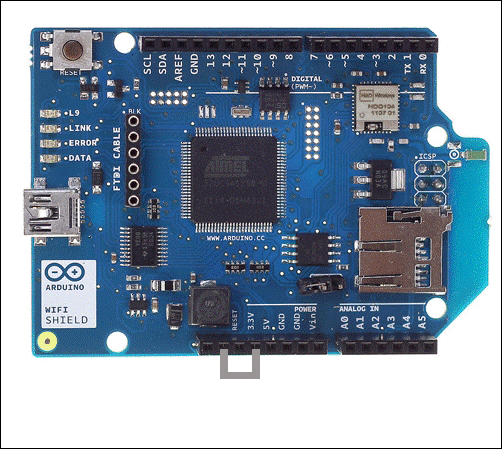

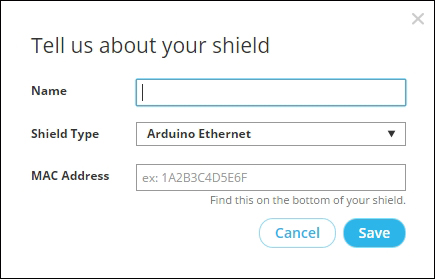

The Arduino Ethernet board 288

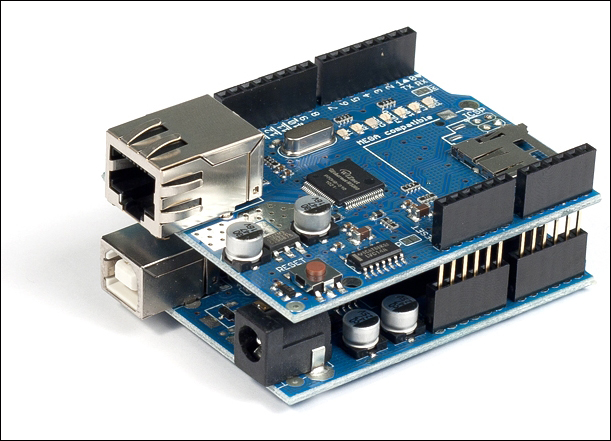

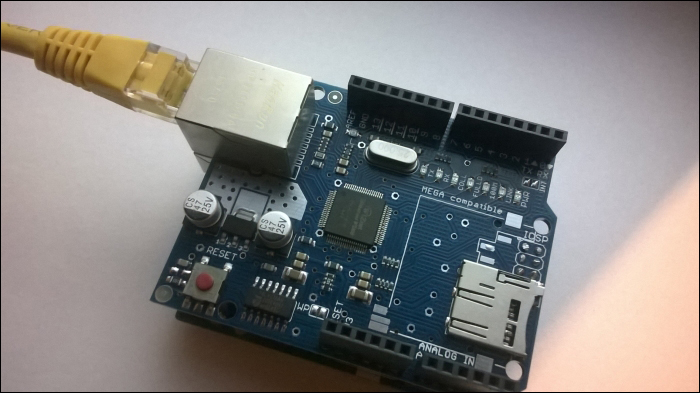

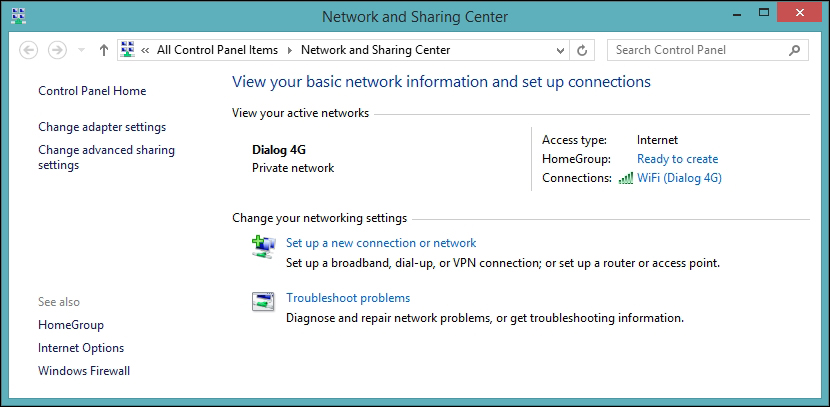

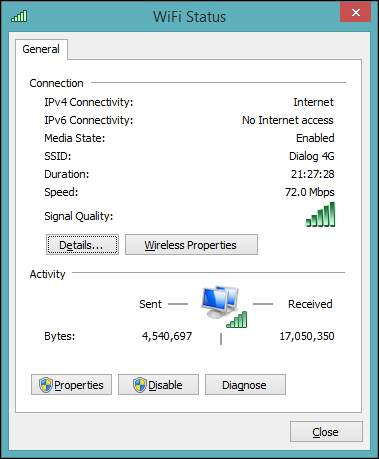

Connecting Arduino Ethernet Shield to the Internet 290

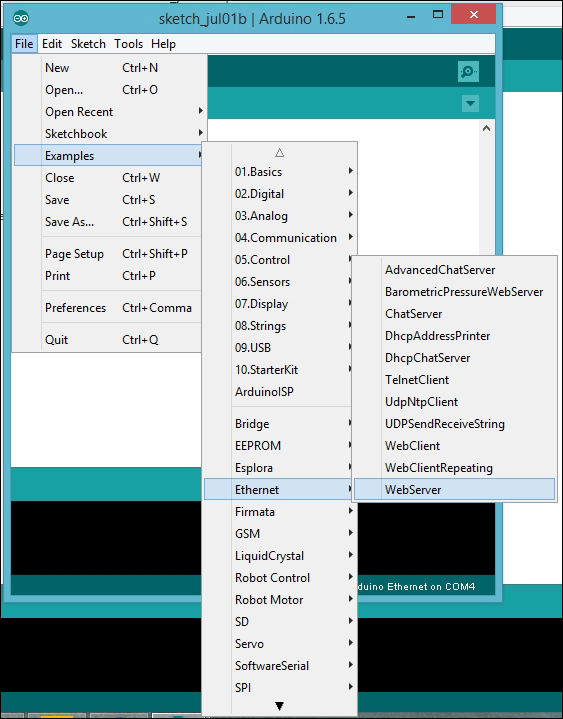

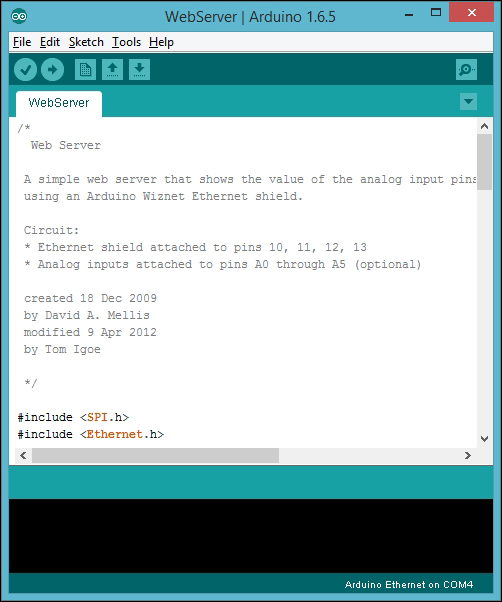

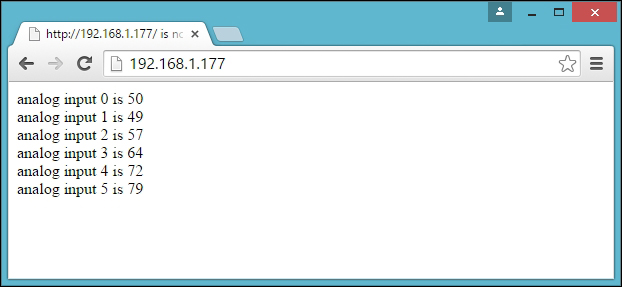

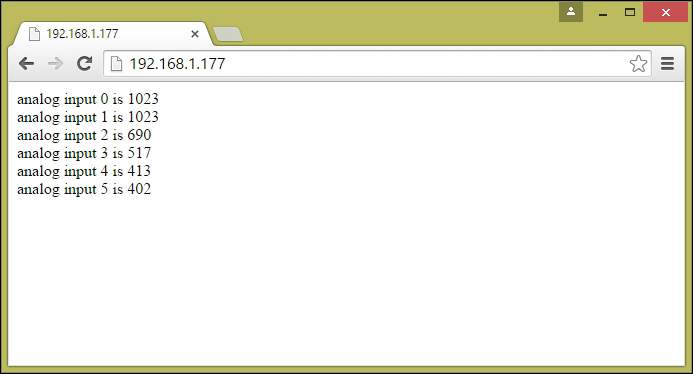

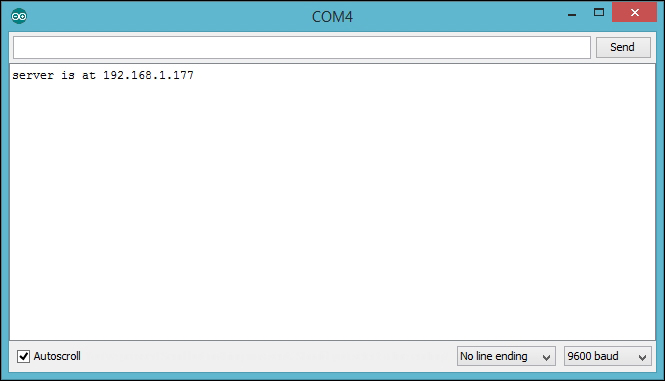

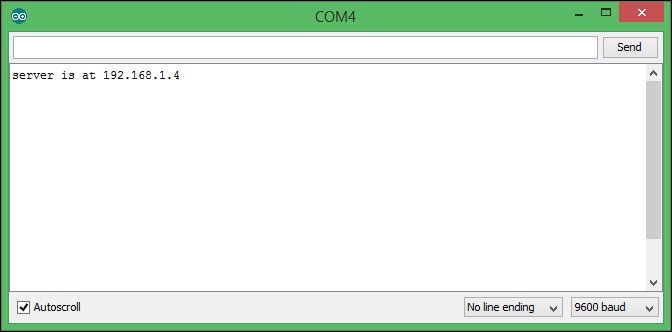

Testing your Arduino Ethernet Shield 293

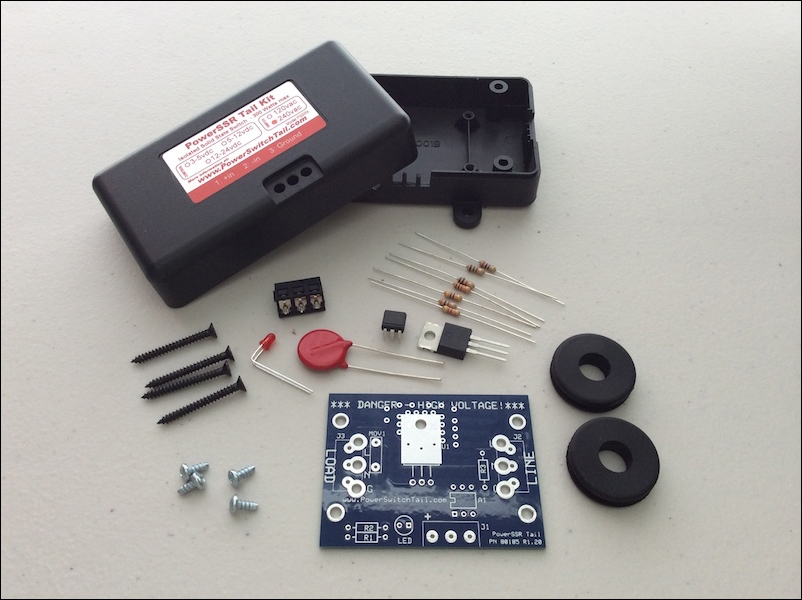

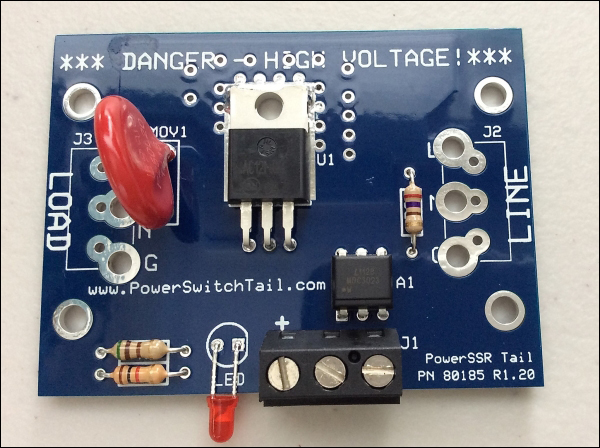

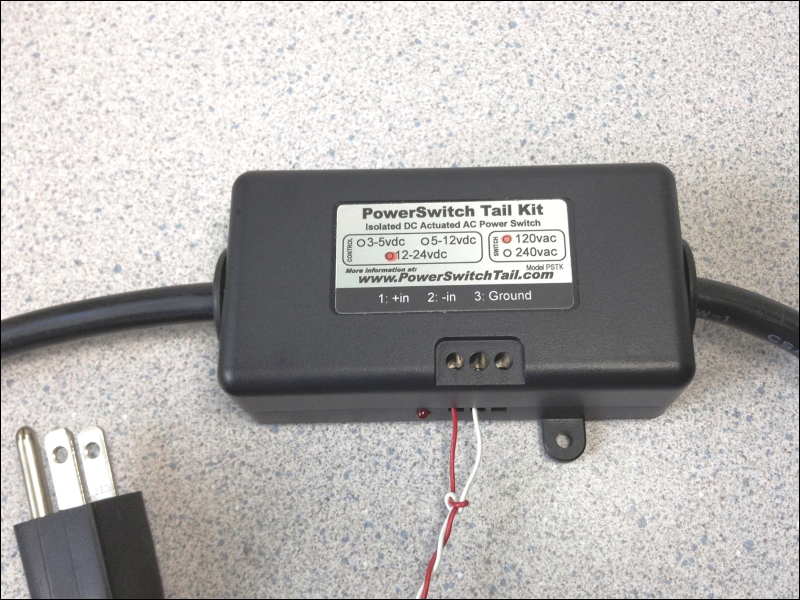

Selecting a PowerSwitch Tail 301

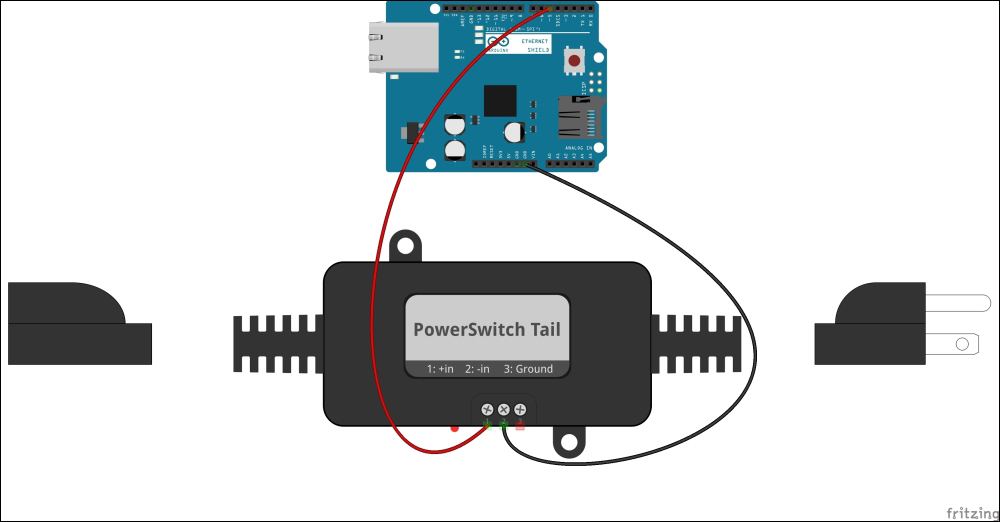

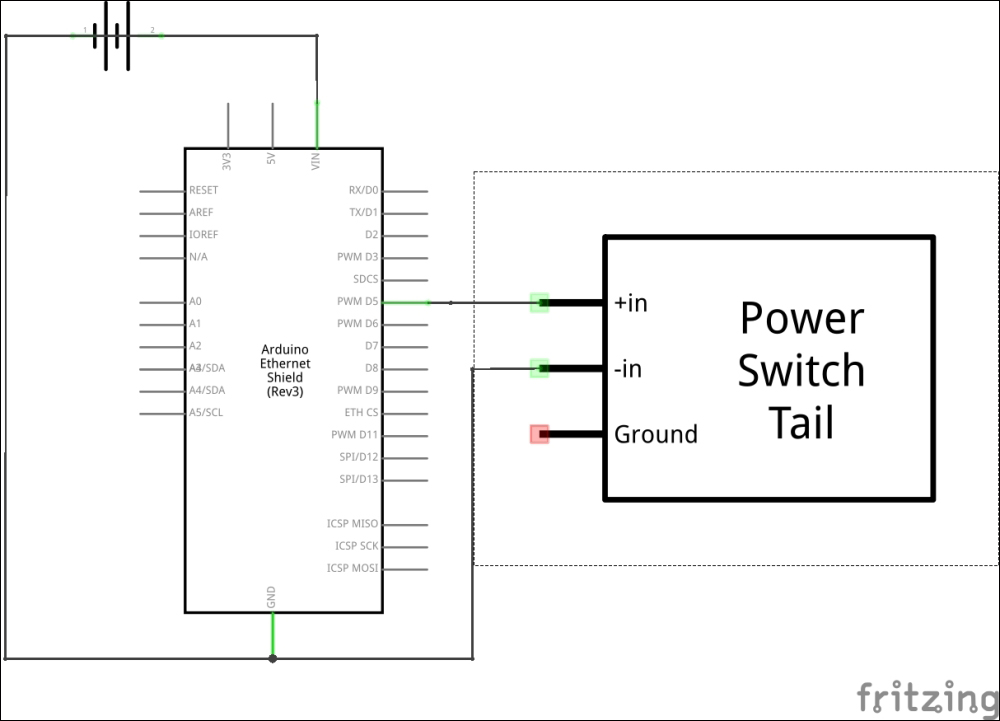

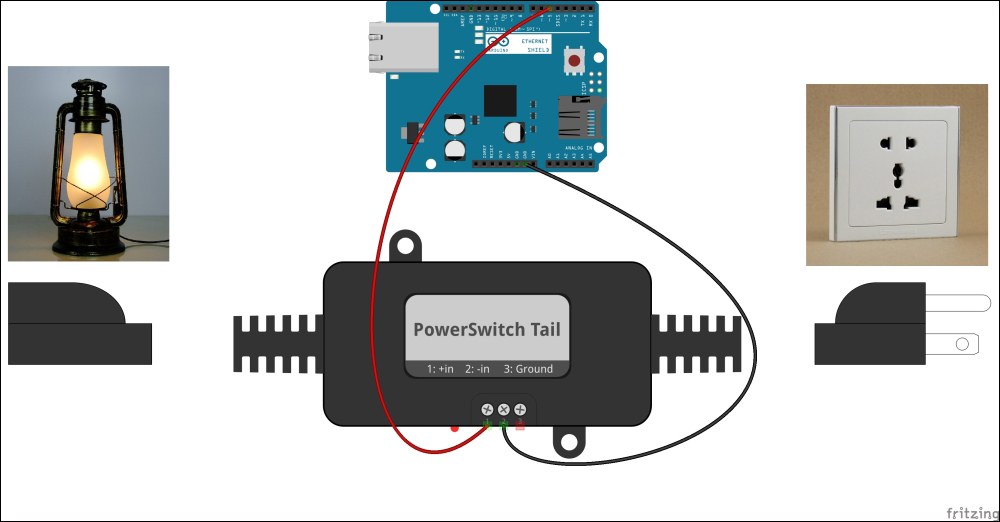

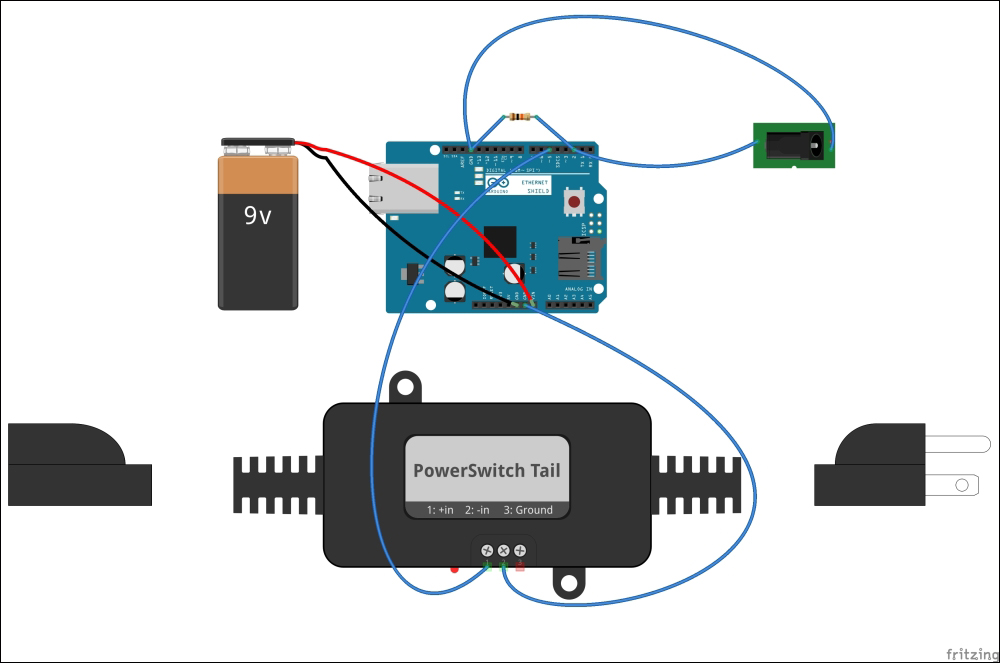

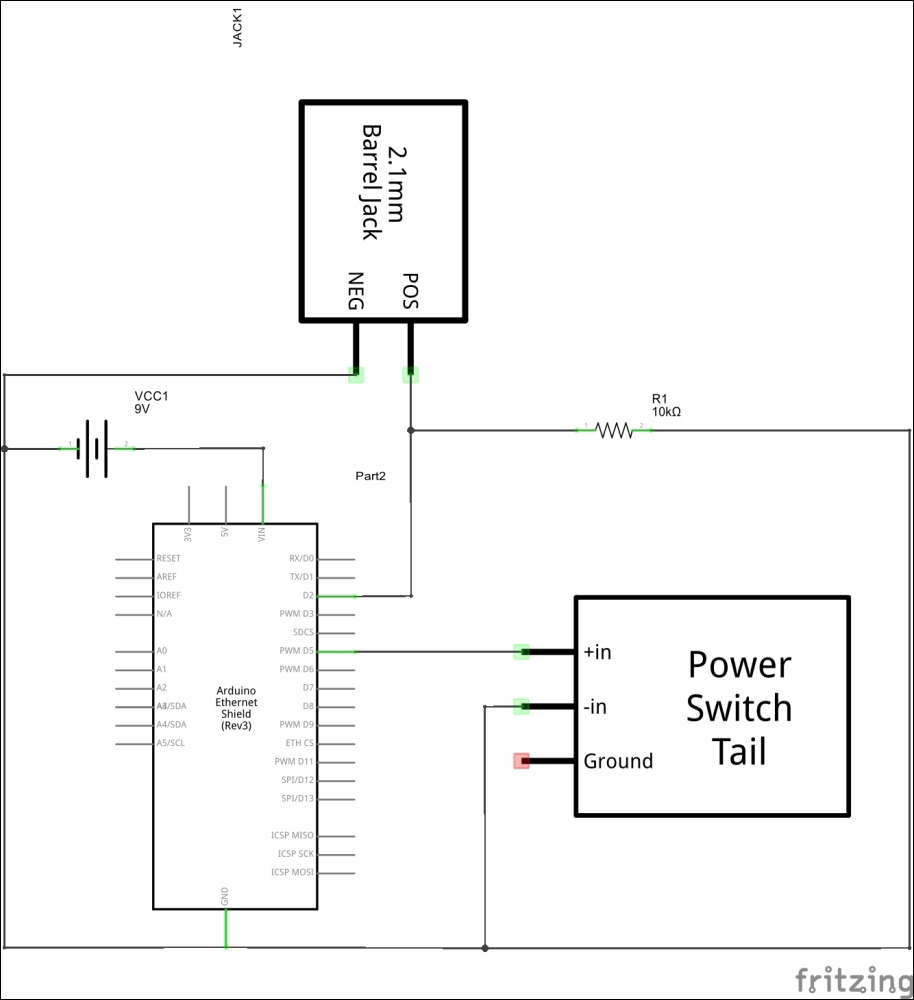

Wiring PowerSwitch Tail with Arduino Ethernet Shield 305

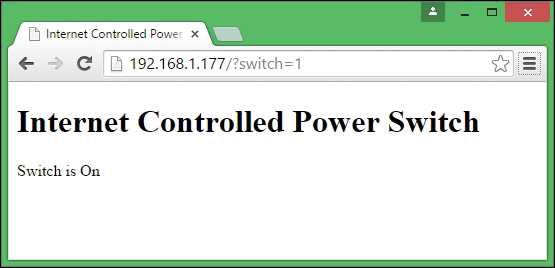

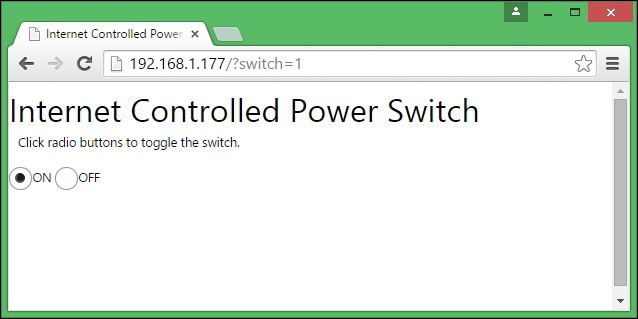

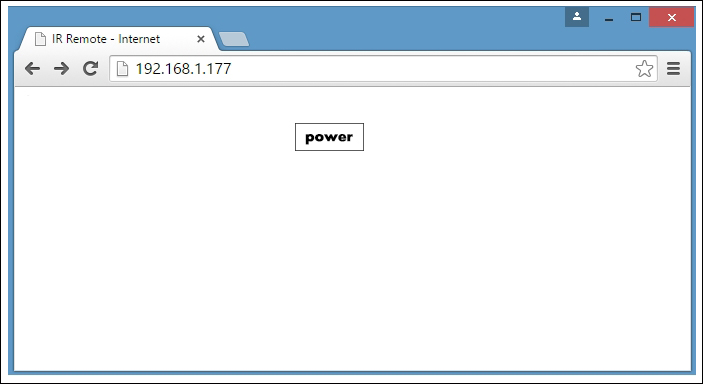

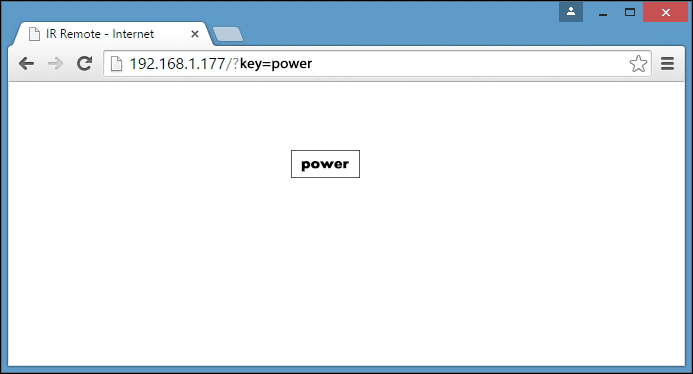

Turning PowerSwitch Tail into a simple web server 309

A step-by-step process for building a web-based control panel 309

Handling client requests by HTTP GET 309

Sensing the availability of mains electricity 313

Testing the mains electricity sensor 317

Building a user-friendly web user interface 317

Adding a Cascade Style Sheet to the web user interface 319

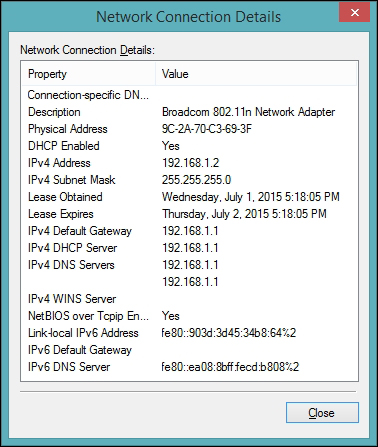

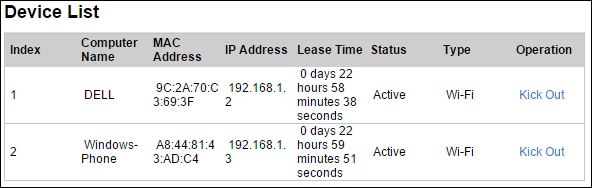

Finding the MAC address and obtaining a valid IP address 321

Assigning a static IP address 322

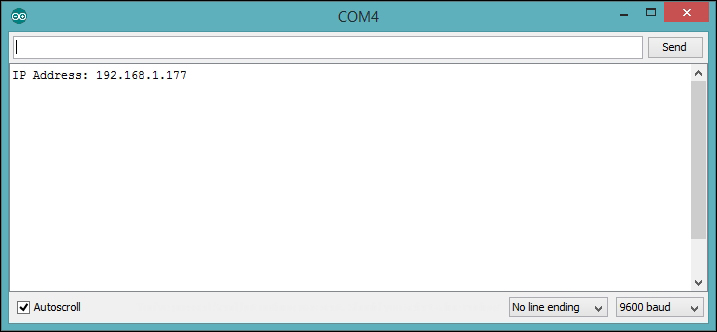

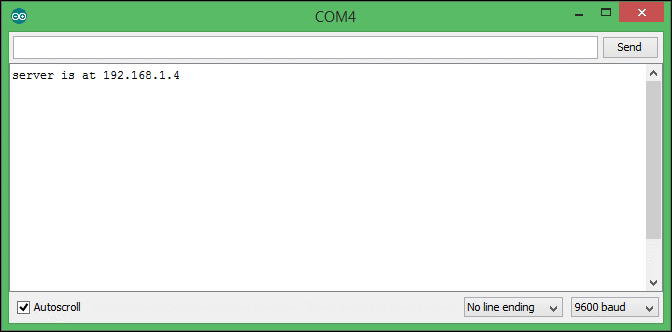

Obtaining an IP address using DHCP 326

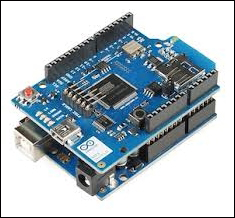

Stacking the WiFi Shield with Arduino 331

Hacking an Arduino earlier than REV3 331

Knowing more about connections 332

Fixing the Arduino WiFi library 332

Connecting your Arduino to a Wi-Fi network 333

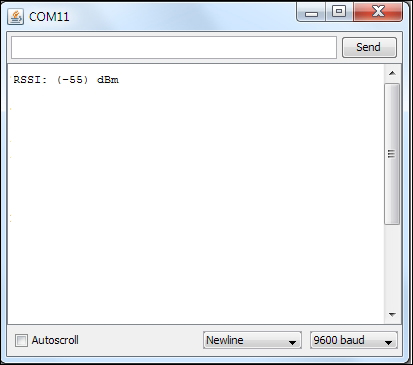

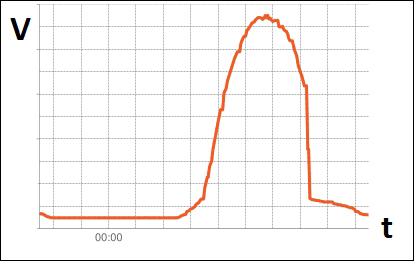

Wi-Fi signal strength and RSSI 339

Reading the Wi-Fi signal strength 339

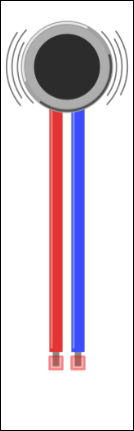

Haptic feedback and haptic motors 343

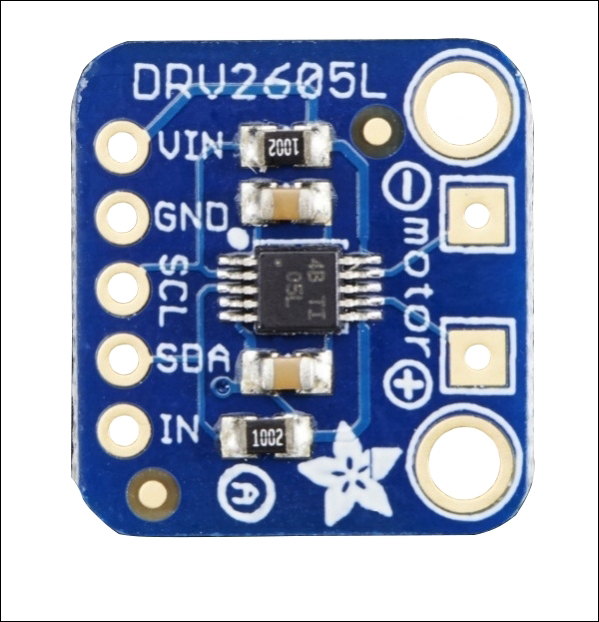

Getting started with the Adafruit DRV2605 haptic controller 343

Selecting a correct vibrator 344

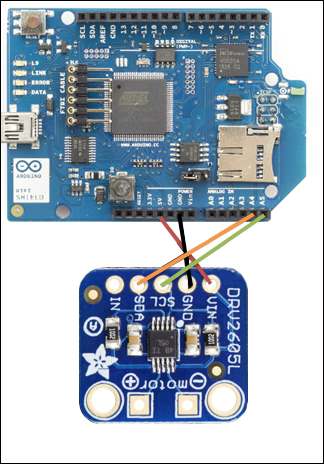

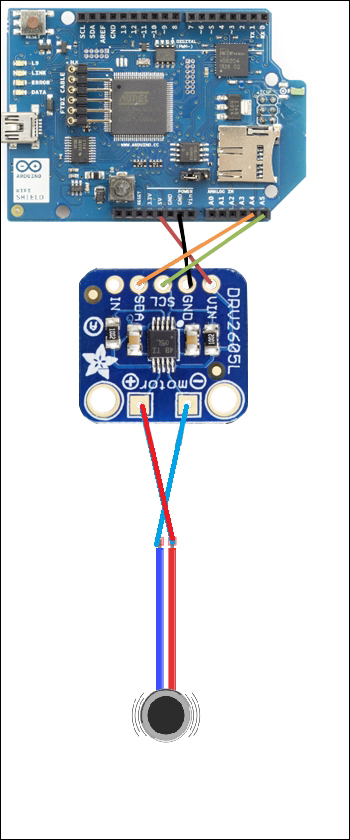

Connecting a haptic controller to Arduino WiFi Shield 345

Soldering a vibrator to the haptic controller breakout board 346

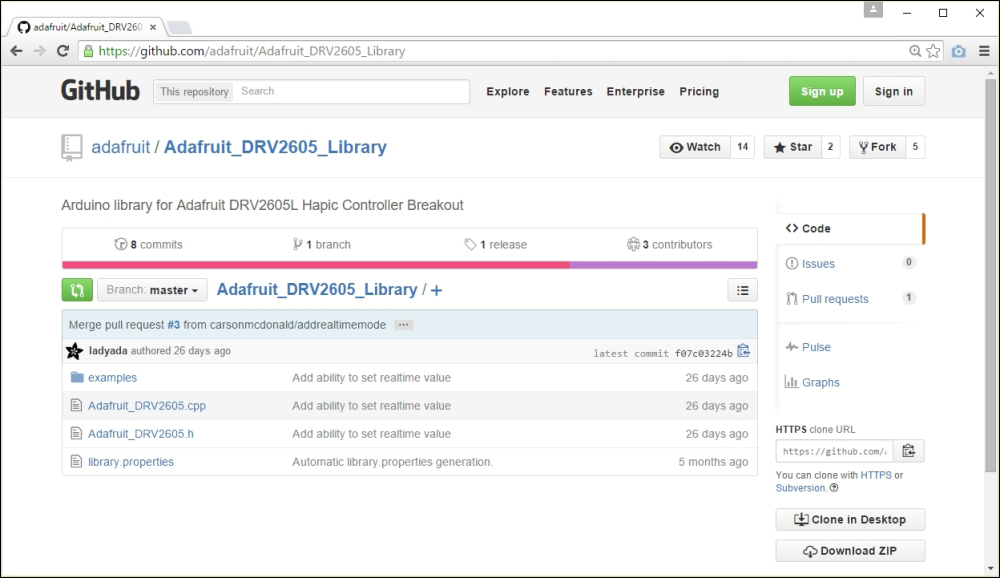

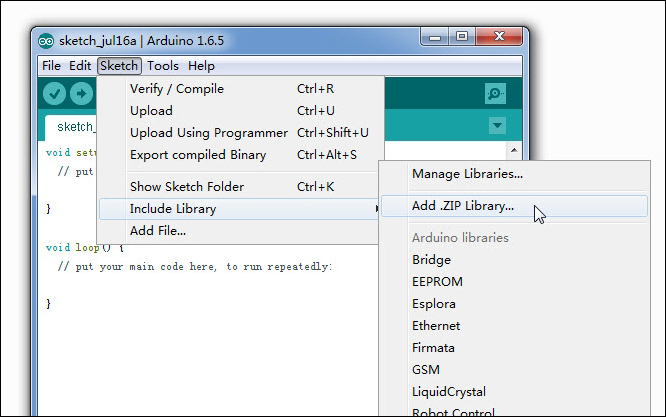

Downloading the Adafruit DRV2605 library 348

Making vibration effects for RSSI 349

Implementing a simple web server 351

Reading the signal strength over Wi-Fi 351

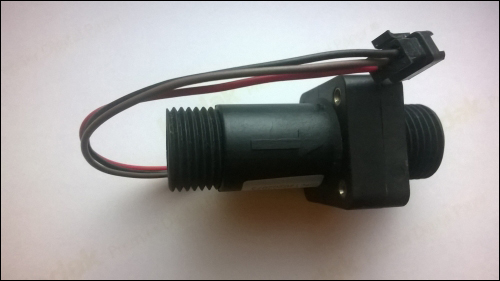

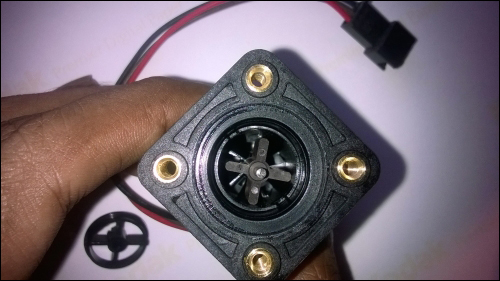

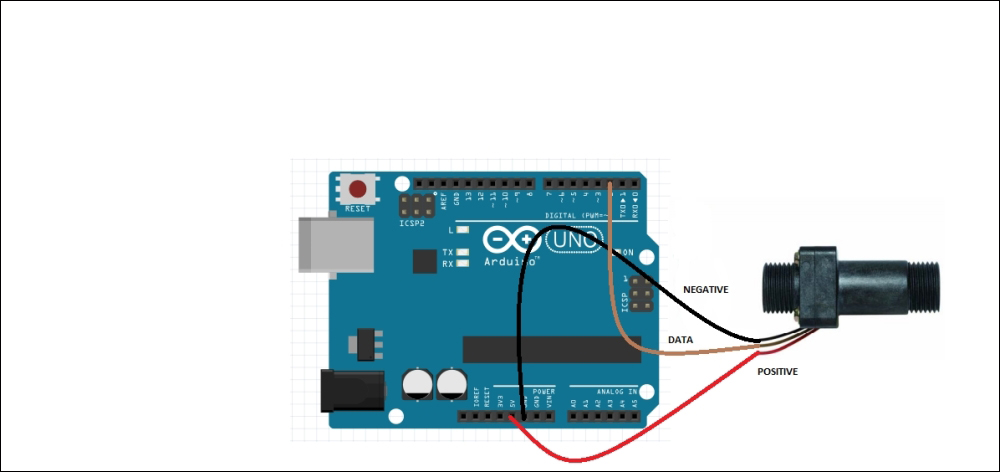

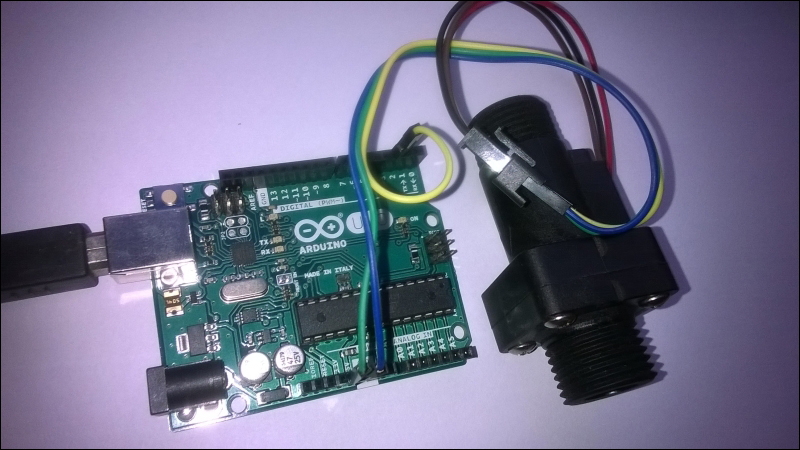

Wiring the water flow sensor with Arduino 355

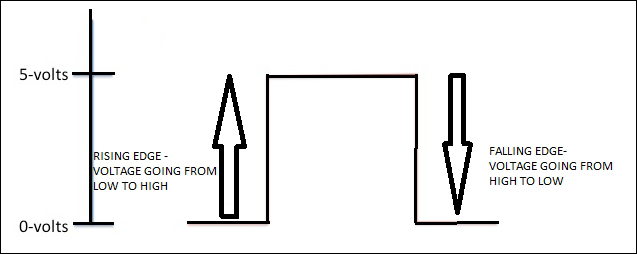

Rising edge and falling edge 358

Reading and counting pulses with Arduino 359

Calculating the water flow rate 361

Calculating the water flow volume 363

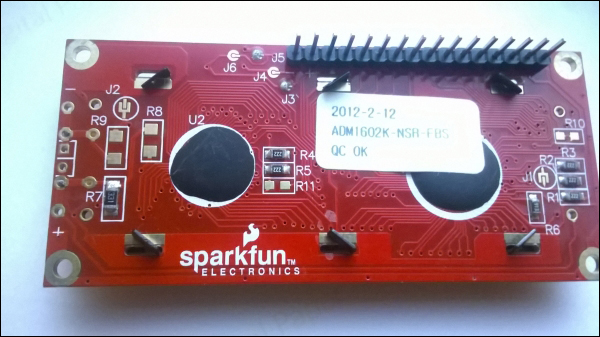

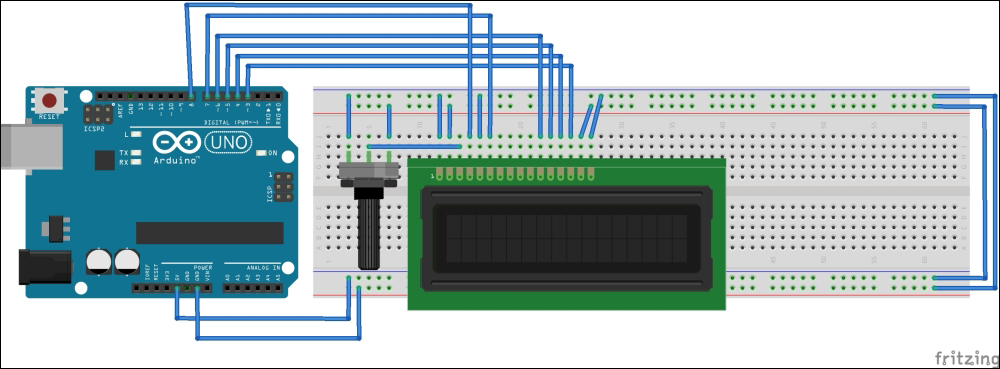

Adding an LCD screen to the water meter 365

Converting your water meter to a web server 369

A little bit about plumbing 370

Getting started with TTL Serial Camera 375

Wiring the TTL Serial Camera for image capturing 376

Wiring the TTL Serial Camera for video capturing 378

Testing NTSC video stream with video screen 379

Connecting the TTL Serial Camera with Arduino and Ethernet Shield 382

Image capturing with Arduino 385

The Software Serial library 385

How the image capture works 385

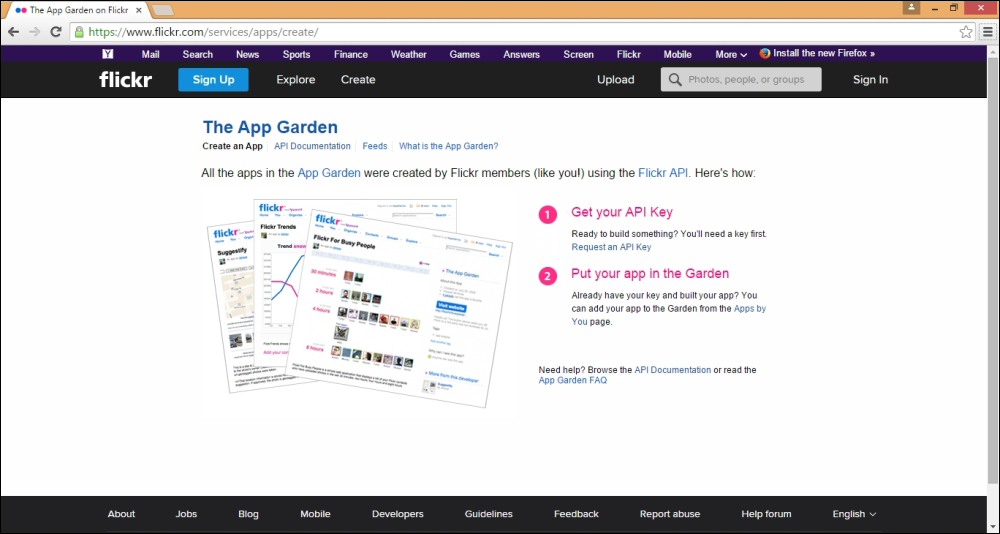

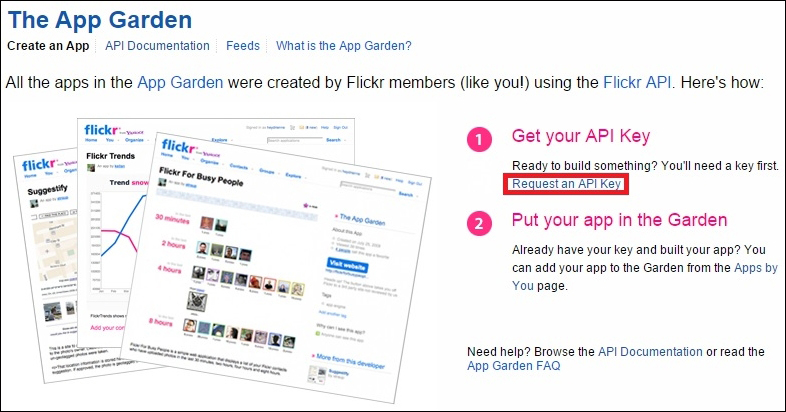

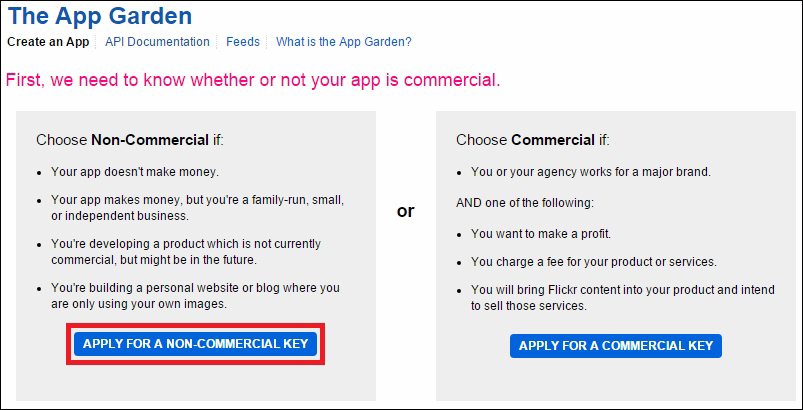

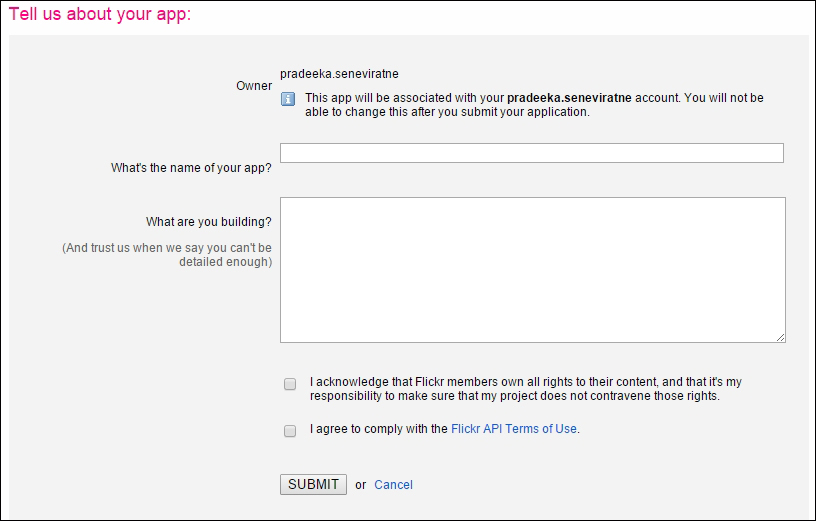

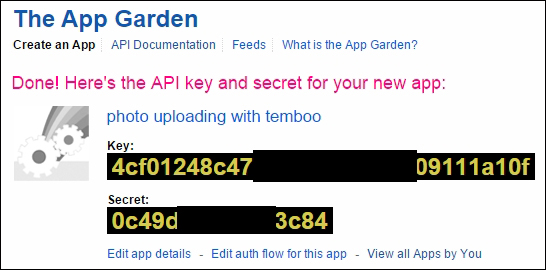

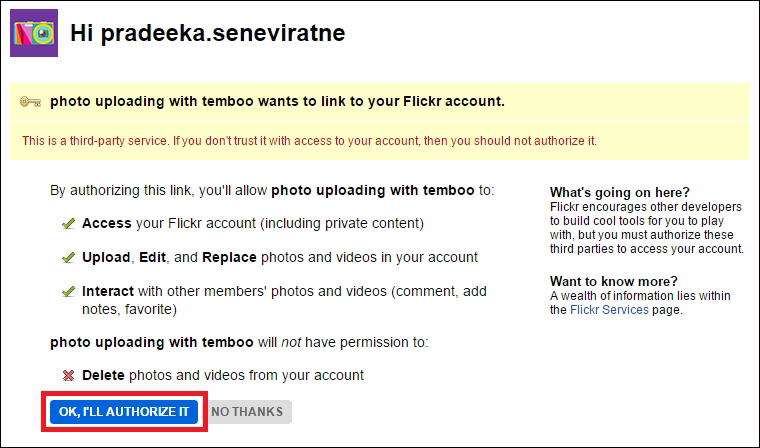

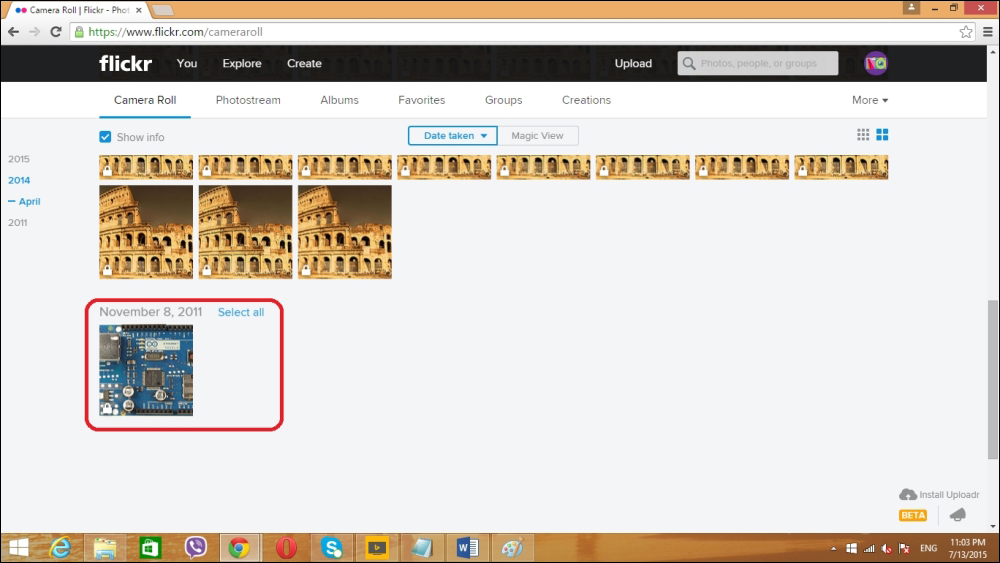

Uploading images to Flickr 387

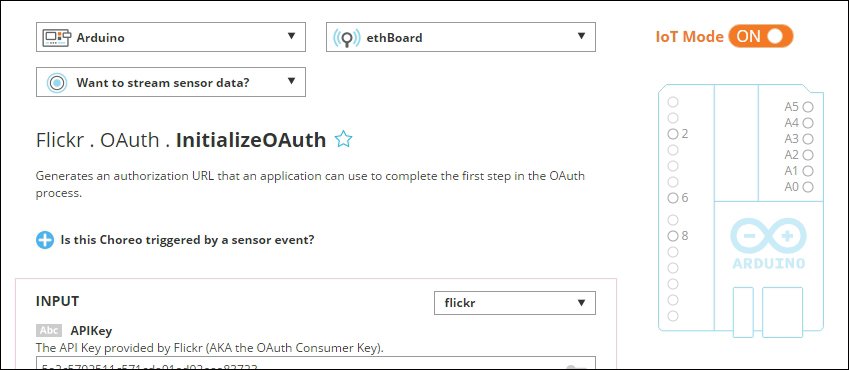

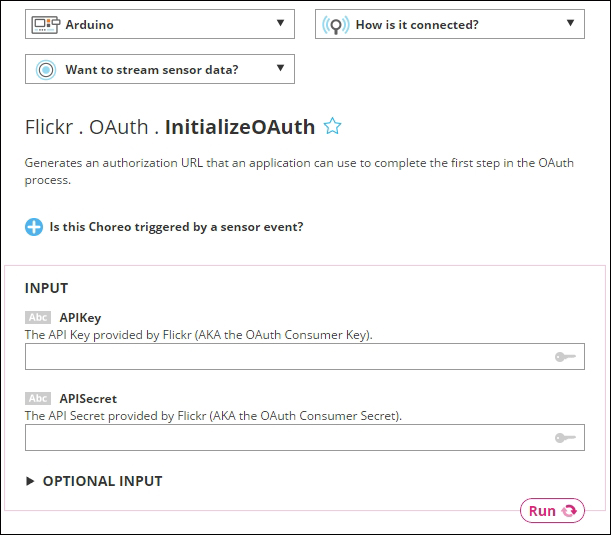

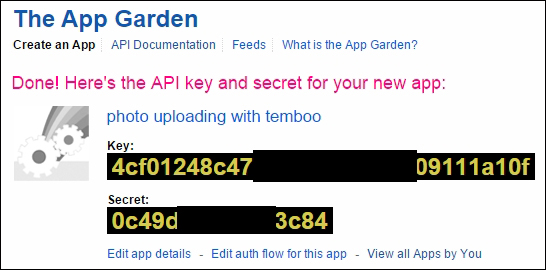

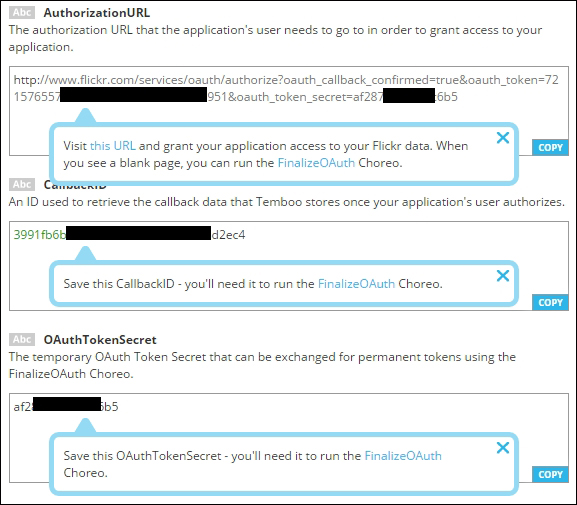

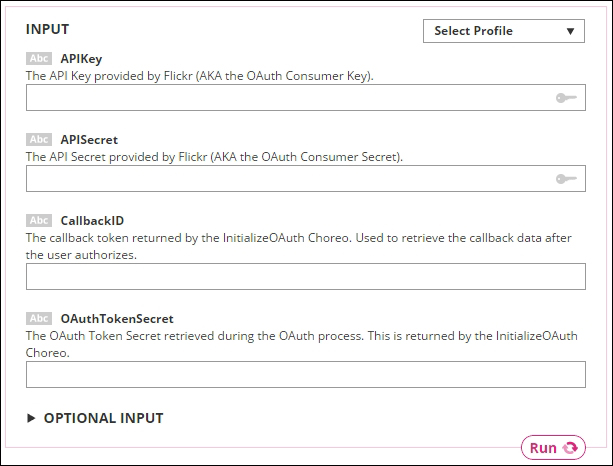

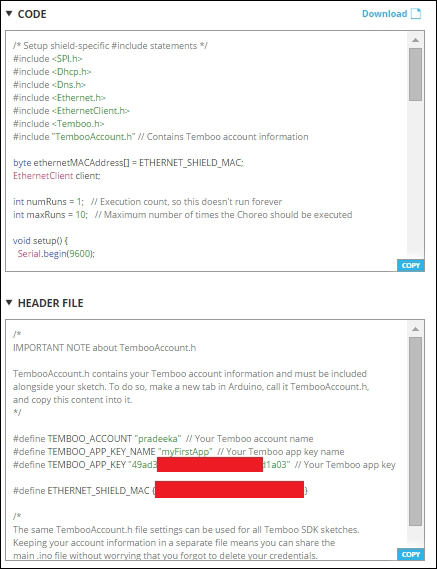

Creating your first Choreo 393

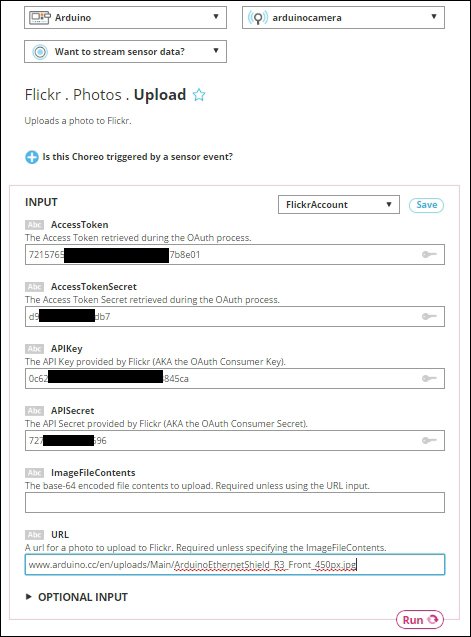

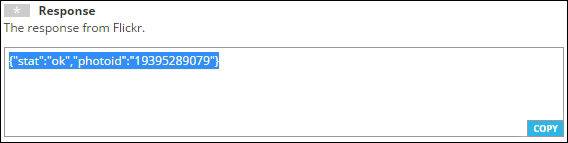

Generating the photo upload sketch 399

Connecting the camera output with Temboo 405

Connecting a solar cell with the Arduino Ethernet board 408

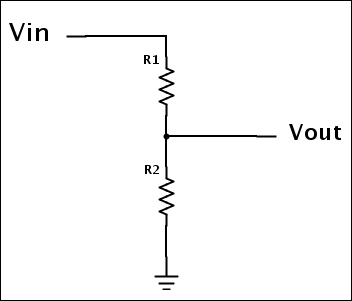

Building a voltage divider 409

Building the circuit with Arduino 410

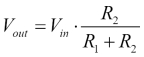

Setting up a NearBus account 413

Examining the device lists 415

Downloading the NearBus agent 415

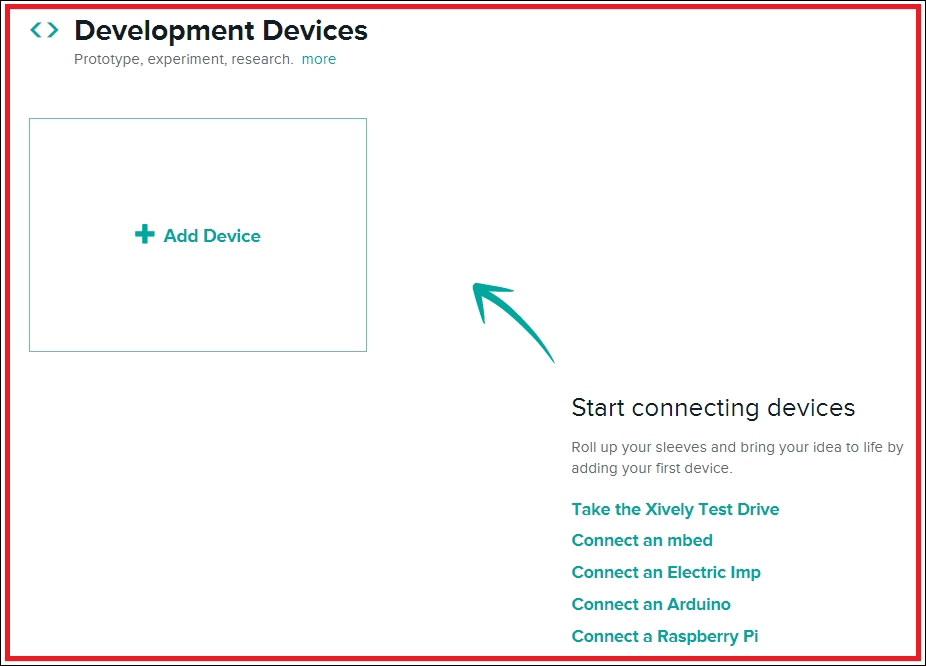

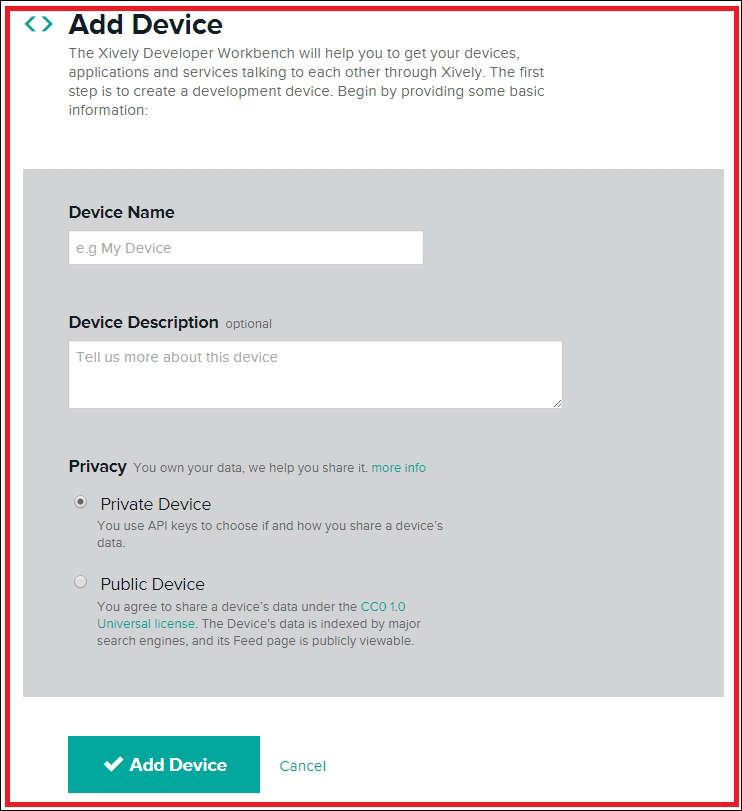

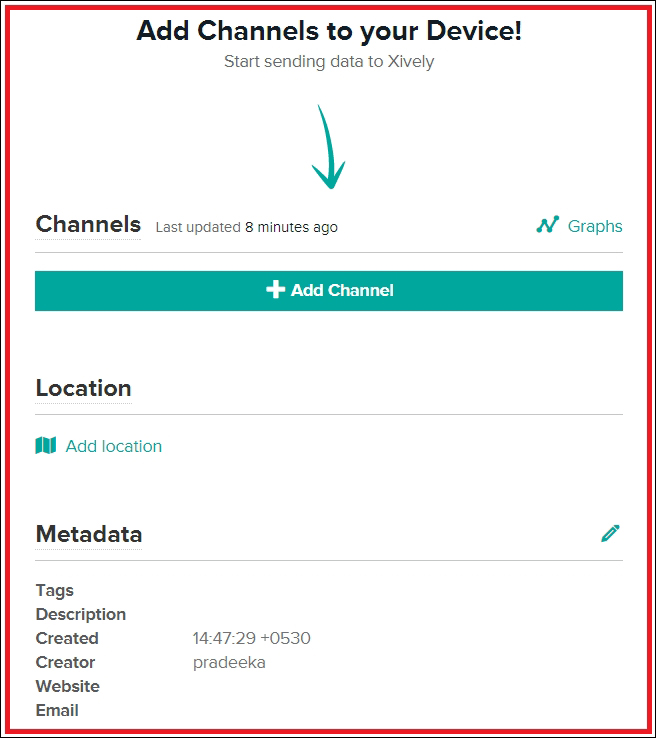

Creating and configuring a Xively account 420

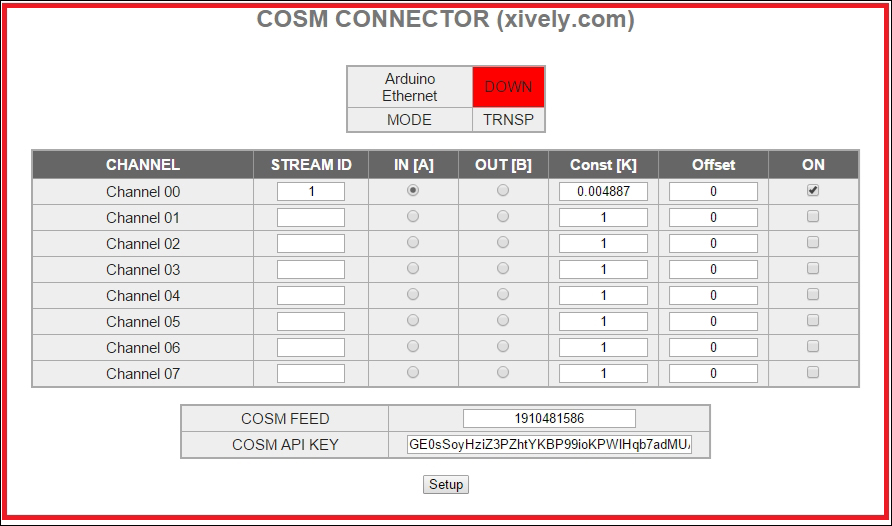

Configuring the NearBus connected device for Xively 428

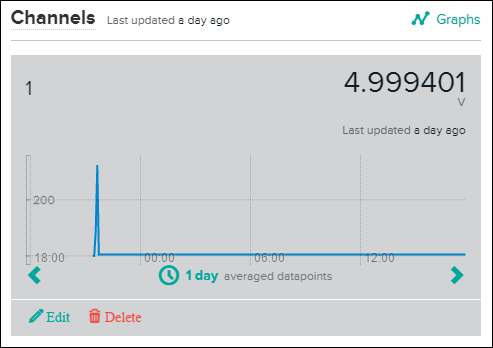

Developing a web page to display the real-time voltage values 431

Displaying data on a web page 432

Hardware and software requirements 436

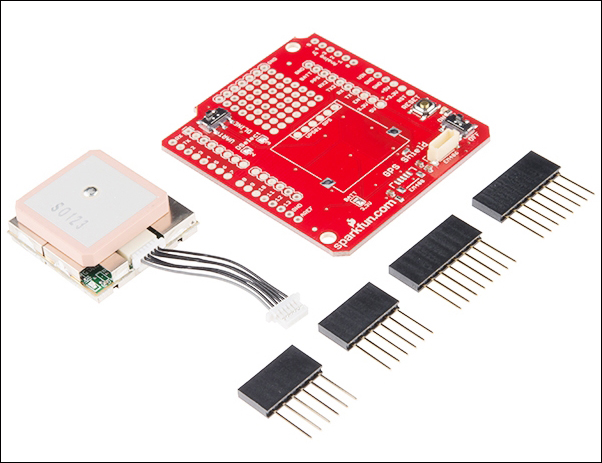

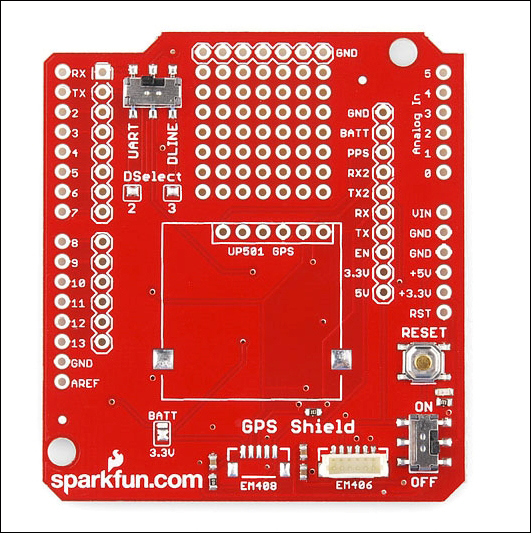

Getting started with the Arduino GPS shield 437

Connecting the Arduino GPS shield with the Arduino Ethernet board 438

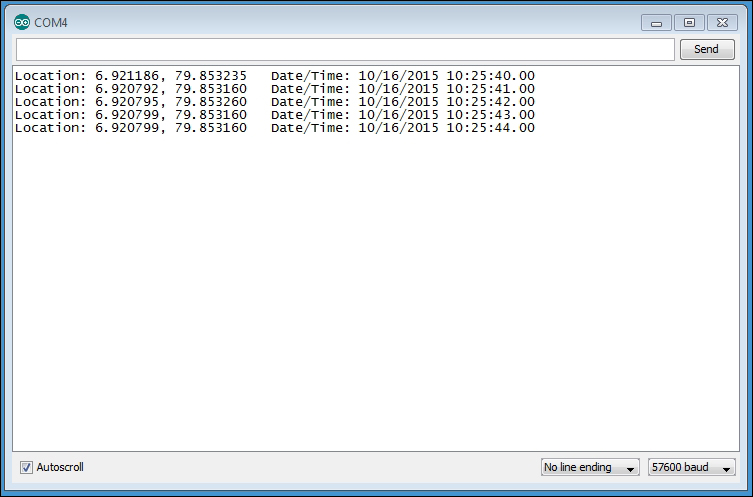

Displaying the current location on Google Maps 440

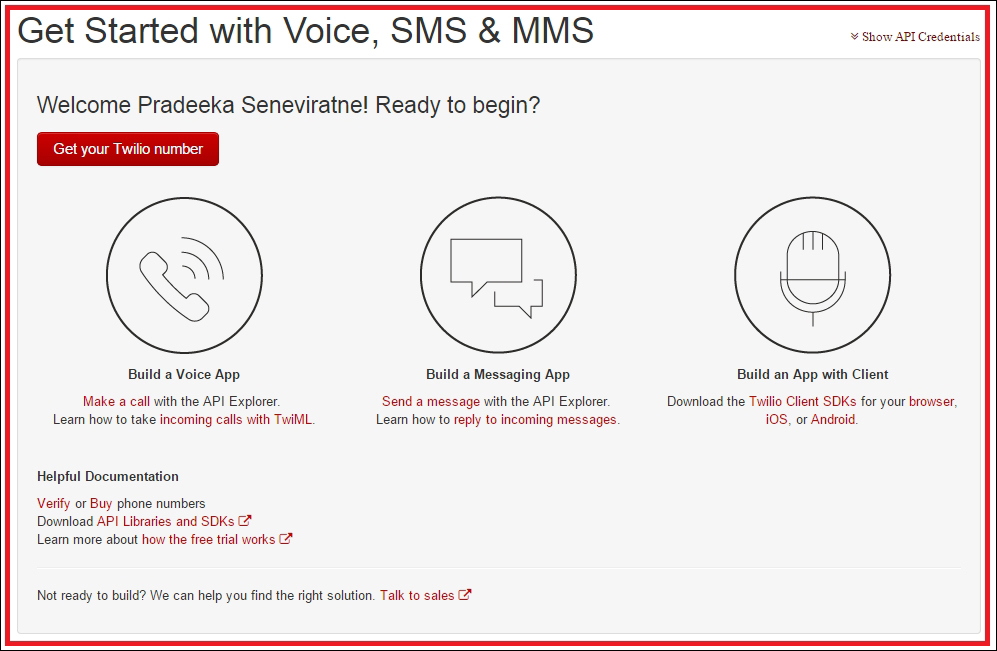

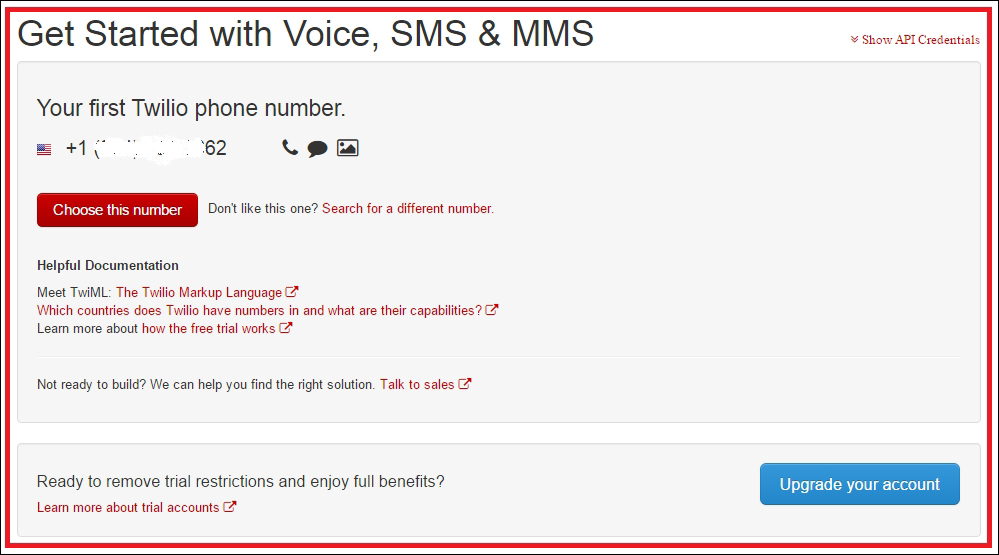

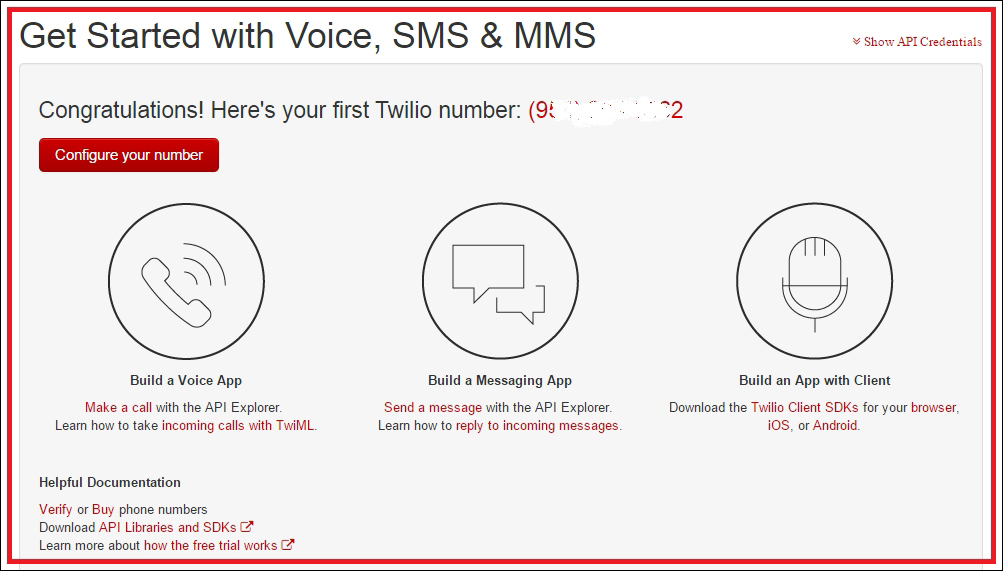

Getting started with Twilio 442

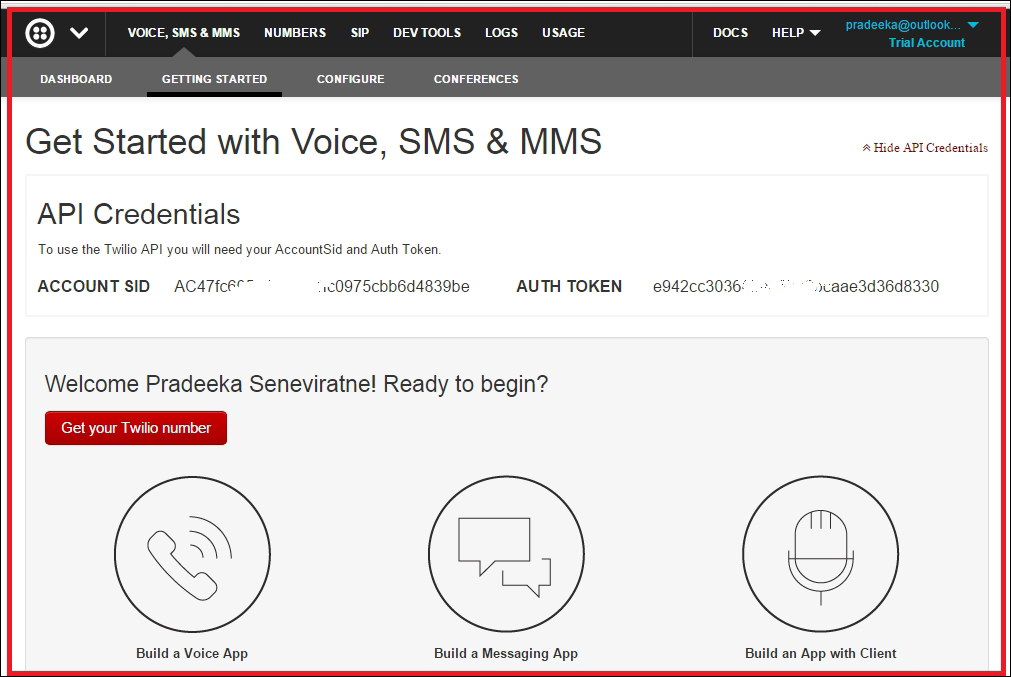

Finding Twilio LIVE API credentials 443

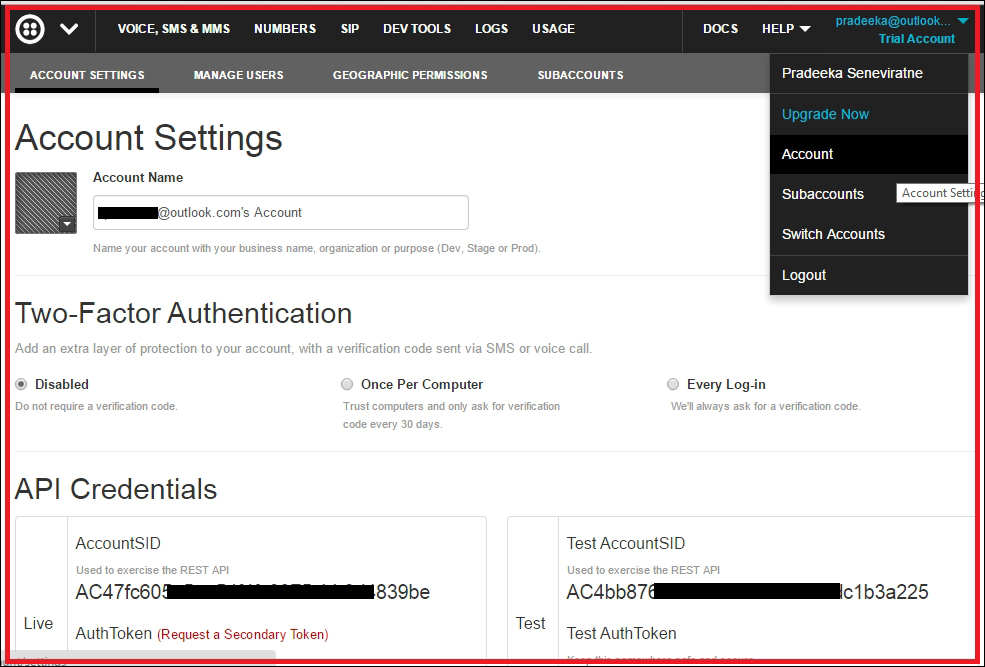

Finding Twilio test API credentials 444

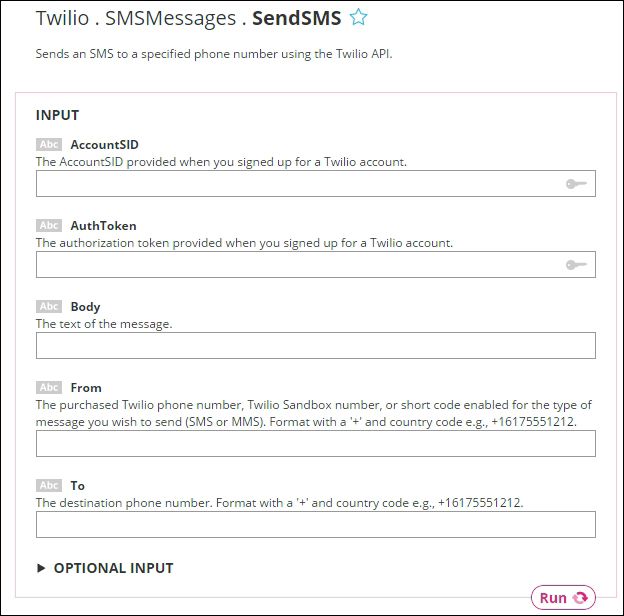

Creating Twilio Choreo with Temboo 448

Sending an SMS with Twilio API 448

Send a GPS location data using Temboo 449

Hardware and software requirements 452

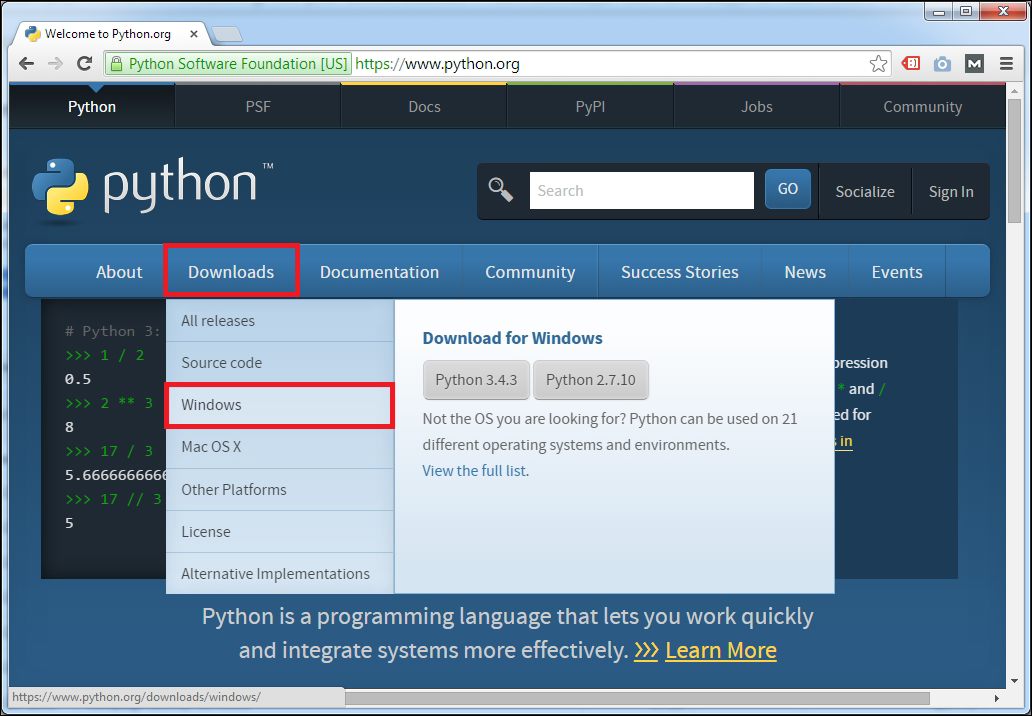

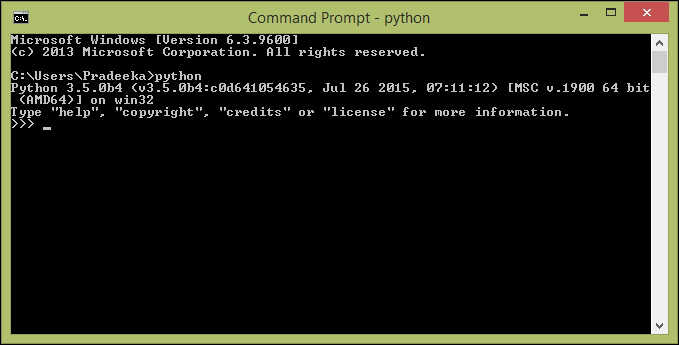

Getting started with Python 453

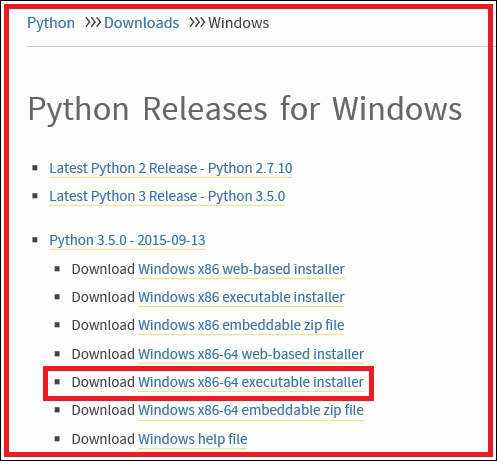

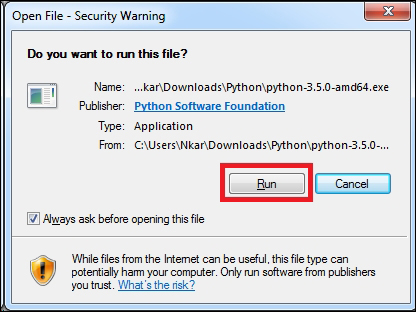

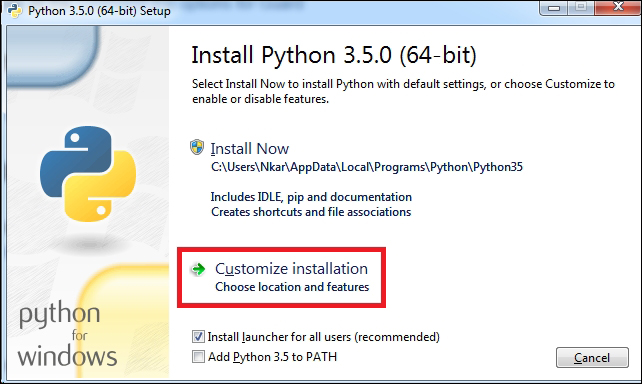

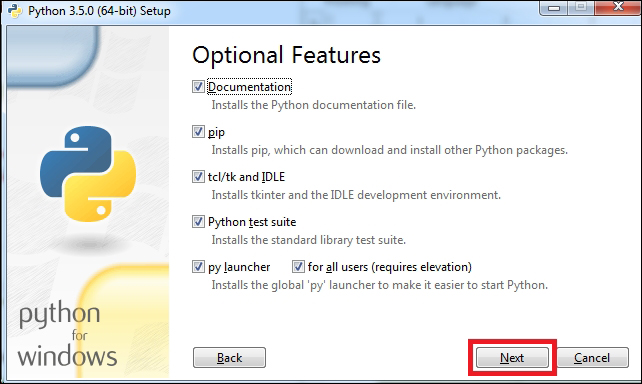

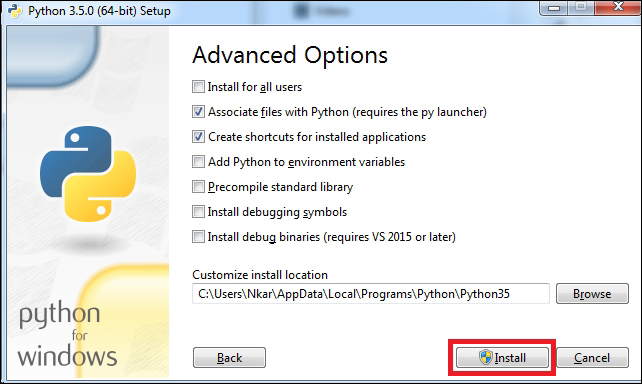

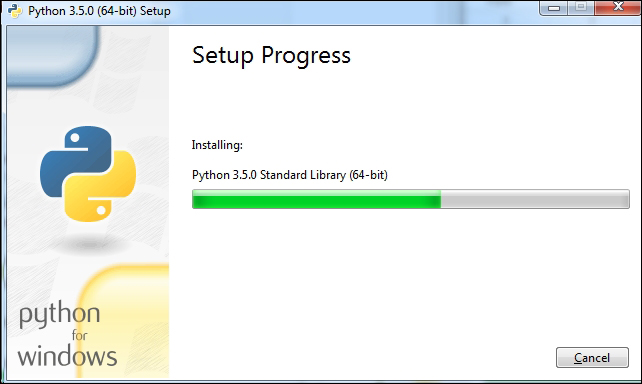

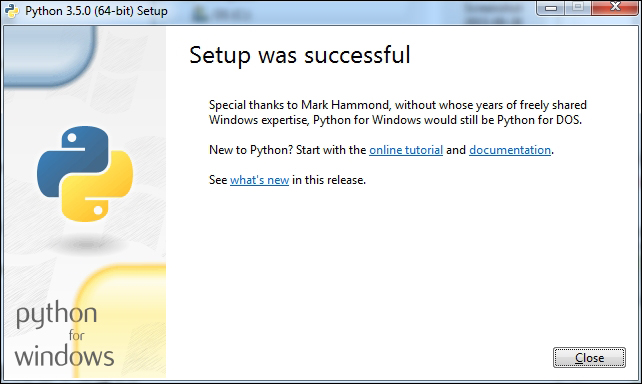

Installing Python on Windows 453

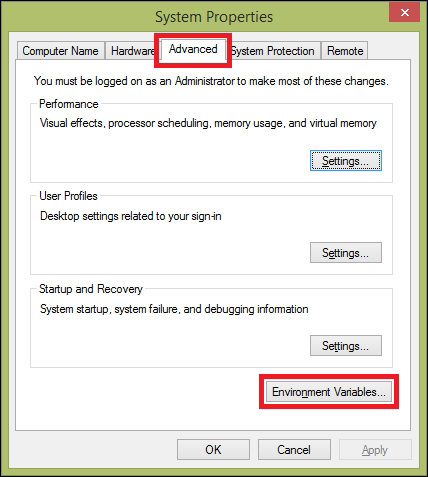

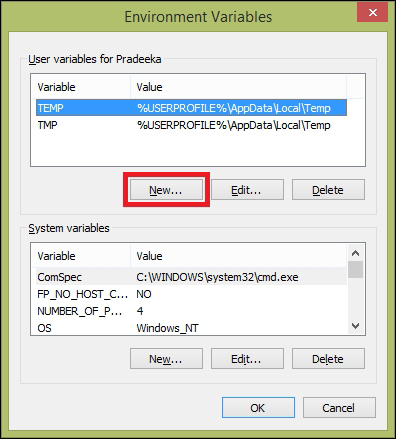

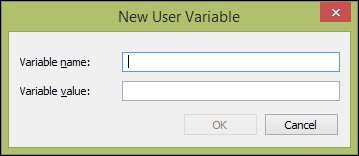

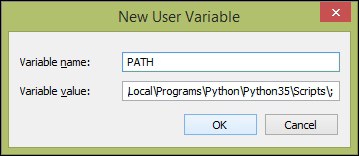

Setting environment variables for Python 459

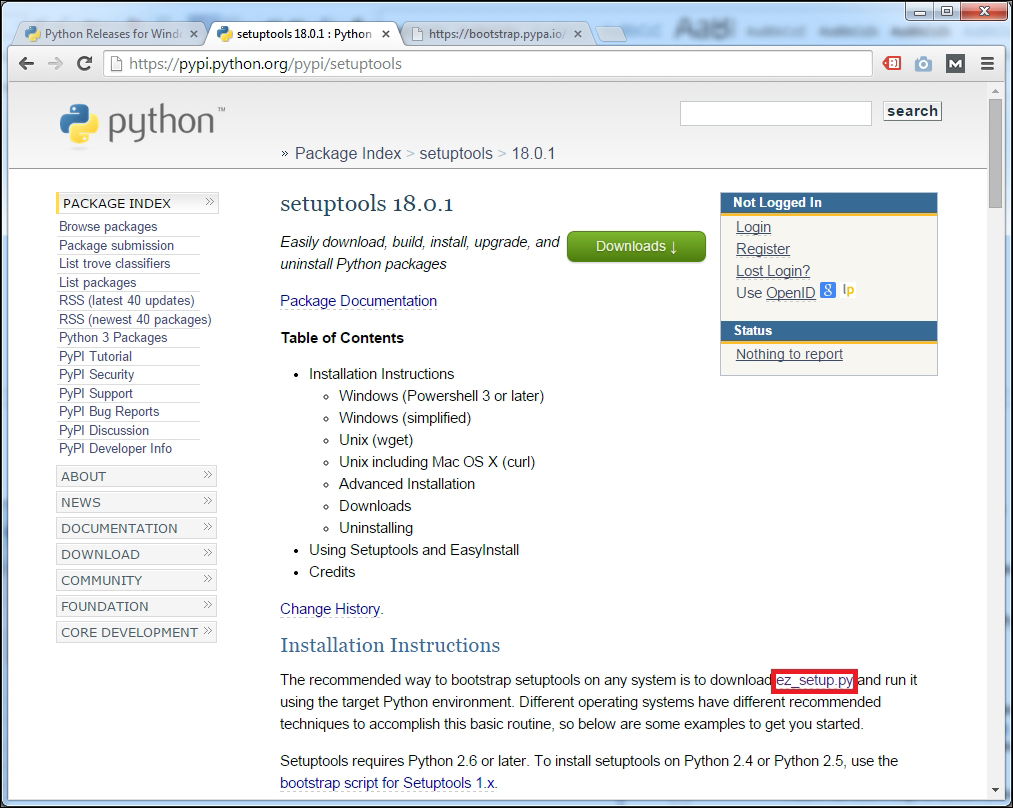

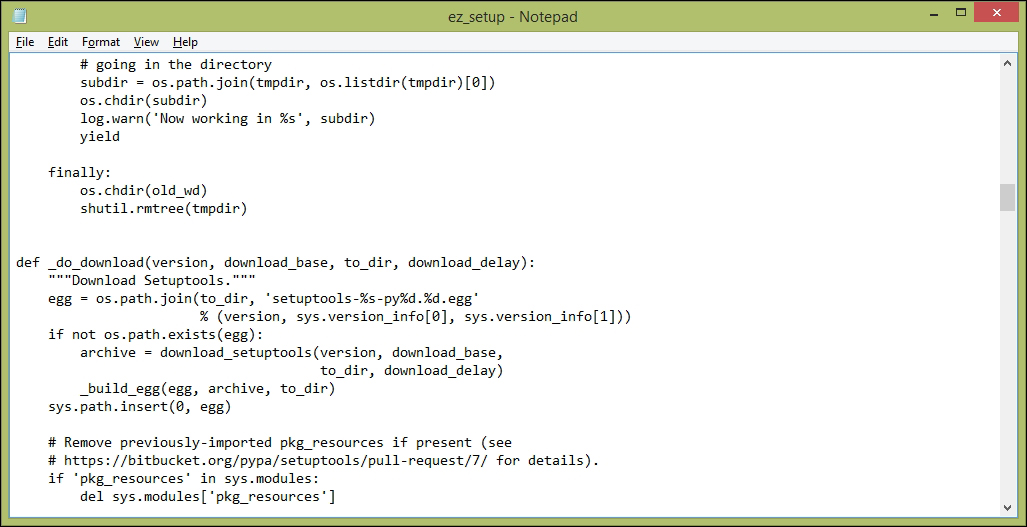

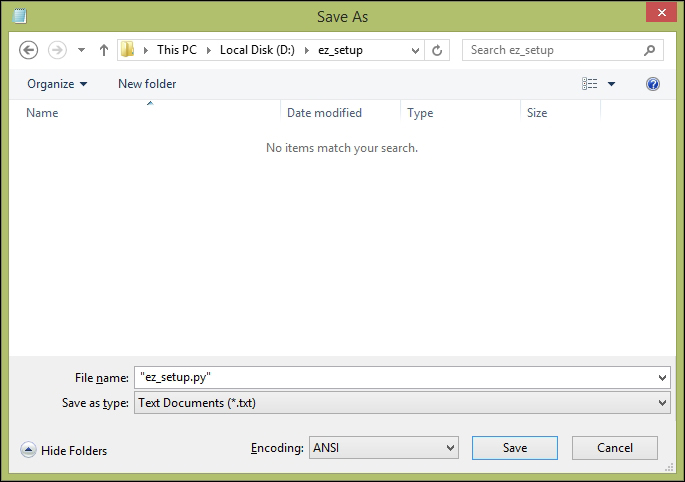

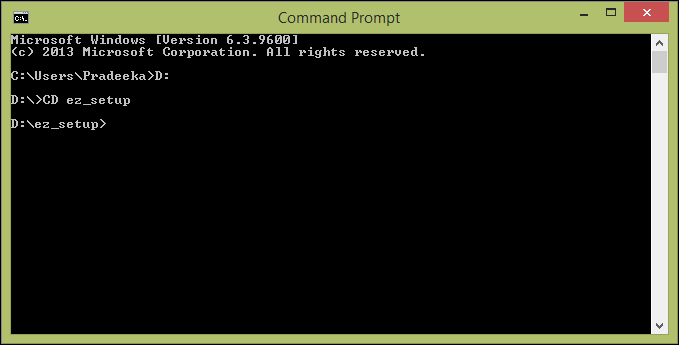

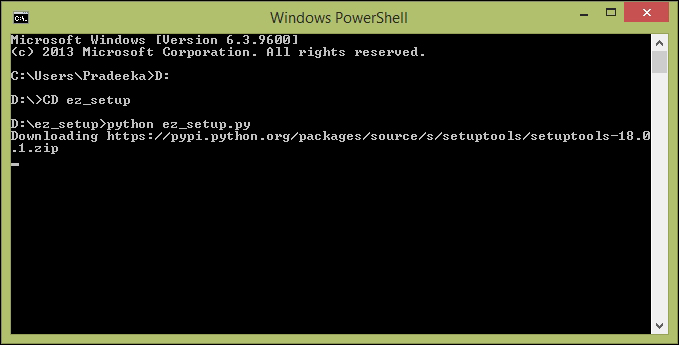

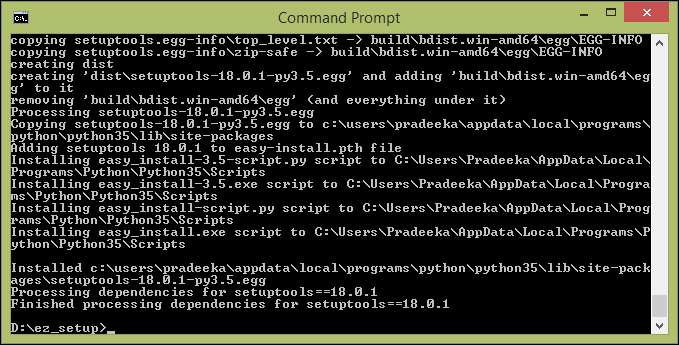

Installing the setuptools utility on Python 462

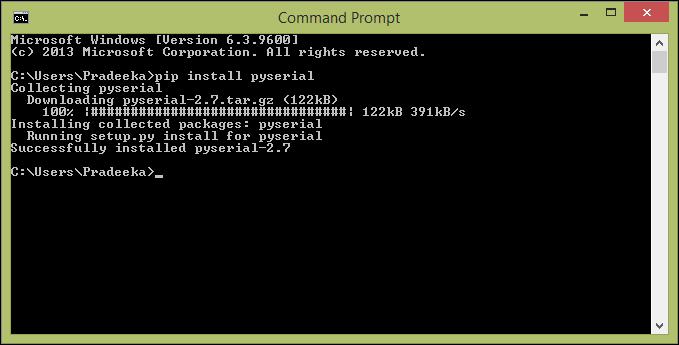

Installing the pip utility on Python 467

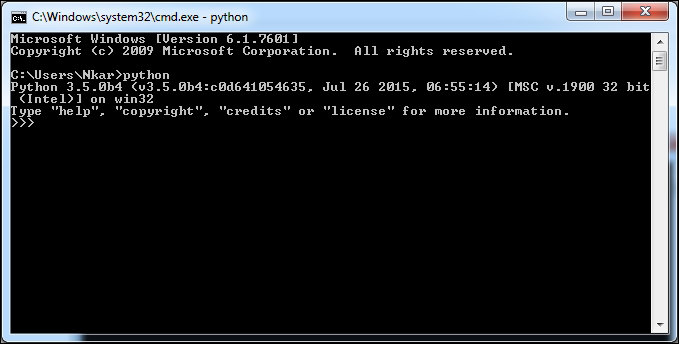

Opening the Python interpreter 468

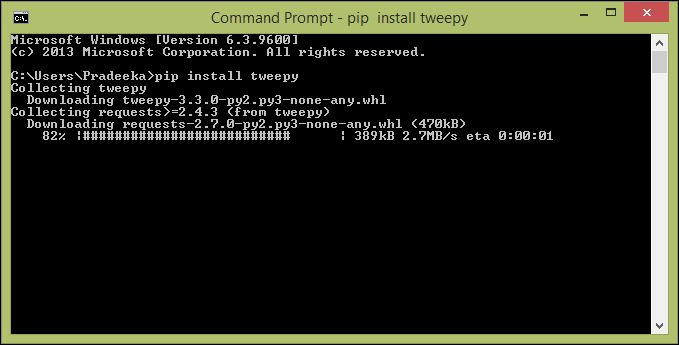

Installing the Tweepy library 469

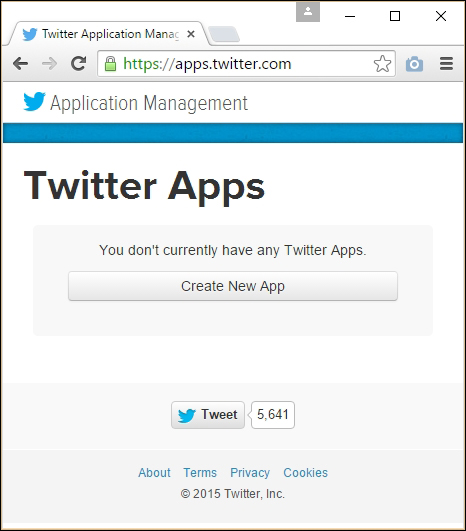

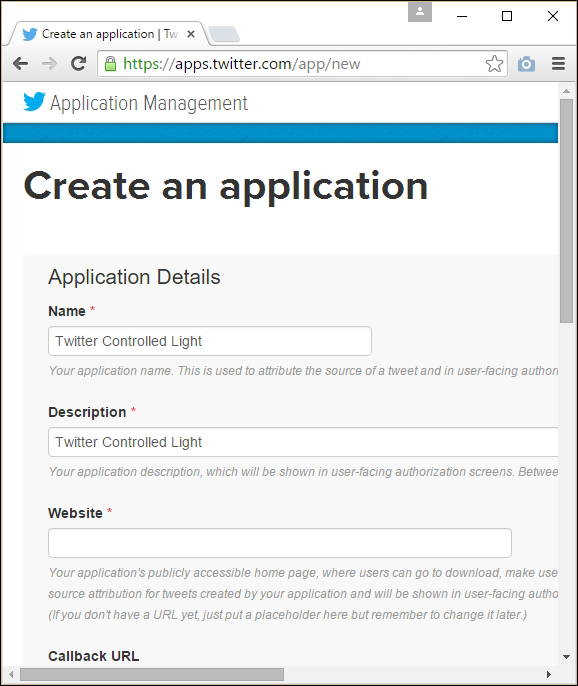

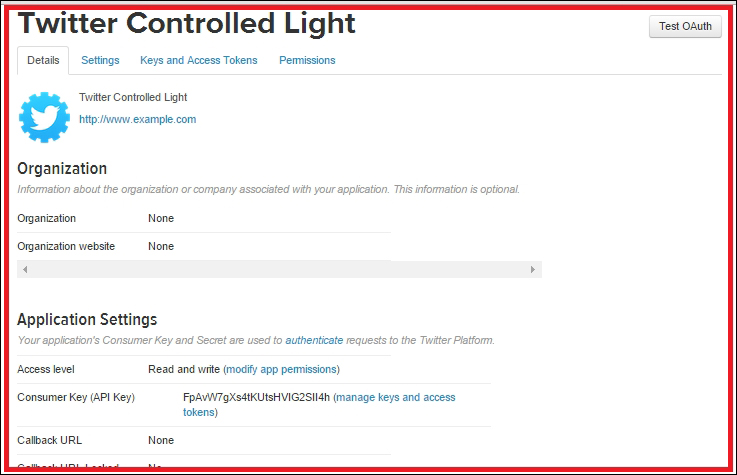

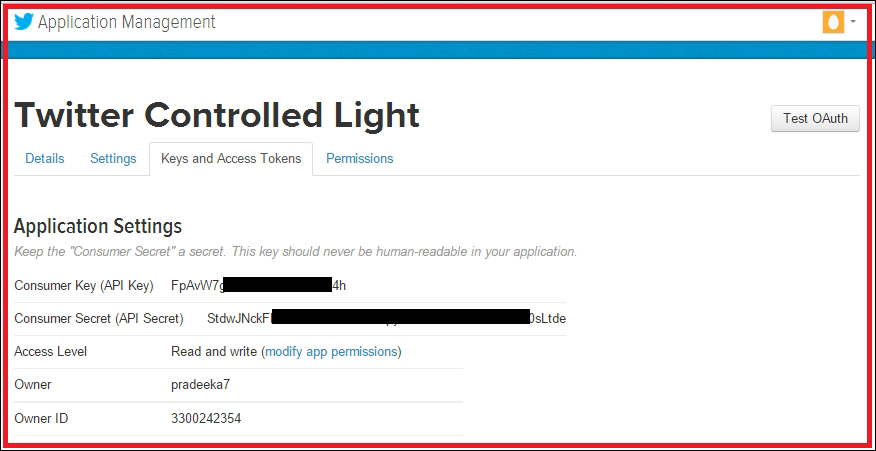

Creating a Twitter app and obtaining API keys 472

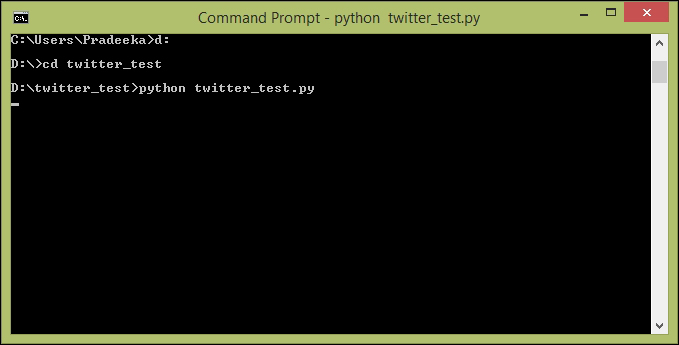

Writing a Python script to read Twitter tweets 475

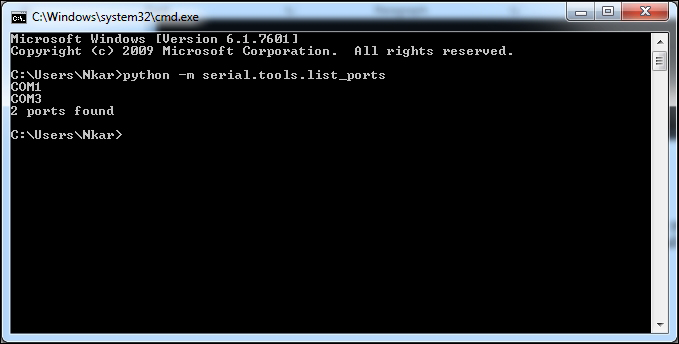

Reading the serial data using Arduino 479

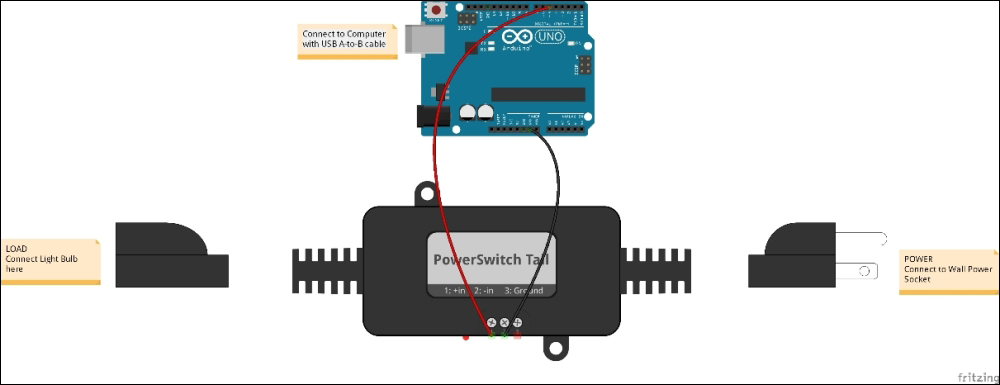

Connecting the PowerSwitch Tail with Arduino 479

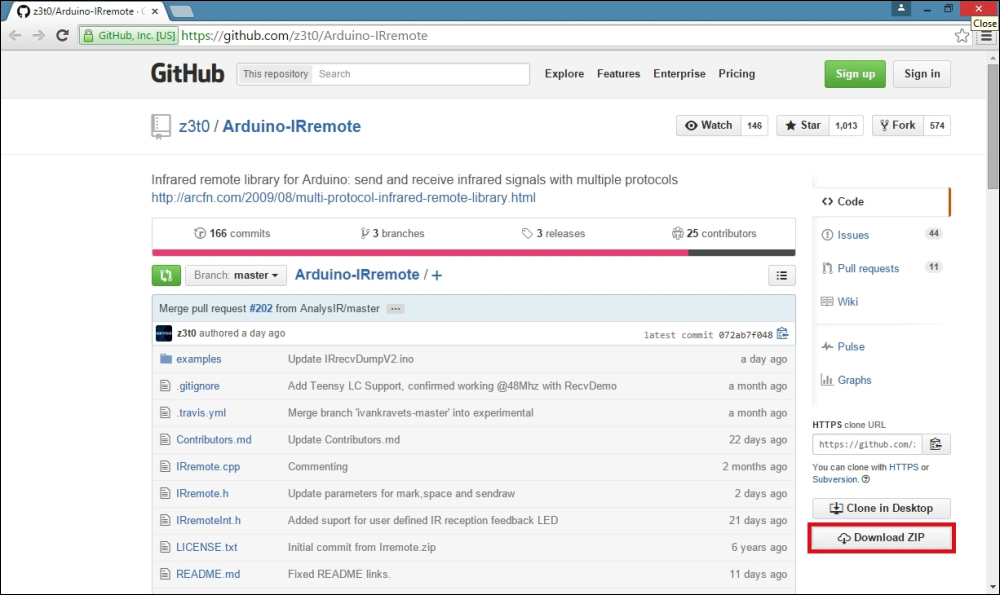

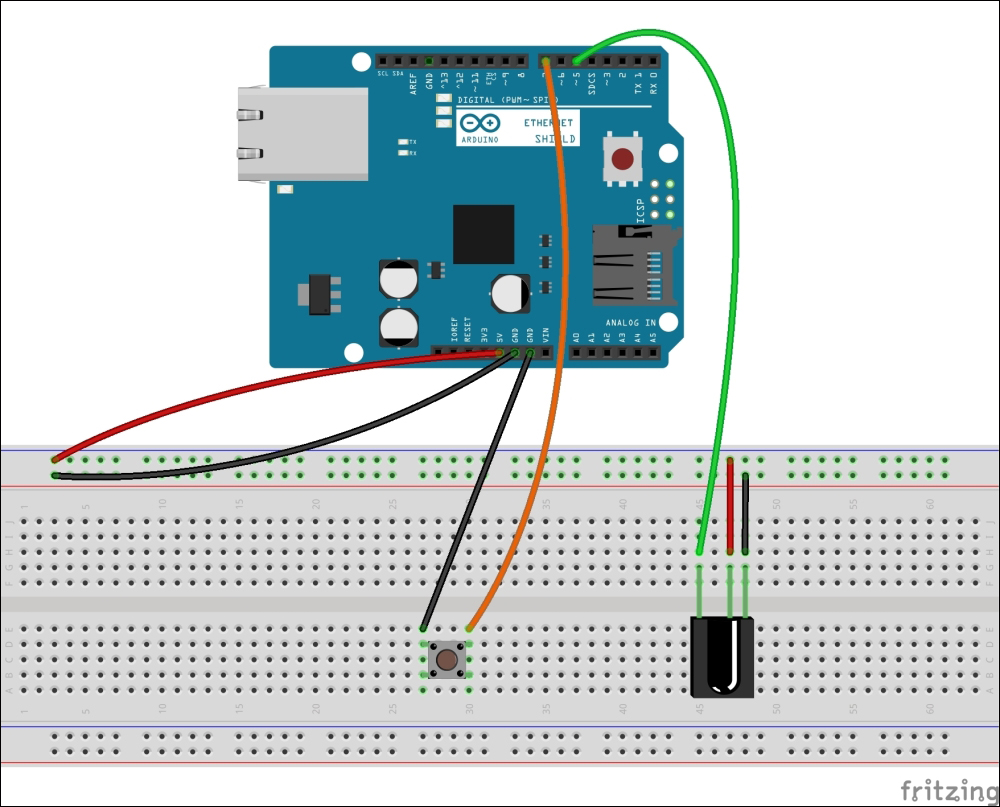

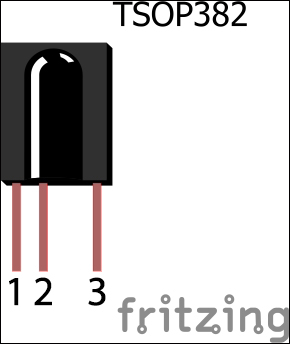

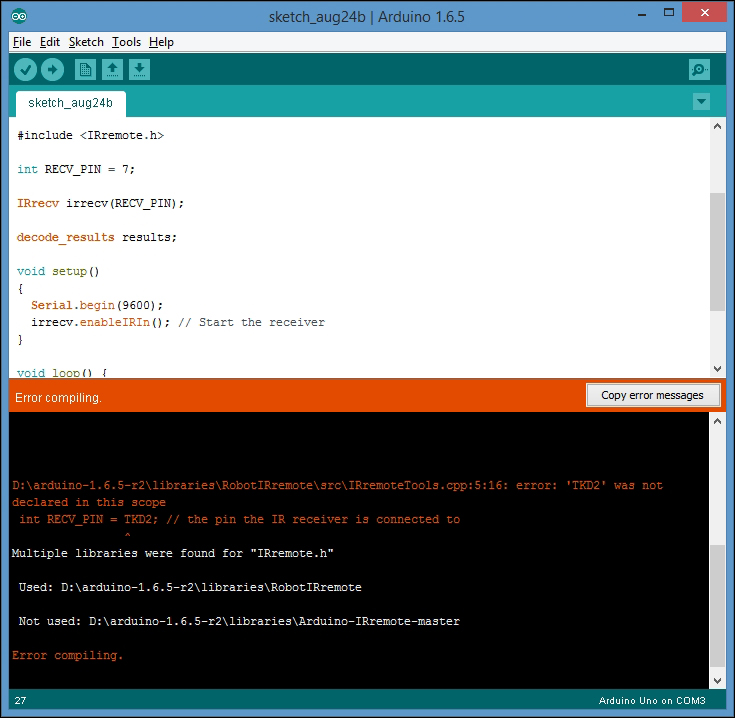

Building an Arduino infrared recorder and remote 482

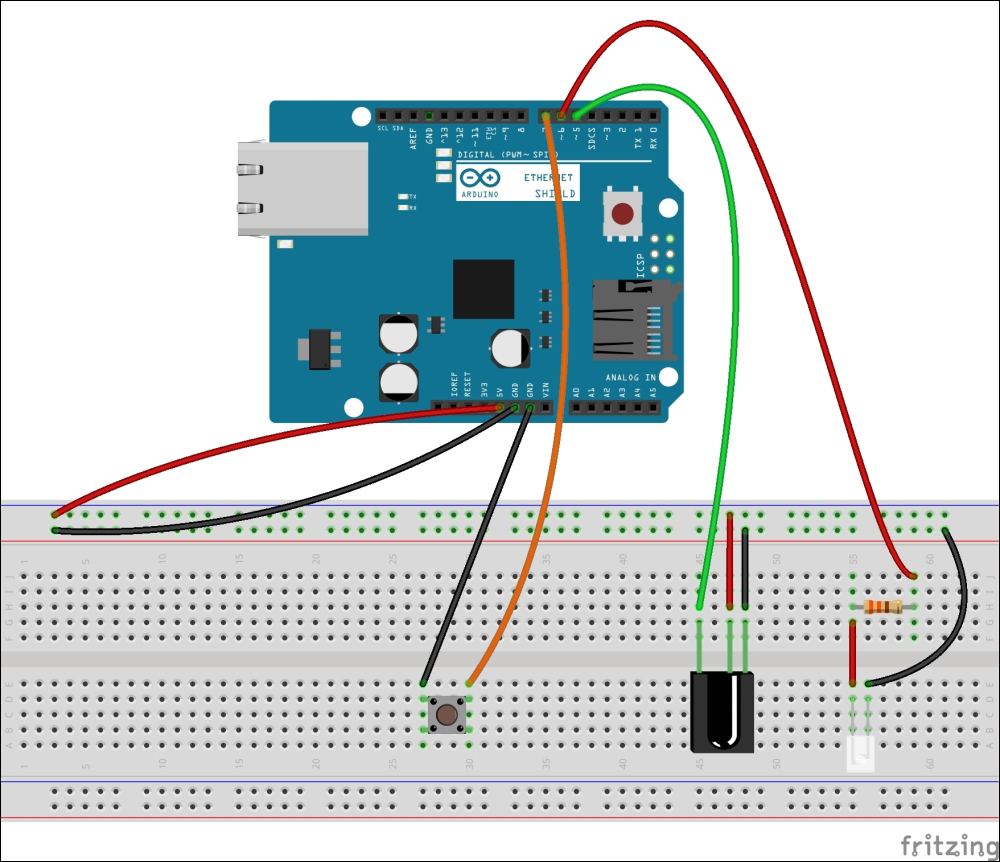

Building the IR receiver module 485

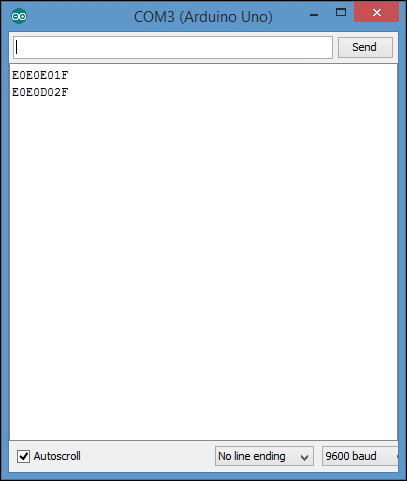

Capturing IR commands in hexadecimal 487

Capturing IR commands in the raw format 490

Building the IR sender module 493

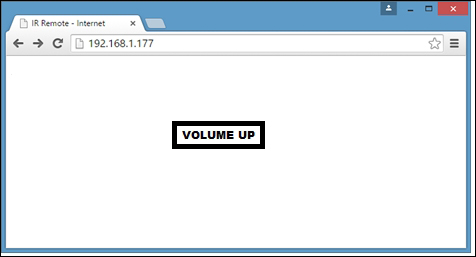

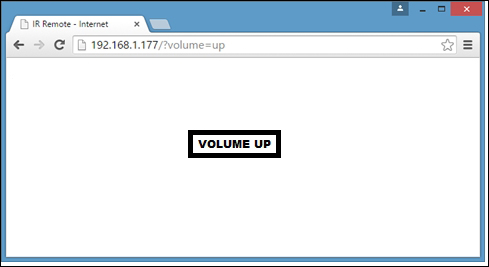

Controlling through the LAN 497

Adding an IR socket to non-IR enabled devices 500

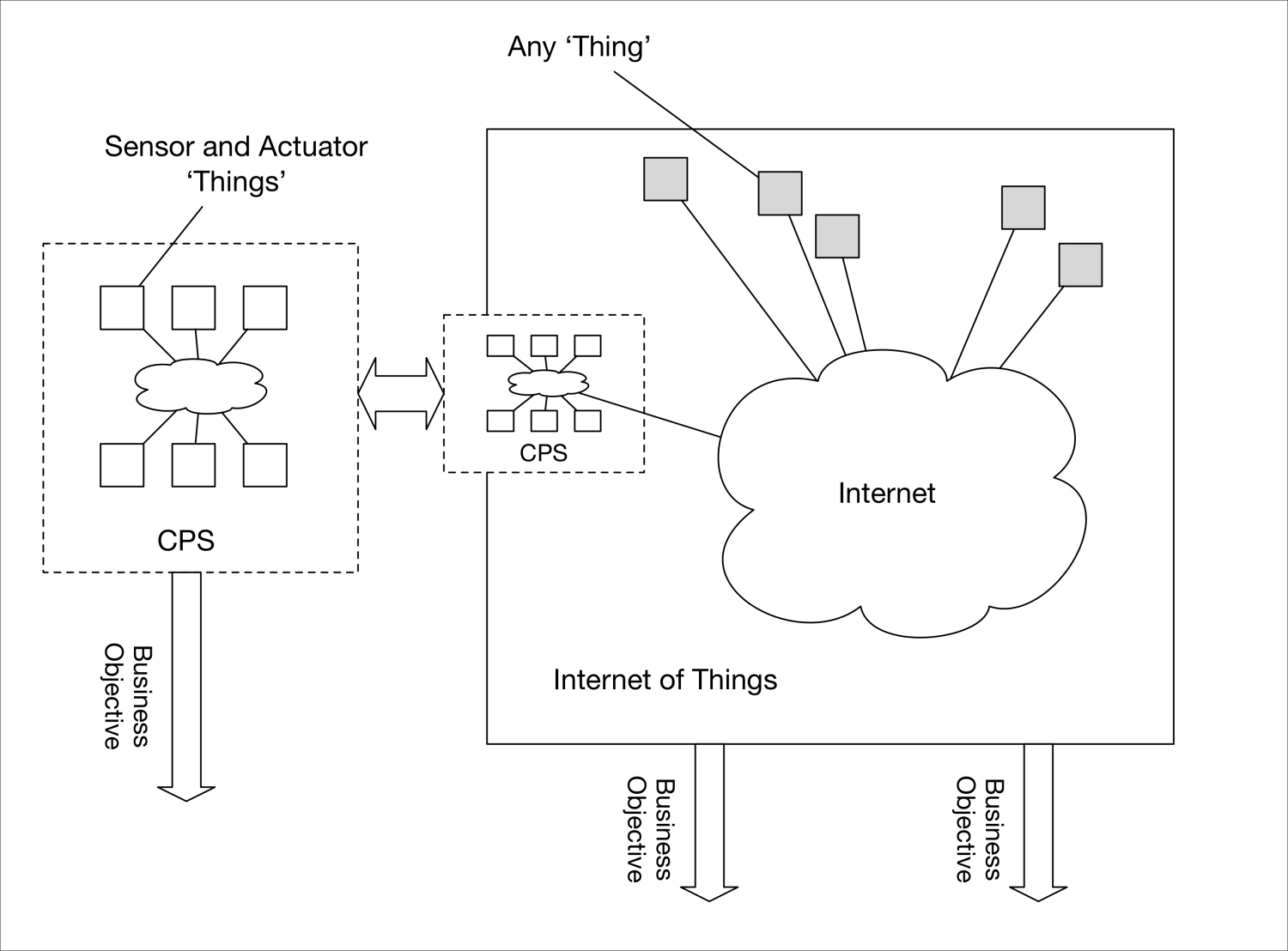

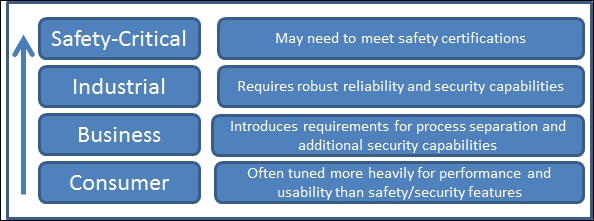

Cybersecurity versus IoT security and cyber-physical systems 510

Why cross-industry collaboration is vital 514

Energy industry and smart grid 518

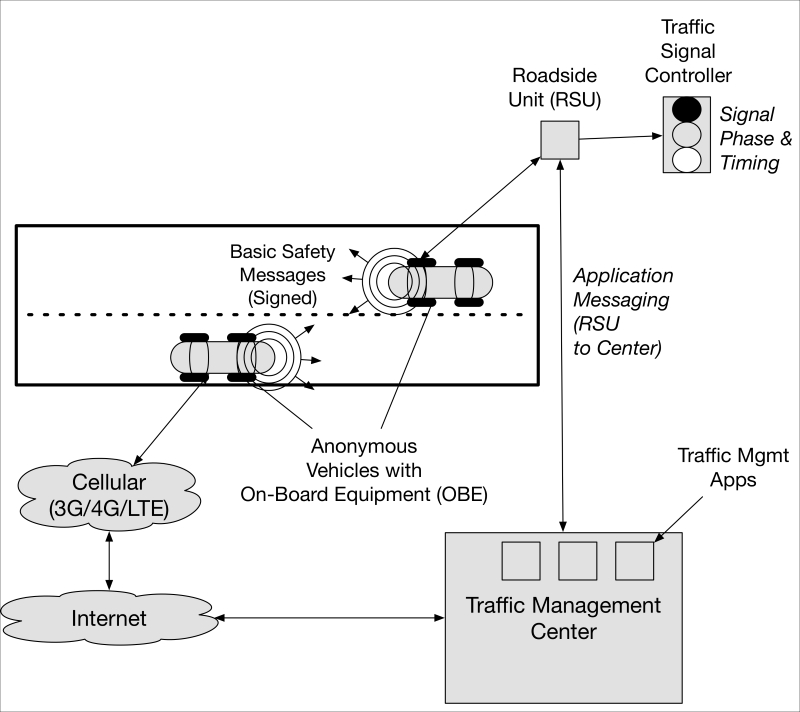

Connected vehicles and transportation 518

Implantables and medical devices 519

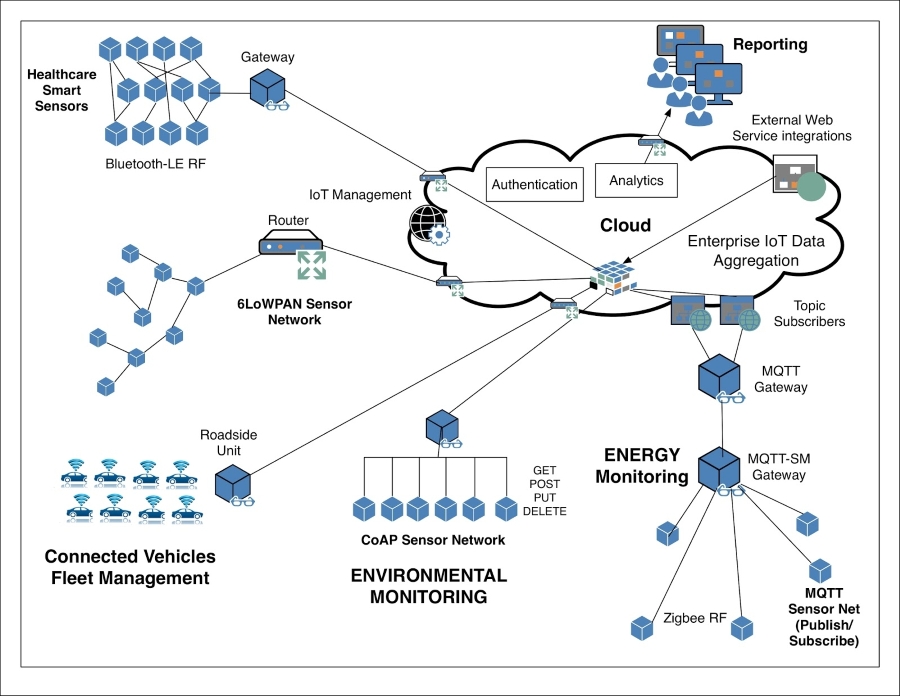

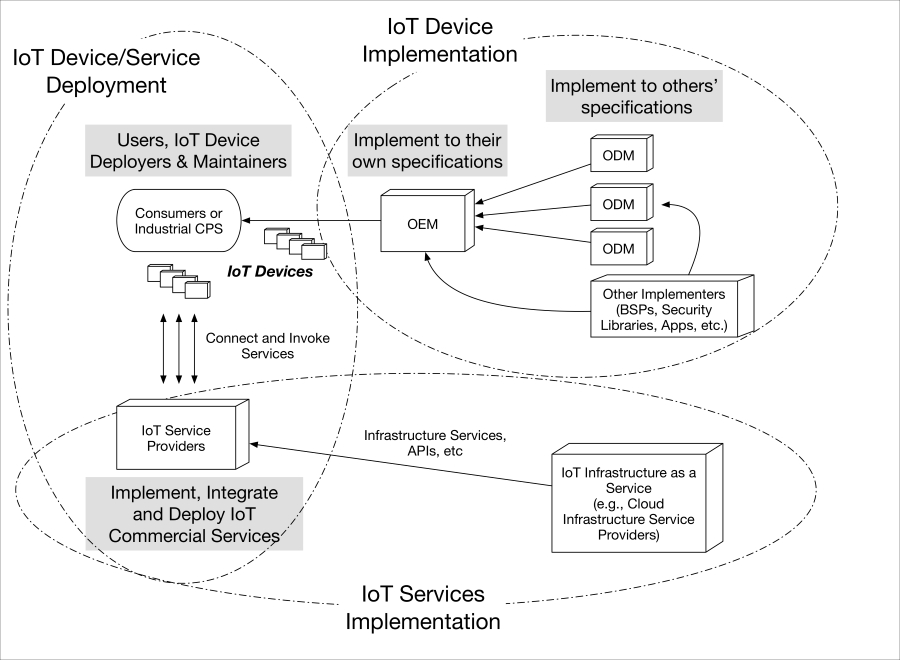

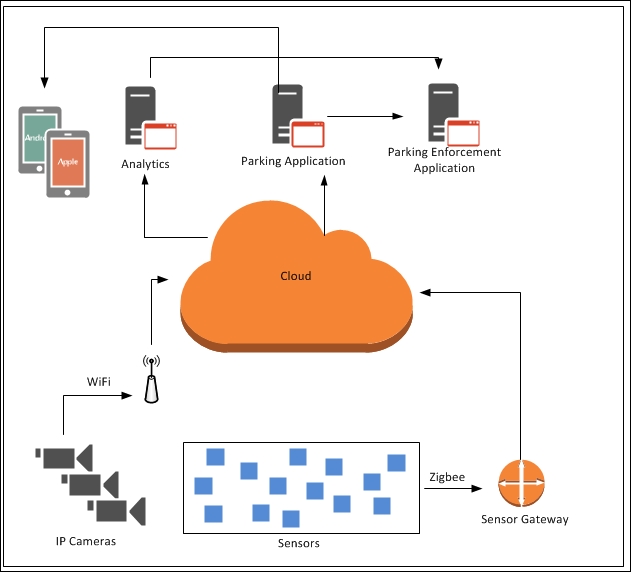

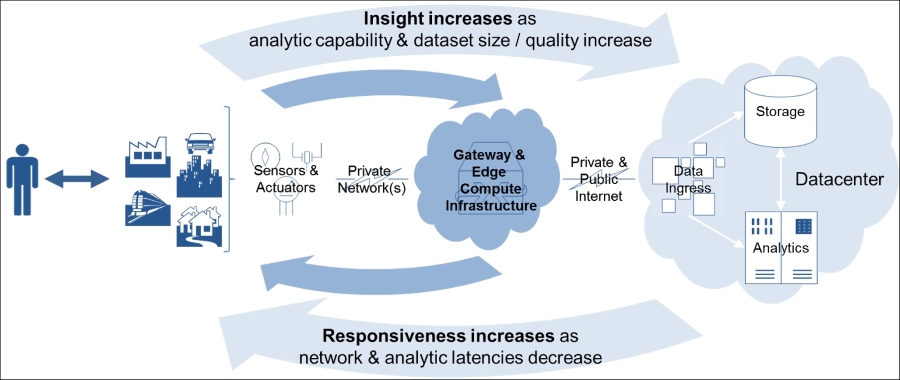

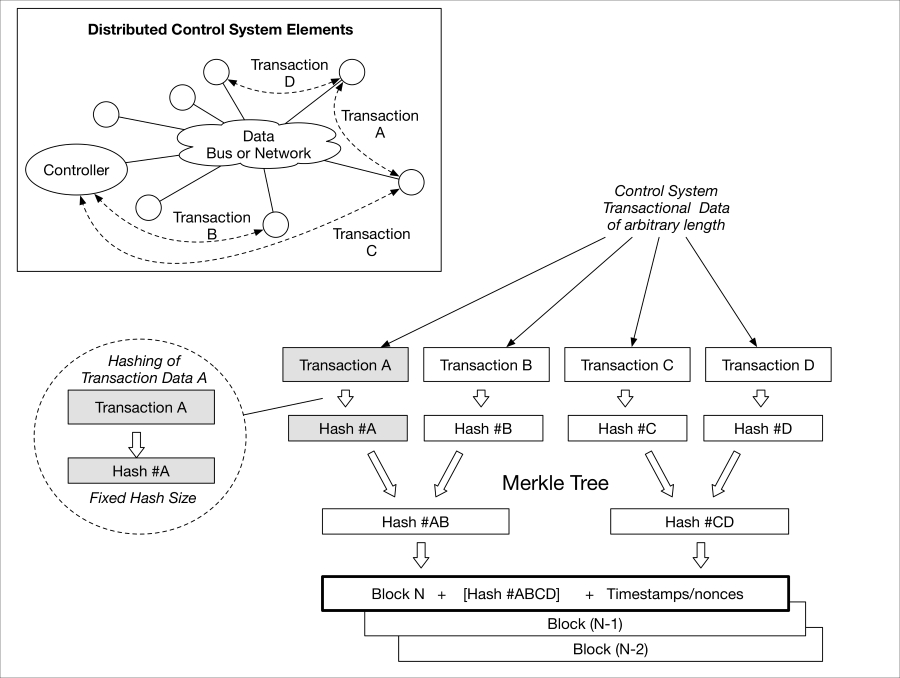

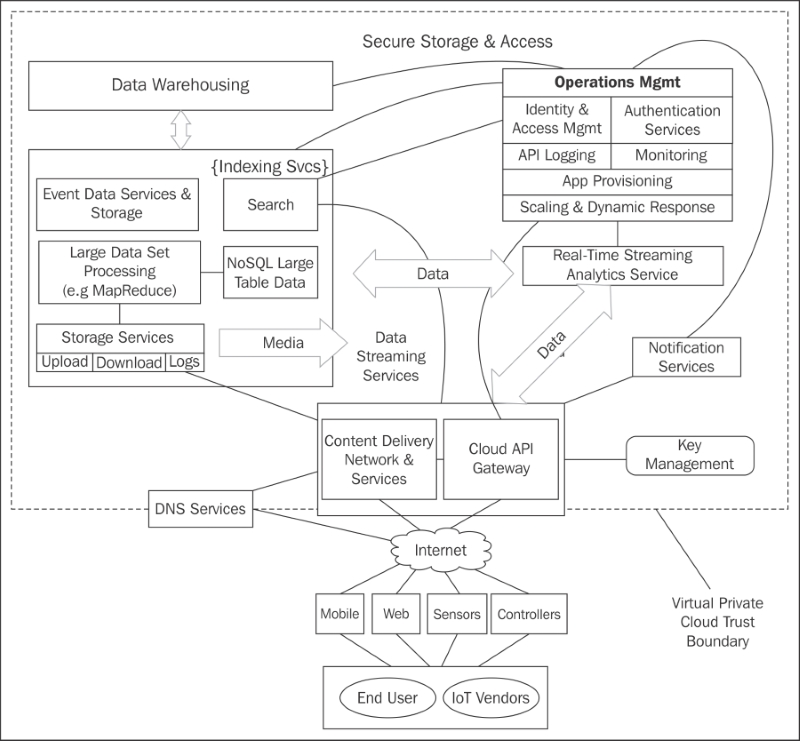

IoT service implementation 527

IoT device and service deployment 527

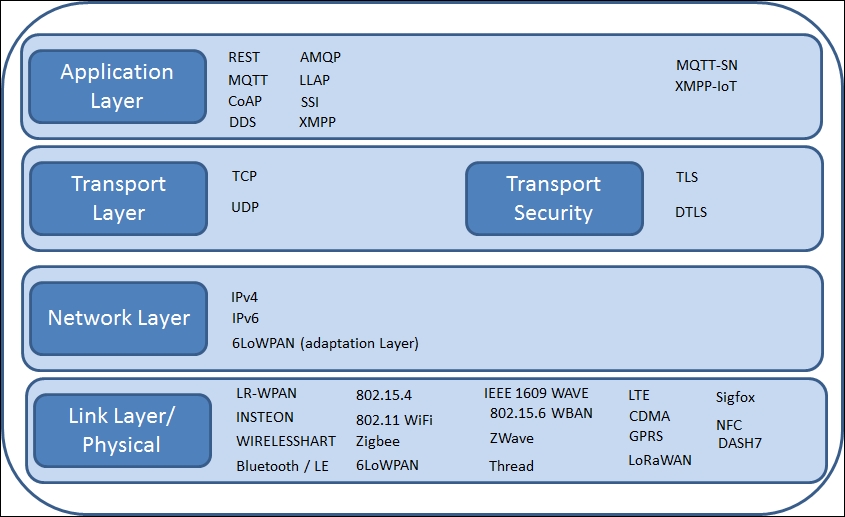

Data link and physical protocols 537

IoT data collection, storage, and analytics 538

IoT integration platforms and solutions 539

The IoT of the future and the need to secure 541

The future – cognitive systems and the IoT 541

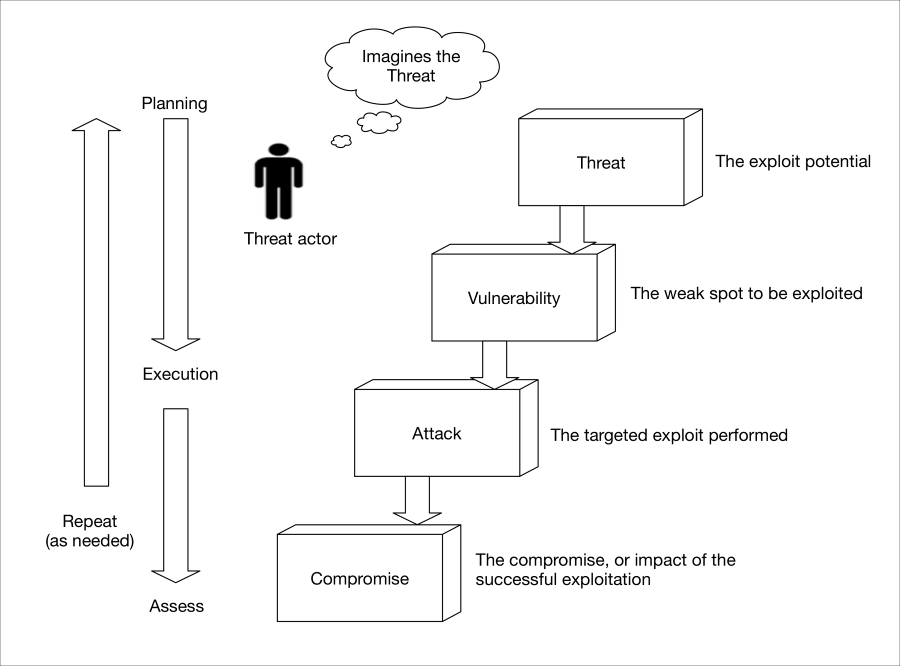

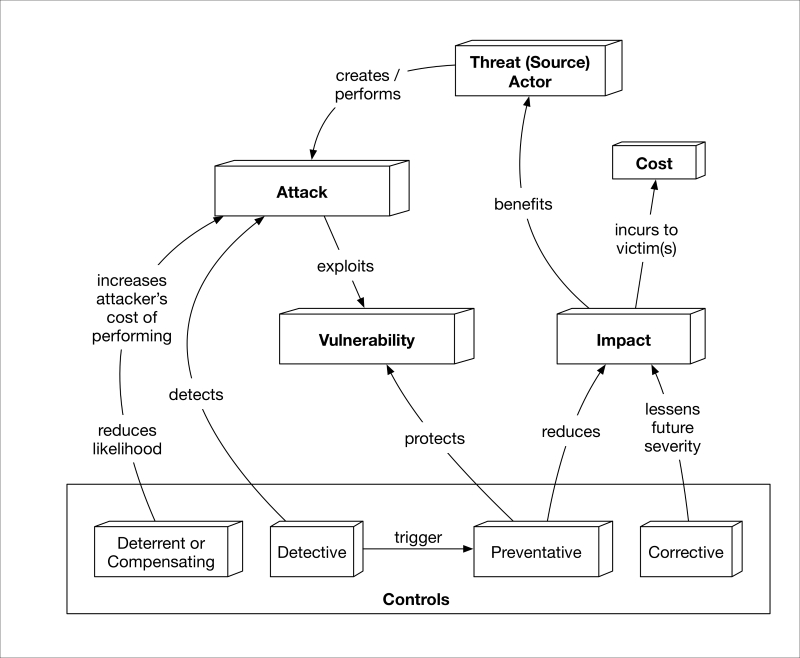

Primer on threats, vulnerability, and risks (TVR) 544

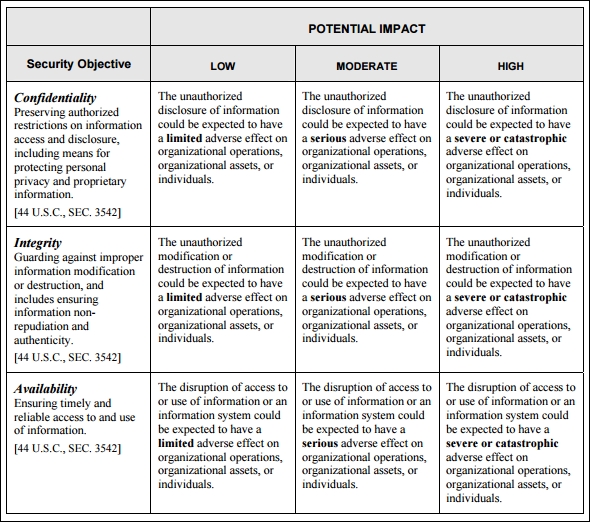

The classic pillars of information assurance 544

Primer on attacks and countermeasures 550

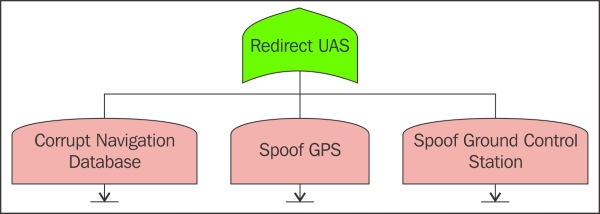

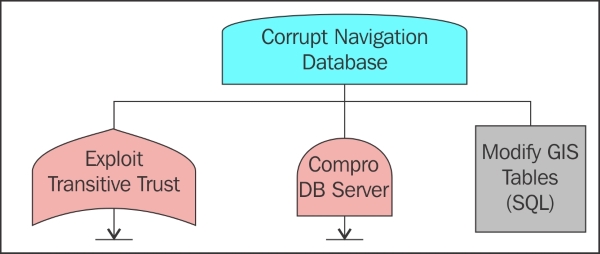

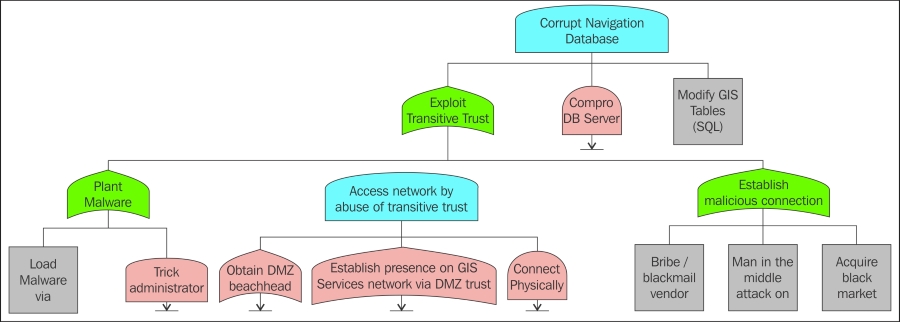

Fault (failure) trees and CPS 557

Fault tree and attack tree differences 558

Merging fault and attack tree analysis 558

Example anatomy of a deadly cyber-physical attack 559

Wireless reconnaissance and mapping 564

Application security attacks 565

Lessons learned and systematic approaches 567

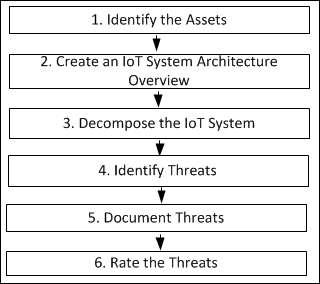

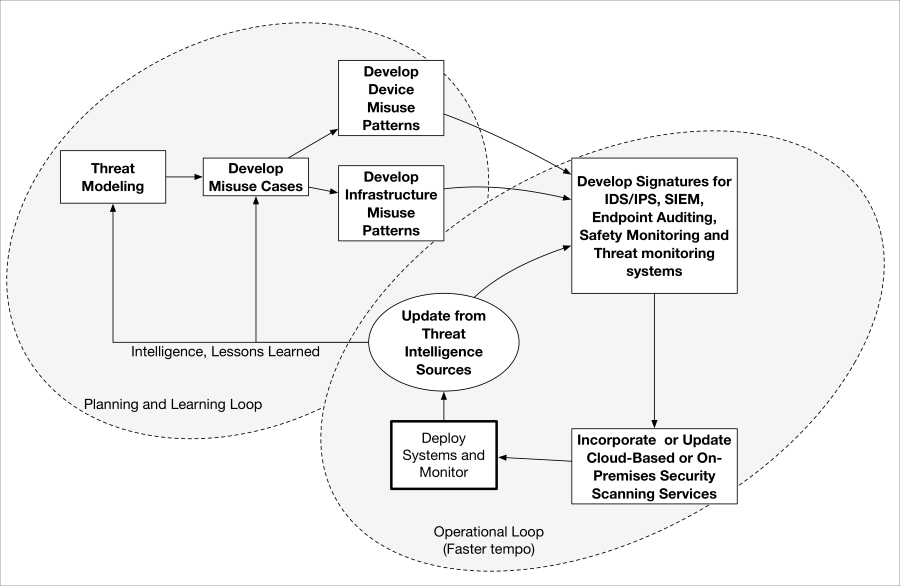

Threat modeling an IoT system 568

Step 1 – identify the assets 569

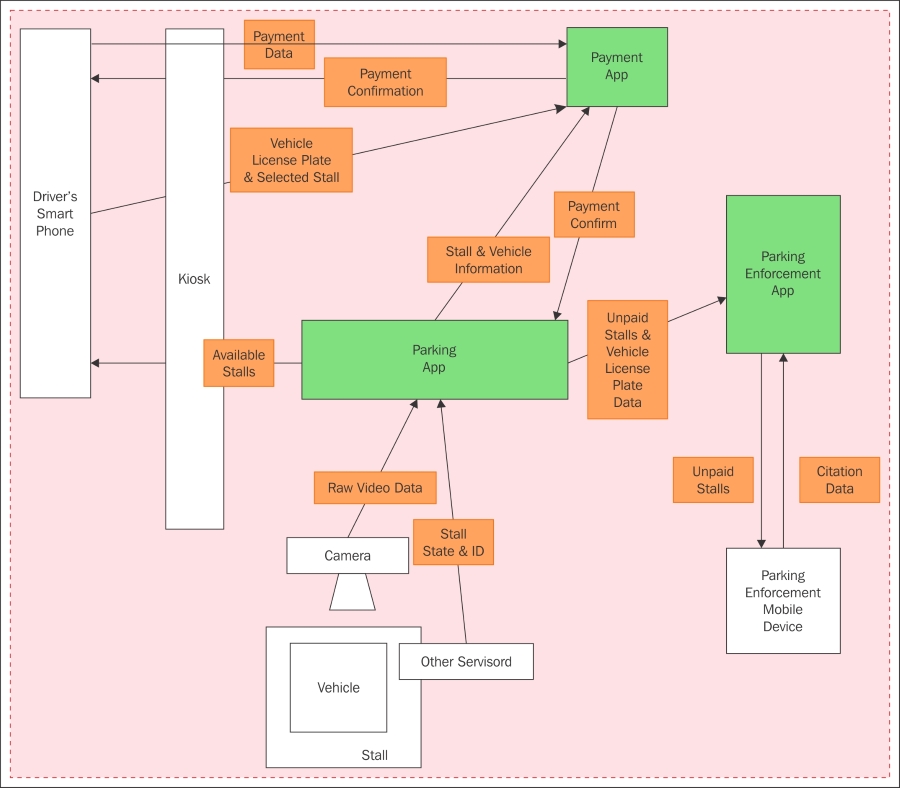

Step 2 – create a system/architecture overview 571

Step 3 – decompose the IoT system 576

Step 5 – document the threats 584

Building security in to design and development 589

Security in agile developments 589

Focusing on the IoT device in operation 592

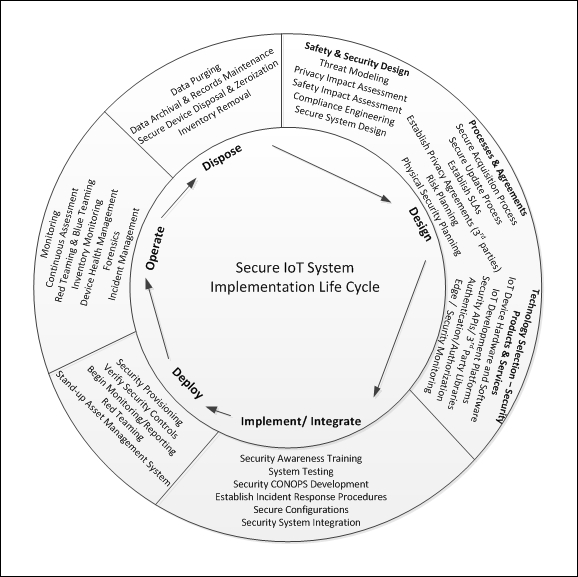

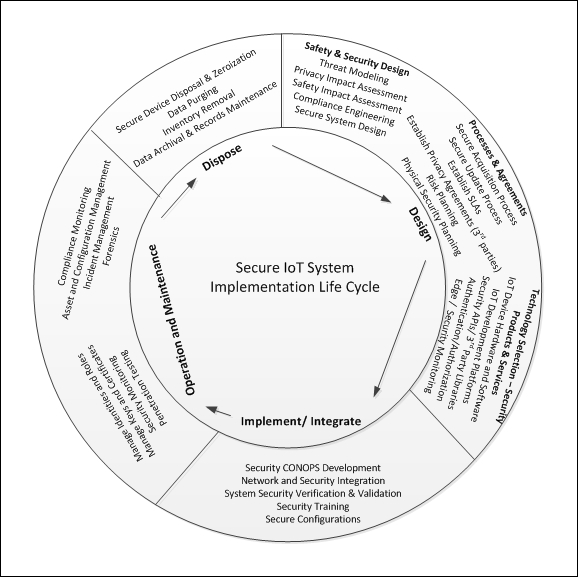

Safety and security design 594

Security system integration 600

Secure acquisition process 604

Establish privacy agreements 606

Consider new liabilities and guard against risk exposure 606

Establish an IoT physical security plan 607

Technology selection – security products and services 608

Selecting a real-time operating system (RTOS) 609

IoT relationship platforms 610

Cryptographic security APIs 611

Authentication/authorization 613

The secure IoT system implementation lifecycle 621

Implementation and integration 621

IoT security CONOPS document 622

Network and security integration 624

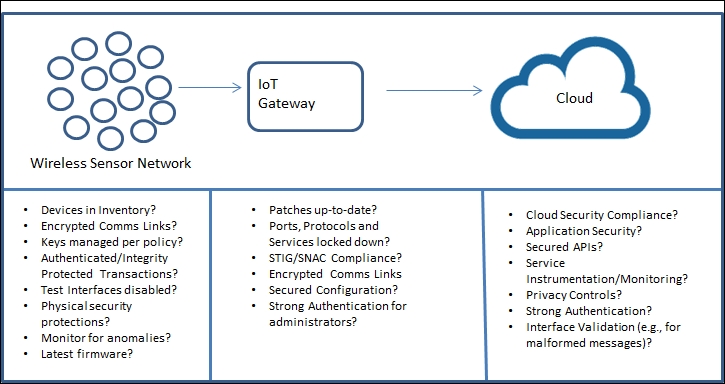

Examining network and security integration for WSNs 624

Examining network and security integration for connected cars 625

Planning for updates to existing network and security infrastructures 625

Planning for provisioning mechanisms 627

Integrating with security systems 627

System security verification and validation (V&V) 628

Security awareness training for users 630

Security administration training for the IoT 630

Secure gateway and network configurations 632

Operations and maintenance 633

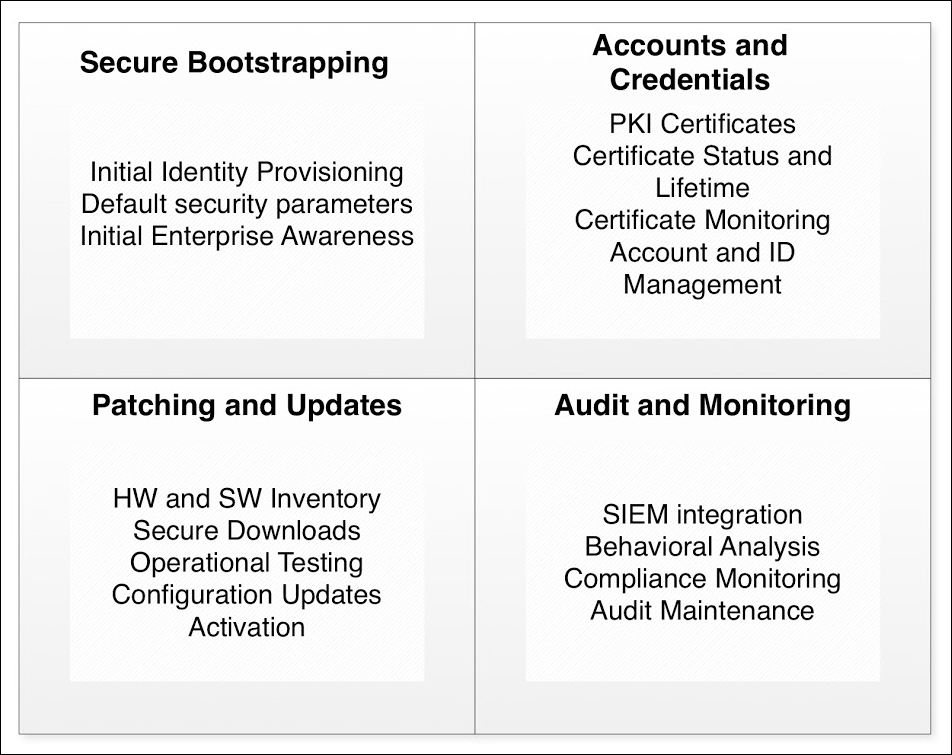

Managing identities, roles, and attributes 633

Identity relationship management and context 634

Attribute-based access control 634

Consider third-party data requirements 635

Manage keys and certificates 636

Evaluating hardware security 640

IoT penetration test tools 641

Asset and configuration management 643

Secure device disposal and zeroization 645

Data archiving and records management 646

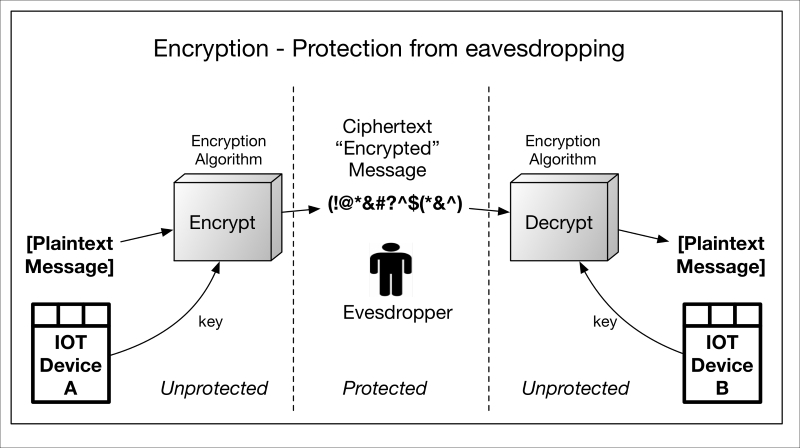

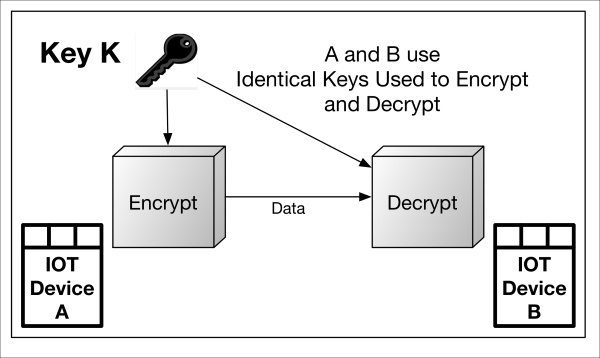

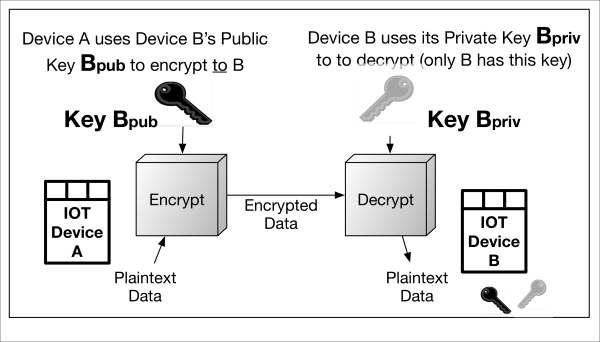

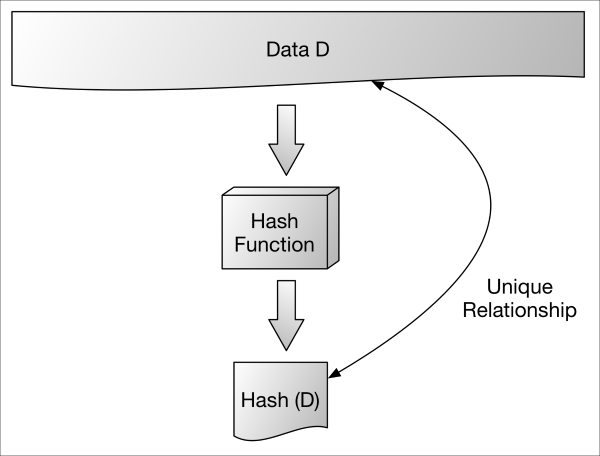

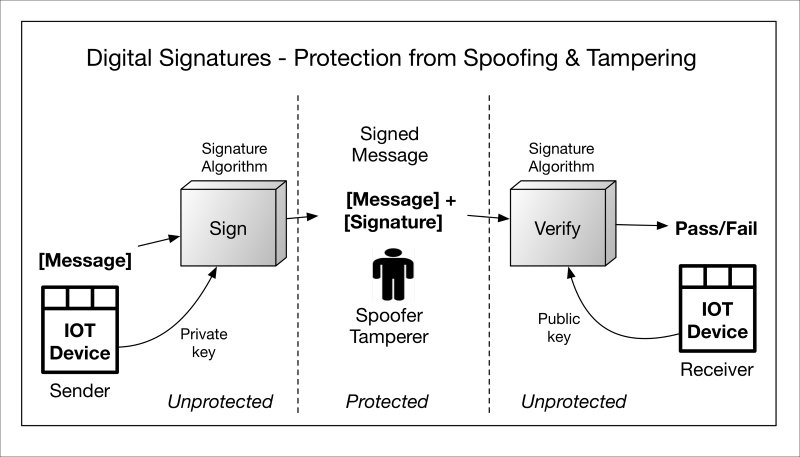

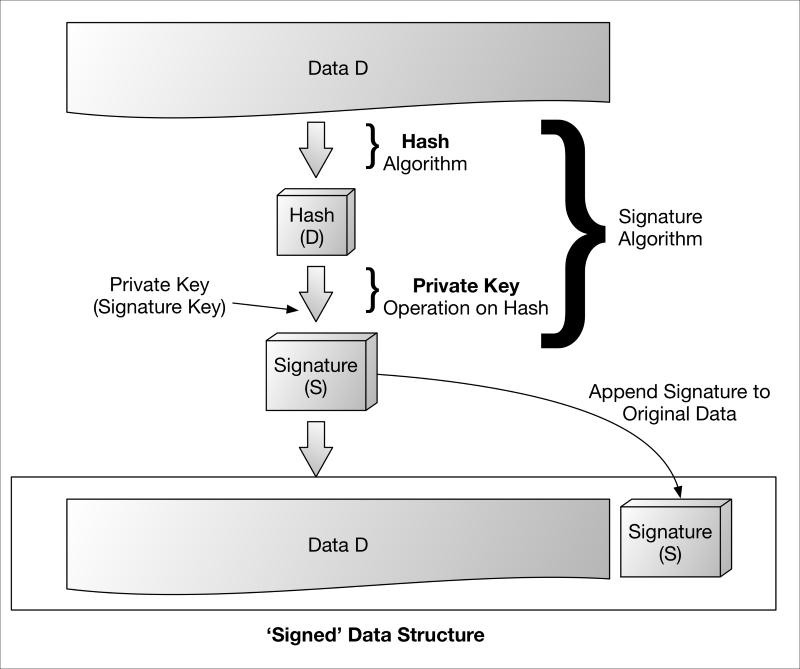

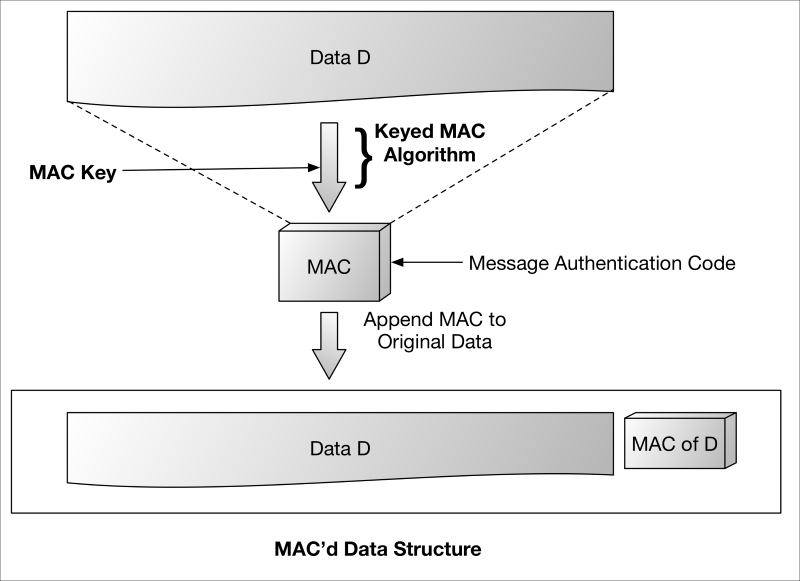

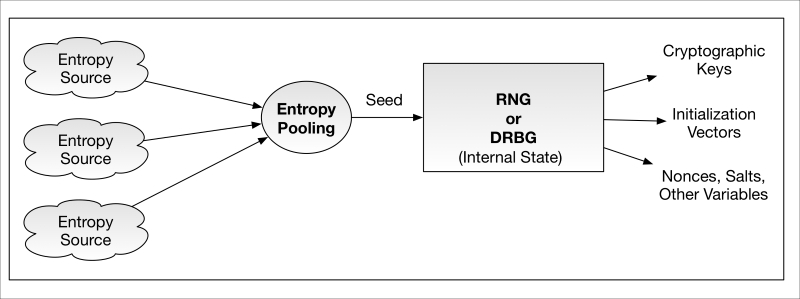

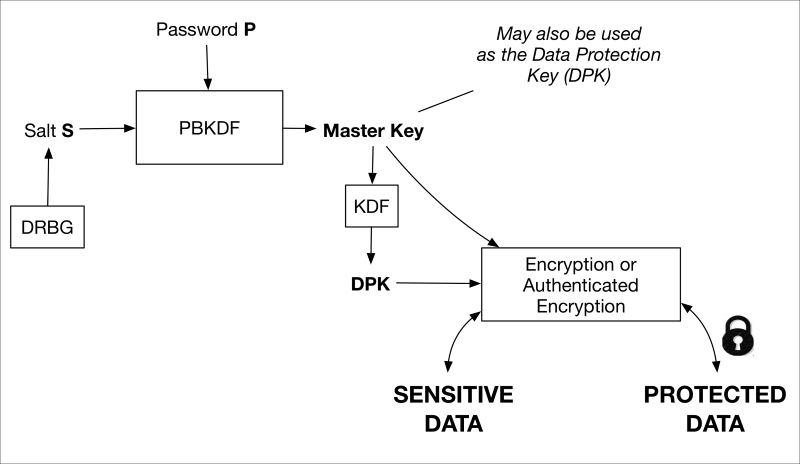

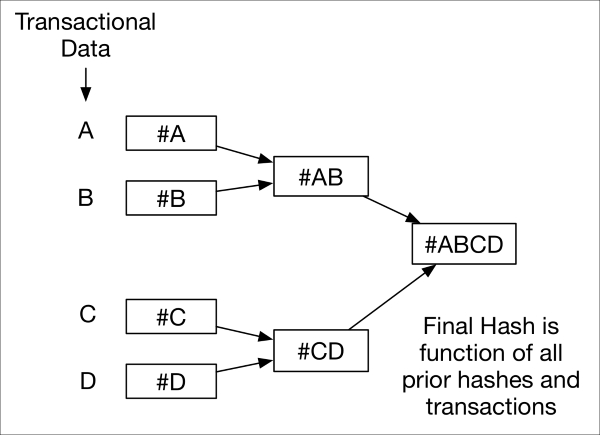

Cryptography and its role in securing the IoT 648

Types and uses of cryptographic primitives in the IoT 650

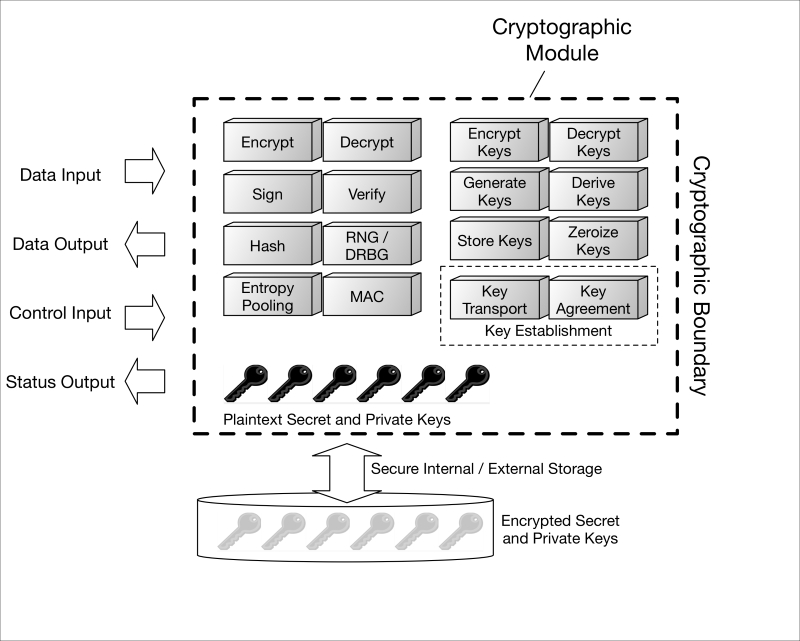

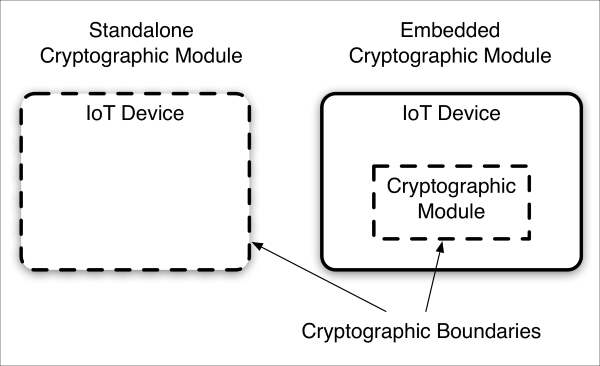

Cryptographic module principles 666

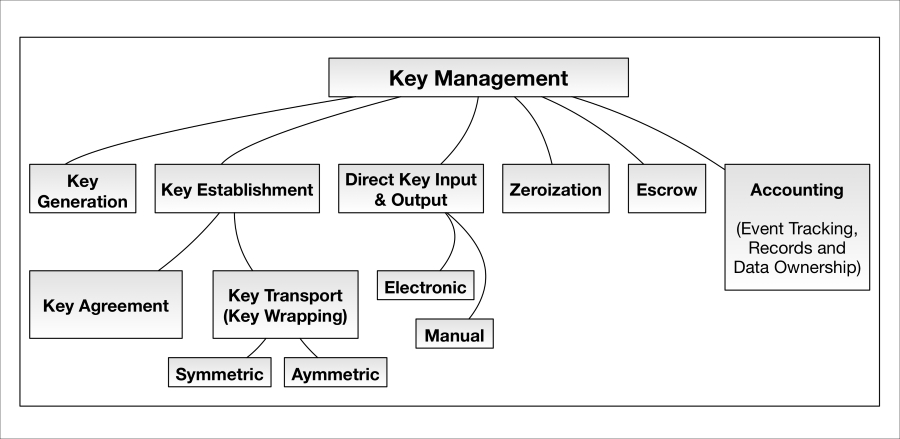

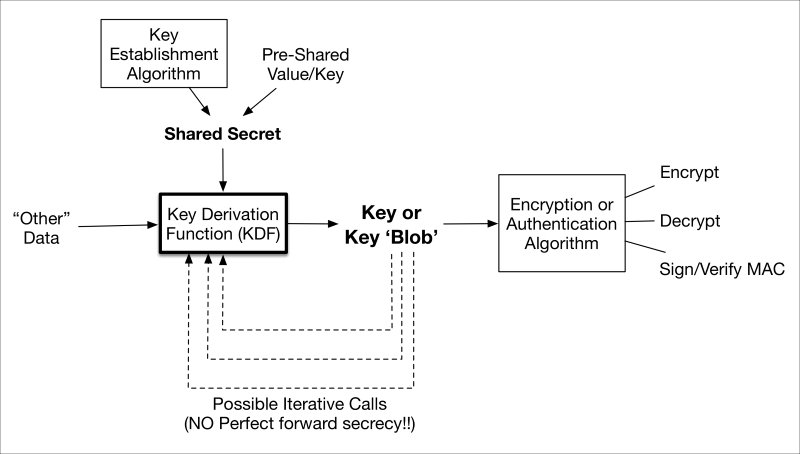

Cryptographic key management fundamentals 673

Summary of key management recommendations 681

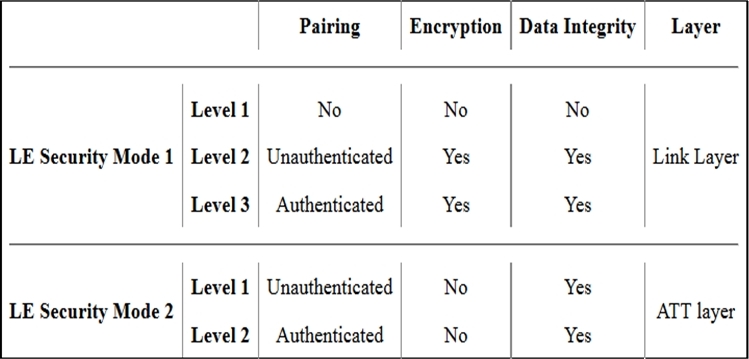

Examining cryptographic controls for IoT protocols 684

Cryptographic controls built into IoT communication protocols 684

Near field communication (NFC) 688

Cryptographic controls built into IoT messaging protocols 688

Future directions of the IoT and cryptography 691

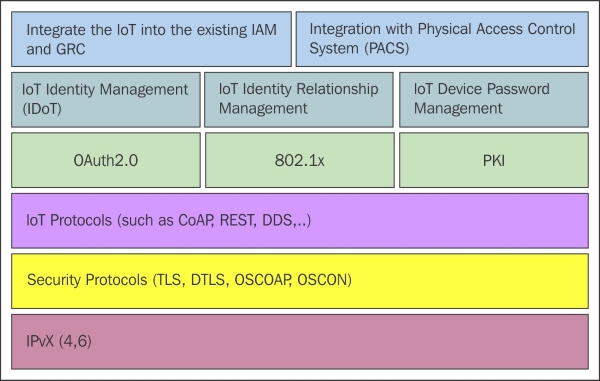

An introduction to identity and access management for the IoT 696

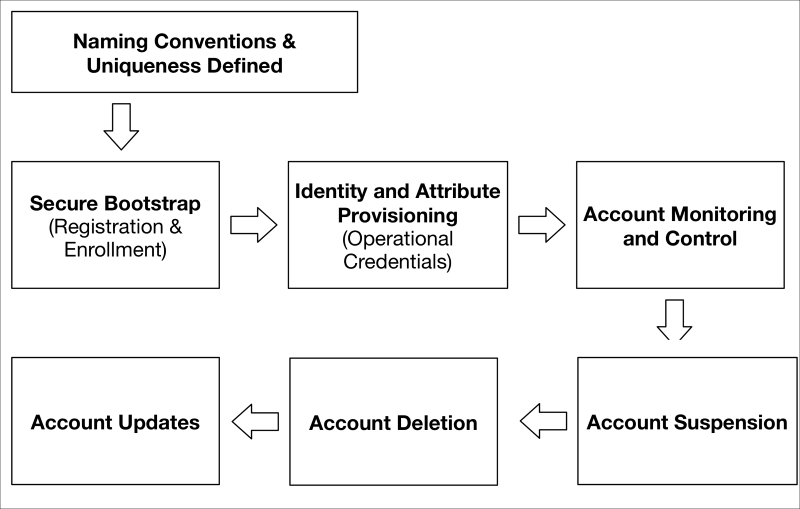

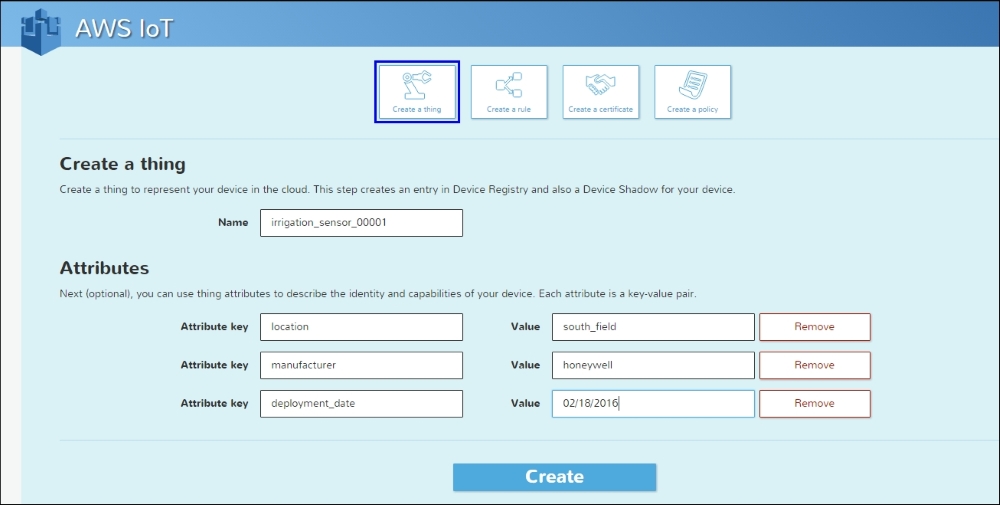

Establish naming conventions and uniqueness requirements 700

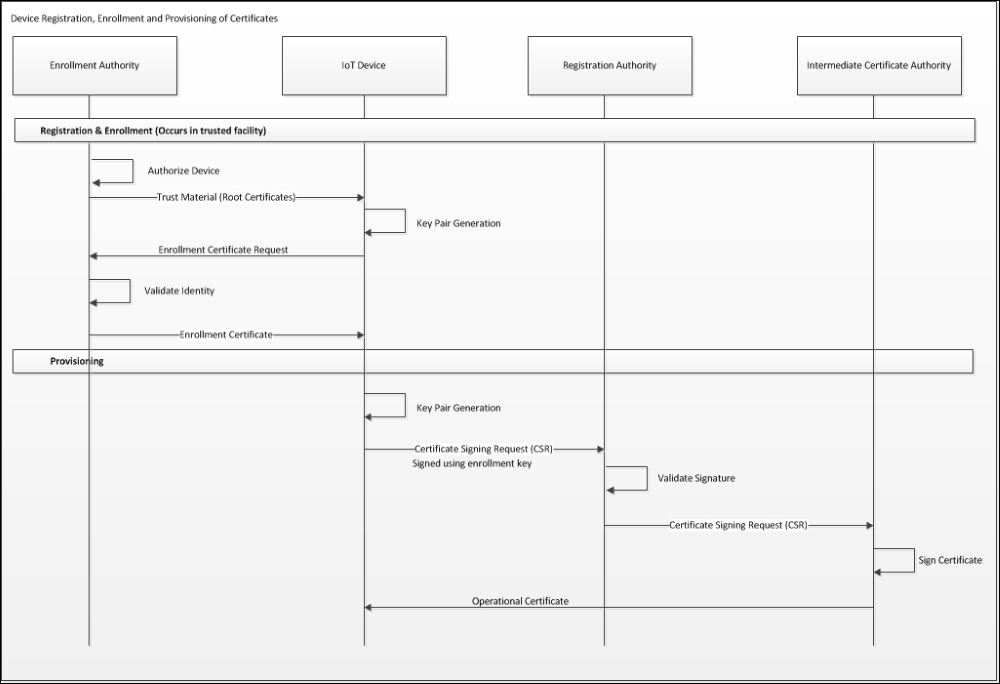

Credential and attribute provisioning 705

Account monitoring and control 708

Account/credential deactivation/deletion 708

Authentication credentials 710

New work in authorization for the IoT 714

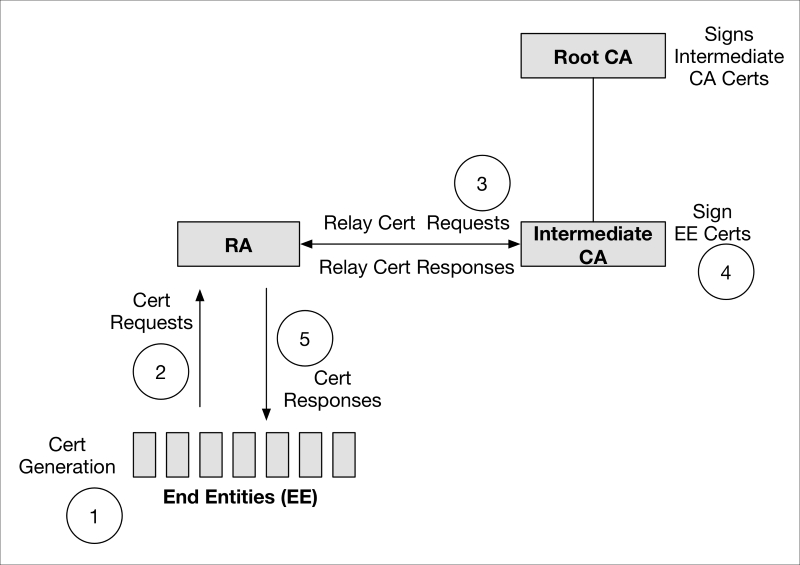

PKI architecture for privacy 719

Authorization and access control 722

Authorization and access controls within publish/subscribe protocols 723

Access controls within communication protocols 724

Privacy challenges introduced by the IoT 726

A complex sharing environment 728

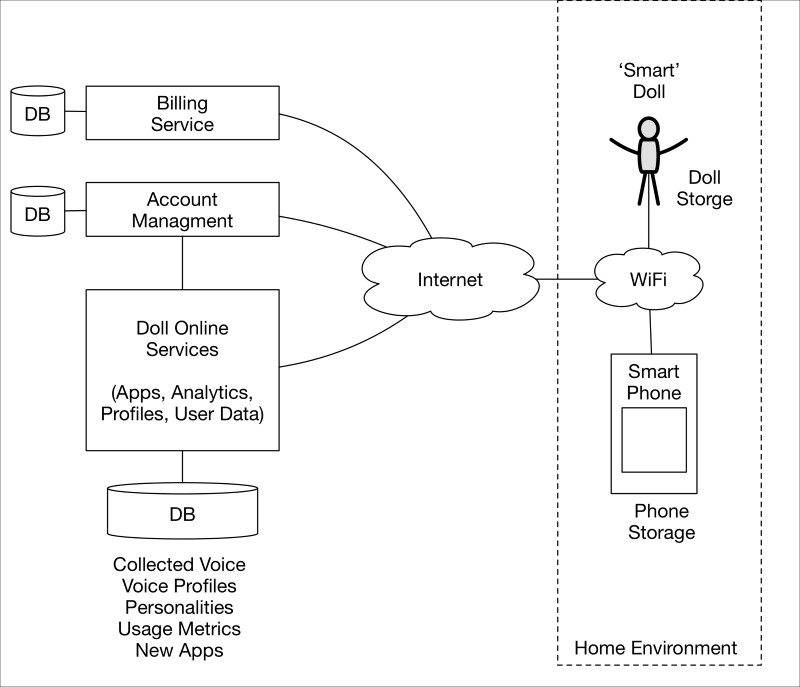

Metadata can leak private information also 729

New privacy approaches for credentials 729

Privacy impacts on IoT security systems 731

New methods of surveillance 732

Guide to performing an IoT PIA 733

Characterizing collected information 736

Uses of collected information 740

Auditing and accountability 743

Privacy embedded into design 745

Positive-sum, not zero-sum 745

Visibility and transparency 746

Privacy engineering recommendations 748

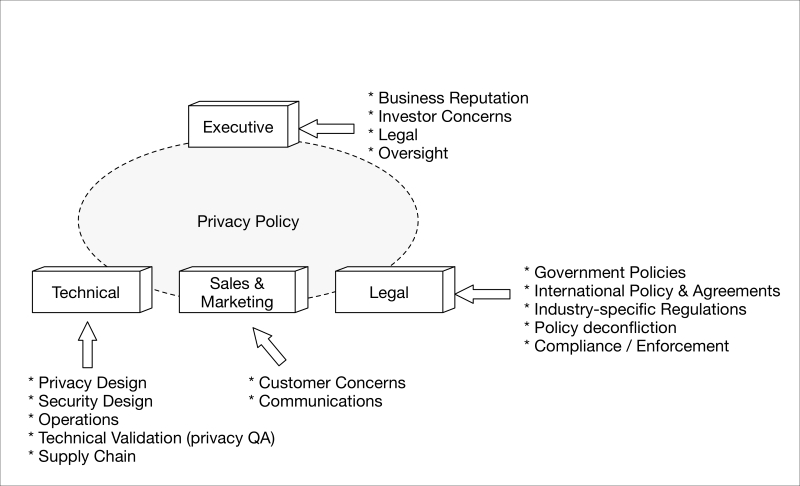

Privacy throughout the organization 748

Privacy engineering professionals 749

Privacy engineering activities 750

Implementing IoT systems in a compliant manner 755

Policies, procedures, and documentation 758

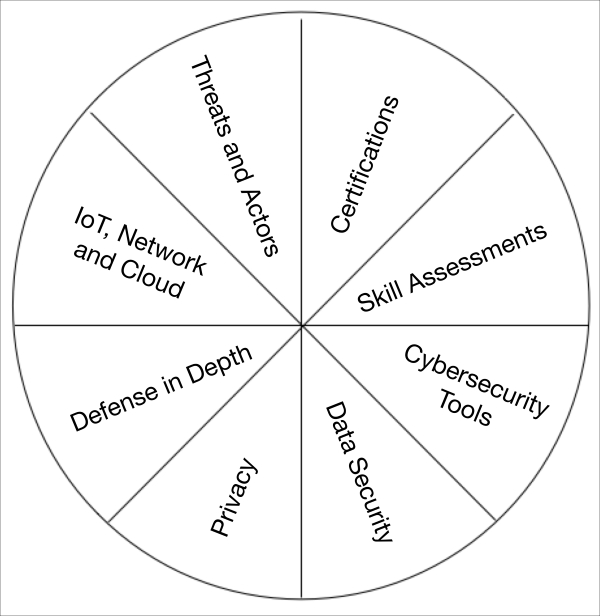

The IoT, network, and cloud 760

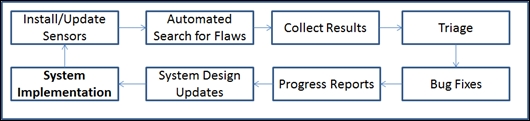

Internal compliance monitoring 762

Automated search for flaws 764

A complex compliance environment 774

Challenges associated with IoT compliance 774

Examining existing compliance standards support for the IoT 774

Underwriters Laboratory IoT certification 775

NIST Risk Management Framework (RMF) 779

Cloud services and the IoT 782

Asset/inventory management 783

Service provisioning, billing, and entitlement management 783

Customer intelligence and marketing 784

Message transport/broadcast 785

Examining IoT threats from a cloud perspective 785

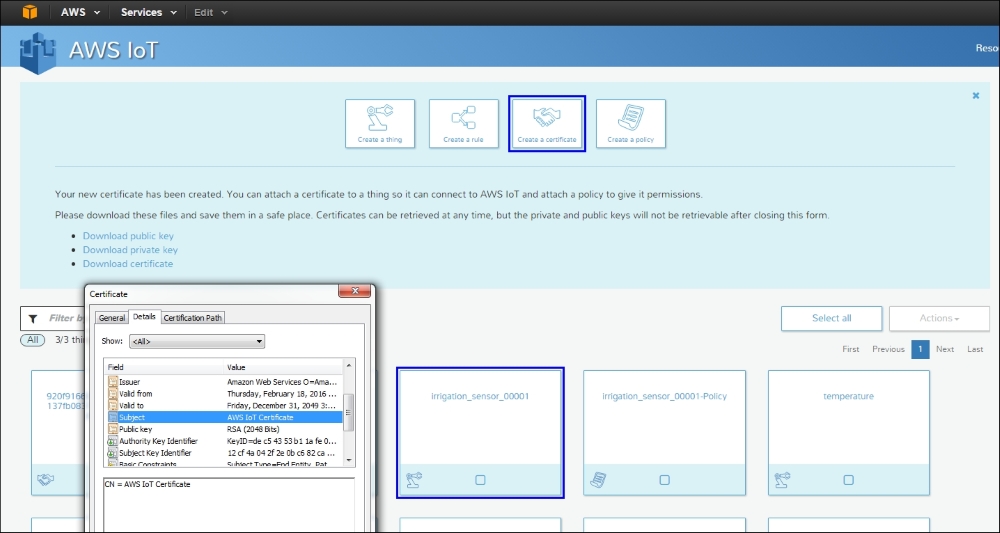

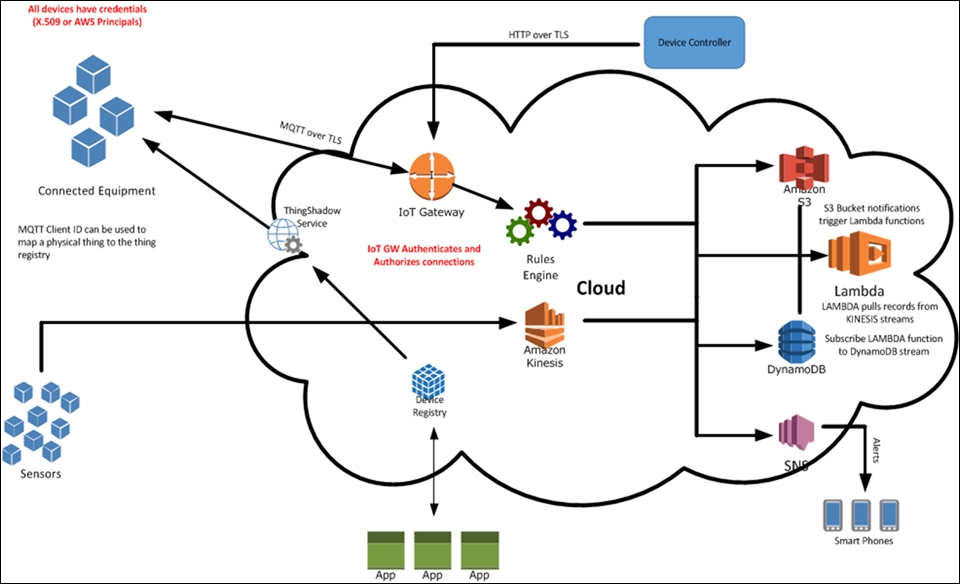

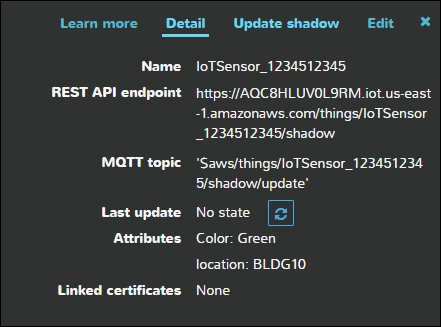

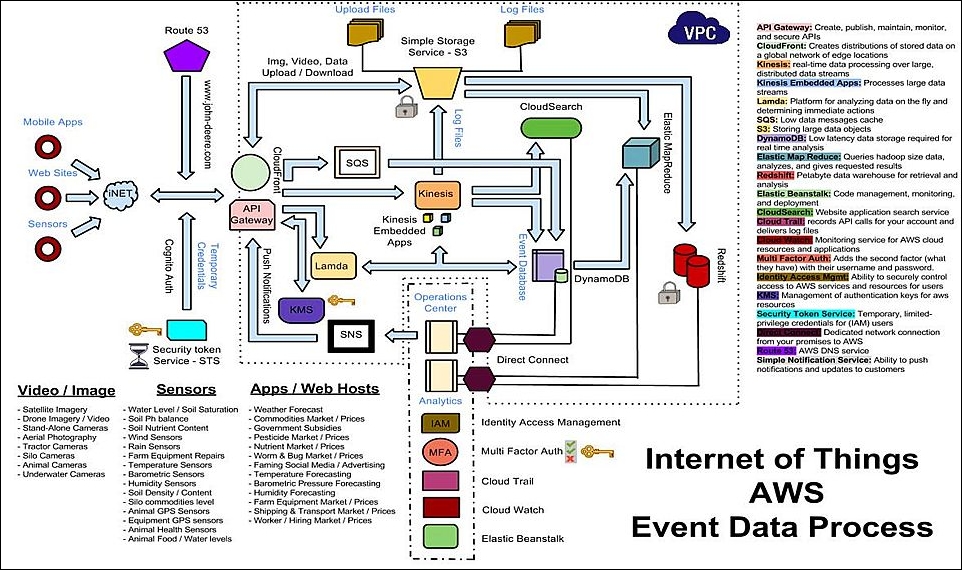

Exploring cloud service provider IoT offerings 788

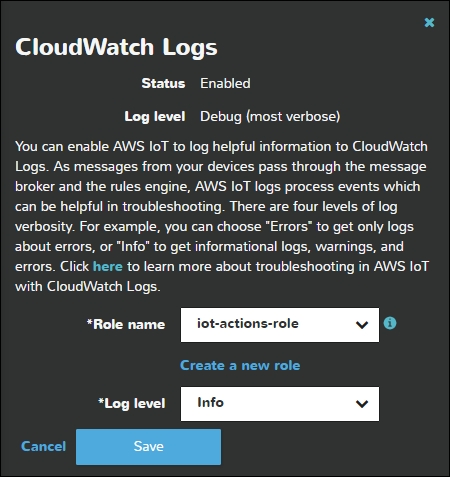

Cloud IoT security controls 798

Authentication (and authorization) 798

End-to-end security recommendations 799

Secure bootstrap and enrollment of IoT devices 801

Tailoring an enterprise IoT cloud security architecture 803

New directions in cloud-enabled IOT computing 807

Software defined networking (SDN) 807

Container support for secure development environments 808

Containers for deployment support 808

The move to 5G connectivity 809

On-demand computing and the IoT (dynamic compute resources) 810

New distributed trust models for the cloud 811

Threats both to safety and security 815

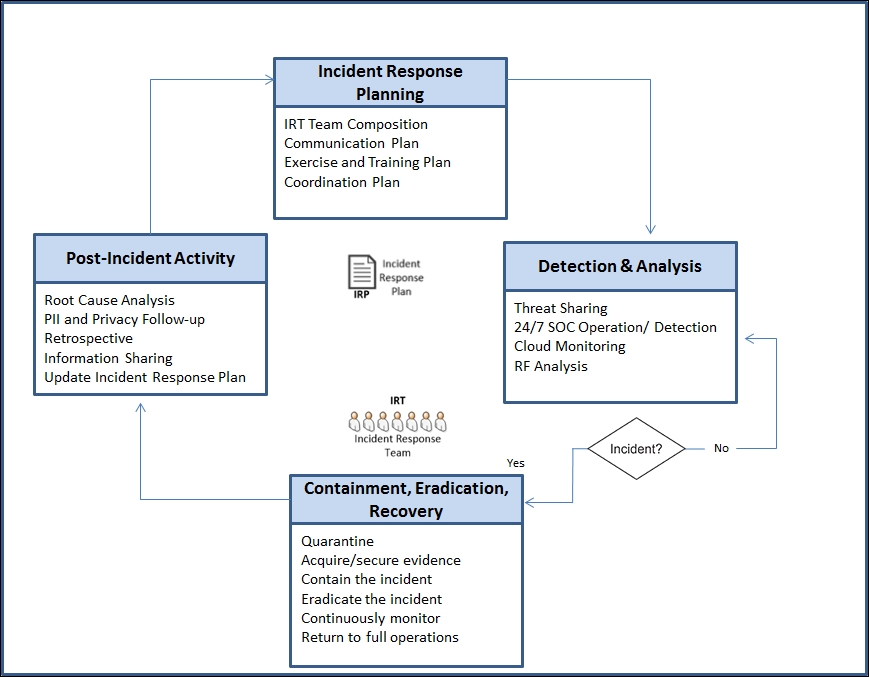

Planning and executing an IoT incident response 819

Incident response planning 820

IoT incident response procedures 823

IoT incident response team composition 824

Exercises and operationalizing an IRP in your organization 825

Analyzing the compromised system 828

Analyzing the IoT devices involved 830

IoT: Building Arduino-Based Projects

IoT: Building Arduino-Based Projects

What this learning path covers

What you need for this learning path

Internal representation of sensor values

External representation of sensor values

Accessing the serial port on Raspberry Pi

Creating persistent default settings

Adding configurable properties

Working with the current settings

Adding HTTP support to the sensor

Setting up an HTTP server on the sensor

Setting up an HTTPS server on the sensor

Displaying measured information in an HTML page

Generating graphics dynamically

Creating sensor data resources

Interpreting the readout request

Adding events for enhanced network performance

Adding HTTP support to the actuator

Creating the web services resource

Using the REST web service interface

Adding HTTP support to the controller

Providing a service architecture

Documenting device and service capabilities

Creating a device description document

Providing the device with an identity

Topping off with a URL to a web presentation page

Creating the service description document

Implementing the Still Image service

Initializing evented state variables

Providing web service properties

Discovering devices and services

Defining our first CoAP resources

Manually triggering an event notification

Registering data readout resources

Defining simple control resources

Controlling the output using CoAP

Monitoring observable resources

Adding MQTT support to the sensor

Controlling the thread life cycle

Adding MQTT support to the actuator

Initializing the topic content

Receiving the published content

Adding MQTT support to the controller

Handling events from the sensor

Decoding and parsing sensor values

Federating for global scalability

Provisioning for added security

Adding XMPP support to a thing

Connecting to the XMPP network

Monitoring connection state events

Handling HTTP requests over XMPP

Providing an additional layer of security

Initializing the Thing Registry interface

Removing a thing from the registry

Initializing the provisioning server interface

Handling friendship recommendations

Handling requests to unfriend somebody

Searching for a provisioning server

Providing registry information

Handling presence subscription requests

Continuing interrupted negotiations

Adding XMPP support to the sensor

Adding a sensor server interface

Adding XMPP support to the actuator

Adding a controller server interface

Adding XMPP support to the camera

Adding XMPP support to the controller

Setting up a sensor client interface

Setting up a controller client interface

Setting up a camera client interface

Fetching the camera image over XMPP

7. Using an IoT Service Platform

Downloading the Clayster platform

Configuring the Clayster system

Interfacing our devices using XMPP

Creating a class for our sensor

Interpreting incoming sensor data

Creating a class for our actuator

Customizing control operations

Creating a class for our camera

Creating our control application

Defining the application class

Understanding application references

Viewing the 10-foot interface application

Understanding protocol bridging

The basics of the Clayster abstraction model

Understanding editable data sources

Understanding editable objects

Overriding key properties and methods

Handling communication with devices

Understanding the CoAP gateway architecture

9. Security and Interoperability

Reinventing the wheel, but an inverted one

Getting access to stored credentials

Sniffing network communication

Port scanning and web crawling

X.509 certificates and encryption

Using message brokers and provisioning servers

Centralization versus decentralization

Allows new kinds of services and reuse of devices

Combining security and interoperability

1. Internet-Controlled PowerSwitch

Hardware and software requirements

Connecting Arduino Ethernet Shield to the Internet

Testing your Arduino Ethernet Shield

Wiring PowerSwitch Tail with Arduino Ethernet Shield

Turning PowerSwitch Tail into a simple web server

A step-by-step process for building a web-based control panel

Handling client requests by HTTP GET

Sensing the availability of mains electricity

Testing the mains electricity sensor

Building a user-friendly web user interface

Adding a Cascade Style Sheet to the web user interface

Finding the MAC address and obtaining a valid IP address

Obtaining an IP address using DHCP

2. Wi-Fi Signal Strength Reader and Haptic Feedback

Stacking the WiFi Shield with Arduino

Hacking an Arduino earlier than REV3

Knowing more about connections

Fixing the Arduino WiFi library

Connecting your Arduino to a Wi-Fi network

Wi-Fi signal strength and RSSI

Reading the Wi-Fi signal strength

Haptic feedback and haptic motors

Getting started with the Adafruit DRV2605 haptic controller

Connecting a haptic controller to Arduino WiFi Shield

Soldering a vibrator to the haptic controller breakout board

Downloading the Adafruit DRV2605 library

Making vibration effects for RSSI

Implementing a simple web server

Reading the signal strength over Wi-Fi

3. Internet-Connected Smart Water Meter

Wiring the water flow sensor with Arduino

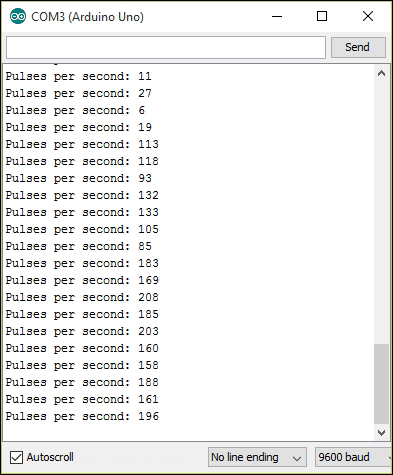

Reading and counting pulses with Arduino

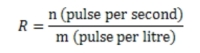

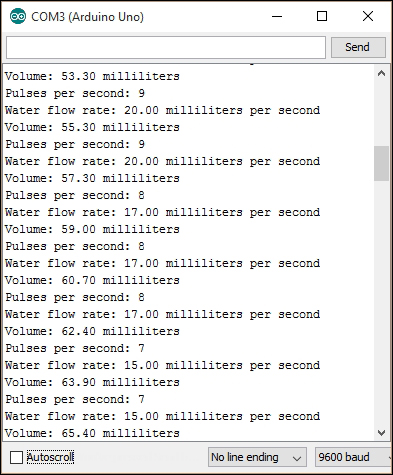

Calculating the water flow rate

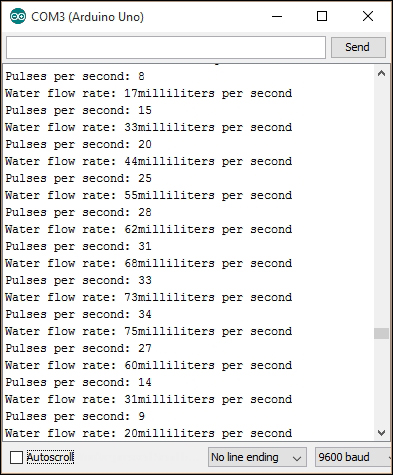

Calculating the water flow volume

Adding an LCD screen to the water meter

Converting your water meter to a web server

4. Arduino Security Camera with Motion Detection

Getting started with TTL Serial Camera

Wiring the TTL Serial Camera for image capturing

Wiring the TTL Serial Camera for video capturing

Testing NTSC video stream with video screen

Connecting the TTL Serial Camera with Arduino and Ethernet Shield

Generating the photo upload sketch

Connecting the camera output with Temboo

5. Solar Panel Voltage Logging with NearBus Cloud Connector and Xively

Connecting a solar cell with the Arduino Ethernet board

Building the circuit with Arduino

Creating and configuring a Xively account

Configuring the NearBus connected device for Xively

Developing a web page to display the real-time voltage values

6. GPS Location Tracker with Temboo, Twilio, and Google Maps

Hardware and software requirements

Getting started with the Arduino GPS shield

Connecting the Arduino GPS shield with the Arduino Ethernet board

Displaying the current location on Google Maps

Finding Twilio LIVE API credentials

Finding Twilio test API credentials

Creating Twilio Choreo with Temboo

Sending an SMS with Twilio API

Send a GPS location data using Temboo

7. Tweet-a-Light – Twitter-Enabled Electric Light

Hardware and software requirements

Setting environment variables for Python

Installing the setuptools utility on Python

Installing the pip utility on Python

Opening the Python interpreter

Creating a Twitter app and obtaining API keys

Writing a Python script to read Twitter tweets

Reading the serial data using Arduino

Connecting the PowerSwitch Tail with Arduino

8. Controlling Infrared Devices Using IR Remote

Building an Arduino infrared recorder and remote

Building the IR receiver module

Capturing IR commands in hexadecimal

Capturing IR commands in the raw format

Adding an IR socket to non-IR enabled devices

Cybersecurity versus IoT security and cyber-physical systems

Why cross-industry collaboration is vital

Energy industry and smart grid

Connected vehicles and transportation

Implantables and medical devices

IoT device and service deployment

Data link and physical protocols

IoT data collection, storage, and analytics

IoT integration platforms and solutions

The IoT of the future and the need to secure

The future – cognitive systems and the IoT

2. Vulnerabilities, Attacks, and Countermeasures

Primer on threats, vulnerability, and risks (TVR)

The classic pillars of information assurance

Primer on attacks and countermeasures

Fault tree and attack tree differences

Merging fault and attack tree analysis

Example anatomy of a deadly cyber-physical attack

Wireless reconnaissance and mapping

Lessons learned and systematic approaches

Step 2 – create a system/architecture overview

Step 3 – decompose the IoT system

3. Security Engineering for IoT Development

Building security in to design and development

Security in agile developments

Focusing on the IoT device in operation

Consider new liabilities and guard against risk exposure

Establish an IoT physical security plan

Technology selection – security products and services

Selecting a real-time operating system (RTOS)

The secure IoT system implementation lifecycle

Implementation and integration

Network and security integration

Examining network and security integration for WSNs

Examining network and security integration for connected cars

Planning for updates to existing network and security infrastructures

Planning for provisioning mechanisms

Integrating with security systems

System security verification and validation (V&V)

Security awareness training for users

Security administration training for the IoT

Secure gateway and network configurations

Managing identities, roles, and attributes

Identity relationship management and context

Attribute-based access control

Consider third-party data requirements

Asset and configuration management

Secure device disposal and zeroization

Data archiving and records management

5. Cryptographic Fundamentals for IoT Security Engineering

Cryptography and its role in securing the IoT

Types and uses of cryptographic primitives in the IoT

Cryptographic module principles

Cryptographic key management fundamentals

Summary of key management recommendations

Examining cryptographic controls for IoT protocols

Cryptographic controls built into IoT communication protocols

Near field communication (NFC)

Cryptographic controls built into IoT messaging protocols

Future directions of the IoT and cryptography

6. Identity and Access Management Solutions for the IoT

An introduction to identity and access management for the IoT

Establish naming conventions and uniqueness requirements

Credential and attribute provisioning

Account monitoring and control

Account/credential deactivation/deletion

New work in authorization for the IoT

Authorization and access control

Authorization and access controls within publish/subscribe protocols

Access controls within communication protocols

7. Mitigating IoT Privacy Concerns

Privacy challenges introduced by the IoT

Metadata can leak private information also

New privacy approaches for credentials

Privacy impacts on IoT security systems

Guide to performing an IoT PIA

Characterizing collected information

Privacy engineering recommendations

Privacy throughout the organization

Privacy engineering professionals

Privacy engineering activities

8. Setting Up a Compliance Monitoring Program for the IoT

Implementing IoT systems in a compliant manner

Policies, procedures, and documentation

Internal compliance monitoring

A complex compliance environment

Challenges associated with IoT compliance

Examining existing compliance standards support for the IoT

Underwriters Laboratory IoT certification

NIST Risk Management Framework (RMF)

Service provisioning, billing, and entitlement management

Customer intelligence and marketing

Examining IoT threats from a cloud perspective

Exploring cloud service provider IoT offerings

Authentication (and authorization)

End-to-end security recommendations

Secure bootstrap and enrollment of IoT devices

Tailoring an enterprise IoT cloud security architecture

New directions in cloud-enabled IOT computing

Software defined networking (SDN)

Container support for secure development environments

Containers for deployment support

On-demand computing and the IoT (dynamic compute resources)

New distributed trust models for the cloud

Threats both to safety and security

Planning and executing an IoT incident response

IoT incident response procedures

IoT incident response team composition

Exercises and operationalizing an IRP in your organization

Analyzing the compromised system

Analyzing the IoT devices involved

Containment, eradication, and recovery

IoT: Building Arduino-Based Projects

IoT: Building Arduino-Based Projects

Explore and learn about Internet of Things to develop interactive Arduino-based Internet projects

A course in three modules

BIRMINGHAM - MUMBAI

IoT: Building Arduino-Based Projects

Copyright © 2016 Packt Publishing

All rights reserved. No part of this course may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, without the prior written permission of the publisher, except in the case of brief quotations embedded in critical articles or reviews.

Every effort has been made in the preparation of this course to ensure the accuracy of the information presented. However, the information contained in this course is sold without warranty, either express or implied. Neither the authors, nor Packt Publishing, and its dealers and distributors will be held liable for any damages caused or alleged to be caused directly or indirectly by this course.

Packt Publishing has endeavored to provide trademark information about all of the companies and products mentioned in this course by the appropriate use of capitals. However, Packt Publishing cannot guarantee the accuracy of this information.

Published on: August 2016

Published by Packt Publishing Ltd.

Livery Place

35 Livery Street

Birmingham B3 2PB, UK.

ISBN 978-1-78712-063-1

Authors

Peter Waher

Pradeeka Seneviratne

Brian Russell

Drew Van Duren

Reviewers

Fiore Basile

Dominique Guinard

Phodal Huang

Joachim Lindborg

Ilesh Patel

Francesco Azzola

Paul Deng

Charalampos Doukas

Paul Massey

Aaron Guzman

Content Development Editor

Nikhil Borkar

Graphics

Abhinash Sahu

Production Coordinator

Melwyn Dsa

Internet of Things is one of the current top tech buzzwords. Large corporations value its market in tens of trillions of dollars for the upcoming years, investing billions into research and development. On top of this, there is the plan for the release of tens of billions of connected devices during the same period. So you can see why it is only natural that it causes a lot of buzz. While we benefit from the IoT, we must prevent, to the highest possible degree, our current and future IoT from harming us; and to do this, we need to secure it properly and safely. We hope you enjoy this course and find the information useful for securing your IoT.

Module 1, Learning Internet of Things, begins with exploring the popular HTTP, UPnP, CoAP, MQTT, and XMPP protocols. You will learn how protocols and patterns can put limitations on network topology and how they affect the direction of communication and the use of firewalls. This module gives you a practical overview of the existing protocols, communication patterns, architectures, and security issues important to Internet of Things

There are a few Appendices which are not present in this module but are available for download at the following link: https://www.packtpub.com/sites/default/files/downloads/3494_3532OT_Appendices.pdf

Module 2, Internet of Things with Arduino Blueprints, provides you up to eight projects that will allow devices to communicate with each other, access information over the Internet, store and retrieve data, and interact with users―creating smart, pervasive, and always-connected environments. You can use these projects as blueprints for many other IoT projects and put them to good use.

Module 3, Practical Internet of Things Security, provides a set of guidelines to architect and deploy a secure IoT in your Enterprise. The aim is to showcase how the IoT is implemented in early-adopting industries and describe how lessons can be learned and shared across diverse industries to support a secure IoT.

For Module 1, Apart from a computer running Windows, Linux, or Mac OS, you will need four or five Raspberry Pi model B credit-card-sized computers, with SD cards containing the Raspbian operating system installed. Appendix R, Bill of Materials, which is available online, lists the components used to build the circuits used in the examples presented in this module.

The software used in this module is freely available on the Internet. The source code for all the projects presented in this module is available for download from GitHub. See the section about downloading example code, which will follow, for details.

Module 2, has been written and tested on the Windows environment and uses various software components with Arduino.

For Module 3, you will need SecureITree version 4.3, a common desktop or laptop, and a Windows, Mac, or Linux platform running Java 8.

If you’re a developer or electronics engineer who is curious about Internet of Things, then this is the course for you. A rudimentary understanding of electronics, Raspberry Pi, or similar credit-card sized computers, and some programming experience using managed code such as C# or Java will be helpful. Business analysts and managers will also find this course useful.

Feedback from our readers is always welcome. Let us know what you think about this course—what you liked or disliked. Reader feedback is important for us as it helps us develop titles that you will really get the most out of.

To send us general feedback, simply e-mail <feedback@packtpub.com>, and mention the course’s title in the subject of your message.

If there is a topic that you have expertise in and you are interested in either writing or contributing to a book, see our author guide at www.packtpub.com/authors.

Now that you are the proud owner of a Packt course, we have a number of things to help you to get the most from your purchase.

You can download the example code files for this course from your account at http://www.packtpub.com. If you purchased this course elsewhere, you can visit http://www.packtpub.com/support and register to have the files e-mailed directly to you.

You can download the code files by following these steps:

Log in or register to our website using your e-mail address and password.

Hover the mouse pointer on the SUPPORT tab at the top.

Click on Code Downloads & Errata.

Enter the name of the course in the Search box.

Select the course for which you’re looking to download the code files.

Choose from the drop-down menu where you purchased this course from.

Click on Code Download.

You can also download the code files by clicking on the Code Files button on the course’s webpage at the Packt Publishing website. This page can be accessed by entering the course’s name in the Search box. Please note that you need to be logged in to your Packt account.

Once the file is downloaded, please make sure that you unzip or extract the folder using the latest version of:

WinRAR / 7-Zip for Windows

Zipeg / iZip / UnRarX for Mac

7-Zip / PeaZip for Linux

The code bundle for the course is also hosted on GitHub at https://github.com/PacktPublishing/IoT-Building-Arduino-based-Projects. We also have other code bundles from our rich catalog of books, videos and courses available at https://github.com/PacktPublishing/. Check them out!

Although we have taken every care to ensure the accuracy of our content, mistakes do happen. If you find a mistake in one of our courses—maybe a mistake in the text or the code—we would be grateful if you could report this to us. By doing so, you can save other readers from frustration and help us improve subsequent versions of this course. If you find any errata, please report them by visiting http://www.packtpub.com/submit-errata, selecting your course, clicking on the Errata Submission Form link, and entering the details of your errata. Once your errata are verified, your submission will be accepted and the errata will be uploaded to our website or added to any list of existing errata under the Errata section of that title.

To view the previously submitted errata, go to https://www.packtpub.com/books/content/support and enter the name of the course in the search field. The required information will appear under the Errata section.

Piracy of copyrighted material on the Internet is an ongoing problem across all media. At Packt, we take the protection of our copyright and licenses very seriously. If you come across any illegal copies of our works in any form on the Internet, please provide us with the location address or website name immediately so that we can pursue a remedy.

Please contact us at <copyright@packtpub.com> with a link to the suspected pirated material.

We appreciate your help in protecting our authors and our ability to bring you valuable content.

If you have a problem with any aspect of this course, you can contact us at <questions@packtpub.com>, and we will do our best to address the problem.

Learning Internet of Things

Explore and learn about Internet of Things with the help of engaging and enlightening tutorials designed for Raspberry Pi

Chapter 1. Preparing our IoT Projects

This book will cover a series of projects for Raspberry Pi that cover very common and handy use cases within Internet of Things (IoT). These projects include the following:

Sensor: This project is used to sense physical values and publish them together with metadata on the Internet in various ways.

Actuator: This performs actions in the physical world based on commands it receives from the Internet.

Controller: This is a device that provides application intelligence to the Internet.

Camera: This is a device that publishes a camera through which you will take pictures.

Bridge: This is the fifth and final project, which is a device that acts as a bridge between different protocols. We will cover this at an introductory level later in the book (Chapter 8, Creating Protocol Gateways, if you would like to take a look at it now), as it relies on the IoT service platform.

Before delving into the different protocols used in Internet of Things, we will dedicate some time in this chapter to set up some of these projects, present circuit diagrams, and perform basic measurement and control operations, which are not specific to any communication protocol. The following chapters will then use this code as the basis for the new code presented in each chapter.

Along with the project preparation phase, you will also learn about some of the following concepts in this chapter:

Development using C# for Raspberry Pi

The basic project structure

Introduction to Clayster libraries

The sensor, actuator, controller, and camera projects

Interfacing the General Purpose Input/Output pins

Circuit diagrams

Hardware interfaces

Introduction to interoperability in IoT

Data persistence using an object database

Our first project will be the sensor project. Since it is the first one, we will cover it in more detail than the following projects in this book. A majority of what we will explore will also be reutilized in other projects as much as possible. The development of the sensor is broken down into six steps, and the source code for each step can be downloaded separately. You will find a simple overview of this here:

Firstly, you will set up the basic structure of a console application.

Then, you will configure the hardware and learn to sample sensor values and maintain a useful historical record.

After adding HTTP server capabilities as well as useful web resources to the project, you will publish the sensor values collected on the Internet.

You will then handle persistence of sampled data in the sensor so it can resume after outages or software updates.

The next step will teach you how to add a security layer, requiring user authentication to access sensitive information, on top of the application.

In the last step, you will learn how to overcome one of the major obstacles in the request/response pattern used by HTTP, that is, how to send events from the server to the client.

Tip

Only the first two steps are presented here, and the rest in the following chapter, since they introduce HTTP. The fourth step will be introduced in this chapter but will be discussed in more detail in Appendix C, Object Database.

I assume that you are familiar with Raspberry Pi and have it configured. If not, refer to http://www.raspberrypi.org/help/faqs/#buyingWhere.

In our examples, we will use Model B with the following:

An SD card with the Raspbian operating system installed

A configured network access, including Wi-Fi, if used

User accounts, passwords, access rights, time zones, and so on, all configured correctly

Note

I also assume that you know how to create and maintain terminal connections with the device and transfer files to and from the device.

All our examples will be developed on a remote PC (for instance, a normal working laptop) using C# (C + + + + if you like to think of it this way), as this is a modern programming language that allows us to do what we want to do with IoT. It also allows us to interchange code between Windows, Linux, Macintosh, Android, and iOS platforms.

Tip

Don't worry about using C#. Developers with knowledge in C, C++, or Java should have no problems understanding it.

Once a project is compiled, executable files are deployed to the corresponding Raspberry Pi (or Raspberry Pi boards) and then executed. Since the code runs on .NET, any language out of the large number of CLI-compatible languages can be used.

Tip

Development tools for C# can be downloaded for free from http://xamarin.com/.

To prepare Raspberry for the execution of the .NET code, we need to install Mono, which contains the Common Language Runtime for .NET that will help us run the .NET code on Raspberry. This is done by executing the following commands in a terminal window in Raspberry Pi:

$ sudo apt-get

update

$ sudo apt-get upgrade

$ sudo apt-get

install mono-complete

Your device is now ready to run the .NET code.

To facilitate the development of IoT applications, this book provides you with the right to use seven Clayster libraries for private and commercial applications. These are available on GitHub with the downloadable source code for each chapter. Of these seven libraries, two are provided with the source code so that the community can extend them as they desire. Furthermore, the source code of all the examples shown in this book is also available for download.

The following Clayster libraries are included:

|

Library |

Description |

|

Clayster.Library.Data |

This provides the application with a powerful object database. Objects are persisted and can be searched directly in the code using the object's class definition. No database coding is necessary. Data can be stored in the SQLite database provided in Raspberry Pi. |

|

Clayster.Library.EventLog |

This provides the application with an extensible event logging architecture that can be used to get an overview of what happens in a network of things. |

|

Clayster.Library.Internet |

This contains classes that implement common Internet protocols. Applications can use these to communicate over the Internet in a dynamic manner. |

|

Clayster.Library.Language |

This provides mechanisms to create localizable applications that are simple to translate and that can work in an international setting. |

|

Clayster.Library.Math |

This provides a powerful extensible, mathematical scripting language that can help with automation, scripting, graph plotting, and others. |

|

Clayster.Library.IoT |

This provides classes that help applications become interoperable by providing data representation and parsing capabilities of data in IoT. The source code is also included here. |

|

Clayster.Library.RaspberryPi |

This contains Hardware Abstraction Layer (HAL) for Raspberry Pi. It provides object-oriented interfaces to interact with devices connected to the General Purpose Input/Output (GPIO) pins available. The source code is also included here. |

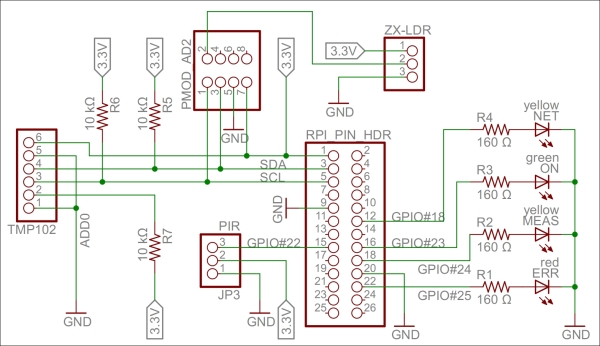

Our sensor prototype will measure three things: light, temperature, and motion. To summarize, here is a brief description of the components:

The light sensor is a simple ZX-LDR analog sensor that we will connect to a four-channel (of which we use only one) analog-to-digital converter (Digilent Pmod AD2), which is connected to an I2C bus that we will connect to the standard GPIO pins for I2C.

Note

The I2C bus permits communication with multiple circuits using synchronous communication that employs a Serial Clock Line (SCL) and Serial Data Line (SDA) pin. This is a common way to communicate with integrated circuits.

The temperature sensor (Texas Instruments TMP102) connects directly to the same I2C bus.

The SCL and SDA pins on the I2C bus use recommended pull-up resistors to make sure they are in a high state when nobody actively pulls them down.

The infrared motion detector (Parallax PIR sensor) is a digital input that we connect to GPIO 22.

We also add four LEDs to the board. One of these is green and is connected to GPIO 23. This will show when the application is running. The second one is yellow and is connected to GPIO 24. This will show when measurements are done. The third one is yellow and is connected to GPIO 18. This will show when an HTTP activity is performed. The last one is red and is connected to GPIO 25. This will show when a communication error occurs.

The pins that control the LEDs are first connected to 160 Ω resistors before they are connected to the LEDs, and then to ground. All the hardware of the prototype board is powered by the 3.3 V source provided by Raspberry Pi. A 160 Ω resistor connected in series between the pin and ground makes sure 20 mA flows through the LED, which makes it emit a bright light.

Tip

For an introduction to GPIO on Raspberry Pi, please refer to http://www.raspberrypi.org/documentation/usage/gpio/.

Two guides on GPIO pins can be found at http://elinux.org/RPi_Low-level_peripherals.

For more information, refer to http://pi.gadgetoid.com/pinout.

The following figure shows a circuit diagram of our prototype board:

A circuit diagram for the Sensor project

Tip

For a bill of materials containing the components used, see Appendix R, Bill of Materials.

We also need to create a console application project in Xamarin. Appendix A, Console Applications, details how to set up a console application in Xamarin and how to enable event logging and then compile, deploy, and execute the code on Raspberry Pi.

Interaction with our hardware is done using corresponding classes defined in the Clayster.Library.RaspberryPi library, for which the source code is provided. For instance, digital output is handled using the DigitalOutput class and digital input with the DigitalInput class. Devices connected to an I2C bus are handled using the I2C class. There are also other generic classes, such as ParallelDigitalInput and ParallelDigitalOutput, that handle a series of digital input and output at once. The SoftwarePwm class handles a software-controlled pulse-width modulation output. The Uart class handles communication using the UART port available on Raspberry Pi. There's also a subnamespace called Devices where device-specific classes are available.

In the end, all classes communicate with the static GPIO class, which is used to interact with the GPIO layer in Raspberry Pi.

Each class has a constructor that initializes the corresponding hardware resource, methods and properties to interact with the resource, and finally a Dispose method that releases the resource.

Tip

It is very important that you release the hardware resources allocated before you terminate the application. Since hardware resources are not controlled by the operating system, the fact that the application is terminated is not sufficient to release the resources. For this reason, make sure you call the Dispose methods of all the allocated hardware resources before you leave the application. Preferably, this should be done in the finally statement of a try-finally block.

The hardware interfaces used for our LEDs are as follows:

private static

DigitalOutput executionLed = new DigitalOutput (23, true);

private

static DigitalOutput measurementLed = new DigitalOutput (24,

false);

private static DigitalOutput errorLed = new

DigitalOutput (25, false);

private static DigitalOutput

networkLed = new DigitalOutput (18, false);

We use a DigitalInput class for our motion detector:

private static

DigitalInput motion = new DigitalInput (22);

With our temperature sensor on the I2C bus, which limits the serial clock frequency to a maximum of 400 kHz, we interface as follows:

private

static I

2

C

i

2

cBus

= new I

2

C

(3, 2, 400000);

private static TexasInstrumentsTMP102 tmp102 =

new TexasInstrumentsTMP102 (0, i

2

cBus);

We interact with the light sensor using an analog-to-digital converter as follows:

private

static AD799x adc = new AD799x (0, true, false, false, false,

i

2

cBus);

The sensor data values will be represented by the following set of variables:

private static bool

motionDetected = false;

private static double

temperatureC;

private static double lightPercent;

private

static object synchObject = new object ();

Historical values will also be kept so that trends can be analyzed:

private static

List<Record> perSecond = new List<Record> ();

private

static List<Record> perMinute = new List<Record>

();

private static List<Record> perHour = new List<Record>

();

private static List<Record> perDay = new List<Record>

();

private static List<Record> perMonth = new

List<Record> ();

Note

Appendix B, Sampling and History, describes how to perform basic sensor value sampling and historical record keeping in more detail using the hardware interfaces defined earlier. It also describes the Record class.

Persisting data is simple. This is done using an object database. This object database analyzes the class definition of objects to persist and dynamically creates the database schema to accommodate the objects you want to store. The object database is defined in the Clayster.Library.Data library. You first need a reference to the object database, which is as follows:

internal static

ObjectDatabase db;

Then, you need to provide information on how to connect to the underlying database. This can be done in the .config file of the application or the code itself. In our case, we will specify a SQLite database and provide the necessary parameters in the code during the startup:

DB.BackupConnectionString

= "Data Source=sensor.db;Version=3;";

DB.BackupProviderName

= "Clayster.Library.Data.Providers." +

"SQLiteServer.SQLiteServerProvider";

Finally, you will get a proxy object for the object database as follows. This object can be used to store, update, delete, and search for objects in your database:

db = DB.GetDatabaseProxy

("TheSensor");

Tip

Appendix C, Object Database, shows how the data collected in this application is persisted using only the available class definitions through the use of this object database.

By doing this, the sensor does not lose data if Raspberry Pi is restarted.

To facilitate the interchange of sensor data between devices, an interoperable sensor data format based on XML is provided in the Clayster.Library.IoT library. There, sensor data consists of a collection of nodes that report data ordered according to the timestamp. For each timestamp, a collection of fields is reported. There are different types of fields available: numerical, string, date and time, timespan, Boolean, and enumeration-valued fields. Each field has a field name, field value of the corresponding type and the optional readout type (if the value corresponds to a momentary value, peak value, status value, and so on), a field status, or Quality of Service value and localization information.

The Clayster.Library.IoT.SensorData namespace helps us export sensor data information by providing an abstract interface called ISensorDataExport. The same logic can later be used to export to different sensor data formats. The library also provides a class named ReadoutRequest that provides information about what type of data is desired. We can use this to tailor the data export to the desires of the requestor.

The export starts by calling the Start() method on the sensor data export module and ends with a call to the End() method. Between these two, a sequence of StartNode() and EndNode() method calls are made, one for each node to export. To simplify our export, we then call another function to output data from an array of Record objects that contain our data. We use the same method to export our momentary values by creating a temporary Record object that would contain them:

private static void

ExportSensorData (ISensorDataExport Output, ReadoutRequest

Request)

{

Output.Start ();

lock (synchObject)

{

Output.StartNode ("Sensor");

Export

(Output, new Record[]

{

new Record

(DateTime.Now, temperatureC, lightPercent, motionDetected)

},ReadoutType.MomentaryValues, Request);

Export (Output,

perSecond, ReadoutType.HistoricalValuesSecond, Request);

Export (Output, perMinute, ReadoutType.HistoricalValuesMinute,

Request);

Export (Output, perHour,

ReadoutType.HistoricalValuesHour, Request);

Export (Output,

perDay, ReadoutType.HistoricalValuesDay, Request);

Export

(Output, perMonth, ReadoutType.HistoricalValuesMonth, Request);

Output.EndNode ();

}

Output.End ();

}

For each array of Record objects, we then export them as follows:

Note

It is important to note here that we need to check whether the corresponding readout type is desired by the client before you export data of this type.

The Export method exports an enumeration of Record objects as follows. First it checks whether the corresponding readout type is desired by the client before exporting data of this type. The method also checks whether the data is within any time interval requested and that the fields are of interest to the client. If a data field passes all these tests, it is exported by calling any of the instances of the overloaded method ExportField(), available on the sensor data export object. Fields are exported between the StartTimestamp() and EndTimestamp() method calls, defining the timestamp that corresponds to the fields being exported:

private static void

Export(ISensorDataExport Output, IEnumerable<Record> History,

ReadoutType Type,ReadoutRequest Request)

{

if((Request.Types & Type) != 0)

{

foreach(Record

Rec in History)

{

if(!Request.ReportTimestamp

(Rec.Timestamp))

continue;

Output.StartTimestamp(Rec.Timestamp);

if

(Request.ReportField("Temperature"))

Output.ExportField("Temperature",Rec.TemperatureC, 1,"C",

Type);

if(Request.ReportField("Light"))

Output.ExportField("Light",Rec.LightPercent, 1, "%",

Type);

if(Request.ReportField ("Motion"))

Output.ExportField("Motion",Rec.Motion, Type);

Output.EndTimestamp();

}

}

}

We can test the method by exporting some sensor data to XML using the SensorDataXmlExport class. It implements the ISensorDataExport interface. The result would look something like this if you export only momentary and historic day values.

Note

The ellipsis (…) represents a sequence of historical day records, similar to the one that precedes it, and newline and indentation has been inserted for readability.

<?xml

version="1.0"?>

<fields

xmlns="urn:xmpp:iot:sensordata">

<node

nodeId="Sensor">

<timestamp

value="2014-07-25T12:29:32Z">

<numeric

value="19.2" unit="C" automaticReadout="true"

momentary="true" name="Temperature"/>

<numeric value="48.5" unit="%"

automaticReadout="true" momentary="true"

name="Light"/>

<boolean value="true"

automaticReadout="true" momentary="true"

name="Motion"/>

</timestamp>

<timestamp value="2014-07-25T04:00:00Z">

<numeric value="20.6" unit="C"

automaticReadout="true" name="Temperature"

historicalDay="true"/>

<numeric

value="13.0" unit="%" automaticReadout="true"

name="Light" historicalDay="true"/>

<boolean value="true" automaticReadout="true"

name="Motion" historicalDay="true"/>

</timestamp>

...

</node>

</fields>

Another very common type of object used in automation and IoT is the actuator. While the sensor is used to sense physical magnitudes or events, an actuator is used to control events or act with the physical world. We will create a simple actuator that can be run on a standalone Raspberry Pi. This actuator will have eight digital outputs and one alarm output. The actuator will not have any control logic in it by itself. Instead, interfaces will be published, thereby making it possible for controllers to use the actuator for their own purposes.

Note

In the sensor project, we went through the details on how to create an IoT application based on HTTP. In this project, we will reuse much of what has already been done and not explicitly go through these steps again. We will only list what is different.

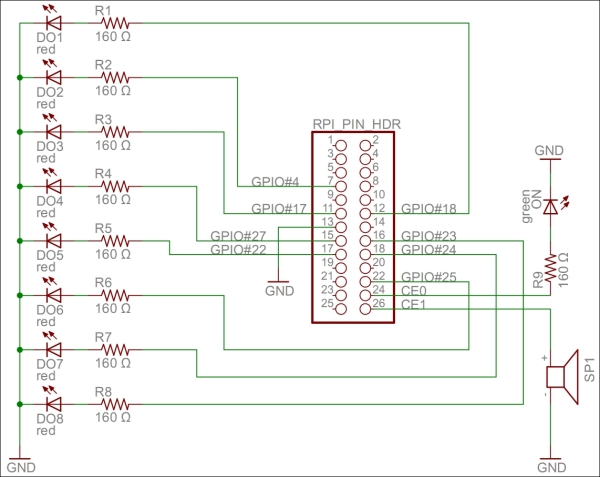

Our actuator prototype will control eight digital outputs and one alarm output:

Each one of the digital output is connected to a 160 Ω resistor and a red LED to ground. If the output is high, the LED is turned on. We have connected the LEDs to the GPIO pins in this order: 18, 4, 17, 27, 22, 25, 24, and 23. If Raspberry Pi R1 is used, GPIO pin 27 should be renumbered to 21.

For the alarm output, we connect a speaker to GPIO pin 7 (CE1) and then to ground. We also add a connection from GPIO 8 (CE0), a 160 Ω resistor to a green LED, and then to ground. The green LED will show when the application is being executed.

Tip

For a bill of materials containing components used, refer to Appendix R, Bill of Materials.

The actuator project can be better understood with the following circuit diagram:

A circuit diagram for the actuator project

All the hardware interfaces except the alarm output are simple digital outputs. They can be controlled by the DigitalOutput class. The alarm output will control the speaker through a square wave signal that will be output on GPIO pin 7 using the SoftwarePwm class, which outputs a pulse-width-modulated square signal on one or more digital outputs. The SoftwarePwm class will only be created when the output is active. When not active, the pin will be left as a digital input.

The declarations look as follows:

private static

DigitalOutput executionLed =

new DigitalOutput (8,

true);

private static SoftwarePwm alarmOutput = null;

private

static Thread alarmThread = null;

private static DigitalOutput[]

digitalOutputs = new DigitalOutput[]

{

new

DigitalOutput (18, false),

new DigitalOutput (4, false),

new DigitalOutput (17, false),

new DigitalOutput (27,

false),// pin 21 on RaspberryPi R1

new DigitalOutput (22,

false),

new DigitalOutput (25, false),

new

DigitalOutput (24, false),

new DigitalOutput (23, false)

};

Digital output is controlled using the objects in the digitalOutputs array directly. The alarm is controlled by calling the AlarmOn() and AlarmOff()methods.

Tip

Appendix D, Control, details how these hardware interfaces are used to perform control operations.

We have a sensor that provides sensing and an actuator that provides actuating. But none have any intelligence yet. The controller application provides intelligence to the network. It will consume data from the sensor, then draw logical conclusions and use the actuator to inform the world of its conclusions.

The controller we create will read the ambient light and motion detection provided by the sensor. If it is dark and there exists movement, the controller will sound the alarm. The controller will also use the LEDs of the controller to display how much light is being reported.

Tip

Of the three applications we have presented thus far, this application is the simplest to implement since it does not publish any information that needs to be protected. Instead, it uses two other applications through the interfaces they have published. The project does not use any particular hardware either.

The first step toward creating a controller is to access sensors from where relevant data can be retrieved. We will duplicate sensor data into these private member variables:

private static bool

motion = false;

private static double lightPercent = 0;

private

static bool hasValues = false;

In the following chapter, we will show you different methods to populate these variables with values by using different communication protocols. Here, we will simply assume the variables have been populated by the correct sensor values.

We get help from Clayster.Library.IoT.SensorData to parse data in XML format, generated by the sensor data export we discussed earlier. So, all we need to do is loop through the fields that are received and extract the relevant information as follows. We return a Boolean value that would indicate whether the field values read were different from the previous ones:

private static bool

UpdateFields(XmlDocument Xml)

{

FieldBoolean Boolean;

FieldNumeric Numeric;

bool Updated = false;

foreach (Field F in Import.Parse(Xml))

{

if(F.FieldName == "Motion" && (Boolean = F as

FieldBoolean) != null)

{

if(!hasValues || motion

!= Boolean.Value)

{

motion = Boolean.Value;

Updated = true;

}

} else if(F.FieldName ==

"Light" && (Numeric = F as FieldNumeric) != null &&

Numeric.Unit == "%")

{

if(!hasValues ||

lightPercent != Numeric.Value)

{

lightPercent

= Numeric.Value;

Updated = true;

}

}

}

return Updated;

}

The controller needs to calculate which LEDs to light along with the state of the alarm output based on the values received by the sensor. The controlling of the actuator can be done from a separate thread so that communication with the actuator does not affect the communication with the sensor, and the other way around. Communication between the main thread that is interacting with the sensor and the control thread is done using two AutoResetEvent objects and a couple of control state variables:

private static

AutoResetEvent updateLeds = new AutoResetEvent(false);

private

static AutoResetEvent updateAlarm = new

AutoResetEvent(false);

private static int lastLedMask =

-1;

private static bool? lastAlarm = null;

private static

object synchObject = new object();

We have eight LEDs to control. We will turn them off if the sensor reports 0 percent light and light them all if the sensor reports 100 percent light. The control action we will use takes a byte where each LED is represented by a bit. The alarm is to be sounded when there is less than 20 percent light reported and the motion is detected. The calculations are done as follows:

private static void

CheckControlRules()

{

int NrLeds =

(int)System.Math.Round((8 * lightPercent) / 100);

int LedMask

= 0;

int i = 1;

bool Alarm;

while(NrLeds >

0)

{

NrLeds--;

LedMask |= i;

i <<=

1;

}

Alarm = lightPercent < 20 &&

motion;

We then compare these results with the previous ones to see whether we need to inform the control thread to send control commands:

lock(synchObject)

{

if(LedMask != lastLedMask)

{

lastLedMask = LedMask;

updateLeds.Set();

}

if (!lastAlarm.HasValue || lastAlarm.Value != Alarm)

{

lastAlarm = Alarm;

updateAlarm.Set();

}

}

}

In this book, we will also introduce a camera project. This device will use an infrared camera that will be published in the network, and it will be used by the controller to take pictures when the alarm goes off.

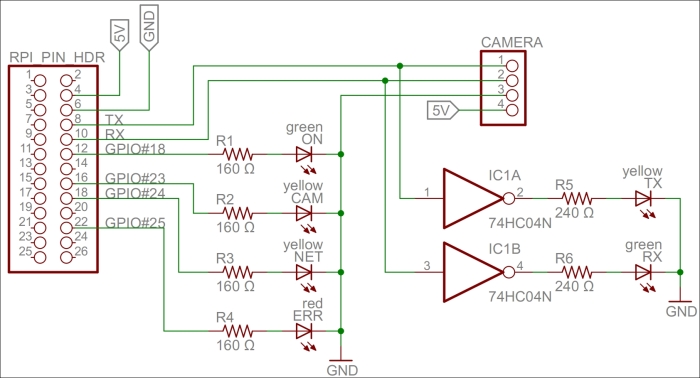

For our camera project, we've chosen to use the LinkSprite JPEG infrared color camera instead of the normal Raspberry Camera module or a normal UVC camera. It allows us to take photos during the night and leaves us with two USB slots free for Wi-Fi and keyboard. You can take a look at the essential information about the camera by visiting http://www.linksprite.com/upload/file/1291522825.pdf. Here is a summary of the circuit connections:

The camera has a serial interface that we can use through the UART available on Raspberry Pi. It has four pins, two of which are reception pin (RX) and transmission pin (TX) and the other two are connected to 5 V and ground GND respectively.

The RX and TX on the Raspberry Pi pin header are connected to the TX and RX on the camera, respectively. In parallel, we connect the TX and RX lines to a logical inverter. Then, via 240 Ω resistors, we connect them to two LEDs, yellow for TX and green for RX, and then to GND. Since TX and RX are normally high and are drawn low during communication, we need to invert the signals so that the LEDs remain unlit when there is no communication and they blink when communication is happening.

We also connect four GPIO pins (18, 23, 24, and 25) via 160 Ω resistors to four LEDs and ground to signal the different states in our application. GPIO 18 controls a green LED signal when the camera application is running. GPIO 23 and 24 control yellow LEDs; the first GPIO controls the LED when communication with the camera is being performed, and the second controls the LED when a network request is being handled. GPIO 25 controls a red LED, and it is used to show whether an error has occurred somewhere.

This project can be better understood with the following circuit diagram:

A circuit diagram for the camera project

Tip

For a bill of materials containing components used, see Appendix R, Bill of Materials.

To be able to access the serial port on Raspberry Pi from the code, we must first make sure that the Linux operating system does not use it for other purposes. The serial port is called ttyAMA0 in the operating system, and we need to remove references to it from two operating system files: /boot/cmdline.txt and /etc/inittab. This will disable access to the Raspberry Pi console via the serial port. But we will still be able to access it using SSH or a USB keyboard. From a command prompt, you can edit the first file as follows:

$ sudo nano

/boot/cmdline.txt

You need to edit the second file as well, as follows:

$ sudo nano

/etc/inittab

Tip

For more detailed information, refer to the http://elinux.org/RPi_Serial_Connection#Preventing_Linux_using_the_serial_port article and read the section on how to prevent Linux from using the serial port.

To interface the hardware laid out on our prototype board, we will use the Clayster.Library.RaspberryPi library. We control the LEDs using DigitalOutput objects:

private static

DigitalOutput executionLed = new DigitalOutput (18, true);

private

static DigitalOutput cameraLed = new DigitalOutput (23,

false);

private static DigitalOutput networkLed = new

DigitalOutput (24, false);

private static DigitalOutput errorLed

= new DigitalOutput (25, false);

The LinkSprite camera is controlled by the LinkSpriteJpegColorCamera class in the Clayster.Library.RaspberryPi.Devices.Cameras subnamespace. It uses the Uart class to perform serial communication. Both these classes are available in the downloadable source code:

private static

LinkSpriteJpegColorCamera camera = new LinkSpriteJpegColorCamera

(LinkSpriteJpegColorCamera.BaudRate.Baud__38400);

For our camera to work, we need four persistent and configurable default settings: camera resolution, compression level, image encoding, and an identity for our device. To achieve this, we create a DefaultSettings class that we can persist in the object database:

public class

DefaultSettings : DBObject

{

private

LinkSpriteJpegColorCamera.ImageSize resolution =

LinkSpriteJpegColorCamera.ImageSize._320x240;

private byte

compressionLevel = 0x36;

private string imageEncoding =

"image/jpeg";

private string udn =

Guid.NewGuid().ToString();

public DefaultSettings() :

base(MainClass.db)

{

}

We publish the camera resolution property as follows. The three possible enumeration values are: ImageSize_160x120, ImageSize_320x240, and ImageSize_640x480. These correspond to the three different resolutions supported by the camera:

[DBDefault

(LinkSpriteJpegColorCamera.ImageSize._320x240)]

public

LinkSpriteJpegColorCamera.ImageSize Resolution

{

get

{

return this.resolution;

}

set

{

if (this.resolution != value)

{

this.resolution = value;

this.Modified = true;

}

}

}

We publish the compression-level property in a similar manner.

Internally, the camera only supports JPEG-encoding of the pictures that are taken. But in our project, we will add software support for PNG and BMP compression as well. To make things simple and extensible, we choose to store the image-encoding method as a string containing the Internet media type of the encoding scheme implied:

[DBShortStringClipped

(false)]

[DBDefault ("image/jpeg")]

public string

ImageEncoding

{

get

{

return

this.imageEncoding;

}

set

{

if(this.imageEncoding != value)

{

this.imageEncoding = value;

this.Modified = true;

}

}

}

We add a method to load any persisted settings from the object database:

public static

DefaultSettings LoadSettings()

{

return

MainClass.db.FindObjects

<DefaultSettings>().GetEarliestCreatedDeleteOthers();

}

}

In our main application, we create a variable to hold our default settings. We make sure to define it as internal using the internal access specifier so that we can access it from other classes in our project:

internal static

DefaultSettings defaultSettings;

During application initialization, we load any default settings available from previous executions of the application. If none are found, the default settings are created and initiated to the default values of the corresponding properties, including a new GUID identifying the device instance in the UDN property the UDN property:

defaultSettings =

DefaultSettings.LoadSettings();

if(defaultSettings == null)

{

defaultSettings = new DefaultSettings();

defaultSettings.SaveNew();

}

To avoid having to reconfigure the camera every time a picture is to be taken, something that is time-consuming, we need to remember what the current settings are and avoid reconfiguring the camera unless new properties are used. These current settings do not need to be persisted since we can reinitialize the camera every time the application is restarted. We declare our current settings parameters as follows:

private static

LinkSpriteJpegColorCamera.ImageSize currentResolution;

private

static byte currentCompressionRatio;

During application initialization, we need to initialize the camera. First, we get the default settings as follows:

Log.Information("Initializing

camera.");

try

{

currentResolution =

defaultSettings.Resolution;

currentCompressionRatio =

defaultSettings.CompressionLevel;

Here, we need to reset the camera and set the default image resolution. After changing the resolution, a new reset of the camera is required. All of this is done on the camera's default baud rate, which is 38,400 baud:

try

{

camera.Reset();// First try @ 38400 baud

camera.SetImageSize(currentResolution);

camera.Reset();

Since image transfer is slow, we then try to set the highest baud rate supported by the camera:

camera.SetBaudRate

(LinkSpriteJpegColorCamera.BaudRate.Baud_115200);

camera.Dispose();

camera

= new LinkSpriteJpegColorCamera

(LinkSpriteJpegColorCamera.BaudRate.Baud_115200);

If the preceding procedure fails, an exception will be thrown. The most probable cause for this to fail, if the hardware is working correctly, is that the application has been restarted and the camera is already working at 115,200 baud. This will be the case during development, for instance. In this case, we simply set the camera to 115,200 baud and continue. Here is room for improved error handling, and trying out different options to recover from more complex error conditions and synchronize the current states with the states of the camera:

}

catch(Exception)

// If already at 115200 baud.

{

camera.Dispose ();

camera = new LinkSpriteJpegColorCamera

(LinkSpriteJpegColorCamera.BaudRate.Baud_115200);

We then set the camera compression rate as follows:

}finally

{

camera.SetCompressionRatio(currentCompressionRatio);

}

If this fails, we log the error to the event log and light our error LED to inform the end user that there is a failure:

}catch(Exception ex)

{

Log.Exception(ex);

errorLed.High();

camera = null;

}

In this chapter, we presented most of the projects that will be discussed in this book, together with circuit diagrams that show how to connect our hardware components. We also introduced development using C# for Raspberry Pi and presented the basic project structure. Several Clayster libraries were also introduced that help us with common programming tasks such as communication, interoperability, scripting, event logging, interfacing GPIO, and data persistence.

In the next chapter, we will introduce our first communication protocol for the IoT: The Hypertext Transfer Protocol (HTTP).

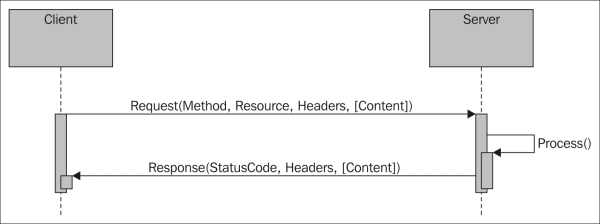

Now that we have a definition for Internet of Things, where do we start? It is safe to assume that most people that use a computer today have had an experience of Hypertext Transfer Protocol (HTTP), perhaps without even knowing it. When they "surf the Web", what they do is they navigate between pages using a browser that communicates with the server using HTTP. Some even go so far as identifying the Internet with the Web when they say they "go on the Internet" or "search the Internet".