Storage 101: common concepts in the IT enterprise storage

Almost everybody in IT understands the basics of networking; however, when we start talking about storage networks, things get a bit more confusing, and this is where I would like to help.

This article explains the most common concepts in the IT enterprise storage space, as nowadays we constantly hear terms such as virtualization, storage, SAN, NAS, RAID, virtualized storage, data deduplication, zoning, etc.

The final section collects recommended best practices for the storage space; it is a living section that I will extend as I find more practices worth recommending.

Storage Cabinets / Storage Arrays

A Storage Cabinet or Storage Array is a hardware appliance that houses a large number of hard disks and a storage controller able to build disk groups and present several disks as one, offering advantages such as better performance, protection against data loss, and tolerance of hardware failures.
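To make "present several disks as one" with data loss protection concrete, here is a minimal Python sketch of single-parity striping, the idea behind RAID 5. It is a toy under stated assumptions: each "disk" holds one equal-length block of a stripe, and the function names are hypothetical; real controllers add parity rotation, caching and much more.

```python
from functools import reduce
from typing import Optional

def xor_blocks(blocks: list[bytes]) -> bytes:
    """XOR equal-length blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

def make_stripe(data_blocks: list[bytes]) -> list[bytes]:
    """Return the data blocks plus one parity block (one block per disk)."""
    return data_blocks + [xor_blocks(data_blocks)]

def rebuild(stripe: list[Optional[bytes]]) -> list[bytes]:
    """Recreate the single missing block (marked None) from the survivors."""
    missing = [i for i, blk in enumerate(stripe) if blk is None]
    if len(missing) != 1:
        raise ValueError("single parity can rebuild exactly one lost block")
    survivors = [blk for blk in stripe if blk is not None]
    repaired = list(stripe)
    repaired[missing[0]] = xor_blocks(survivors)  # XOR of the rest restores it
    return repaired

if __name__ == "__main__":
    stripe = make_stripe([b"DISK", b"ARRY", b"DEMO"])  # 3 data disks + parity
    damaged = list(stripe)
    damaged[1] = None                                  # simulate a failed disk
    print(rebuild(damaged)[1])
```

Because parity is the XOR of all data blocks, XOR-ing the surviving blocks recovers whichever single block is lost, data or parity alike; losing two disks at once defeats single parity.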

The term "storage cabinet" is rather dated nowadays, as storage appliances have evolved considerably and changed names in the process.

Their modern variants are known as enterprise storage systems, unified storage systems, virtualized storage systems, etc.

The first storage cabinets supported RAID groups and connected directly to servers via SCSI or similar connections (ESCON, FICON, SSA, InfiniBand).

SAN

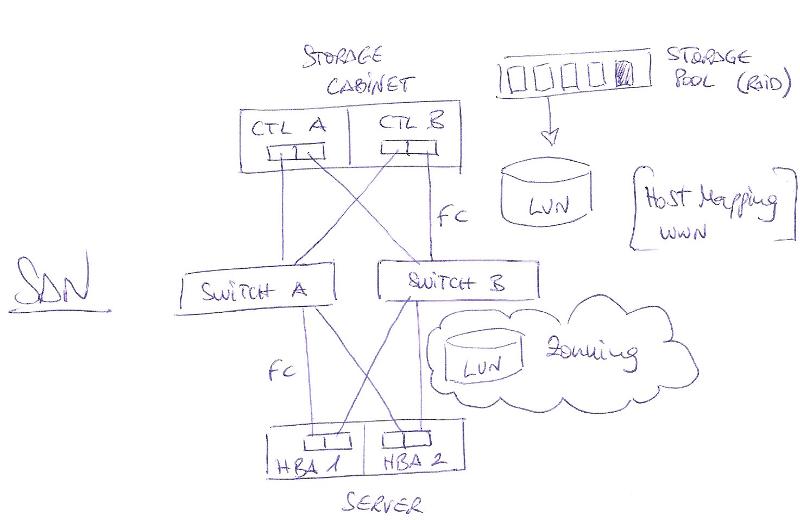

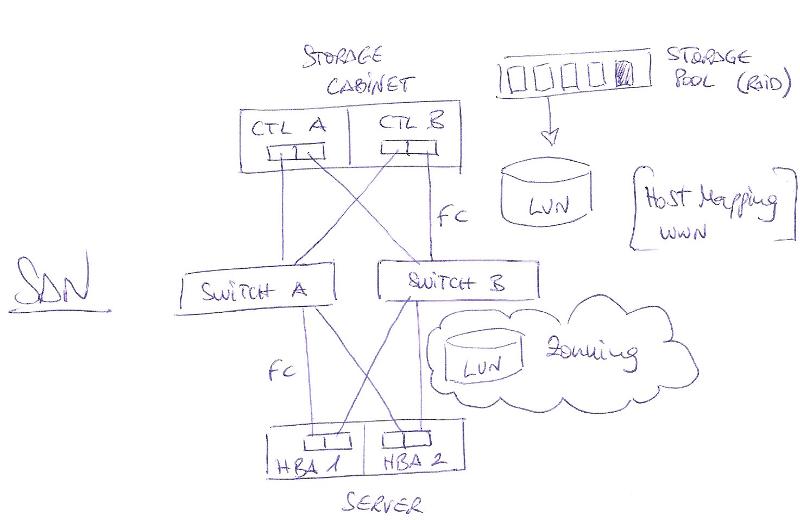

In its most basic setup, a SAN (Storage Area Network) is a storage cabinet with Fibre Channel adapters or HBAs (Host Bus Adapters) that support FC connections.

A SAN can be connected directly to a few servers; however, the most common setup is to connect the SAN cabinet to a SAN fibre switch and connect that switch to many servers, forming a fabric.

The SAN cabinet runs a purpose-made embedded OS (which can be based on Linux, AIX, Windows, etc.) on 2 storage controllers working as an active-passive cluster. Each storage controller has at least 2 HBA ports, giving a minimum of 4 FC connections for multipath failover.
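A simplified Python model of active-passive multipath failover over those 4 FC connections. The `Path` class and the port labels (`A0`, `B1`, ...) are hypothetical; real multipath drivers (for example Linux dm-multipath with ALUA) use their own path-grouping and priority policies.

```python
from dataclasses import dataclass

@dataclass
class Path:
    controller: str    # storage controller the path ends on ("A" or "B")
    port: str          # hypothetical FC port label on that controller
    alive: bool = True

def select_path(paths: list[Path], active_controller: str) -> Path:
    """Prefer a live path to the active controller, else fail over."""
    for p in paths:
        if p.alive and p.controller == active_controller:
            return p
    # No live path to the active controller left: use the passive one.
    for p in paths:
        if p.alive:
            return p
    raise RuntimeError("all paths are down")

if __name__ == "__main__":
    # 2 controllers x 2 ports each = 4 FC connections
    paths = [Path("A", "A0"), Path("A", "A1"), Path("B", "B0"), Path("B", "B1")]
    paths[0].alive = False
    print(select_path(paths, "A").port)   # still served by controller A
    paths[1].alive = False
    print(select_path(paths, "A").port)   # failed over to controller B
```

Losing one port only moves I/O to the other port on the same controller; only when every path to the active controller is gone does the sketch fail over to the passive controller.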

SAN Fabric

A SAN Fabric or Switched Fabric is the network formed by storage cabinets, switches and servers interconnected by fibre cables.

A SAN Fabric is built with a minimum of 1 SAN cabinet (the storage host) connected to 1 SAN fibre switch, with several servers (the storage clients) connected to that fibre switch.

If we connect servers directly to a SAN cabinet, its 4 FC ports only allow 2 servers with dual-path multipathing, or 4 servers with a single connection each.

If we connect servers to the SAN using the recommended switched setup, we can connect as many servers as our switch ports and licenses allow.

FC Switch Zoning

Once the cabling is done, the switch zoning must be configured to create zones, each containing 1 client adapter and 2 host adapters. A client adapter (an HBA port on a server) must be able to see 2 host ports on the cabinet through 1 SAN switch: it has 1 connection to the switch, but 2 different paths, one to each of the cabinet's storage controllers.

Since server HBAs have at least 2 ports, each connected to a different SAN switch, every server has 2 connections with 2 paths each, giving 4 paths in total under multipath.
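The path arithmetic above can be checked with a short Python sketch. The HBA, switch and controller port names are hypothetical; the model assumes each HBA port is cabled to its own switch and zoned to one port on each storage controller, as described in this section.

```python
# Hypothetical topology: 2 HBA ports, 2 switches, 2 controllers with 2 ports each.
HBA_TO_SWITCH = {"hba_p0": "switch1", "hba_p1": "switch2"}
ZONED_TARGETS = {
    "switch1": ["ctrlA_p0", "ctrlB_p0"],   # one port on each controller
    "switch2": ["ctrlA_p1", "ctrlB_p1"],
}

def enumerate_paths(hba_to_switch: dict[str, str],
                    zoned_targets: dict[str, list[str]]) -> list[tuple[str, str, str]]:
    """List every (HBA port, switch, storage port) path allowed by the zoning."""
    return [
        (hba, switch, target)
        for hba, switch in hba_to_switch.items()
        for target in zoned_targets[switch]
    ]

if __name__ == "__main__":
    for path in enumerate_paths(HBA_TO_SWITCH, ZONED_TARGETS):
        print(" -> ".join(path))
```

Each HBA port reaches both controllers through its own switch, so a single switch, cable or controller failure still leaves at least one working path.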

There are 2 kinds of zoning (formerly called hard and soft zoning, respectively): Port-based zoning and WWN-based zoning.

Port-based zoning is tied to fixed switch ports: if a cable or HBA is replaced, it must always be reconnected to the same switch port.

The advantage of this zoning is that it is quick to implement (you don't need to know any WWNs), and any technician can replace a faulty HBA or fibre cable as long as the replacement is plugged into the same ports as before.

The disadvantage of this zoning is that if a switch port fails and another port must be used, a storage admin must update the zone, adding the new port and removing the old one.

WWN-based zoning is based on the WWNs (World Wide Names) of the device ports, i.e. the server HBA ports and the storage ports (WWNs are the Fibre Channel equivalent of a NIC's MAC address).

Each WWN is given an easy-to-handle alias, and zones are defined by grouping aliases together.
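As an illustration of alias-based, single-initiator WWN zoning, this Python sketch generates Brocade Fabric OS commands (`alicreate`, `zonecreate`, `cfgcreate`, `cfgsave`, `cfgenable`). The alias names, WWPNs and configuration name are hypothetical, and the command syntax should be checked against the Fabric OS Command Reference for your version before use.

```python
import re

# WWPN format: 8 colon-separated hex bytes, e.g. 10:00:00:00:c9:12:34:56
WWN_RE = re.compile(r"^([0-9a-f]{2}:){7}[0-9a-f]{2}$")

# Hypothetical aliases and WWPNs: one server HBA port and one port per controller.
ALIASES = {
    "srv01_hba0": "10:00:00:00:c9:12:34:56",
    "stg_ctrlA_p0": "50:06:01:60:3b:a0:11:22",
    "stg_ctrlB_p0": "50:06:01:68:3b:a0:11:22",
}
# Single-initiator zone: 1 client adapter + the 2 storage ports it must see.
ZONES = {"z_srv01_hba0": ["srv01_hba0", "stg_ctrlA_p0", "stg_ctrlB_p0"]}

def brocade_zoning_commands(aliases: dict[str, str],
                            zones: dict[str, list[str]],
                            cfg_name: str) -> list[str]:
    """Return CLI lines that create aliases, zones and a zone configuration."""
    cmds = []
    for name, wwn in aliases.items():
        wwn = wwn.lower()
        if not WWN_RE.match(wwn):
            raise ValueError(f"invalid WWN for alias {name}: {wwn}")
        cmds.append(f'alicreate "{name}", "{wwn}"')
    for zone, members in zones.items():
        cmds.append(f'zonecreate "{zone}", "{"; ".join(members)}"')
    cmds.append(f'cfgcreate "{cfg_name}", "{"; ".join(zones)}"')
    cmds.append("cfgsave")
    cmds.append(f'cfgenable "{cfg_name}"')
    return cmds

if __name__ == "__main__":
    for line in brocade_zoning_commands(ALIASES, ZONES, "fabricA_cfg"):
        print(line)
```

Generating the commands from one table of aliases keeps the WWNs in a single place, which is where an admin would update them after an HBA replacement.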

The advantage of this zoning is that cables can be plugged into any port on the switch, and that it is the best practice currently recommended by the switch manufacturer Brocade.

The disadvantage of this zoning is that when a faulty HBA is replaced, the storage admin must update the alias to the new HBA's WWN.
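The trade-off between the two zoning types can be modelled in a few lines of Python. This is a toy model, not how a switch actually enforces zoning: a device login is identified by its WWPN plus the switch domain and port it is plugged into, and all WWPNs and port numbers are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Login:
    wwpn: str    # WWN of the device port that logged into the fabric
    domain: int  # switch domain ID
    port: int    # switch port the cable is plugged into

def in_port_zone(login: Login, members: set[tuple[int, int]]) -> bool:
    """Port zoning: membership follows the (domain, port) location."""
    return (login.domain, login.port) in members

def in_wwn_zone(login: Login, members: set[str]) -> bool:
    """WWN zoning: membership follows the device's WWPN."""
    return login.wwpn in members

OLD_HBA = "10:00:00:00:c9:12:34:56"   # hypothetical WWPNs
NEW_HBA = "10:00:00:00:c9:ab:cd:ef"
PORT_ZONE = {(1, 4)}
WWN_ZONE = {OLD_HBA}

if __name__ == "__main__":
    cases = {
        "original": Login(OLD_HBA, 1, 4),
        "cable moved to port 9": Login(OLD_HBA, 1, 9),
        "HBA replaced, same port": Login(NEW_HBA, 1, 4),
    }
    for label, login in cases.items():
        print(label, in_port_zone(login, PORT_ZONE), in_wwn_zone(login, WWN_ZONE))
```

Moving a cable breaks the port zone but not the WWN zone; replacing the HBA does the opposite, which is exactly the alias update described above.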

NAS

A NAS (Network Attached Storage) is a storage cabinet with Ethernet NICs (or converged FCoE adapters) that support TCP/IP and therefore CIFS, NFS or iSCSI connections.

A NAS can be self-built: it is just a matter of building a server with a few disks and using its OS to serve logical volumes via CIFS, NFS or iSCSI.

For this you can use Windows Server 2008 or 2012, which include a storage role to serve disks over NFS or iSCSI, or one of many open-source options such as FreeNAS, NAS4Free (both FreeBSD-based) or Openfiler (Linux-based).

The Linux distro NanoNAS can boot from a CD or USB stick with a really small footprint and runs in memory as a 4MB RAM disk, making it ideal for small NAS appliances.

LUN

LUN stands for Logical Unit Number, and it is the smallest unit of storage that a storage host assigns to a storage client.

In a storage cabinet, once we have created a storage group (or storage set) from the cabinet's disks, we can create virtual disks on it and assign them to a server as LUNs. The server sees each LUN as a single disk.

Expanding or shrinking a LUN is usually as easy as resizing it with the storage cabinet's management software and then rescanning storage from the server's OS (when shrinking, the filesystem and volume on the server must be shrunk first).
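On Linux, one common way to make the server pick up a resized LUN is to write `1` to the SCSI device's `rescan` attribute in sysfs. The Python sketch below assumes a Linux host, a SCSI-backed block device name such as `sdb` (hypothetical), and root privileges when `dry_run` is off; multipathed LUNs need each underlying path device rescanned, and the filesystem must still be grown separately.

```python
from pathlib import Path

def rescan_device(dev: str, sysfs_root: str = "/sys", dry_run: bool = True) -> Path:
    """Trigger a SCSI rescan of block device `dev` (e.g. 'sdb') via sysfs.

    With dry_run=True (the default) nothing is written; the attribute path is
    only returned, so the sketch can be tried safely on any machine.
    """
    if not dev or "/" in dev:
        raise ValueError("pass a kernel device name such as 'sdb', not a path")
    target = Path(sysfs_root) / "block" / dev / "device" / "rescan"
    if not dry_run:
        target.write_text("1")   # needs root; asks the kernel to re-read the device
    return target

if __name__ == "__main__":
    print(rescan_device("sdb"))
```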

If the cabinet does not support LUN resizing, or the server OS does not support storage rescans, a new volume must be created, the data moved to it, and the old volume deleted.

Hybrid/Unified Storage

To unify storage and provide the best of both worlds, storage vendors offer Hybrid or Unified Storage cabinets, which serve storage LUNs over Fibre Channel (SAN) as well as CIFS, NFS or iSCSI (NAS).

Technically they are SANs with additional storage controllers that also serve storage as a NAS.

Both SAN and NAS functionality are provided, enabling storage virtualization as well as the virtualization and consolidation of file servers (some models can even add software features such as backup and antivirus).

Examples are: EMC VNX/VNXe, Oracle ZFS Storage Appliance, IBM Storwize V7000, Hitachi Unified Storage 100.

All Flash Arrays

All-Flash arrays are the latest generation of storage arrays: they use only SSDs and are tuned for the extreme I/O rates these drives deliver (they offer the highest IOPS on the market).

Technically they are storage arrays using SSDs instead of HDDs; however, as SSDs have different requirements than HDDs (wear leveling, high throughput), they may use modified RAID schemes and special cache implementations.

Examples are: Oracle FS1, EMC XtremIO, IBM FlashSystem, Pure Storage FlashArray.

Storage Virtualization

by Hardware

Some storage vendors offer Storage Virtualizers, which act as a virtualization layer on top of many different storage cabinets, making it possible to connect storage from EMC, HP, IBM, NetApp, Oracle, etc. and serve LUNs from all of them as if they were a single storage appliance.

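In effect, storage virtualization is to storage devices what a storage cabinet is to hard disks: a means to put a layer on top of various storage devices to provide added resiliency, capacity, performance, scalability, consolidation and improved features.

Examples are: IBM SAN Volume Controller (IBM SVC), EMC VPLEX, Hitachi Virtual Storage, NetApp FlexVol.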
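A minimal Python sketch of the core idea: a virtualization layer maps each block of one virtual LUN onto LUNs living on different back-end arrays. It assumes simple concatenation of extents; the array names are hypothetical, and real virtualizers add striping, caching, mirroring and online migration on top of this mapping.

```python
from bisect import bisect_right
from dataclasses import dataclass

@dataclass(frozen=True)
class Extent:
    array: str    # hypothetical back-end array name
    lun: int      # LUN number on that array
    blocks: int   # size of the extent in blocks

class VirtualVolume:
    """Concatenate back-end LUN extents into one virtual LUN."""

    def __init__(self, extents: list[Extent]) -> None:
        self.extents = list(extents)
        self.starts: list[int] = []   # first virtual block of each extent
        offset = 0
        for ext in self.extents:
            self.starts.append(offset)
            offset += ext.blocks
        self.size = offset

    def locate(self, lba: int) -> tuple[str, int, int]:
        """Map a virtual block address to (array, LUN, block on that LUN)."""
        if not 0 <= lba < self.size:
            raise IndexError("block address outside the virtual volume")
        i = bisect_right(self.starts, lba) - 1   # extent containing lba
        ext = self.extents[i]
        return ext.array, ext.lun, lba - self.starts[i]

if __name__ == "__main__":
    vol = VirtualVolume([Extent("array_emc", 3, 1000), Extent("array_ibm", 7, 500)])
    print(vol.size, vol.locate(999), vol.locate(1000))
```

The server only ever sees one 1500-block LUN; which vendor's array actually holds a given block is a detail of the mapping table.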

by Software

Software-based storage virtualization, or Software Defined Storage (SDS), products virtualize storage from many sources and make it act as one. They usually pool the storage found on many servers as distributed storage, making good use of the space that would otherwise sit unused on stand-alone servers.

Examples are VMware vSAN, DataCore SANsymphony, EMC ViPR.

vSAN - VMware vSAN

VMware's software-defined storage virtualizer, vSAN, ships with vSphere ESXi as a layer that provides storage virtualization to the ESXi hypervisor.

With vSAN, servers with a good number of drive slots and multicore processors can form a very capable SAN built on VMware.

vSAN requires a minimum of 2 server nodes (3 recommended; a 2-node cluster also needs a witness host), each with at least 1 SSD and 1 HDD, and it can use many SSDs and/or HDDs to deliver a high-capacity, high-throughput storage product, removing the need for hardware-based storage systems.

Recent releases have added advanced storage features such as stretched clusters, deduplication, compression, all-flash configurations, and different RAID protection levels.
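To put vSAN's RAID protection levels into numbers, this Python sketch encodes the usual storage-policy rules as I understand them: RAID-1 mirroring that tolerates n failures (FTT=n) needs 2n+1 hosts (a 2-node cluster counts its witness as the third), RAID-5 erasure coding needs 4 hosts and RAID-6 needs 6, both all-flash only. Treat it as an approximation and check VMware's documentation for your release.

```python
def vsan_policy(method: str, ftt: int) -> tuple[int, float]:
    """Return (minimum hosts or fault domains, raw GB consumed per usable GB)."""
    if method == "RAID-1" and 0 <= ftt <= 3:
        return 2 * ftt + 1, float(ftt + 1)   # ftt+1 full mirror copies
    if method == "RAID-5" and ftt == 1:
        return 4, 4 / 3                      # 3 data + 1 parity
    if method == "RAID-6" and ftt == 2:
        return 6, 6 / 4                      # 4 data + 2 parity
    raise ValueError(f"unsupported policy: {method} with FTT={ftt}")

def raw_capacity_gb(usable_gb: float, method: str, ftt: int) -> float:
    """Raw vSAN capacity consumed by `usable_gb` of VM data under a policy."""
    return usable_gb * vsan_policy(method, ftt)[1]

if __name__ == "__main__":
    for method, ftt in [("RAID-1", 1), ("RAID-5", 1), ("RAID-1", 2), ("RAID-6", 2)]:
        hosts, _ = vsan_policy(method, ftt)
        print(f"{method} FTT={ftt}: at least {hosts} hosts, "
              f"1000 GB usable needs {raw_capacity_gb(1000, method, ftt):.0f} GB raw")
```

Mirroring needs fewer hosts but doubles or triples raw capacity; erasure coding saves capacity at the cost of more hosts, which is one reason to start with more than the minimum number of nodes.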

It is a product that VMware keeps upgrading and enhancing, and it is simple to manage, update and upgrade.

vSAN is somewhat cheaper than a traditional SAN with its fabric and fibre switches, especially when costed over a 4-year period including support and hardware maintenance.

It is also a good option for reliability and speed: an FC SAN usually has 1 storage cabinet with its 2 storage controllers, so achieving maximum reliability requires 2 storage cabinets and 2 FC switches, which is quite expensive.

vSAN scales from 2 nodes upwards, but a good, reliable setup starts with 3 nodes, adding further nodes as more space and resiliency are needed.

VSAN - Virtual SAN

Virtual SAN technology lets you create virtual fibre fabrics over physical fibre fabrics, much like creating VLANs over an Ethernet LAN.

It offers the same advantages as Ethernet VLANs: traffic isolation, redundancy, ease of administration, and better use of a single switch with higher scalability.
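A toy Python model of the isolation a VSAN provides: every switch port belongs to exactly one VSAN, ports not explicitly assigned stay in the default VSAN 1, and devices can only see each other inside the same VSAN (Inter-VSAN Routing is not modelled). Port names like `fc1/1` are hypothetical; the 1 to 4094 ID range matches Cisco MDS VSAN numbering.

```python
DEFAULT_VSAN = 1  # ports not assigned elsewhere stay in VSAN 1

class FabricSwitch:
    """Toy model of one physical switch split into several VSANs."""

    def __init__(self) -> None:
        self.port_vsan: dict[str, int] = {}

    def assign(self, port: str, vsan_id: int) -> None:
        if not 1 <= vsan_id <= 4094:
            raise ValueError("VSAN IDs range from 1 to 4094")
        self.port_vsan[port] = vsan_id

    def vsan_of(self, port: str) -> int:
        return self.port_vsan.get(port, DEFAULT_VSAN)

    def can_communicate(self, a: str, b: str) -> bool:
        """Traffic only flows between ports in the same VSAN."""
        return self.vsan_of(a) == self.vsan_of(b)

if __name__ == "__main__":
    sw = FabricSwitch()
    sw.assign("fc1/1", 10)   # e.g. production servers and storage
    sw.assign("fc1/2", 10)
    sw.assign("fc1/3", 20)   # e.g. a separate test environment
    print(sw.can_communicate("fc1/1", "fc1/2"), sw.can_communicate("fc1/1", "fc1/3"))
```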

VSAN is a technology developed by Cisco and is now used as a storage standard by various hardware vendors.

vSAN vs VSAN

VSAN is a software layer on top of existing SAN fibre switches that isolates SAN traffic and splits one physical switch into several virtual switches.

VSAN was defined by Cisco and modelled on Ethernet VLAN technology, but applied to storage networking.

VMware's vSAN is a specific storage product that creates a local SAN from ESXi nodes with SSDs and HDDs, replicating storage between its nodes to build a virtual implementation of a storage area network.

It is easy to confuse the two; usually VMware's product is spelled vSAN and the other VSAN.

The truth is that both are "virtual" SANs, but one is only for use with VMware and the other is for Fibre Channel storage fabrics, and they rely on very different concepts and architectures.

vSAN is better targeted at small and medium shops, as it is competitive on price and performance and has an easier learning curve than most traditional SANs, while still scaling as big as SAN storage cabinets. The downside is that it is specific to the VMware vSphere world, whereas VSAN applies across the whole storage spectrum.

Cloud Storage

Cloud Storage is storage which is accessible through the internet.

Internally it might be on a SAN, a NAS, etc.; it doesn't really matter. The end result is that we can reach the storage from any computer connected to the internet, as long as we use software that lets us use the remote storage over the internet as if it were a local disk.

Personal cloud storage provider examples are: Google Drive, Microsoft's OneDrive, Dropbox.

Enterprise cloud storage provider examples are: Amazon S3, EMC Hybrid Cloud, VMware vCloud Air, IBM SmartCloud.

In practice, the software transparently transfers files back and forth between the remote storage and our computer or server over the internet.

Its main benefits are that we are largely protected from local disasters, as it does not depend on our own hardware, and that we can access it from multiple locations.

Its disadvantages are that a good internet connection is needed and that you must pay a monthly fee to your cloud storage provider.

However, it is becoming a very good choice for small businesses, as they save on technical equipment, insurance, electricity, cooling, maintenance contracts, backup software and hardware, and specialized technicians.

Deduplication

A storage technology that reduces the data saved on disk or tape by eliminating redundant data. An algorithm detects duplicate files (or duplicate blocks, in block-level deduplication), saves 1 copy, and replaces the others with stubs that point to the saved copy.

Deduplication can be done on the fly (inline) or in batch (post-process). Some storage systems run a batch process once or twice a day to deduplicate data across the whole storage array.
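The file-level deduplication described above can be sketched in Python by keying each file's content on a SHA-256 hash. This is a toy under stated assumptions: real products usually deduplicate fixed- or variable-size blocks rather than whole files, and may compare data byte for byte before trusting a hash match.

```python
import hashlib

def deduplicate(files: dict[str, bytes]) -> tuple[dict[str, bytes], dict[str, str]]:
    """Keep one copy per unique content and turn every file into a stub.

    Returns (store, stubs): `store` maps a SHA-256 digest to the single saved
    copy, `stubs` maps each file name to the digest it points to.
    """
    store: dict[str, bytes] = {}
    stubs: dict[str, str] = {}
    for name, data in files.items():
        digest = hashlib.sha256(data).hexdigest()
        store.setdefault(digest, data)   # only the first copy is stored
        stubs[name] = digest             # every file becomes a pointer
    return store, stubs

if __name__ == "__main__":
    files = {"a.doc": b"report", "b.doc": b"report", "c.jpg": b"other"}
    store, stubs = deduplicate(files)
    written = sum(len(d) for d in files.values())
    stored = sum(len(d) for d in store.values())
    print(f"{written} bytes written, {stored} bytes stored")
```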

Rehydration

Rehydration is the reconstruction of storage that has been deduplicated.

Basically, it replaces the pointers or file stubs created by the deduplication process with the original data, expanding the "thinned" storage back to its original size.
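Rehydration is the inverse step. In this Python sketch, `STORE` holds the single saved copy of each unique content (keyed by hypothetical labels `d1`, `d2` standing in for content hashes) and `STUBS` maps each file name to the copy it points to; rehydrating returns every file with its full data, so the logical size grows back from the deduplicated size.

```python
# Hypothetical deduplicated state: one saved copy per unique content
# ("d1", "d2" stand in for content hashes) and one stub per file.
STORE = {"d1": b"report", "d2": b"other"}
STUBS = {"a.doc": "d1", "b.doc": "d1", "c.jpg": "d2"}

def rehydrate(store: dict[str, bytes], stubs: dict[str, str]) -> dict[str, bytes]:
    """Replace every stub with the full content it points to."""
    return {name: store[key] for name, key in stubs.items()}

def total_size(files: dict[str, bytes]) -> int:
    """Sum of the sizes of all stored contents."""
    return sum(len(data) for data in files.values())

if __name__ == "__main__":
    files = rehydrate(STORE, STUBS)
    print(total_size(STORE), "bytes before,", total_size(files), "bytes after")
```

Rehydration is why restoring deduplicated data to a non-deduplicating target needs the full original capacity, not the deduplicated one.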

It is quite a graphic name for the process, when you think about it.

Thin Provisioning

Thin Provisioning is a virtualization technology that shares a storage pool between several LUNs or hosts, making them appear to have more space than is actually available.

It is a space-saving technology that reuses the unused space usually found on most server disks.

This technology has also evolved: the first versions used a fixed oversubscription ratio, while newer versions use more complex algorithms that take feedback from real disk usage monitoring into account.

But all this is a lot easier explained with a quick example:

We have a shared pool of 1TB and create 4 thin LUNs of 500GB each on it, each assigned to a different server to install its OS.

--In effect we are giving 500GB of virtual space to each OS, but since disks are rarely used at 100% capacity, we can have 4 servers with 500GB instead of 2.

--Now comes the catch: if one of these 4 servers goes AWOL and fills up its 500GB, only 500GB of real space is left for the other 3 servers, which have been promised 1500GB between them, so the pool can run out of real capacity.

--If the pool runs out of real space, the storage appliance halts read-write access to the shared pool, in most cases causing most of the servers to fail (in our example, all 4 servers).

--The trick here is not to oversubscribe too much: for disk storage the oversubscription ratio shouldn't exceed 1.5, so 1TB shared between 4 servers should be 4 LUNs of 375GB each (1000 * 1.5 / 4), not 500GB, which is very likely to cause mayhem in the near future. To be on the safe side, use a 1.25 ratio: 1000 * 1.25 / 4 = 312.5GB per LUN, which rounds down comfortably to 4 LUNs of 300GB.
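The sizing rule above is simple arithmetic; this Python sketch reproduces the worked example (a 1TB pool, written as 1000GB as in the text, shared by 4 thin LUNs) and shows that the original 500GB LUNs correspond to a 2.0 ratio. The function names are hypothetical.

```python
def thin_lun_size(pool_gb: float, ratio: float, luns: int) -> float:
    """Size of each thin LUN for a target oversubscription ratio."""
    return pool_gb * ratio / luns

def oversubscription(pool_gb: float, lun_sizes_gb: list[float]) -> float:
    """Virtual space promised to the hosts divided by the real pool size."""
    return sum(lun_sizes_gb) / pool_gb

def free_real_space(pool_gb: float, written_gb: list[float]) -> float:
    """Real space left in the pool; at or below 0 the pool is exhausted."""
    return pool_gb - sum(written_gb)

if __name__ == "__main__":
    print(thin_lun_size(1000, 1.5, 4))          # 375.0
    print(thin_lun_size(1000, 1.25, 4))         # 312.5
    print(oversubscription(1000, [500] * 4))    # 2.0
    print(oversubscription(1000, [300] * 4))    # 1.2
    # One runaway server fills its 500GB; the other 3 have written 150GB each.
    print(free_real_space(1000, [500, 150, 150, 150]))  # 50
```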

Recommended SAN & Storage Best Practices:

1.- SAN Fibre networks are faster than iSCSI and NFS at the same link speed; they have better bandwidth and throughput, and are more resilient.

2.- WWN zoning is more feature rich than Port zoning.

3.- Zones must be defined with 1 client adapter per zone (single-initiator zoning).

4.- Switch configuration is quicker and less error-prone through CLI commands over a terminal connection than through the Web GUI, at the expense of a steeper learning curve.

5.- When using Thin Provisioning, it is imperative to have good monitoring alerts and to react to them quickly: if we run out of space because of overprovisioning, thin-provisioned LUNs become locked (read-only), and this can usually only be fixed by adding extra disks (if the cabinet is maxed out, we will need an expansion cabinet plus extra disks). So Thin Provisioning = ease of life and better space management, at the expense of a single point of failure.
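Best practice 5 relies on monitoring; a minimal Python sketch of threshold-based alerting on the real usage of a thin pool follows, with a naive linear estimate of when it will fill up. The 70% and 85% thresholds are illustrative assumptions, not vendor defaults; tune them to how fast your pool grows and how long it takes you to add disks.

```python
from typing import Optional

def pool_alert(used_gb: float, pool_gb: float,
               warn: float = 0.70, critical: float = 0.85) -> str:
    """Classify real usage of a thin pool (thresholds are illustrative)."""
    if pool_gb <= 0:
        raise ValueError("pool size must be positive")
    usage = used_gb / pool_gb
    if usage >= 1.0:
        return "FULL"        # thin LUNs are likely locked at this point
    if usage >= critical:
        return "CRITICAL"    # add disks or migrate data now
    if usage >= warn:
        return "WARNING"     # plan capacity before it becomes urgent
    return "OK"

def days_until_full(used_gb: float, pool_gb: float,
                    growth_gb_per_day: float) -> Optional[float]:
    """Naive linear projection; None if usage is not growing."""
    if growth_gb_per_day <= 0:
        return None
    return max(pool_gb - used_gb, 0) / growth_gb_per_day

if __name__ == "__main__":
    print(pool_alert(900, 1000), days_until_full(900, 1000, 20))
```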

Thank you for reading my article; feel free to leave feedback on the content or to suggest future work.

I look forward to hearing from you. - Carlos Ijalba (LinkedIn)
