Introduction to Avro to JSON

Avro is a row-based data serialization format that is widely used for storing serialized data. Because records are stored row by row, it is well suited to reading complete rows, and its binary encoding keeps the data compact. Each Avro file carries a schema in which the structure and types of the data are recorded. The schema is written in JSON, which is easy for people to read and is supported on virtually any system. Converting Avro to JSON is a direct mapping, so the resulting JSON has the same structure as the Avro data.

What is Avro to JSON?

Avro is a serialization mechanism designed to serialize and exchange large volumes of data between Hadoop projects. It serializes the data in a compact binary format, while the schema, written in JSON, describes the field names and data types. Avro data can be converted to JSON in various languages, such as Java and Python. In Java-based tooling such as Apache NiFi, the ‘ConvertRecord’ or ‘ConvertAvroToJSON’ processor performs the conversion; if the Avro file does not embed a schema, we have to supply one. Both parts can be converted: the Avro schema maps to a JSON schema, and the Avro data file maps to a JSON file. An Avro file can also be converted to a JSON file by loading it into a DataFrame and writing it out in JSON format. Avro files also contain markers that allow huge data sets to be split into subsets.

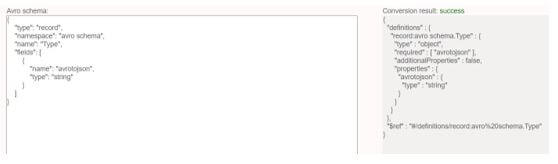

Schema

Avro is a schema-based serialization mechanism: it takes a schema as input, and it has its own standard for describing that schema. A schema states the type of the data, the namespace in which the record lives, the name of the record, and the fields of the record with their corresponding data types. An Avro schema is written as a JSON document, JSON being a data exchange format, and it can be a JSON string, a JSON object, or a JSON array.
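The three JSON forms a schema can take can be illustrated with the Avro Java library, called here from Scala. This is a minimal sketch that assumes the `org.apache.avro:avro` artifact is on the classpath; the object name `SchemaForms` and its members are hypothetical names chosen for illustration.

```scala
import org.apache.avro.Schema

object SchemaForms {
  // A fresh parser per schema, so named types from one parse do not leak into the next.
  private def parse(json: String): Schema = new Schema.Parser().parse(json)

  // A JSON string names an existing type.
  val asString: Schema = parse("\"string\"")

  // A JSON object defines a type through its attributes; here, a record.
  val asObject: Schema = parse(
    """{"type": "record", "namespace": "avroschema", "name": "Student",
      | "fields": [{"name": "Name", "type": "string"},
      |            {"name": "Class", "type": "int"}]}""".stripMargin)

  // A JSON array declares a union of the listed types.
  val asArray: Schema = parse("""["null", "string"]""")
}
```

Calling `getType` on each value shows which kind of schema was parsed, for example `SchemaForms.asArray.getType`.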

To read and write data objects, we first need to describe their format. For Avro, the schema is described in a ‘.avsc’ file; for JSON, the format is described in a JSON file.

Example:

Code:

{
  "type": "record",
  "namespace": "avroschema",
  "name": "Student",
  "fields": [
    {"name": "Name", "type": "string"},
    {"name": "Class", "type": "int"}
  ]
}

Output: JSON schema

In the above example, "type" describes the kind of schema (here a record), "namespace" describes the namespace in which the object resides, "name" gives the name of the record, and "fields" lists each field of the record with its name and data type.
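To make the direct mapping concrete, the following sketch renders one record that conforms to the Student schema as JSON, with one JSON key per schema field. It uses plain Scala only. The case class `Student`, the object `StudentJson`, and the field name `classNo` are hypothetical, and the escaping is deliberately minimal rather than a complete JSON encoder.

```scala
// Hypothetical in-memory counterpart of the "Student" schema.
final case class Student(name: String, classNo: Int)

object StudentJson {
  // Minimal escaping (assumption: only quotes, backslashes and newlines occur).
  private def escape(s: String): String =
    s.flatMap {
      case '"'  => "\\\""
      case '\\' => "\\\\"
      case '\n' => "\\n"
      case c    => c.toString
    }

  // One JSON key per schema field, in schema order: "Name" (string), "Class" (int).
  def toJson(s: Student): String =
    s"""{"Name":"${escape(s.name)}","Class":${s.classNo}}"""
}
```

For example, `StudentJson.toJson(Student("Asha", 7))` produces an object with the keys `Name` and `Class`, mirroring the field list of the schema.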

Files

Let us see how to convert an Avro file into a JSON file in Scala. We first read the Avro file into a DataFrame, and then write that DataFrame out as a JSON file. Avro is an open-source project that supports both data serialization and data exchange, and the two can be used together: with serialization, any program can efficiently serialize data into files. Avro is a compact data storage format that keeps both the data definition and the data in one file. Because the data definition is stored in JSON format, people can easily read and write it.

Avro files contain markers that help to split large data sets into subsets. Some data exchange services also use a code generator that interprets the data definition and generates the code needed to access the data.
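Because the file stores the data definition alongside the data, a reader needs no separate schema to turn the records into JSON. The sketch below uses the Avro Java library from Scala and assumes the `org.apache.avro:avro` artifact is on the classpath; the object name `AvroFileToJsonLines` and the method `readAsJson` are hypothetical.

```scala
import java.io.File
import org.apache.avro.file.DataFileReader
import org.apache.avro.generic.{GenericDatumReader, GenericRecord}

object AvroFileToJsonLines {
  // Returns one JSON string per record stored in the Avro file at `path`.
  def readAsJson(path: String): List[String] = {
    // The reader takes the schema from the file header, so none is passed in.
    val reader = new DataFileReader[GenericRecord](
      new File(path), new GenericDatumReader[GenericRecord]())
    try {
      val out = List.newBuilder[String]
      // GenericRecord.toString renders the record as JSON text.
      while (reader.hasNext) out += reader.next().toString
      out.result()
    } finally reader.close()
  }
}
```

This reads the whole file into memory, which is fine for a small sample but not for large data sets; for those, the DataFrame approach described in this section is the better fit.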

1. Read Avro File using Data Frame

Spark's DataFrame reader has no dedicated avro() function, hence we specify the ‘DataSource’ format as "avro" and use the load function to read the Avro file.

Code:

// The data source name is "avro".
val df = spark.read.format("avro")
  .load("src/main/resources/zipcodes.avro")
df.show()
df.printSchema()

If the data is partitioned, we can use the where() function to load a particular partition.

Code:

import org.apache.spark.sql.functions.col

spark.read
  .format("avro")
  .load("zipcodes_partition.avro")
  .where(col("Zipcode") === 19802)
  .show()

The spark.read.json("path") method reads a JSON file into a Spark DataFrame; it accepts the file path as its argument.

2. Convert File

The second step is to write the DataFrame out in JSON file format, which turns the Avro data into a JSON file.

We use the following statement for the conversion:

Code:

df.write.mode(SaveMode.Overwrite)
  .json("/tmp/json/zipcodes.json")

If the output path does not exist yet, we can omit the save mode and write the above as:

Code:

df.write
  .json("/tmp/json/zipcodes.json")

Example:

Code:

package com.sparkbyexamples.spark.dataframe

import org.apache.spark.sql.{SaveMode, SparkSession}

object AvroToJson extends App {

  // Local Spark session with a single worker thread
  val spark: SparkSession = SparkSession.builder()
    .master("local[1]")
    .appName("avrotojsonfile")
    .getOrCreate()

  // Only log errors
  spark.sparkContext.setLogLevel("ERROR")

  // Read the Avro file into a DataFrame
  val df = spark.read.format("avro")
    .load("src/main/resources/zipcodes.avro")
  df.show()
  df.printSchema()

  // Write the DataFrame out in JSON format, replacing any existing output
  df.write.mode(SaveMode.Overwrite)
    .json("/tmp/json/zipcodes.json")
}

In the above example, we convert an Avro file to JSON file format using a DataFrame. We first import the required classes and create a Spark session, then read the Avro file into a DataFrame and display its contents and schema, and in the last two lines we write the DataFrame out in JSON file format.
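One way to check the result of such a conversion is to read the JSON output back with spark.read.json and compare row counts with the Avro input. The sketch below assumes Spark with the spark-avro module on the classpath; the object name `VerifyJsonOutput` and the method `sameRowCount` are hypothetical, and matching row counts are only a basic sanity check, not proof that every value was preserved.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

object VerifyJsonOutput {
  // True when the Avro input and the JSON output contain the same number of rows.
  def sameRowCount(spark: SparkSession, avroPath: String, jsonPath: String): Boolean = {
    val avroDf: DataFrame = spark.read.format("avro").load(avroPath)
    val jsonDf: DataFrame = spark.read.json(jsonPath)
    avroDf.count() == jsonDf.count()
  }
}
```

With the paths from the example, the call would be `VerifyJsonOutput.sameRowCount(spark, "src/main/resources/zipcodes.avro", "/tmp/json/zipcodes.json")`.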

Conclusion

In this article, we have seen how Avro can be converted into a JSON schema and a JSON file. An Avro file can be converted into a JSON file by reading it into a DataFrame and writing that DataFrame out in JSON format. This article should help in understanding the concept.