Creating a Flutter audio player and recorder app


December 30, 2021 9 min read

Audio recording is now a widely used feature in modern apps. From apps that help users take notes during meetings or lectures to apps for learning a new language or creating podcasts, recording audio has become an ingrained facet of technological life.

An audio-playing feature is just as important. Found in music apps, podcasts, games, and notifications, it shapes how we interact with and use apps.

In this tutorial, we’ll look at how to add audio recording and playing features to a Flutter app so you can create your own audio-based modern apps.

Before continuing with the tutorial, ensure you have the following:

- Flutter installed

- Android Studio or Xcode installed

Creating and setting up a new Flutter app

To begin, let’s create a new Flutter app with the following command:

flutter create appname

We’ll use two packages in this tutorial: flutter_sound for audio recording and assets_audio_player for audio playing.

Open the newly created Flutter application in your preferred code editor and navigate to main.dart. You can remove the debug mode banner by setting debugShowCheckedModeBanner to false:

    return MaterialApp(
      debugShowCheckedModeBanner: false,
      title: 'Flutter Demo',
      theme: ThemeData(
        primarySwatch: Colors.blue,
      ),
      home: MyHomePage(title: 'Flutter Demo Home Page'),
    );

All of our code will be inside the _MyHomePageState class.

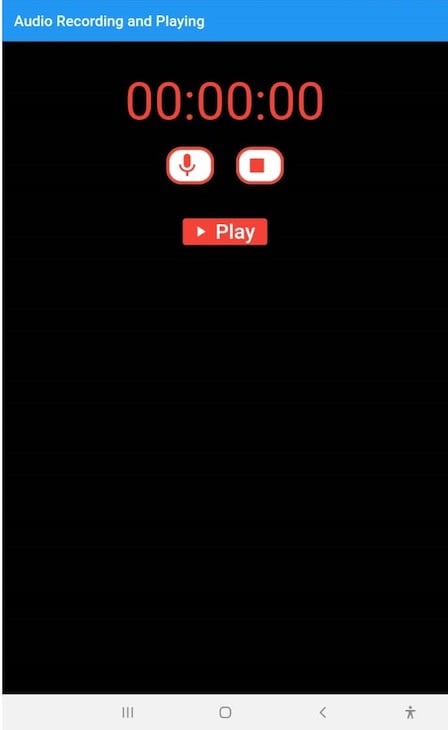

Inside its build method, let’s set the background color for our page to Colors.black87. This gives our page a black background with 87% opacity. We can also add a title for our AppBar:

    backgroundColor: Colors.black87,
    appBar: AppBar(title: Text('Audio Recording and Playing')),

Adding UI widgets to the Flutter audio app

Recorders usually have timers that run for as long as the audio records.

To add a timer feature to our app, let’s add a Container widget to the body of the app. This will have a Text widget as a child, which displays the recording timer. We’ll also give the timer text some styling in TextStyle:

    body: Center(
      child: Column(
        mainAxisAlignment: MainAxisAlignment.start,
        children: <Widget>[
          Container(
            child: Center(
              child: Text(
                _timerText,
                style: TextStyle(fontSize: 70, color: Colors.red),
              ),
            ),
          ),

The _timerText variable is a String field on the state class with an initial value of '00:00:00'. As we progress, we’ll create a function that writes the elapsed recording time into it.

Starting and stopping recording

Next, let’s create two buttons to start recording and stop recording. First, create a sized box to add some vertical space between the timer text and the two buttons. The buttons will be on the same row, so we’ll use a Row widget.

All the buttons we’ll use on this page will utilize Flutter’s ElevatedButton widget. However, each button will have its own unique icon, text, and color.

Since the two buttons we are about to create will be similar to each other, let’s create a widget that has all the properties common to both and add arguments to pass in their unique properties.

Let’s name the widget createElevatedButton; to use it for our start and stop buttons, we’ll call the widget and pass in the required features for that particular button:

    ElevatedButton createElevatedButton(
        {IconData icon, Color iconColor, VoidCallback onPressFunc}) {
      return ElevatedButton.icon(
        style: ElevatedButton.styleFrom(
          padding: EdgeInsets.all(6.0),
          side: BorderSide(
            color: Colors.red,
            width: 4.0,
          ),
          shape: RoundedRectangleBorder(
            borderRadius: BorderRadius.circular(20),
          ),
          primary: Colors.white,
          elevation: 9.0,
        ),
        // Runs whatever callback the caller passed in.
        onPressed: onPressFunc,
        icon: Icon(
          icon,
          color: iconColor,
          size: 38.0,
        ),
        // The buttons show only an icon, so the label is left empty.
        label: Text(''),
      );
    }

The three properties that this widget requires every time are the icon, the color of the icon, and the function that runs when the button is pressed.

Note that the widget has a padding of 6px on all sides with red borders that have a width of 4px. We also added a border radius of 20px. The primary color is white with an elevation of 9 for a box shadow.

Whatever function is passed as onPressFunc to the widget serves as its onPressed function. Whatever icon is passed to it will have a size of 38px and carry the color passed through the iconColor argument.

Now that the createElevatedButton widget is set, we can use it for our startRecording and stopRecording buttons.

In the row we created above, we can add our startRecording button to it by using the createElevatedButton widget, passing a mic icon to it, giving the icon a red color, and giving the widget an onPressed function named startRecording. We’ll create this function later.

Next, let’s add our stopRecording button by using the createElevatedButton widget, passing the stop icon to it, and giving it a red color and an onPressed function named stopRecording, which we’ll create later:

    Row(
      mainAxisAlignment: MainAxisAlignment.center,
      children: <Widget>[
        createElevatedButton(
          icon: Icons.mic,
          iconColor: Colors.red,
          onPressFunc: startRecording,
        ),
        SizedBox(
          width: 30,
        ),
        createElevatedButton(
          icon: Icons.stop,
          iconColor: Colors.red,
          onPressFunc: stopRecording,
        ),
      ],
    ),

Playing the recorded audio

Now that we have the buttons for starting and stopping recording, we need a button to play the recorded audio. First, let’s put some vertical space between the row we just created and the button we are about to create using a SizedBox widget with its height set to 20px.

This button will serve two functions: playing the recorded audio and stopping the audio. To toggle back and forth between these two functions, we need a boolean. We’ll name the boolean _playAudio and set it to false by default:

bool _playAudio = false;

It’s pretty straightforward; when the value is false, audio will not play, and when the value is true, the audio plays.

Continuing, let’s create an ElevatedButton with an elevation of 9 and a red background color and add an onPressed function to the button.

With the setState function, we can toggle back and forth between the two boolean values, so every time the button is pressed, setState flips the value and the button rebuilds:

    SizedBox(
      height: 20,
    ),
    ElevatedButton.icon(
      style: ElevatedButton.styleFrom(elevation: 9.0, primary: Colors.red),
      onPressed: () {
        // Flip the flag first, then act on its new value.
        setState(() {
          _playAudio = !_playAudio;
        });
        if (_playAudio) {
          playFunc();
        } else {
          stopPlayFunc();
        }
      },
      icon: _playAudio ? Icon(Icons.stop) : Icon(Icons.play_arrow),
      label: _playAudio
          ? Text(
              "Stop",
              style: TextStyle(
                fontSize: 28,
              ),
            )
          : Text(
              "Play",
              style: TextStyle(
                fontSize: 28,
              ),
            ),
    ),

If the value was false before the press, which means audio was not playing, it becomes true and the playFunc function executes. If the value was true, which means audio was playing when the button was pressed, it becomes false and the stopPlayFunc function executes; we’ll create these two functions below.

When audio plays, we want to display a stop icon on the button with the text "Stop". When the audio stops playing, we’ll display a play icon and the text "Play" on the button.
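The toggle can also be reasoned about without any widgets. The following is a minimal sketch in null-safe Dart; the PlaybackToggle class and its members are hypothetical names used only for illustration and are not part of the app’s code. It shows which function a press triggers and which label the button shows afterwards.

```dart
/// Hypothetical model of the play button's state, independent of Flutter.
class PlaybackToggle {
  /// Mirrors the _playAudio flag: false means nothing is playing.
  bool isPlaying = false;

  /// Label the button should show for the current state.
  String get label => isPlaying ? 'Stop' : 'Play';

  /// Flips the state and returns the name of the function the press triggers.
  String press() {
    isPlaying = !isPlaying;
    return isPlaying ? 'playFunc' : 'stopPlayFunc';
  }
}
```

Starting from the default state, the first press returns 'playFunc' and the label becomes "Stop"; the second press returns 'stopPlayFunc' and the label goes back to "Play".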

Installing packages for the Flutter audio app

Next, we must install the packages that will enable us to record and play audio in our app. Begin by navigating to the pubspec.yaml file and adding them under dependencies:

    dependencies:
      flutter_sound: ^8.1.9
      assets_audio_player: ^3.0.3+3

Now, we can go to our main.dart file and import the packages to use in our app:

    import 'package:flutter_sound/flutter_sound.dart';
    import 'package:assets_audio_player/assets_audio_player.dart';

To use them, we first declare a FlutterSoundRecorder, which we’ll instantiate during initialization, and create an AssetsAudioPlayer instance:

    FlutterSoundRecorder _recordingSession;
    final recordingPlayer = AssetsAudioPlayer();

To play a piece of audio, we need the path to the audio recorded, which is the location on a phone that stores the recorded audio. Let’s create a variable for that:

String pathToAudio;

Creating functions for the Flutter audio app

Initializing the app

To initialize our app upon loading, we can create a function called initializer:

    void initializer() async {
      pathToAudio = '/sdcard/Download/temp.wav';
      _recordingSession = FlutterSoundRecorder();
      await _recordingSession.openAudioSession(
          focus: AudioFocus.requestFocusAndStopOthers,
          category: SessionCategory.playAndRecord,
          mode: SessionMode.modeDefault,
          device: AudioDevice.speaker);
      await _recordingSession.setSubscriptionDuration(
          Duration(milliseconds: 10));
      await initializeDateFormatting();
      await Permission.microphone.request();
      await Permission.storage.request();
      await Permission.manageExternalStorage.request();
    }

Inside this function, we give the variable pathToAudio the path where the recording is saved and later played from. Note that this hard-coded path is specific to Android.

Next, we can create an instance of FlutterSoundRecorder and open an audio session with openAudioSession so our phone can start recording.

The focus, category, mode, and device parameters configure the session. With AudioFocus.requestFocusAndStopOthers, our app requests audio focus, which asks other apps that are playing sound to stop so that ours can record and play without interference.

setSubscriptionDuration sets how often the recorder reports its progress, here every 10 milliseconds. These progress events are what let us track and display how long we have been recording.

Next, the initializeDateFormatting function from the intl package helps us format our timer text. Finally, the Permission.microphone.request, Permission.storage.request, and Permission.manageExternalStorage.request calls, which come from the permission_handler package, ask the user for access to the phone’s microphone and external storage.

Finally, call the initializer method from your initState method:

    @override
    void initState() {
      super.initState();
      initializer();
    }

Granting permissions in Android phones

For Android phones, additional setup is required to grant these permissions to our app. Navigate to android/app/src/main/AndroidManifest.xml and add permissions for recording audio, reading files from the external storage, and saving files to the external storage.

To access the storage of phones with Android 10 or API level 29, we must set the value of requestLegacyExternalStorage to true:

    <uses-permission android:name="android.permission.RECORD_AUDIO" />
    <uses-permission android:name="android.permission.READ_EXTERNAL_STORAGE" />
    <uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />
    <application android:requestLegacyExternalStorage="true"

Next, go to your terminal and run the following to add the permission_handler package, which provides the Permission calls used in initializer:

flutter pub add permission_handler

Adding the startRecording() function

We can proceed to create the functions we added to our buttons; the first function is startRecording():

    // Declared on the state class so the listener stays active while recording.
    StreamSubscription _recorderSubscription;

    Future<void> startRecording() async {
      Directory directory = Directory(path.dirname(pathToAudio));
      if (!directory.existsSync()) {
        directory.createSync();
      }
      await _recordingSession.openAudioSession();
      await _recordingSession.startRecorder(
        toFile: pathToAudio,
        codec: Codec.pcm16WAV,
      );
      // Cancel any listener left over from an earlier recording.
      _recorderSubscription?.cancel();
      _recorderSubscription = _recordingSession.onProgress.listen((e) {
        // Treat the elapsed duration as a UTC timestamp so it can be formatted.
        var date = DateTime.fromMillisecondsSinceEpoch(
            e.duration.inMilliseconds,
            isUtc: true);
        var timeText = DateFormat('mm:ss:SS', 'en_GB').format(date);
        setState(() {
          _timerText = timeText.substring(0, 8);
        });
      });
    }

With Directory directory = Directory(path.dirname(pathToAudio)), we specify the directory that we want to save our recording to. Then, using an if statement, we can check if the directory exists. If it doesn’t, we can create it.

We then open an audio session with the openAudioSession function and start recording. Inside the startRecorder function, we specify the path to save the audio to and the codec that determines the format it is saved in.
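The directory check can be tried on its own with dart:io. The following is a minimal sketch in null-safe Dart; ensureParentDirectory is a hypothetical helper name, and passing recursive: true to createSync is an assumption that goes beyond the tutorial’s code, so that missing intermediate folders are created as well.

```dart
import 'dart:io';

/// Ensures the folder that will contain [filePath] exists and returns it.
///
/// Hypothetical helper for illustration; the tutorial performs the same
/// check inline inside startRecording.
Directory ensureParentDirectory(String filePath) {
  final parent = File(filePath).parent;
  if (!parent.existsSync()) {
    // recursive: true also creates any missing intermediate folders.
    parent.createSync(recursive: true);
  }
  return parent;
}
```

Calling ensureParentDirectory(pathToAudio) before starting the recorder would play the same role as the if statement above.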

Using a stream to monitor data

If we want to monitor what’s happening while data is recorded, we can use a stream. In this case, we use StreamSubscription to subscribe to events from our recording stream.

_recordingSession.onProgress.listen then listens while the audio recording is in progress. Each progress event carries the elapsed recording time, which we format as minutes, seconds, and fractions of a second and save in a variable named timeText.

We can then use the setState method to update the timer in our app. The subscription has to stay active for as long as the recording runs; once we no longer need to monitor the stream, we cancel it.
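The subscribe, update, and cancel cycle can be sketched in plain Dart without the recorder. The block below is a minimal, null-safe illustration under stated assumptions: formatElapsed and TimerText are hypothetical names, the progress stream is any Stream<Duration> rather than the recorder’s own stream, and the formatting is done by hand instead of with DateFormat.

```dart
import 'dart:async';

/// Formats [elapsed] as mm:ss:SS (minutes, seconds, hundredths of a second).
String formatElapsed(Duration elapsed) {
  String two(int n) => n.toString().padLeft(2, '0');
  final minutes = elapsed.inMinutes % 60;
  final seconds = elapsed.inSeconds % 60;
  final hundredths = (elapsed.inMilliseconds % 1000) ~/ 10;
  return '${two(minutes)}:${two(seconds)}:${two(hundredths)}';
}

/// Hypothetical helper that mirrors progress events into a timer string.
class TimerText {
  String value = '00:00:00';
  StreamSubscription<Duration>? _subscription;

  /// Starts listening, replacing any earlier subscription.
  void attach(Stream<Duration> progress) {
    _subscription?.cancel();
    _subscription = progress.listen((elapsed) {
      value = formatElapsed(elapsed);
    });
  }

  /// Stops listening; later events no longer change [value].
  void detach() {
    _subscription?.cancel();
    _subscription = null;
  }
}
```

The point of the sketch is the lifetime of the subscription: value only changes between attach and detach, so cancelling too early would freeze the timer.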

Adding the stopRecording function

Next, we’ll create the stopRecording function:

    Future<String> stopRecording() async {
      // Stop the recorder first, then release the audio session.
      final result = await _recordingSession.stopRecorder();
      await _recordingSession.closeAudioSession();
      return result;
    }

Inside this function, we first use the stopRecorder function to stop recording. Then, we use the closeAudioSession method to close the recording session and free up all of the phone’s resources that we’re using.

Adding the play function

Next, we’ll create the play function, playFunc:

    Future<void> playFunc() async {
      await recordingPlayer.open(
        Audio.file(pathToAudio),
        autoStart: true,
        showNotification: true,
      );
    }

We use the open function to start the audio player, providing it with the path to the audio, specifying that the audio should play automatically, and specifying that a notification appears at the top of the phone screen while audio is playing.

Adding the stopPlay function

Lastly, we’ll create the stopPlayFunc function, inside of which we call the stop method to stop the player:

    Future<void> stopPlayFunc() async {
      await recordingPlayer.stop();
    }

Conclusion

And with that, we have a finalized simple audio recorder and player application.

Below is the final code for everything we just built. Happy coding!

main.dart

Here is the full code for the main.dart file:

    import 'dart:async';
    import 'dart:io';
    import 'package:flutter/cupertino.dart';
    import 'package:flutter/material.dart';
    import 'package:flutter_sound/flutter_sound.dart';
    import 'package:intl/date_symbol_data_local.dart';
    import 'package:permission_handler/permission_handler.dart';
    import 'package:path/path.dart' as path;
    import 'package:assets_audio_player/assets_audio_player.dart';
    import 'package:intl/intl.dart' show DateFormat;

    void main() {
      runApp(MyApp());
    }

    class MyApp extends StatelessWidget {
      @override
      Widget build(BuildContext context) {
        return MaterialApp(
          debugShowCheckedModeBanner: false,
          title: 'Flutter Demo',
          theme: ThemeData(
            primarySwatch: Colors.blue,
          ),
          home: MyHomePage(title: 'Flutter Demo Home Page'),
        );
      }
    }

    class MyHomePage extends StatefulWidget {
      MyHomePage({Key key, this.title}) : super(key: key);

      final String title;

      @override
      _MyHomePageState createState() => _MyHomePageState();
    }

    class _MyHomePageState extends State<MyHomePage> {
      FlutterSoundRecorder _recordingSession;
      final recordingPlayer = AssetsAudioPlayer();
      String pathToAudio;
      bool _playAudio = false;
      String _timerText = '00:00:00';
      // Kept in a field so the progress listener stays active while recording.
      StreamSubscription _recorderSubscription;

      @override
      void initState() {
        super.initState();
        initializer();
      }

      void initializer() async {
        pathToAudio = '/sdcard/Download/temp.wav';
        _recordingSession = FlutterSoundRecorder();
        await _recordingSession.openAudioSession(
            focus: AudioFocus.requestFocusAndStopOthers,
            category: SessionCategory.playAndRecord,
            mode: SessionMode.modeDefault,
            device: AudioDevice.speaker);
        await _recordingSession.setSubscriptionDuration(
            Duration(milliseconds: 10));
        await initializeDateFormatting();
        await Permission.microphone.request();
        await Permission.storage.request();
        await Permission.manageExternalStorage.request();
      }

      @override
      Widget build(BuildContext context) {
        return Scaffold(
          backgroundColor: Colors.black87,
          appBar: AppBar(title: Text('Audio Recording and Playing')),
          body: Center(
            child: Column(
              mainAxisAlignment: MainAxisAlignment.start,
              children: <Widget>[
                SizedBox(
                  height: 40,
                ),
                Container(
                  child: Center(
                    child: Text(
                      _timerText,
                      style: TextStyle(fontSize: 70, color: Colors.red),
                    ),
                  ),
                ),
                SizedBox(
                  height: 20,
                ),
                Row(
                  mainAxisAlignment: MainAxisAlignment.center,
                  children: <Widget>[
                    createElevatedButton(
                      icon: Icons.mic,
                      iconColor: Colors.red,
                      onPressFunc: startRecording,
                    ),
                    SizedBox(
                      width: 30,
                    ),
                    createElevatedButton(
                      icon: Icons.stop,
                      iconColor: Colors.red,
                      onPressFunc: stopRecording,
                    ),
                  ],
                ),
                SizedBox(
                  height: 20,
                ),
                ElevatedButton.icon(
                  style: ElevatedButton.styleFrom(
                      elevation: 9.0, primary: Colors.red),
                  onPressed: () {
                    // Flip the flag first, then act on its new value.
                    setState(() {
                      _playAudio = !_playAudio;
                    });
                    if (_playAudio) {
                      playFunc();
                    } else {
                      stopPlayFunc();
                    }
                  },
                  icon: _playAudio ? Icon(Icons.stop) : Icon(Icons.play_arrow),
                  label: _playAudio
                      ? Text(
                          "Stop",
                          style: TextStyle(
                            fontSize: 28,
                          ),
                        )
                      : Text(
                          "Play",
                          style: TextStyle(
                            fontSize: 28,
                          ),
                        ),
                ),
              ],
            ),
          ),
        );
      }

      ElevatedButton createElevatedButton(
          {IconData icon, Color iconColor, VoidCallback onPressFunc}) {
        return ElevatedButton.icon(
          style: ElevatedButton.styleFrom(
            padding: EdgeInsets.all(6.0),
            side: BorderSide(
              color: Colors.red,
              width: 4.0,
            ),
            shape: RoundedRectangleBorder(
              borderRadius: BorderRadius.circular(20),
            ),
            primary: Colors.white,
            elevation: 9.0,
          ),
          onPressed: onPressFunc,
          icon: Icon(
            icon,
            color: iconColor,
            size: 38.0,
          ),
          label: Text(''),
        );
      }

      Future<void> startRecording() async {
        Directory directory = Directory(path.dirname(pathToAudio));
        if (!directory.existsSync()) {
          directory.createSync();
        }
        await _recordingSession.openAudioSession();
        await _recordingSession.startRecorder(
          toFile: pathToAudio,
          codec: Codec.pcm16WAV,
        );
        // Cancel any listener left over from an earlier recording.
        _recorderSubscription?.cancel();
        _recorderSubscription = _recordingSession.onProgress.listen((e) {
          var date = DateTime.fromMillisecondsSinceEpoch(
              e.duration.inMilliseconds,
              isUtc: true);
          var timeText = DateFormat('mm:ss:SS', 'en_GB').format(date);
          setState(() {
            _timerText = timeText.substring(0, 8);
          });
        });
      }

      Future<String> stopRecording() async {
        // Stop the recorder first, then release the audio session.
        final result = await _recordingSession.stopRecorder();
        await _recordingSession.closeAudioSession();
        return result;
      }

      Future<void> playFunc() async {
        await recordingPlayer.open(
          Audio.file(pathToAudio),
          autoStart: true,
          showNotification: true,
        );
      }

      Future<void> stopPlayFunc() async {
        await recordingPlayer.stop();
      }
    }

AndroidManifest.xml

Here is the final code for the AndroidManifest.xml to configure permissions in Android phones:

    <manifest xmlns:android="http://schemas.android.com/apk/res/android"
        package="my.app.audio_recorder">
        <uses-permission android:name="android.permission.RECORD_AUDIO" />
        <uses-permission android:name="android.permission.READ_EXTERNAL_STORAGE" />
        <uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />
        <application
            android:requestLegacyExternalStorage="true"
            android:label="audio_recorder"
            android:icon="@mipmap/ic_launcher">
            <activity
                android:name=".MainActivity"
                android:launchMode="singleTop"
                android:theme="@style/LaunchTheme"
                android:configChanges="orientation|keyboardHidden|keyboard|screenSize|smallestScreenSize|locale|layoutDirection|fontScale|screenLayout|density|uiMode"
                android:hardwareAccelerated="true"
                android:windowSoftInputMode="adjustResize">

pubspec.yaml

Here is the final code for the pubspec.yaml file containing the project’s dependencies:

    dependencies:
      flutter:
        sdk: flutter
      cupertino_icons: ^1.0.2
      flutter_sound: ^8.1.9
      permission_handler: ^8.1.2
      path: ^1.8.0
      assets_audio_player: ^3.0.3+3
      intl: ^0.17.0